Tutorial 10.6a - Poisson regression and log-linear models

05 Sep 2016

Poisson regression

Poisson regression is a type of generalized linear model (GLM) that models a non-negative integer (count) response against a linear predictor via a specific link function. The linear predictor is typically a linear combination of effects parameters (e.g. $\beta_0 + \beta_1x_1$). The role of the link function is to transform the expected values of the response $y$ (which are on the scale of (0,$\infty$), as are the expected values of the Poisson distribution from which observations are drawn) onto the scale of the linear predictor (which is ($-\infty,\infty$)).


As implied in the name of this group of analyses, a Poisson rather than Gaussian (normal) distribution is used to represent the errors (residuals). Like count data (number of individuals, species etc), the Poisson distribution encapsulates non-negative integers and is bounded by zero at one end. Consequently, the degree of variability is directly related to the expected value (equivalent to the mean of a Gaussian distribution). Put differently, the variance is a function of the mean. Repeated observations from a Poisson distribution located close to zero will yield a much smaller spread of observations than will samples drawn from a Poisson distribution located a greater distance from zero. In the Poisson distribution, the variance has a 1:1 relationship with the mean.
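The 1:1 relationship between mean and variance can be checked by simulation. The following is a minimal sketch, not part of the original analysis: the means in `lambdas` and the sample size are arbitrary illustrative choices.

```r
# Draw large Poisson samples at several (illustrative) means and
# compare the sample mean with the sample variance.
set.seed(1)
lambdas <- c(0.5, 2, 10, 50)
sims <- lapply(lambdas, function(l) rpois(100000, lambda = l))

pois.summary <- data.frame(
  lambda   = lambdas,
  mean     = sapply(sims, mean),
  variance = sapply(sims, var)
)
# A ratio close to 1 indicates variance = mean (dispersion of 1)
pois.summary$ratio <- pois.summary$variance / pois.summary$mean
print(pois.summary)
```

The spread of the counts grows with the mean, yet the variance to mean ratio stays close to 1 in every row.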

The canonical link function for the Poisson distribution is a log-link function.

Whilst the expectation that the mean=variance ($\mu=\sigma^2$) is broadly compatible with actual count data (that variance increases at the same rate as the mean), under certain circumstances, this might not be the case. For example, when there are other unmeasured influences on the response variable, the distribution of counts might be somewhat clumped, which can result in higher than expected variability (that is $\sigma^2\gt\mu$). The variance increases more rapidly than does the mean. This is referred to as overdispersion. The degree to which the variability is greater than the mean (and thus the expected degree of variability) is called dispersion. Effectively, the Poisson distribution has a dispersion parameter (or scaling factor) of 1.

It turns out that overdispersion is very common for count data. When it is ignored, variability and standard errors are typically underestimated, and p-values are therefore deflated. There are a number of ways of overcoming this limitation, the effectiveness of which depends on the causes of overdispersion.

- Quasi-Poisson models - these introduce the dispersion parameter ($\phi$) into the model.

This approach does not utilize an underlying error distribution to calculate the maximum likelihood (there is no quasi-Poisson distribution).

Instead, if the Newton-Raphson iteratively reweighted least squares algorithm is applied using a direct specification of the relationship between mean and variance ($var(y)=\phi\mu$), the estimates of the regression coefficients are identical to the maximum likelihood estimates from the Poisson model. This is analogous to fitting ordinary least squares on symmetrical, yet not normally distributed data - the parameter estimates are the same, however they won't necessarily be as efficient.

The standard errors of the coefficients are then calculated by multiplying the Poisson model coefficient standard errors by $\sqrt{\phi}$.

Unfortunately, because the quasi-Poisson model is not estimated via maximum likelihood, properties such as AIC and log-likelihood cannot be derived. Consequently, quasi-Poisson and Poisson model fits cannot be compared via either AIC or likelihood ratio tests (nor can they be compared via deviance, as quasi-Poisson and Poisson models have the same residual deviance). That said, a quasi-likelihood can be obtained by dividing the log-likelihood from the Poisson model by the dispersion (scale) factor.

- Negative binomial model - technically, the negative binomial distribution is a probability distribution for the number of successes before a specified number of failures.

However, the negative binomial can also be defined (parameterized) in terms of a mean ($\mu$) and scale factor ($\omega$),

$$p(y_i)=\frac{\Gamma(y_i+\omega)}{\Gamma(\omega)y_i!}\times\frac{\mu_i^{y_i}\omega^\omega}{(\mu_i+\omega)^{y_i+\omega}}$$

where the expected values of $y_i$ (the means) are $\mu_i$ and the variance is $var(y_i)=\mu_i+\frac{\mu_i^2}{\omega}$.

In this way, the negative binomial is a two-stage hierarchical process in which the response is modeled against a Poisson distribution whose expected count is in turn modeled by a Gamma distribution with a mean of $\mu$ and constant scale parameter ($\omega$).

Strictly, the negative binomial is not an exponential family distribution (unless $\omega$ is fixed as a constant), and thus negative binomial models cannot be fit via the usual GLM iterative reweighting algorithm. Instead estimates of the regression parameters along with the scale factor ($\omega$) are obtained via maximum likelihood.

The negative binomial model is useful for accommodating overdispersal when it is likely caused by clumping (due to the influence of other unmeasured factors) within the response.

- Zero-inflated Poisson model - overdispersion can also be caused by the presence of a greater number of zeros than would otherwise be expected for a Poisson distribution.

There are potentially two sources of zero counts - genuine zeros and false zeros.

Firstly, there may genuinely be no individuals present. This would be the number expected by a Poisson distribution.

Secondly, individuals may have been present yet not detected, or their presence may not even have been possible.

These are false zeros and lead to zero-inflated data (data with more zeros than expected).

For example, the number of joeys accompanying an adult koala could be zero because the koala has no offspring (true zero) or because the koala is male or infertile (both of which would be examples of false zeros). Similarly, zero counts of the number of individuals in a transect are due either to the absence of individuals or the inability of the observer to detect them. Whilst in the former example, the latent variable representing false zeros (sex or infertility) can be identified and those individuals removed prior to analysis, this is not the case for the latter example. That is, we cannot easily partition which counts of zero are due to detection issues and which are a true indication of the natural state.

Consistent with these two sources of zeros, zero-inflated models combine a binary logistic regression model (that models count membership according to a latent variable representing observations that can only be zeros - not detectable or male koalas) with a Poisson regression (that models count membership according to a latent variable representing observations whose values could be 0 or any positive integer - fertile female koalas).
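To make the quasi-Poisson description above more concrete, here is a minimal sketch on artificial overdispersed counts. The data, the object names (`fit.p`, `fit.qp`) and the choice of a negative binomial generator are my own assumptions for illustration only. It shows that the coefficients are identical between the two fits and that the quasi-Poisson standard errors are the Poisson standard errors multiplied by $\sqrt{\phi}$.

```r
# Artificial overdispersed counts (illustrative assumption, not the tutorial data)
set.seed(42)
n  <- 200
x  <- runif(n, 1, 20)
mu <- exp(0.6 + 0.1 * x)
y  <- rnbinom(n, mu = mu, size = 2)

fit.p  <- glm(y ~ x, family = poisson(link = "log"))
fit.qp <- glm(y ~ x, family = quasipoisson(link = "log"))

# Estimated dispersion parameter (Pearson chi-square / residual df)
phi <- summary(fit.qp)$dispersion

se.p  <- summary(fit.p)$coefficients[, "Std. Error"]
se.qp <- summary(fit.qp)$coefficients[, "Std. Error"]

# Same coefficients, standard errors scaled by sqrt(phi)
print(rbind(poisson = coef(fit.p), quasi = coef(fit.qp)))
print(cbind(poisson = se.p, quasi = se.qp, scaled = se.p * sqrt(phi)))

# No likelihood, hence no AIC for the quasi-Poisson fit
print(AIC(fit.qp))
```

The `quasi` and `scaled` columns should agree, and `AIC(fit.qp)` returns `NA`, which is why AIC-based comparisons are unavailable for this family.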

Poisson regression

Scenario and Data

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the details is optional and the section can be safely skipped.

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of log $y$ when $x$ equals 0 (intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto the (0,$\infty$) scale by using the inverse of the log link (the exponential function, $e^{linear~predictor}$)

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as the means when drawing random numbers from a Poisson distribution. This step adds random noise to the expected $y$ values and returns only 0's and positive integers.
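As a worked check of the last two steps, using the intercept (0.6) and slope (0.1) of this scenario, the expected counts at the two ends of the $x$ range are approximately:

$$\lambda_i = e^{\beta_0+\beta_1x_i} = e^{0.6+0.1x_i}, \hspace{1cm} y_i\sim Poisson(\lambda_i)$$

$$x=1: \lambda = e^{0.7}\approx 2.01, \hspace{1cm} x=20: \lambda = e^{2.6}\approx 13.46$$

So the simulated counts should rise from roughly 2 to roughly 13 across the range of $x$, with more scatter at the upper end.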

    set.seed(8)
    #The number of samples
    n.x <- 20
    #Create x values that are uniformly distributed throughout the range of 1 to 20
    x <- sort(runif(n = n.x, min = 1, max = 20))
    mm <- model.matrix(~x)
    intercept <- 0.6
    slope <- 0.1
    #The linear predictor
    linpred <- mm %*% c(intercept, slope)
    #Predicted y values
    lambda <- exp(linpred)
    #Add some noise and make Poisson counts
    y <- rpois(n = n.x, lambda = lambda)
    dat <- data.frame(y, x)
    data.pois <- dat
    write.table(data.pois, file='../downloads/data/data.pois.csv', quote=FALSE, row.names=FALSE, sep=',')

With this sort of data, we are primarily interested in investigating whether there is a relationship between the count response variable and the linear predictor (linear combination of one or more continuous or categorical predictors).

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of a count response - a Poisson is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- The relationship between the link-transformed expected response (here log $\mu$) and the linear predictor (right hand side of the regression formula) should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

- The dispersion factor is close to 1

There are five main potential models we could consider fitting to these data:

- Ordinary least squares regression (general linear model) - assumes normality of residuals

- Poisson regression - assumes mean=variance (dispersion=1)

- Quasi-poisson regression - a general solution to overdispersion. Assumes variance is a function of mean, dispersion estimated, however likelihood based statistics unavailable

- Negative binomial regression - a specific solution to overdispersion caused by clumping (due to an unmeasured latent variable). Scaling factor ($\omega$) is estimated along with the regression parameters.

- Zero-inflation model - a specific solution to overdispersion caused by excessive zeros (due to an unmeasured latent variable). Mixture of binomial and Poisson models.
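The negative binomial option in the list above rests on the two-stage (Gamma then Poisson) process described earlier. The sketch below is my own illustration under assumed values ($\mu=6$, $\omega=2$): it simulates that process directly and compares the resulting variance with $\mu+\mu^2/\omega$, and with draws from `rnbinom()` using the same mean and `size`.

```r
set.seed(123)
n     <- 200000
mu    <- 6   # illustrative mean (assumption)
omega <- 2   # illustrative scale factor (assumption)

# Stage 1: Gamma-distributed expected counts with mean mu (shape omega)
lambda <- rgamma(n, shape = omega, rate = omega / mu)
# Stage 2: Poisson counts drawn around those expected counts
y.mix <- rpois(n, lambda)

# Direct negative binomial draws with the same parameterization
y.nb <- rnbinom(n, mu = mu, size = omega)

var.theory <- mu + mu^2 / omega
print(c(mean.mix = mean(y.mix), var.mix = var(y.mix),
        var.nb = var(y.nb), var.theory = var.theory))
```

Both simulated variances should sit close to the theoretical value and well above the mean, which is the clumping-induced overdispersion the negative binomial is designed to absorb.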

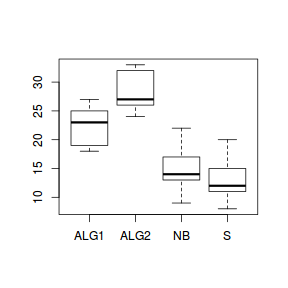

Confirm non-normality and explore clumping

When counts are all very large (not close to 0) and their ranges do not span orders of magnitude, they take on very Gaussian properties (symmetrical distribution and variance independent of the mean). Given that models based on the Gaussian distribution are more optimized and recognized than Generalized Linear Models, it can be prudent to adopt Gaussian models for such data. Hence it is a good idea to first explore whether a Poisson model is likely to be more appropriate than a standard Gaussian model.

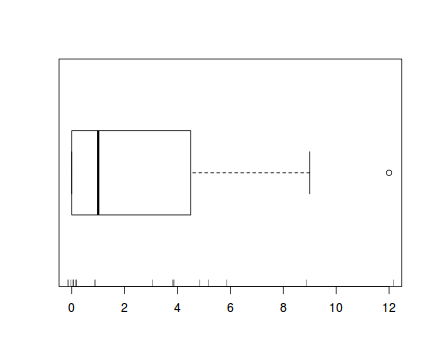

The potential for overdispersion can be explored by adding a rug to a boxplot. The rug is simply tick marks on the inside of an axis at the position corresponding to an observation. As multiple identical values result in tick marks drawn over one another, it is typically a good idea to apply a slight amount of jitter (random displacement) to the values used by the rug.

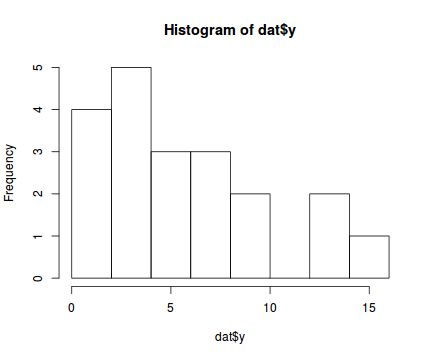

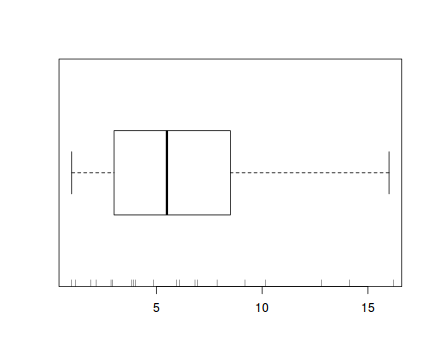

hist(dat$y)

    boxplot(dat$y, horizontal=TRUE)
    rug(jitter(dat$y), side=1)

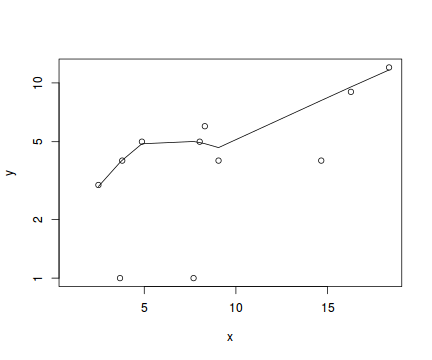

Confirm linearity

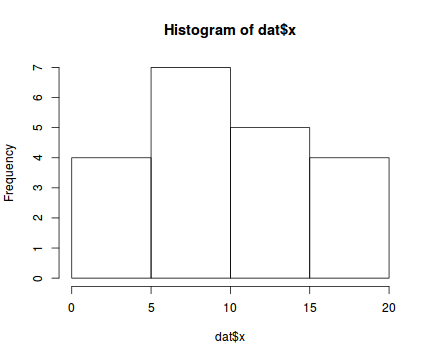

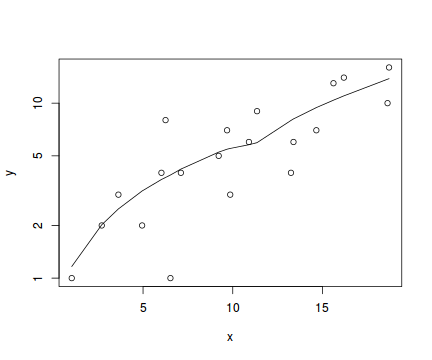

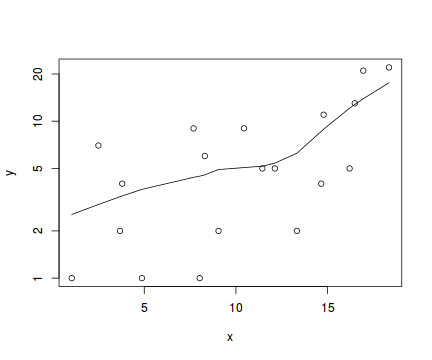

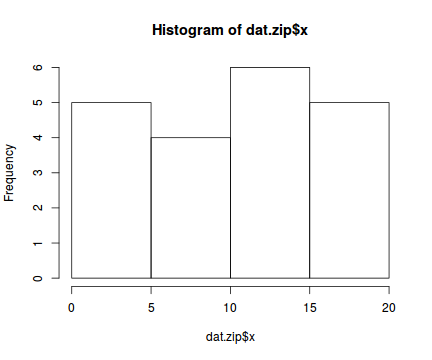

Let's now explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$)

    hist(dat$x)

    #now for the scatterplot
    plot(y~x, dat, log="y")
    with(dat, lines(lowess(y~x)))

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a straight line and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables

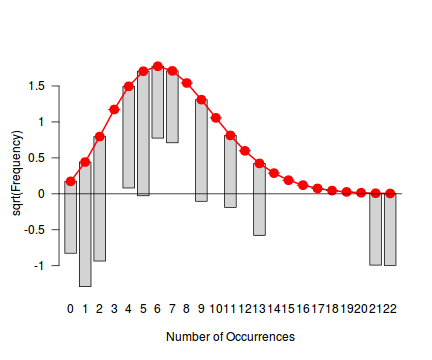

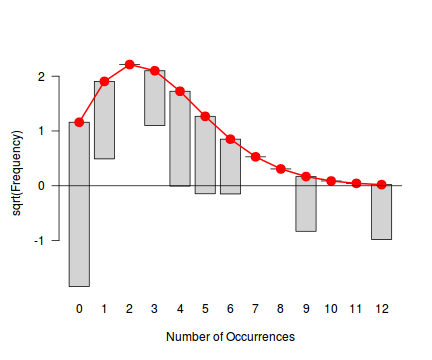

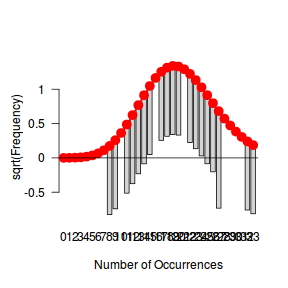

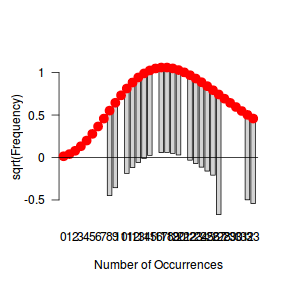

Explore the distributional properties of the response

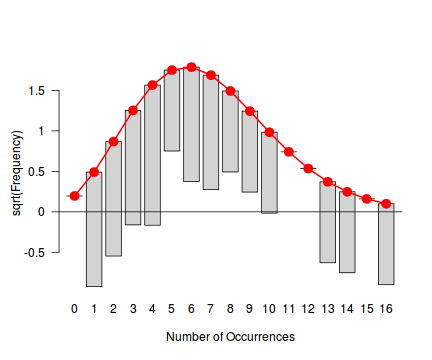

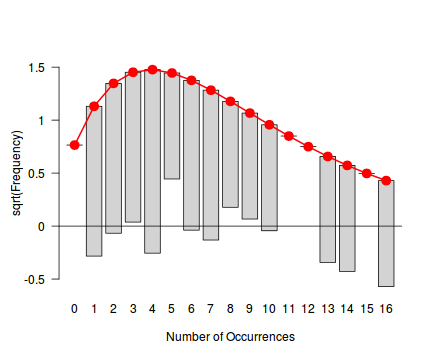

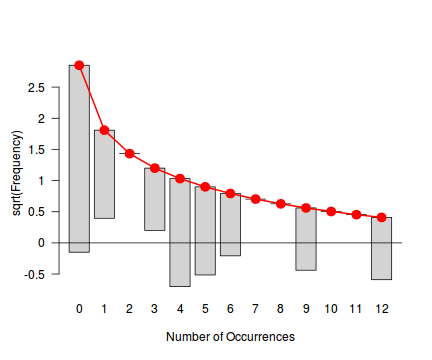

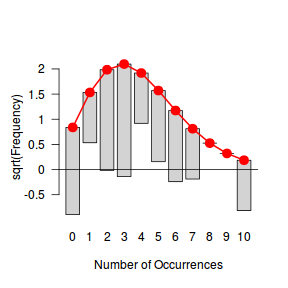

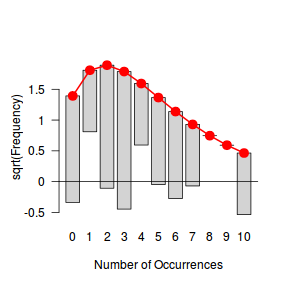

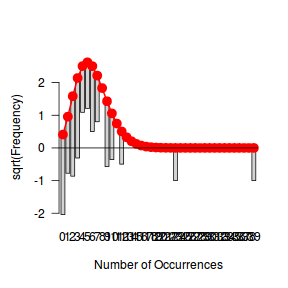

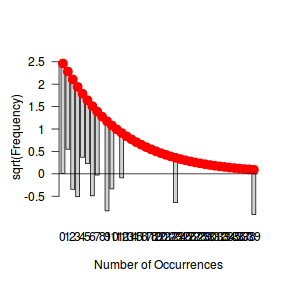

Tukey suggested a variation on a histogram for non-Gaussian distributions. His hanging rootogram features a frequency histogram (on a square-root scale) hanging from an equivalent reference distribution (such as a Poisson distribution). Apparently, the hanging nature makes it easier to detect deviations of the observed counts from the reference distribution (since the distribution will be curvilinear) and the square-root scale allows greater emphasis of smaller frequencies (since a histogram will normally be dominated by large frequencies).

The goodfit() and rootogram() functions in the vcd (Visualizing Categorical Data) package provide convenient ways of quickly exploring the broad suitability of Poisson, Binomial and Negative Binomial distributions to a response. Note however, these are of limited use on small (n<30) data sets and in the current case are only provided for illustrative purposes.

    library(vcd)
    fit <- goodfit(dat$y, type='poisson')
    summary(fit)

    	 Goodness-of-fit test for poisson distribution

                          X^2 df    P(> X^2)
    Likelihood Ratio 25.33272 11 0.008146985

    rootogram(fit)

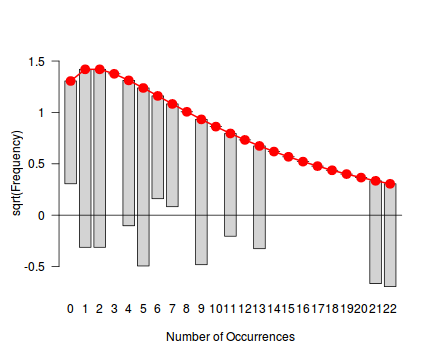

    fit <- goodfit(dat$y, type='nbinom')
    summary(fit)

    	 Goodness-of-fit test for nbinomial distribution

                          X^2 df  P(> X^2)
    Likelihood Ratio 9.978856 10 0.4423503

    rootogram(fit)

Conclusions - the goodness of fit and rootogram explorations suggest that a Negative Binomial is a good fit whereas the Poisson is less so (deviations of the observed counts from the Poisson are greater than they are from the Negative Binomial). However, this may well be as much an artifact of the very small sample size as it is a genuine reflection of the most appropriate distribution against which to fit a model.

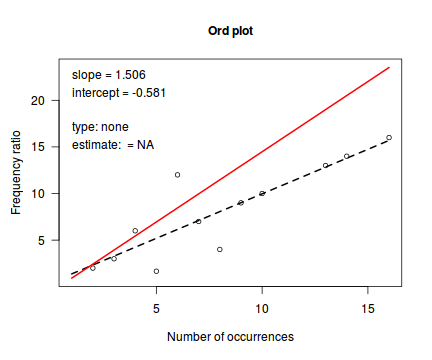

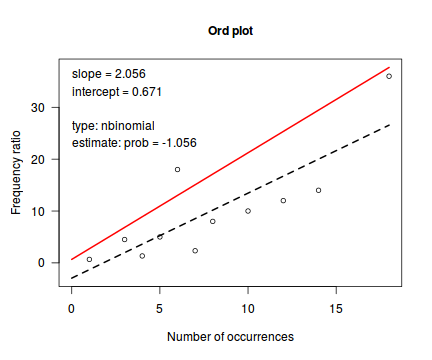

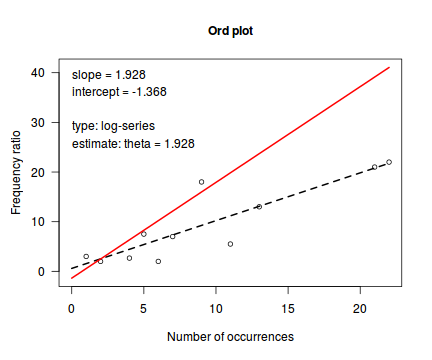

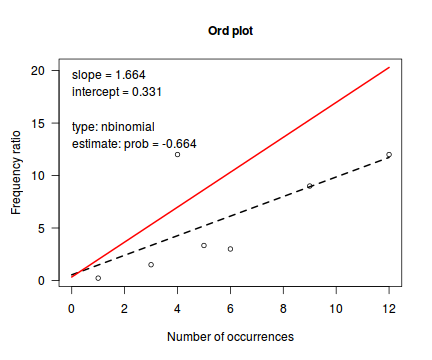

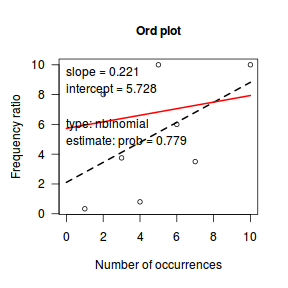

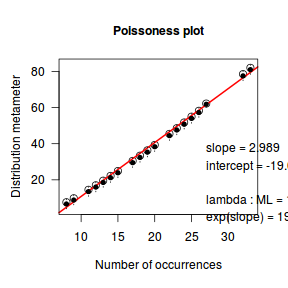

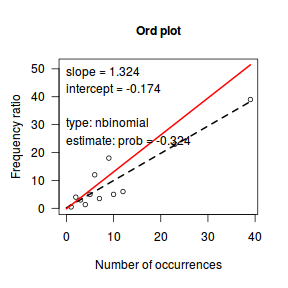

Ord demonstrated that the relationship between the ratio of predicted counts to predicted counts of the previous bin ($k\frac{p_k}{p_{k-1}}$) and the count bins ($k$) followed a linear relationship for Poisson, Binomial, Negative Binomial and logarithmic series distributions (yet with different characteristic intercepts and slopes) and thus proposed a simple plot (Ord plot) for diagnosing appropriate discrete distributions. Ord indicated that parameters must be within a certain tolerance (nominally 0.1) of the defining coefficient to yield a conclusive diagnostic. The following table indicates the interpretation of Intercept and Slope parameters. Ord also suggested weighting the relationship by the square-root of the count frequencies so as to dampen fluctuations due to sampling variance. The table is also presented in order of testing - that is, the routine first tests whether a Poisson is appropriate, if not, it explores a Binomial and so on.

| Intercept (a) | Slope (b) | Tolerance | Suggested Distribution (and parameters) | Estimated Parameter |

|---|---|---|---|---|

| + | 0 | abs(b)<tol | Poisson(λ) | λ=a |

| + | - | b<(-1*tol) | Binomial(n,p) | p = b/(b-1) |

| + | + | a<(-1*tol) | Negative Binomial(n,p) | p = 1-b |

| - | + | abs(a+b)< 4*tol | Logarithmic(θ) | θ = b or θ = -a |
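The first and third rows of the table can be verified from the theoretical probabilities. This is a small sketch of my own, with illustrative parameter values ($\lambda=4$ for the Poisson, `size=3` and `prob=0.4` for the Negative Binomial) that are not taken from the data.

```r
k <- 1:10

# Poisson: k * p_k / p_(k-1) is constant (slope 0, intercept lambda)
lambda <- 4
ord.pois <- k * dpois(k, lambda) / dpois(k - 1, lambda)
print(ord.pois)

# Negative Binomial: the same quantity is linear in k with slope 1 - p
size <- 3
prob <- 0.4
ord.nb <- k * dnbinom(k, size = size, prob = prob) /
  dnbinom(k - 1, size = size, prob = prob)
ord.coef <- coef(lm(ord.nb ~ k))
print(ord.coef)
```

For the Poisson every value equals $\lambda$, while for the Negative Binomial the fitted slope equals $1-p$ (so $p = 1-b$, as in the table) and the intercept is positive.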

    library(vcd)
    Ord_plot(dat$y, tol=0.2)

Conclusions- the small sample size makes this difficult to assess.

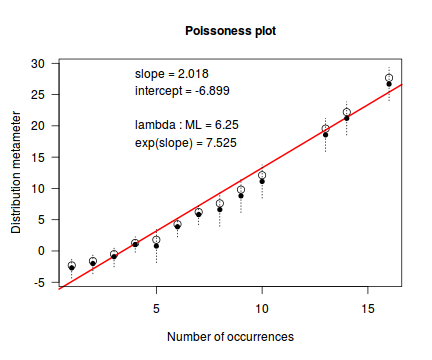

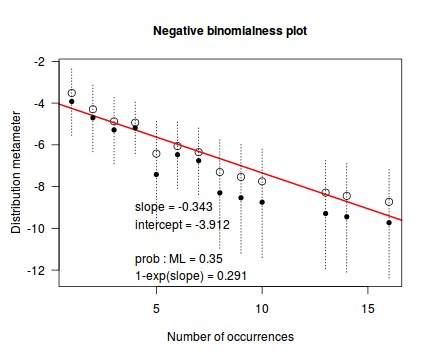

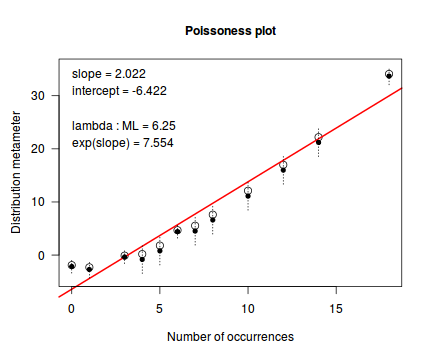

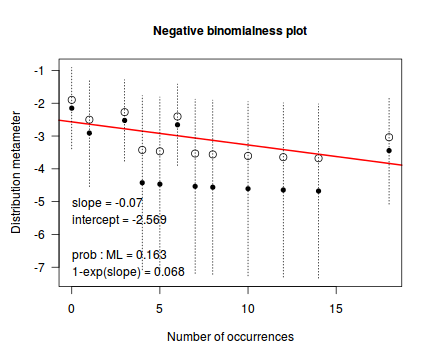

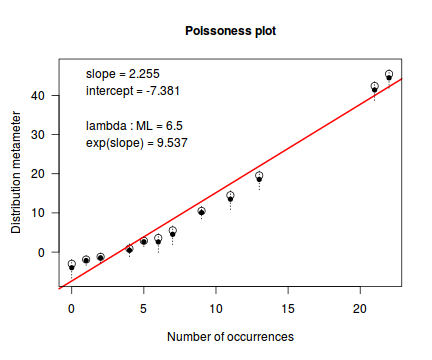

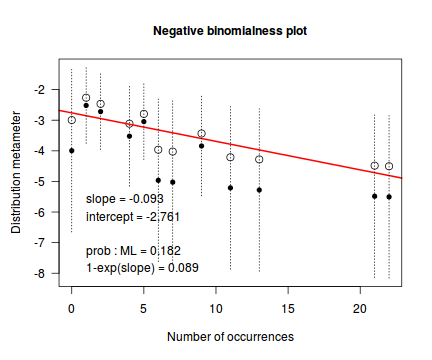

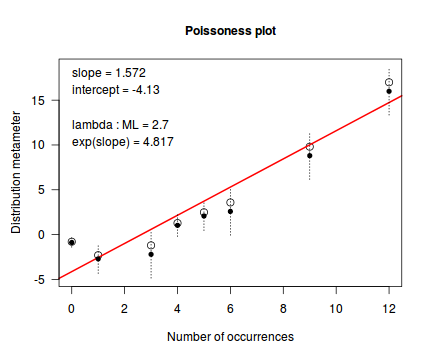

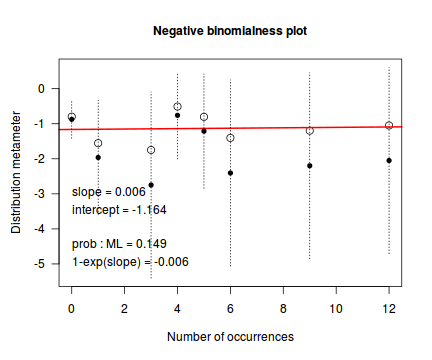

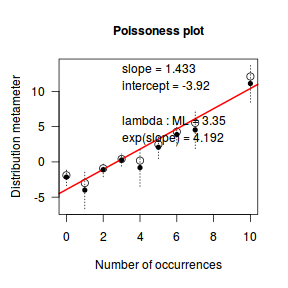

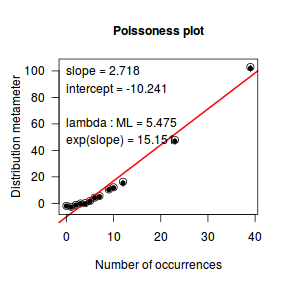

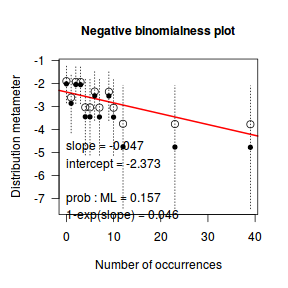

An alternative to the Ord plot is the robust distribution plot. Similar to a Q-Q normal plot, the robust distribution plot compares data to a reference distribution. Deviations from a straight line are indicative of a poor match between the data and the reference distribution. Open circles on the plot represent the observed count distribution metameter. Dotted lines and solid circles represent the 95% confidence intervals and centers thereof. Also provided on the plot are the coefficients (intercept and slope) of the relationship as well as the maximum likelihood estimates of the distribution parameters (Poisson: $\lambda$; Negative Binomial: $p$).

    library(vcd)
    distplot(dat$y, type='poisson')

distplot(dat$y, type="nbinom")

Conclusions - the data is a closer match to the Poisson distribution than the Negative Binomial distribution.

Explore zero inflation

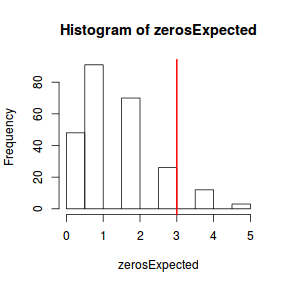

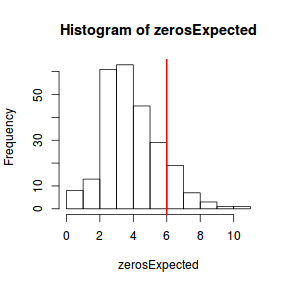

Although we have already established that there are few zeros in the data (and thus zero inflation is unlikely to be an issue), we can also explore this by comparing the number of zeros in the data to the number of zeros that would be expected from a Poisson distribution with a mean equal to the mean count of the data.

    #proportion of 0's in the data
    dat.tab <- table(dat$y==0)
    dat.tab/sum(dat.tab)

    FALSE 
        1 

    #proportion of 0's expected from a Poisson distribution
    mu <- mean(dat$y)
    cnts <- rpois(1000, mu)
    dat.tab <- table(cnts == 0)
    dat.tab/sum(dat.tab)

    FALSE  TRUE 
    0.997 0.003 
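The simulated proportion above can be cross-checked against the exact Poisson probability of a zero, $e^{-\mu}$. The sketch below types in the observed counts as printed by `dat$y` in this tutorial so that it runs on its own; the object names are my own.

```r
# Observed counts, copied from the printed dat$y
y <- c(1, 2, 3, 2, 4, 8, 1, 4, 5, 7, 3, 6, 9, 4, 6, 7, 13, 14, 10, 16)

mu <- mean(y)
# Exact probability of a zero under a Poisson with this mean
p0.expected <- dpois(0, lambda = mu)
# Observed proportion of zeros
p0.observed <- mean(y == 0)

print(c(mean = mu, observed = p0.observed, expected = p0.expected,
        expected.n = length(y) * p0.expected))
```

With a mean count of 6.25, the expected proportion of zeros is about 0.002, so observing no zeros in 20 observations is entirely unremarkable.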

Model fitting or statistical analysis

We perform the Poisson regression using the glm() function. The most important (i.e. commonly used) parameters/arguments for Poisson regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function). For example: family="poisson" or equivalently family=poisson(link="log")

- data: the data frame containing the data

I will now fit the Poisson regression: $$y_i\sim Poisson(\mu_i), \hspace{1cm}\log(\mu_i)=\beta_0+\beta_1x_i$$

dat.glm <- glm(y~x, data=dat, family="poisson")

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data. There are a number of extractor functions (functions that extract or derive specific information from a model) available including:

| Extractor | Description |

|---|---|

| residuals() | Extracts the residuals from the model |

| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |

| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |

| coef() | Extracts the model coefficients |

| deviance() | Extracts the deviance from the model |

| AIC() | Extracts the Akaike's Information Criterion from the model |

| AICc() | Extracts the Akaike's Information Criterion corrected for small sample sizes from the model (MuMIn package) |

| extractAIC() | Extracts the generalized Akaike's Information Criterion from the model |

| summary() | Summarizes the important output and characteristics of the model |

| anova() | Computes an analysis of deviance or log-likelihood ratio test (LRT) from the model |

| plot() | Generates a series of diagnostic plots from the model |

| effects() | Generates partial effects from fitted model (effects package) |
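Because the scale on which values are returned is easy to confuse, here is a short sketch comparing `fitted()` with `predict()` on the link and response scales. It regenerates artificial data with the same recipe as the scenario above (an assumption on my part, so that the block runs on its own; the exact counts may differ from those shown elsewhere).

```r
# Regenerate artificial data (same recipe as the scenario above)
set.seed(8)
x <- sort(runif(20, min = 1, max = 20))
y <- rpois(20, lambda = exp(0.6 + 0.1 * x))
dat <- data.frame(y, x)
dat.glm <- glm(y ~ x, data = dat, family = poisson(link = "log"))

# Link (log) scale: the linear predictor
eta <- predict(dat.glm, type = "link")
# Response scale: expected counts
mu.hat <- predict(dat.glm, type = "response")

# fitted() is on the response scale, and equals exp(linear predictor)
print(all.equal(unname(fitted(dat.glm)), unname(mu.hat)))
print(all.equal(unname(mu.hat), unname(exp(eta))))
```

Both comparisons should return `TRUE`, confirming that back-transforming the link-scale predictions with `exp()` recovers the fitted counts.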

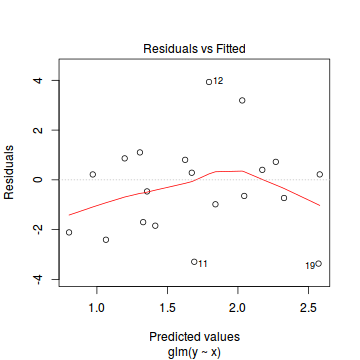

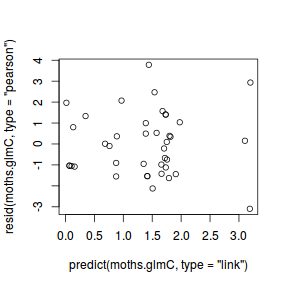

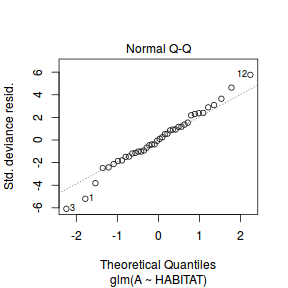

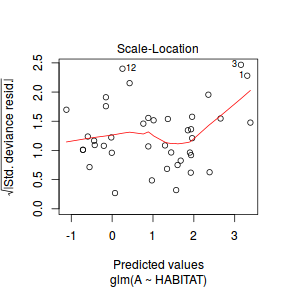

Let's explore the diagnostics - particularly the residuals

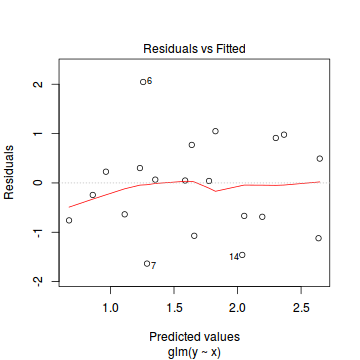

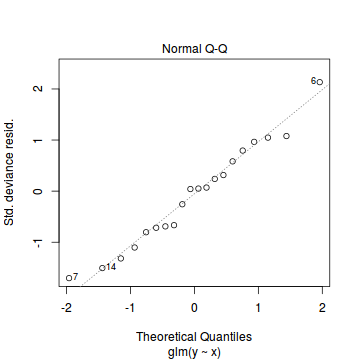

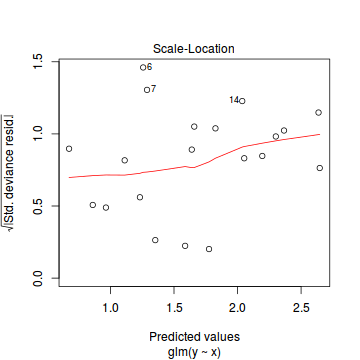

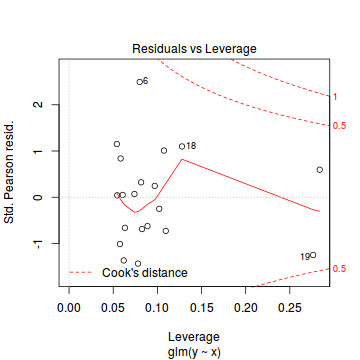

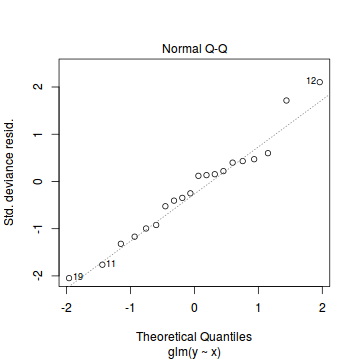

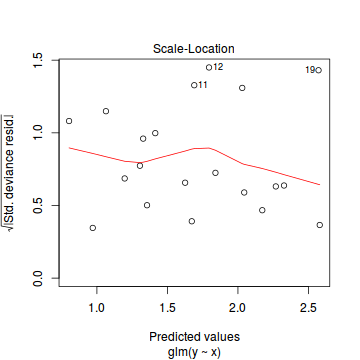

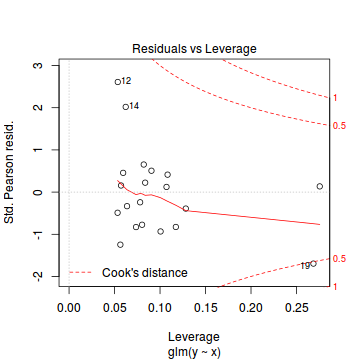

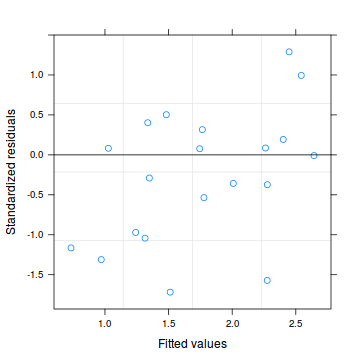

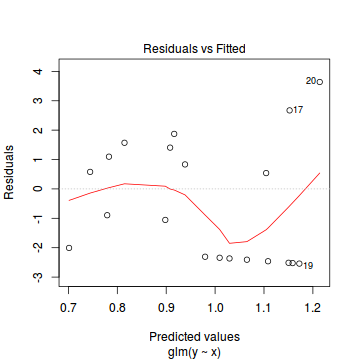

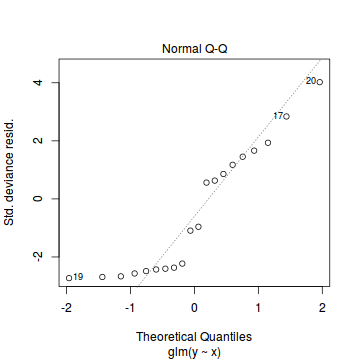

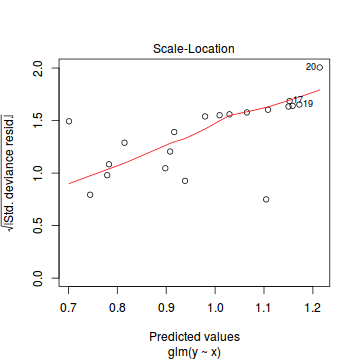

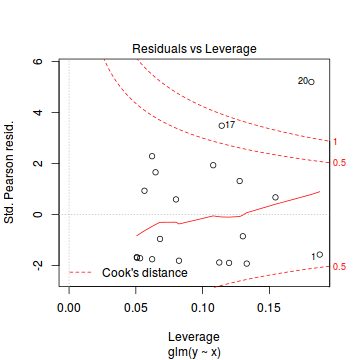

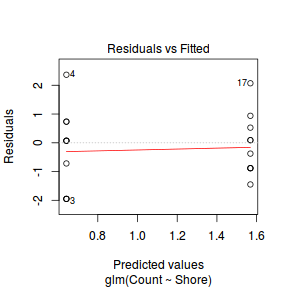

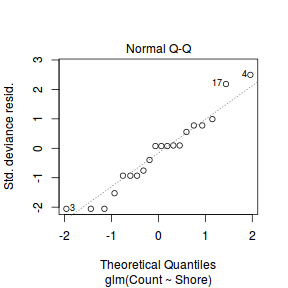

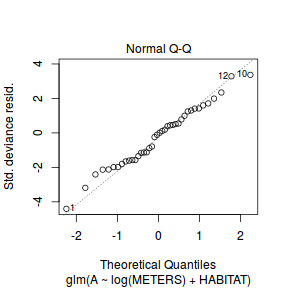

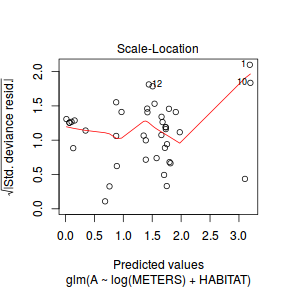

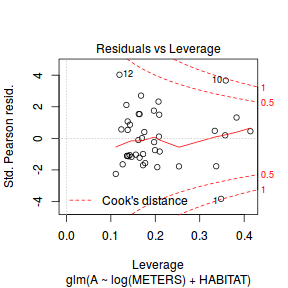

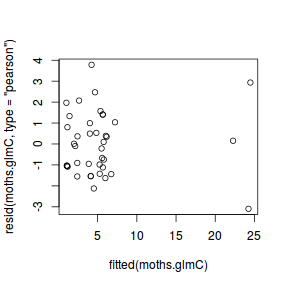

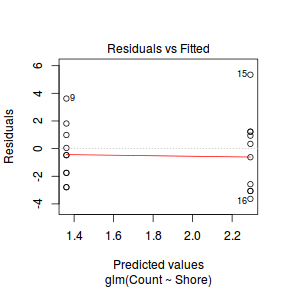

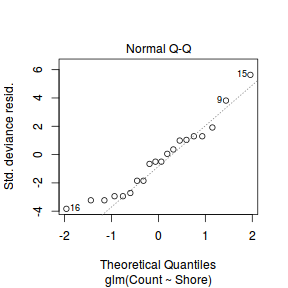

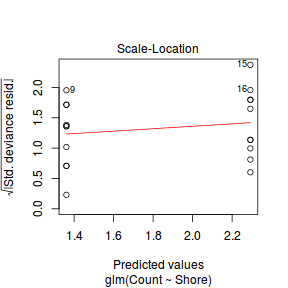

plot(dat.glm)

Unfortunately, unlike with linear models (Gaussian family), the expected distribution of the data (and thus the residuals) varies over the range of fitted values in numerous (often competing) ways, which makes diagnosing misspecified generalized linear models (and attributing causes thereof) from standard residual plots very difficult. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, overdispersion, zero-inflation).

An alternative approach is to use simulated data from the fitted model to calculate an empirical cumulative distribution function, from which residuals are generated as the values corresponding to the observed data along that function. The (rather Bayesian) rationale is that if the model is correctly specified, then the observed data can be considered as a random draw from the fitted model. If this is the case, then residuals calculated from empirical cumulative distribution functions based on data simulated from the fitted model should be totally uniform (flat) across the range of the linear predictor (fitted values) regardless of the model (Binomial, Poisson, linear, quadratic, hierarchical or otherwise). This uniformity can be explored by examining qq-plots (in which the trend should match a straight line) and plots of residuals against the fitted values and each individual predictor (in which the noise should be uniform across the range of x-values).

To illustrate this, let's generate 10 simulated data sets from our fitted model. This will generate a matrix with 10 columns and as many rows as there were in the original data. Think of it as 10 attempts to simulate the original data from the model.

    dat.sim <- simulate(dat.glm, nsim=10)
    dat.sim

       sim_1 sim_2 sim_3 sim_4 sim_5 sim_6 sim_7 sim_8 sim_9 sim_10
    1      1     2     0     1     2     1     0     2     3      0
    2      4     3     4     1     3     2     1     4     2      2
    3      2     3     2     1     3     3     4     7     1      1
    4      0     1     4     4     1     2     7     4     3      3
    5      2    11     1     0     4     6     1     6     4      7
    6      6     6     3     2     2     5     6     5     3      3
    7      5     1     7     4     1     4     2     5     3      5
    8      2     0     3     6     3     4     6     1     6      4
    9      3     8     5     4     3     5     7     4     6      3
    10     6     8     2     3     9     6     2     9     6      7
    11     4     7     8     3     5     6     7     4     5      3
    12     8     3     9     9     2     6    11    14     7      4
    13     5     4     6     5     8     8     7     7     8      6
    14     4     6     9    13     6     8     6    10    10      5
    15     6     3     4     8    13     8     8     7     9      5
    16    14    11     7    10     6    14     8     8    10     12
    17     7     8    12     7    10    11     6     6    11      6
    18    12     6    10     8    11    14    13    10    13     14
    19    14    16    14    15    12    13     9    11    13     15
    20    11    23     9     6    14    15    21     9    11      9

    dat.sim <- simulate(dat.glm, nsim=250)
    dat$y

     [1]  1  2  3  2  4  8  1  4  5  7  3  6  9  4  6  7 13 14 10 16

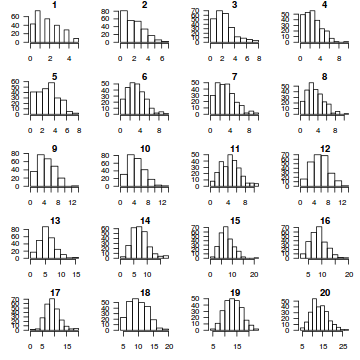

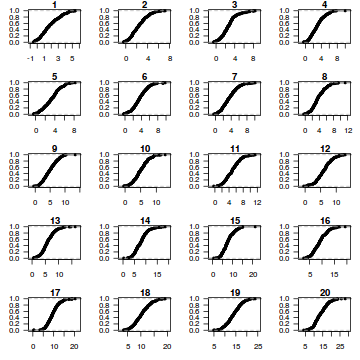

    par(mfrow=c(5,4), mar=c(3,3,1,1))
    resid <- NULL
    for (i in 1:nrow(dat.sim)) {
      e <- data.matrix(dat.sim[i,])
      hist(e, main=i, las=1)
      resid[i] <- dat$y[i] - mean(e)
    }

    resid

     [1] -0.860 -0.432  0.252 -1.116  0.632  4.292 -2.656  0.168 -0.008  1.912 -2.032 -0.076  2.968
    [14] -3.880 -1.804 -2.184  3.368  3.548 -3.992  2.060

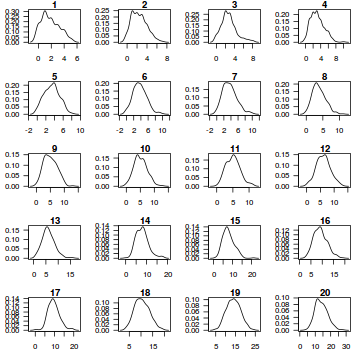

    resid <- NULL
    for (i in 1:nrow(dat.sim)) {
      e <- data.matrix(dat.sim[i,])
      d <- density(e)
      plot(d, main=i, las=1)
      #location of the density peak (mode) of the simulated values
      e <- d$x[d$y==max(d$y)]
      resid[i] <- (dat$y[i]) - e
    }

    resid

     [1] -0.01423095  0.91310617  0.93170248 -0.95423394  0.11994091  4.84640620 -1.72654712  0.98374257
     [9]  1.48647220  3.00604679 -2.19419319 -0.65422933  3.54516521 -4.16700100 -0.96024660 -2.02230842
    [17]  3.85646518  4.49531160 -4.09508226  4.42694204

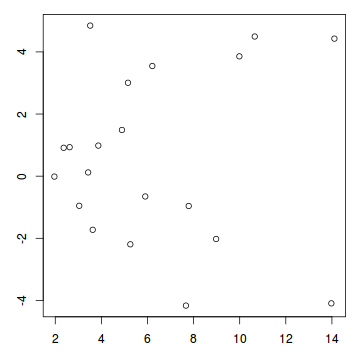

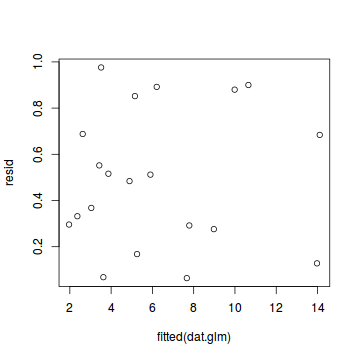

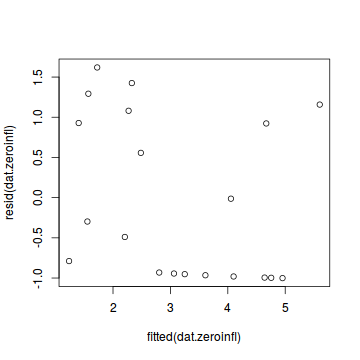

    par(mfrow=c(1,1))
    plot(resid~fitted(dat.glm))

Now for each row of these simulated data, we calculate the empirical cumulative density function and use this function to predict new y-values (=residuals) corresponding to each observed y-value. Actually, 10 simulated samples is totally inadequate, we should use at least 250. I initially used 10, just so we could explore the output. We will re-simulate 250 times. Note also, for integer responses (including binary), uniform random noise is added to both the simulated and observed data so that we can sensibly explore zero inflation. The resulting residuals will be on a scale from 0 to 1 and therefore the residual plot should be centered around a y-value of 0.5.

    dat.sim <- simulate(dat.glm, nsim=250)
    par(mfrow=c(5,4), mar=c(3,3,1,1))
    resid <- NULL
    for (i in 1:nrow(dat.sim)) {
      #ECDF of the jittered simulated values for observation i
      e <- ecdf(data.matrix(dat.sim[i,] + runif(250, -0.5, 0.5)))
      plot(e, main=i, las=1)
      #evaluate the ECDF at the (jittered) observed value - a single value per observation
      resid[i] <- e(dat$y[i] + runif(1, -0.5, 0.5))
    }

    resid

     [1] 0.296 0.332 0.688 0.368 0.552 0.976 0.068 0.516 0.484 0.852 0.168 0.512 0.892 0.064 0.292 0.276
    [17] 0.880 0.900 0.128 0.684

plot(resid ~ fitted(dat.glm))
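The same calculation can be written more compactly without a loop over plots. The following is a sketch only: it regenerates artificial data with the scenario's recipe so that it runs on its own, and the names `sims` and `sim.resid` are my own. Each observation receives a single jittered draw when it is placed on its empirical cumulative distribution function.

```r
# Regenerate artificial data and refit (same recipe as the scenario above)
set.seed(8)
x <- sort(runif(20, 1, 20))
y <- rpois(20, exp(0.6 + 0.1 * x))
dat <- data.frame(y, x)
dat.glm <- glm(y ~ x, data = dat, family = poisson)

n.sim <- 250
# Rows are observations, columns are simulated data sets
sims <- as.matrix(simulate(dat.glm, nsim = n.sim))

sim.resid <- sapply(seq_len(nrow(dat)), function(i) {
  # ECDF of the jittered simulated counts for observation i
  e <- ecdf(sims[i, ] + runif(n.sim, -0.5, 0.5))
  # Position of the jittered observed count on that ECDF (between 0 and 1)
  e(dat$y[i] + runif(1, -0.5, 0.5))
})
print(round(sim.resid, 3))
```

These values can then be plotted against `fitted(dat.glm)` exactly as above; under a well-specified model they should scatter evenly around 0.5.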

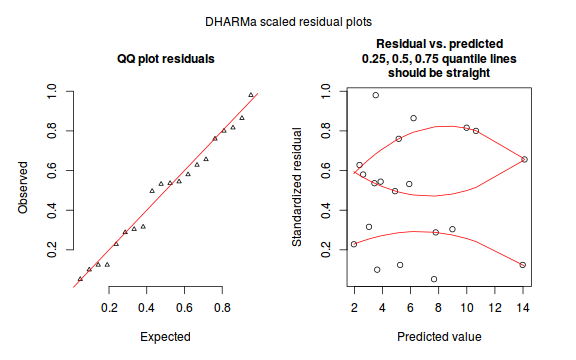

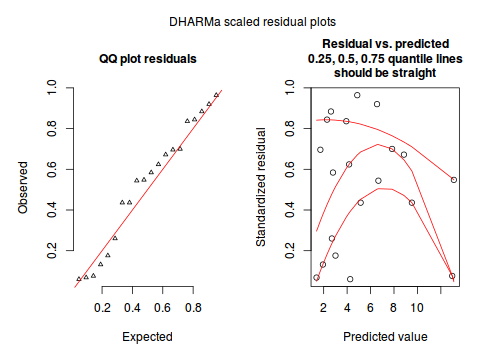

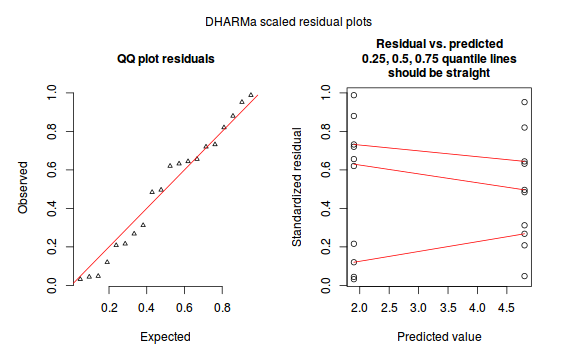

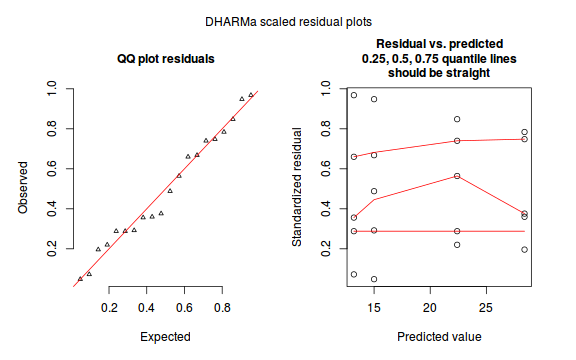

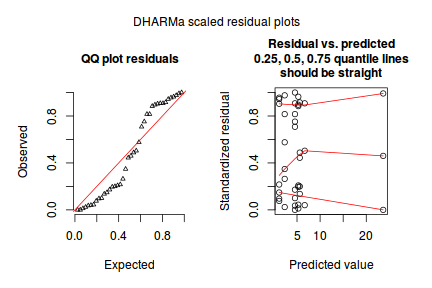

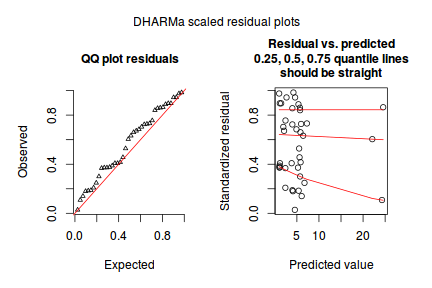

The DHARMa package provides a number of convenient routines to explore standardized residuals simulated from fitted models based on the concepts outlined above. Along with generating simulated residuals, simple qq plots and residual plots are available. By default, the residual plots include quantile regression lines (0.25, 0.5 and 0.75), each of which should be straight and flat.

- in overdispersed models, the qq trend will deviate substantially from a straight line

- non-linear models will display trends in the residuals

library(DHARMa)

    dat.sim <- simulateResiduals(dat.glm)
    dat.sim

    [1] "Class DHARMa with simulated residuals based on 250 simulations with refit = FALSE"
    [1] "see ?DHARMa::simulateResiduals for help"
    [1] "-----------------------------"
     [1] 0.228 0.628 0.580 0.316 0.536 0.980 0.100 0.544 0.496 0.760 0.124 0.532 0.864 0.052 0.288 0.304
    [17] 0.816 0.800 0.124 0.656

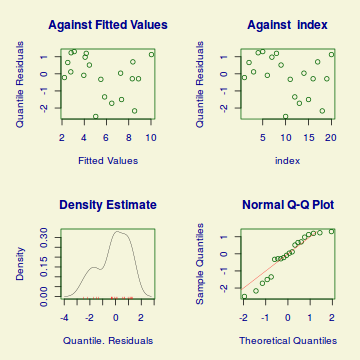

plotSimulatedResiduals(dat.sim)

Conclusions: there are no obvious patterns in the qq-plot or residuals, or at least there are no obvious trends remaining that would be indicative of overdispersion or non-linearity.

Goodness of fit of the model

We can also explore the goodness of the fit of the model via:

- Pearson's $\chi^2$ residuals

- explores whether there are any significant patterns remaining in the residuals

    dat.resid <- sum(resid(dat.glm, type = "pearson")^2)
    1 - pchisq(dat.resid, dat.glm$df.resid)

[1] 0.4896553

- Deviance ($G^2$) - similar to the $\chi^2$ test above, yet uses deviance

1-pchisq(dat.glm$deviance, dat.glm$df.resid)

[1] 0.5076357

- The DHARMa package also has a routine for running a Kolmogorov-Smirnov test to explore overall uniformity of the residuals as a goodness-of-fit test on the scaled residuals.

    testUniformity(dat.sim)

    	One-sample Kolmogorov-Smirnov test

    data:  simulationOutput$scaledResiduals
    D = 0.096, p-value = 0.9928
    alternative hypothesis: two-sided

Conclusions: neither Pearson residuals, Deviance nor the Kolmogorov-Smirnov test of uniformity indicate a lack of fit (p values greater than 0.05).

In this demonstration we fitted the Poisson model. We could also compare (using AIC or deviance) its fit to that of a Gaussian model.

    dat.glmG <- glm(y~x, data=dat, family="gaussian")
    AIC(dat.glm, dat.glmG)

    Error in AIC.glm(dat.glm, dat.glmG): unused argument (dat.glmG)

    #Poisson deviance
    dat.glm$deviance

    [1] 17.2258

    #Gaussian deviance
    dat.glmG$deviance

    [1] 118.1146

Conclusions: from the perspective of both AIC and deviance, the Poisson model would be considered a better fit to the data.

Dispersion

Recall that the Poisson regression model assumes that variance=mean ($var=\mu\phi$ where $\phi=1$) and thus dispersion ($\phi=\frac{var}{\mu}=1$). However, we can also calculate approximately what the dispersion factor would be by dividing the model deviance by the model residual degrees of freedom. If the model fits, the deviance approximately follows a $\chi^2$ distribution, whose expected value is the same as its degrees of freedom, so this ratio should be close to 1. $$\phi=\frac{Deviance}{df}$$

    dat.glm$deviance/dat.glm$df.resid

    [1] 0.9569887

    #Or alternatively, via Pearson's residuals
    Resid <- resid(dat.glm, type = "pearson")
    sum(Resid^2) / (nrow(dat) - length(coef(dat.glm)))

    [1] 0.9716981

Conclusion: the estimated dispersion parameter is very close to 1 thereby confirming that overdispersion is unlikely to be an issue.

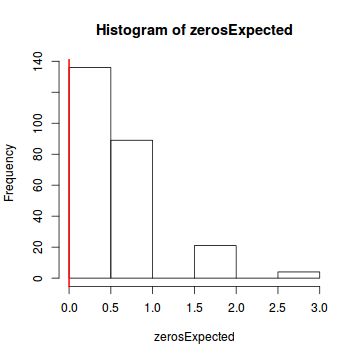

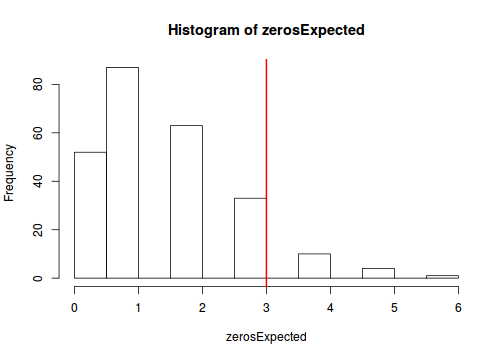

The DHARMa package also provides elegant ways to explore overdispersion, zero-inflation (and spatial and temporal autocorrelation). For these methods, the model is repeatedly refit with the simulated data to yield bootstrapped refitted residuals. The test for overdispersion compares the approximate deviance of the observed model with those of the simulated models.

    testOverdispersion(simulateResiduals(dat.glm, refit=T))

    	Overdispersion test via comparison to simulation under H0

    data:  simulateResiduals(dat.glm, refit = T)
    dispersion = 0.95895, p-value = 0.472
    alternative hypothesis: overdispersion

    testZeroInflation(simulateResiduals(dat.glm, refit=T))

    	Zero-inflation test via comparison to expected zeros with simulation under H0

    data:  simulateResiduals(dat.glm, refit = T)
    ratioObsExp = 0, p-value = 0.456
    alternative hypothesis: more

Conclusions: there is no evidence of overdispersion nor zero-inflation.

The AER package includes a dispersiontest() function which takes a slightly different approach. In its simplest form, the dispersiontest() function considers variance as equal to: $$ var(y) = \mu + \alpha \times \mu $$ If $\alpha < 0$, the model is underdispersed and if $\alpha > 0$, the model is overdispersed. $\alpha$ is estimated via auxiliary OLS regression and tested against a null hypothesis of $\alpha = 0$. Dispersion is also estimated according to: $$ \phi = \alpha + 1 $$

    library(AER)
    dispersiontest(dat.glm)

    	Overdispersion test

    data:  dat.glm
    z = -0.4719, p-value = 0.6815
    alternative hypothesis: true dispersion is greater than 1
    sample estimates:
    dispersion 
     0.8813912 

Conclusions - there is no evidence that the model is overdispersed. If anything it is slightly underdispersed (however as this is a very small fabricated data set, such underdispersion is likely to be an artifact).

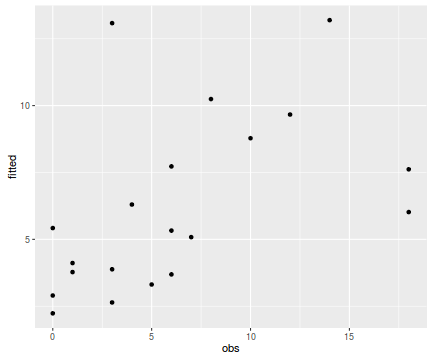

Comparing fitted values to observed counts

Whilst it does not necessarily follow that a model that can accurately predict the training data is a good model, it is true that a model that cannot predict the training data is unlikely to be a good model. Therefore if there is not a reasonably good relationship between the fitted values and the observed values, it is likely that our model is ill-defined or inadequate in some way.

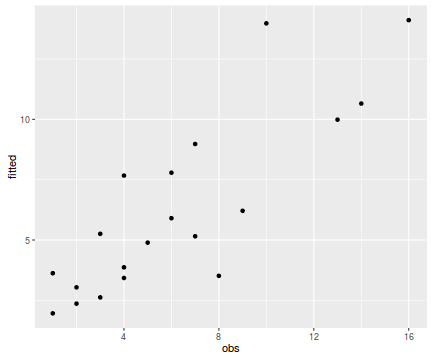

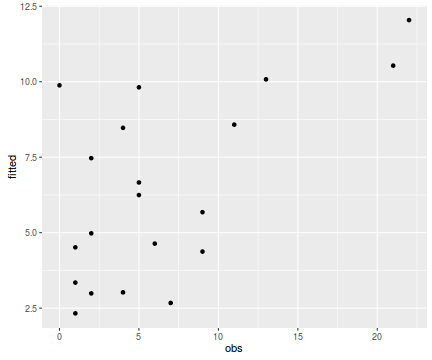

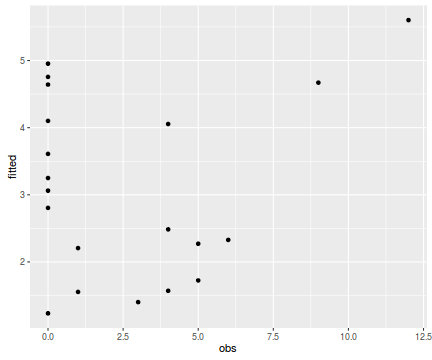

    tempdata <- data.frame(obs=dat$y, fitted=fitted(dat.glm))
    library(ggplot2)
    ggplot(tempdata, aes(y=fitted, x=obs)) + geom_point()

Conclusions - the relationship between fitted values and observed counts is reasonably good. There is nothing to suggest that the model is grossly inadequate etc.

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the Wald statistic ($z$) for the slope parameter.

summary(dat.glm)

    Call:
    glm(formula = y ~ x, family = "poisson", data = dat)

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -1.6353  -0.7049   0.0440   0.5624   2.0457

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept)  0.56002    0.25395   2.205   0.0274 *
    x            0.11151    0.01858   6.000 1.97e-09 ***
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 55.614  on 19  degrees of freedom
    Residual deviance: 17.226  on 18  degrees of freedom
    AIC: 90.319

    Number of Fisher Scoring iterations: 4

Conclusions: We would reject the null hypothesis (p<0.05). An increase in $x$ is associated with a significant linear increase (positive slope) in $\log y$ abundance. Every 1 unit increase in $x$ is associated with a 0.1115 unit increase in $\log y$ abundance. We usually express this in terms of $y$ rather than $\log y$, so every 1 unit increase in $x$ is associated with a ($e^{0.1115}=1.12$) 1.12-fold (12%) increase in $y$ abundance.
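As a worked check of the Wald statistic, the $z$ value and its two-sided p-value can be recomputed from the rounded estimate and standard error printed in the summary. The variable names are purely illustrative, and small differences from the printed p-value are expected because the inputs are rounded.

```r
# Values copied (rounded) from the slope row of the summary() output
est <- 0.11151
se  <- 0.01858

# Wald statistic: estimate divided by its standard error
z <- est / se

# Two-sided p-value from the standard normal distribution
p <- 2 * pnorm(-abs(z))

round(z, 2)
signif(p, 3)
```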

P-values are widely acknowledged as a rather flawed means of inference (as is hypothesis testing in general). An alternative way of exploring the characteristics of parameter estimates is to calculate their confidence intervals. Confidence intervals that do not include the null hypothesis parameter value (e.g. an effect of 0) are an indication of a significant effect. Recall however, that in frequentist statistics a 95% confidence interval is interpreted as a range that would contain the true parameter value in 95 out of 100 (95%) repeated samples.

    library(gmodels)
    ci(dat.glm)

                 Estimate   CI lower  CI upper Std. Error      p-value
    (Intercept) 0.5600204 0.02649343 1.0935474 0.25394898 2.743671e-02
    x           0.1115114 0.07246668 0.1505562 0.01858457 1.970579e-09

Conclusions: A 1 unit increase in $x$ is associated with a ($e^{0.1115}=1.12$) 1.12-fold (1.08,1.16) increase in $y$ abundance.
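The back-transformation from the link (log) scale to a multiplicative effect can be sketched as follows. The limits printed above appear to correspond to a t-based interval on 18 residual degrees of freedom; that is an inference from the numbers rather than something stated in the source, so treat `df_resid` as an assumption.

```r
# Slope estimate and standard error copied from the ci() output
est <- 0.1115114
se  <- 0.01858457
df_resid <- 18   # assumed: residual degrees of freedom of the model

# Interval on the link (log) scale
crit <- qt(0.975, df_resid)
ci_link <- c(lower = est - crit * se, estimate = est, upper = est + crit * se)

# Exponentiate to express the effect as a multiplicative change in y
ci_resp <- exp(ci_link)
round(ci_resp, 2)
```

Exponentiating the limits (rather than exponentiating the standard error) keeps the interval asymmetric on the response scale, which is what a log link implies.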

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$quasi R^2 = 1-\left(\frac{deviance}{null~deviance}\right)$$

Conclusions: approximately1-(dat.glm$deviance/dat.glm$null)

[1] 0.6902629

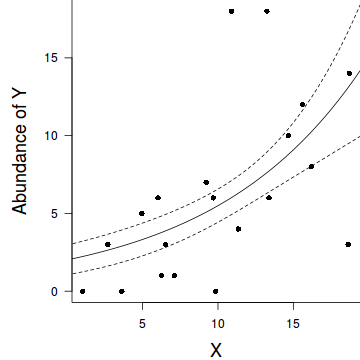

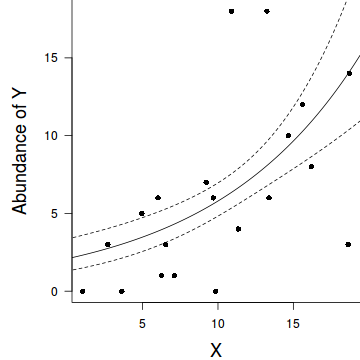

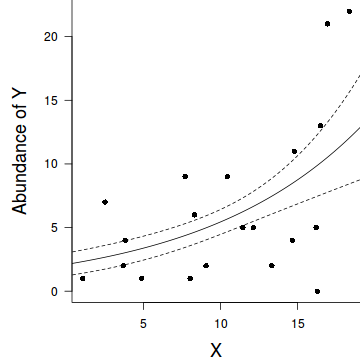

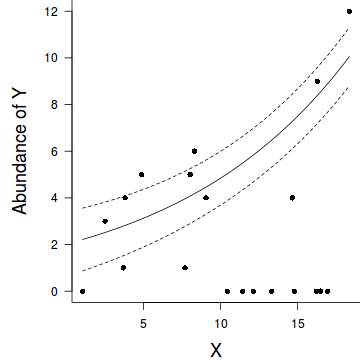

69% of the variability in abundance can be explained by its relationship to $x$. Technically, it is69% of the variability in $\log y$ (the link function) is explained by its relationship to $x$.Finally, we will create a summary plot.

    par(mar = c(4, 5, 0, 0))
    plot(y ~ x, data = dat, type = "n", ann = F, axes = F)
    points(y ~ x, data = dat, pch = 16)
    xs <- seq(0, 20, l = 1000)
    ys <- predict(dat.glm, newdata = data.frame(x = xs), type = "response", se = T)
    points(ys$fit ~ xs, col = "black", type = "l")
    lines(ys$fit - 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    lines(ys$fit + 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    axis(1)
    mtext("X", 1, cex = 1.5, line = 3)
    axis(2, las = 2)
    mtext("Abundance of Y", 2, cex = 1.5, line = 3)
    box(bty = "l")

Quasi-Poisson regression

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

Random data incorporating the following trends (effect parameters):

- define a function that will generate random samples from a quasi-poisson distribution $$QuasiPoisson\left(\frac{\mu}{\phi-1}, \frac{1}{\phi}\right)$$ where $\mu$ is the expected value (mean) and $\phi$ is the dispersion factor such that $var(y)=\phi\mu$. In the code below, the two arguments are used as the size and prob parameters of a negative binomial, which yields exactly this mean and variance.

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of log $y$ when $x$ equals 0 (intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto the scale (0,$\infty$) by applying the inverse of the log link, $e^{linear~predictor}$

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as means when drawing random numbers from a quasi-poisson distribution. This step adds random noise to the expected $y$ values and returns only 0's and positive integers.

    #Quasi-poisson distribution
    rqpois <- function(n, lambda, phi) {
      mu = lambda
      r = rnbinom(n, size=mu/phi/(1-1/phi),prob=1/phi)
      return(r)
    }

    set.seed(8)
    #The number of samples
    n.x <- 20
    #Create x values that are uniformly distributed throughout the range of 1 to 20
    x <- sort(runif(n = n.x, min = 1, max =20))
    mm <- model.matrix(~x)
    intercept <- 0.6
    slope=0.1
    #The linear predictor
    linpred <- mm %*% c(intercept,slope)
    #Predicted y values
    lambda <- exp(linpred)
    #Add some noise (overdispersed counts with dispersion phi=4)
    y <- rqpois(n=n.x, lambda=lambda, phi=4)
    dat.qp <- data.frame(y,x)
    data.qp <- dat.qp
    write.table(data.qp, file='../downloads/data/data.qp.csv', quote=F, row.names=F, sep=',')
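To see that this generator really does produce a mean of $\mu$ and a variance of $\phi\mu$, we can draw a large sample and compare the empirical moments. This is a small sanity-check sketch; the sample size, seed and the values of lambda and phi are arbitrary choices made for illustration.

```r
# Same generator as above, with size = mu/(phi - 1) written out directly
rqpois <- function(n, lambda, phi) {
  rnbinom(n, size = lambda / (phi - 1), prob = 1 / phi)
}

set.seed(123)
draws <- rqpois(1e5, lambda = 5, phi = 4)

# The mean should be close to lambda, the variance/mean ratio close to phi
c(mean = mean(draws), var_over_mean = var(draws) / mean(draws))
```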

Exploratory data analysis and initial assumption checking

The assumptions are:

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (for a positive integer response, a Poisson is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- the relationship between the linear predictor (right hand side of the regression formula) and the expected response on the link scale should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

- Dispersion is either 1 or overdispersion is accounted for in the model and is not due to excessive clumping or zero-inflation

Recall from Poisson regression that there are five main potential models that we could consider fitting to these data:

- Ordinary least squares regression (general linear model) - assumes normality of residuals

- Poisson regression - assumes mean=variance (dispersion=1)

- Quasi-poisson regression - a general solution to overdispersion. Assumes variance is a function of mean, dispersion estimated, however likelihood based statistics unavailable

- Negative binomial regression - a specific solution to overdispersion caused by clumping (due to an unmeasured latent variable). Scaling factor ($\omega$) is estimated along with the regression parameters.

- Zero-inflation model - a specific solution to overdispersion caused by excessive zeros (due to an unmeasured latent variable). Mixture of binomial and Poisson models.

Confirm non-normality and explore clumping

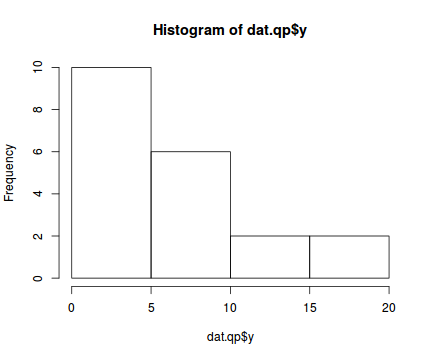

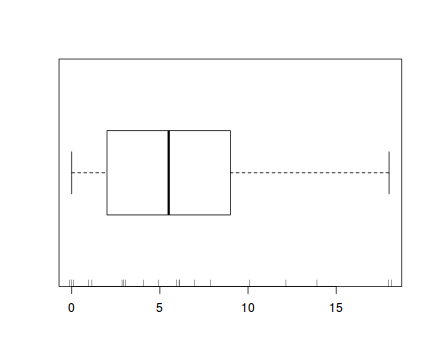

Check the distribution of the $y$ abundances

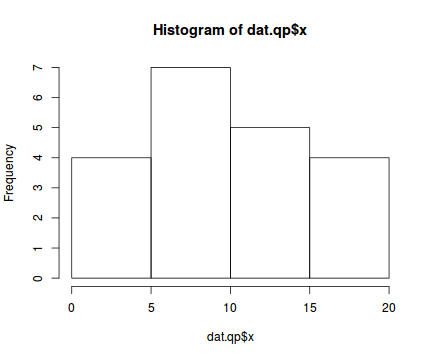

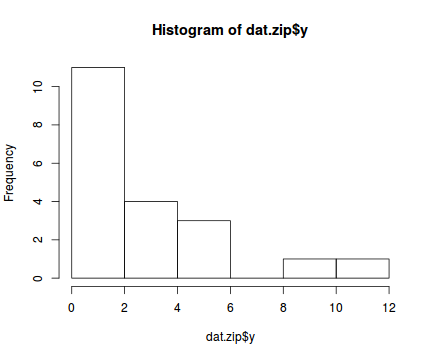

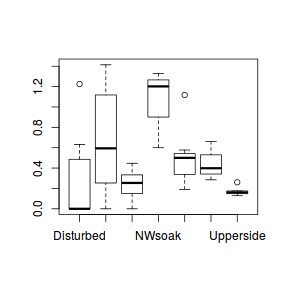

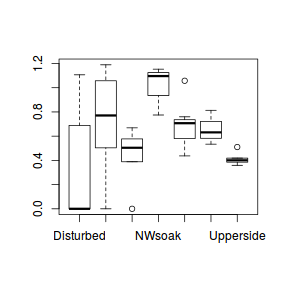

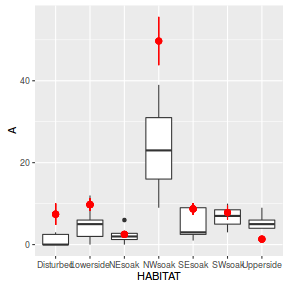

    hist(dat.qp$y)

    boxplot(dat.qp$y, horizontal=TRUE)
    rug(jitter(dat.qp$y), side=1)

There are definite signs of non-normality that would warrant some form of Poisson model. There is also some evidence of clumping that might result in overdispersion. Some zero values are present, yet there are not so many that we would be concerned about zero-inflation.

Confirm linearity

Let's now explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$).

hist(dat.qp$x)

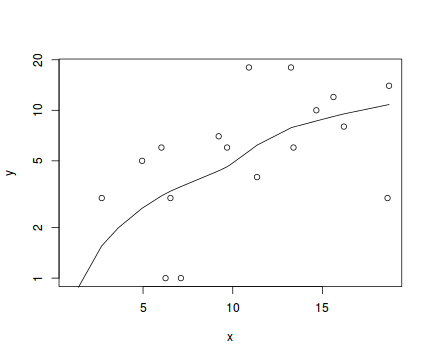

    #now for the scatterplot
    plot(y~x, dat.qp, log="y")
    with(dat.qp, lines(lowess(y~x)))

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a straight line and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear function of the predictor within the linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables

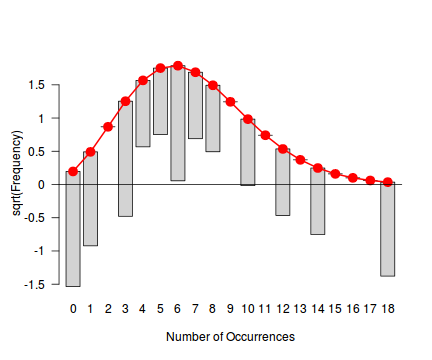

Explore the distributional properties of the response

Tukey suggested a variation on a histogram for non-Gaussian distributions. His hanging rootogram features a frequency histogram (on a square-root scale) hanging from an equivalent reference distribution (such as a Poisson distribution). Apparently, the hanging nature makes it easier to detect deviations of the observed counts from the reference distribution (since the distribution will be curvilinear) and the square-root scale allows greater emphasis of smaller frequencies (since a histogram will normally be dominated by large frequencies).

The goodfit() and rootogram() functions in the vcd (Visualizing Categorical Data) package provide convenient ways of quickly exploring the broad suitability of Poisson, Binomial and Negative Binomial distributions to a response. Note however, these are of limited use on small (n<30) data sets and in the current case are only provided for illustrative purposes.
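To make the idea of a hanging rootogram concrete, the quantities it displays can be computed by hand. The sketch below uses a hypothetical vector of overdispersed counts (not the tutorial data) and only base R; it is an illustration of the principle, not a reimplementation of vcd's rootogram().

```r
# Hypothetical overdispersed counts for illustration only
set.seed(42)
counts <- rnbinom(200, mu = 4, size = 1)

# Observed frequency of each count value from 0 to the maximum
k <- 0:max(counts)
obs <- as.vector(table(factor(counts, levels = k)))

# Frequencies expected under a Poisson with the same mean
expd <- length(counts) * dpois(k, lambda = mean(counts))

# Bars hang from sqrt(expected); 'hang' is where the bottom of each bar ends.
# Values far from zero flag counts that the Poisson fits poorly.
rooto <- data.frame(count = k,
                    sqrt_obs = sqrt(obs),
                    sqrt_exp = sqrt(expd),
                    hang = sqrt(expd) - sqrt(obs))
head(rooto)
```

For these simulated counts, the bar for zero hangs well below the reference line, reflecting many more zeros than a Poisson with the same mean would produce.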

    library(vcd)
    fit <- goodfit(dat.qp$y, type='poisson')
    summary(fit)

    Goodness-of-fit test for poisson distribution
                         X^2 df    P(> X^2)
    Likelihood Ratio 67.9097 10 1.12105e-10

    rootogram(fit)

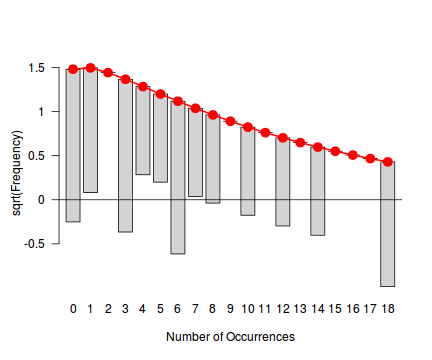

    fit <- goodfit(dat.qp$y, type='nbinom')
    summary(fit)

    Goodness-of-fit test for nbinomial distribution
                          X^2 df   P(> X^2)
    Likelihood Ratio 21.60097  9 0.01023342

    rootogram(fit)

Conclusions - the goodness of fit and rootogram explorations suggest that a Poisson distribution is not a good fit. Potentially, a Negative Binomial is a better choice, although again, small sample sizes will hinder the usefulness of this diagnostic.

As an alternative, let's explore the Ord and robust distribution plots.

    library(vcd)
    Ord_plot(dat.qp$y, tol=0.1)

distplot(dat.qp$y, type='poisson')

distplot(dat.qp$y, type="nbinom")

Conclusions - the Ord plot recommends a Negative Binomial. The Poissoness plot does show some evidence of curvilinearity (if that is indeed a word!). Not that the Negative binomialness plot is much better.

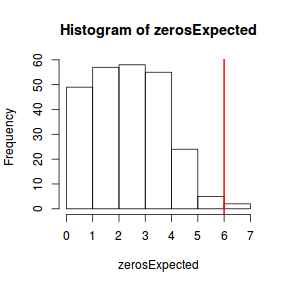

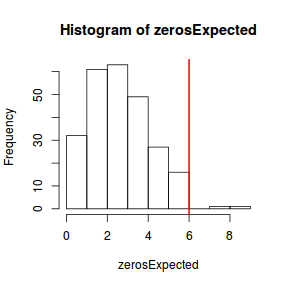

Explore zero inflation

Although we have already established that there are few zeros in the data (and thus zero-inflation is unlikely to be an issue), we can also explore this by comparing the proportion of zeros in the data to the proportion of zeros that would be expected from a Poisson distribution with a mean equal to the mean count of the data.
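The expected proportion of zeros under a Poisson distribution also has a closed form, $e^{-\mu}$, so the comparison can be made without simulation. The sketch below uses a hypothetical vector of 20 counts (`y_counts` is invented for illustration and is not the tutorial's data).

```r
# Hypothetical counts, for illustration only
y_counts <- c(0, 0, 0, 1, 2, 2, 3, 3, 4, 4, 5, 5, 6, 7, 8, 9, 10, 12, 15, 20)

# Observed proportion of zeros
obs_zero <- mean(y_counts == 0)

# Exact proportion of zeros expected from a Poisson with the same mean
exp_zero <- dpois(0, lambda = mean(y_counts))

c(observed = obs_zero, expected = exp_zero)
```

Using dpois() avoids the Monte Carlo noise of a simulated sample, which matters when the expected proportion is very small.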

    #proportion of 0's in the data
    dat.qp.tab<-table(dat.qp$y==0)
    dat.qp.tab/sum(dat.qp.tab)

    FALSE  TRUE
     0.85  0.15

    #proportion of 0's expected from a Poisson distribution
    mu <- mean(dat.qp$y)
    cnts <- rpois(1000, mu)
    dat.qp.tabE <- table(cnts == 0)
    dat.qp.tabE/sum(dat.qp.tabE)

    FALSE  TRUE
    0.997 0.003

In the above, the value under FALSE is the proportion of non-zero values in the data and the value under TRUE is the proportion of zeros in the data. In this example, there appears to be a higher proportion of zeros than would be expected. However, if we consider the small sample size of the observed data ($n=20$), there are still only a small number of zeros (3). Nevertheless, a slight excess of zeros might also contribute to overdispersion.

Model fitting or statistical analysis

A number of properties of the data during exploratory data analysis have suggested that overdispersion could be an issue. There is a hint of clumping of the response variable and there are also more zeros than we might have expected. At this point, we could consider which of these issues was likely to most severely impact the fit of the models (clumping or excessive zeros) and fit a model that specifically addresses the issue (negative binomial, zero-inflated Poisson or even zero-inflated negative binomial). Alternatively, we could perform the Quasi-Poisson regression (which is a more general approach) using the glm() function. The most important (=commonly used) parameters/arguments for Quasi-Poisson regression are:

- formula: the linear model relating the response variable to the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function). For example: family="quasipoisson" or equivalently family=quasipoisson(link="log")

- data: the data frame containing the data

I will now fit the Quasi-Poisson regression: $$\log(\mu_i)=\beta_0+\beta_1x_i, \hspace{1cm}var(y_i)=\phi\mu_i$$

dat.qp.glm <- glm(y~x, data=dat.qp, family="quasipoisson")

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data. There are a number of extractor functions (functions that extract or derive specific information from a model) available including:

| Extractor | Description |
| --- | --- |
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| deviance() | Extracts the deviance from the model |
| summary() | Summarizes the important output and characteristics of the model |
| anova() | Computes an analysis of deviance or log-likelihood ratio test (LRT) from the model |
| plot() | Generates a series of diagnostic plots from the model |

Let's explore the diagnostics - particularly the residuals.

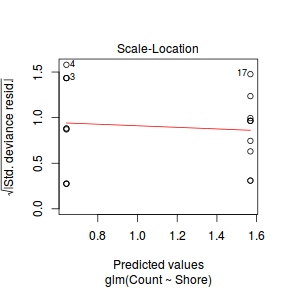

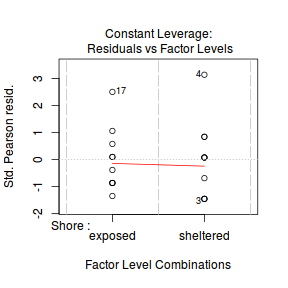

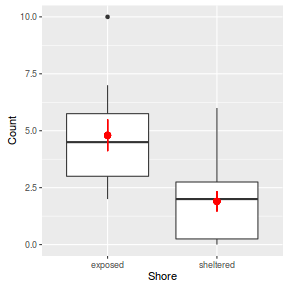

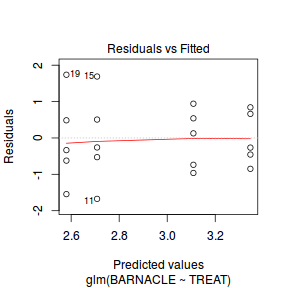

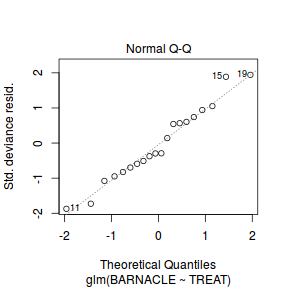

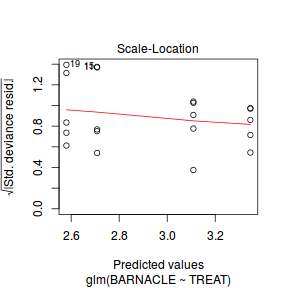

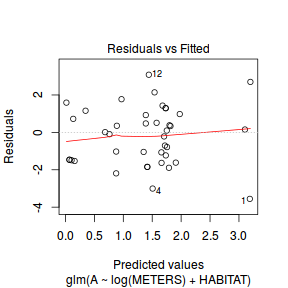

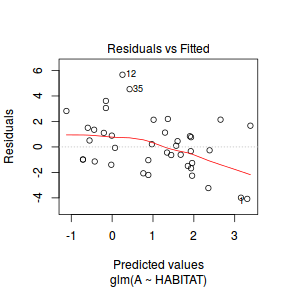

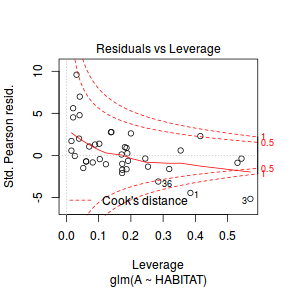

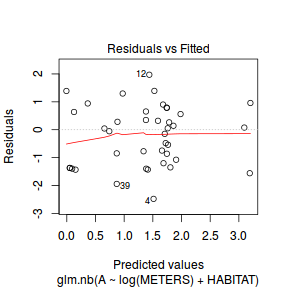

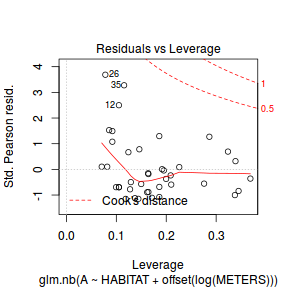

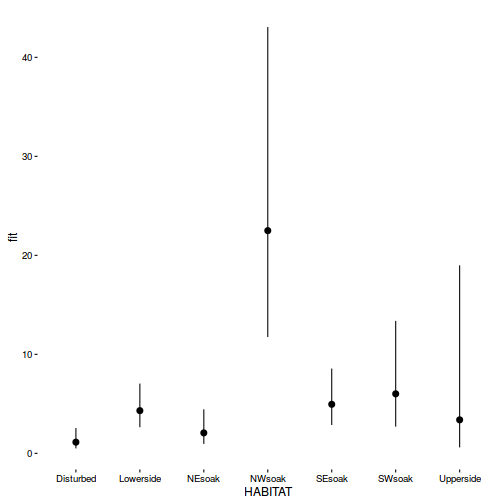

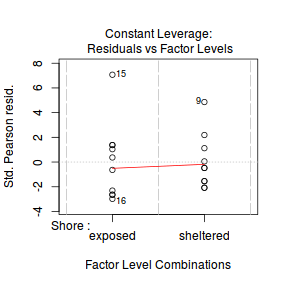

    plot(dat.qp.glm)

Conclusions: there are no obvious patterns in the residuals, or at least there are no obvious trends remaining that would be indicative of non-linearity. None of the observations are considered overly influential (Cook's D values not approaching 1).

Since the regression coefficients are estimated via quasi-likelihood, without assuming a fully specified error distribution, genuine log-likelihoods are not calculated. Consequently, derived model comparison metrics such as AIC are not formally available. Furthermore, as both Poisson and Quasi-Poisson models yield identical deviance, we cannot compare the fit of the Quasi-Poisson to a Poisson model via deviance either.

    dat.p.glm <- glm(y~x, data=dat.qp, family="poisson")
    #Poisson deviance
    dat.p.glm$deviance

[1] 69.98741

    #Quasi-poisson deviance
    dat.qp.glm$deviance

[1] 69.98741
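The same behaviour can be reproduced on any count data set. The following sketch fits both families to hypothetical overdispersed counts (simulated here, not the tutorial's `dat.qp`) and confirms that the point estimates and deviance coincide while no AIC is available for the quasi family.

```r
# Hypothetical overdispersed counts for illustration
set.seed(8)
x <- runif(30, 1, 20)
y <- rnbinom(30, mu = exp(0.6 + 0.1 * x), size = 2)

fit_p  <- glm(y ~ x, family = poisson)
fit_qp <- glm(y ~ x, family = quasipoisson)

# Identical coefficients and deviance
all.equal(coef(fit_p), coef(fit_qp))
all.equal(deviance(fit_p), deviance(fit_qp))

# The quasi family has no likelihood, so the stored AIC is NA
fit_qp$aic
```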

    tempdata <- data.frame(obs=dat.qp$y, fitted=fitted(dat.qp.glm))
    library(ggplot2)
    ggplot(tempdata, aes(y=fitted, x=obs)) + geom_point()

Conclusions - the relationship between fitted values and observed counts is ok without being great. There are clearly a few observations for which the model is very inaccurate.

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the t-statistic ($t$) for the slope parameter.

summary(dat.qp.glm)

    Call:
    glm(formula = y ~ x, family = "quasipoisson", data = dat.qp)

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -3.3665  -1.7360  -0.1239   0.7436   3.9335

    Coefficients:
                Estimate Std. Error t value Pr(>|t|)
    (Intercept)   0.6996     0.4746   1.474   0.1577
    x             0.1005     0.0353   2.846   0.0107 *
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for quasipoisson family taken to be 3.698815)

        Null deviance: 101.521  on 19  degrees of freedom
    Residual deviance:  69.987  on 18  degrees of freedom
    AIC: NA

    Number of Fisher Scoring iterations: 5

Conclusions: Note the dispersion (scale) factor is estimated as 3.699. Whilst this is greater than one (thus confirming overdispersion), it would not be considered a large degree of overdispersion. So although negative binomial and zero-inflation models are typically preferred over Quasi-Poisson models (as they address the causes of overdispersion more directly), in this case, it is unlikely to make much difference.

We would reject the null hypothesis (p<0.05). An increase in $x$ is associated with a significant linear increase (positive slope) in $\log y$ abundance. Every 1 unit increase in $x$ is associated with a 0.1005 unit increase in $\log y$ abundance. We usually express this in terms of $y$ rather than $\log y$, so every 1 unit increase in $x$ is associated with a ($e^{0.1005}=1.11$) 1.11-fold (11%) increase in $y$ abundance.

P-values are widely acknowledged as a rather flawed means of inference (as is hypothesis testing in general). An alternative way of exploring the characteristics of parameter estimates is to calculate their confidence intervals. Confidence intervals that do not include the null hypothesis parameter value (e.g. an effect of 0) are an indication of a significant effect. Recall however, that in frequentist statistics a 95% confidence interval is interpreted as a range that would contain the true parameter value in 95 out of 100 (95%) repeated samples.

    library(gmodels)
    ci(dat.qp.glm)

                 Estimate    CI lower  CI upper Std. Error    p-value
    (Intercept) 0.6996503 -0.29743825 1.6967388 0.47459569 0.15770206
    x           0.1004823  0.02631858 0.1746461 0.03530057 0.01071259

Conclusions: A 1 unit increase in $x$ is associated with a ($e^{0.1005}=1.11$) 1.11-fold (1.03,1.19) increase in $y$ abundance.

Note that if we compare the Quasi-Poisson parameter estimates to those of a Poisson, they have identical point estimates, yet the Quasi-Poisson model yields larger standard errors and wider confidence intervals, reflecting the greater variability modeled. In fact, the standard errors of the Quasi-Poisson regression coefficients will be $\sqrt{\phi}$ times greater than those of the Poisson model ($0.035 = \sqrt{3.699}\times 0.0184$).
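The $\sqrt{\phi}$ scaling can be checked directly. In the sketch below (hypothetical simulated counts, illustrative object names), the dispersion is computed as the sum of squared Pearson residuals divided by the residual degrees of freedom, which is the estimate that summary() reports for the quasipoisson family, and the standard errors of the two fits are then compared.

```r
# Hypothetical overdispersed counts for illustration
set.seed(8)
x <- runif(30, 1, 20)
y <- rnbinom(30, mu = exp(0.6 + 0.1 * x), size = 2)

fit_p  <- glm(y ~ x, family = poisson)
fit_qp <- glm(y ~ x, family = quasipoisson)

# Dispersion: Pearson chi-square divided by residual degrees of freedom
phi_hat <- sum(residuals(fit_p, type = "pearson")^2) / df.residual(fit_p)

# Standard errors from each fit
se_p  <- summary(fit_p)$coefficients[, "Std. Error"]
se_qp <- summary(fit_qp)$coefficients[, "Std. Error"]

all.equal(phi_hat, summary(fit_qp)$dispersion)
all.equal(se_qp, sqrt(phi_hat) * se_p)
```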

    summary(dat.p.glm<-glm(y~x,data=dat.qp, family="poisson"))

    Call:
    glm(formula = y ~ x, family = "poisson", data = dat.qp)

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -3.3665  -1.7360  -0.1239   0.7436   3.9335

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept)  0.69965    0.24677   2.835  0.00458 **
    x            0.10048    0.01835   5.474 4.39e-08 ***
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 101.521  on 19  degrees of freedom
    Residual deviance:  69.987  on 18  degrees of freedom
    AIC: 134.88

    Number of Fisher Scoring iterations: 5

    ci(dat.p.glm)

                 Estimate   CI lower  CI upper Std. Error      p-value
    (Intercept) 0.6996503 0.18120562 1.2180949 0.24677006 4.579247e-03
    x           0.1004823 0.06192025 0.1390444 0.01835483 4.389110e-08

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$quasi R^2 = 1-\left(\frac{deviance}{null~deviance}\right)$$

Conclusions: approximately1-(dat.qp.glm$deviance/dat.qp.glm$null)

[1] 0.310614

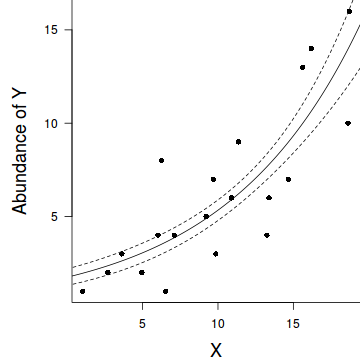

31.1% of the variability in abundance can be explained by its relationship to $x$. Technically, it is31.1% of the variability in $\log y$ (the link function) is explained by its relationship to $x$.Finally, we will create a summary plot.

    par(mar = c(4, 5, 0, 0))
    plot(y ~ x, data = dat.qp, type = "n", ann = F, axes = F)
    points(y ~ x, data = dat.qp, pch = 16)
    xs <- seq(0, 20, l = 1000)
    ys <- predict(dat.qp.glm, newdata = data.frame(x = xs), type = "response", se = T)
    points(ys$fit ~ xs, col = "black", type = "l")
    lines(ys$fit - 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    lines(ys$fit + 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    axis(1)
    mtext("X", 1, cex = 1.5, line = 3)
    axis(2, las = 2)
    mtext("Abundance of Y", 2, cex = 1.5, line = 3)
    box(bty = "l")

Observation-level random effect

One of the 'causes' of an overdispersed model is that important unmeasured (latent) effect(s) have not been incorporated. That is, there are one or more unobserved influences that result in a greater amount of variability in the response than would be expected from the Poisson distribution. We could attempt to 'mop up' this additional variation by adding a latent effect as an observation-level random effect in the model.

This random effect is just a numeric vector containing a sequence of integers from 1 to the number of observations. It acts as a proxy for the latent effects.
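To illustrate why an observation-level effect accounts for overdispersion, the sketch below simulates Poisson counts with and without a per-observation normal deviate added to the linear predictor. The mean, the standard deviation `sigma` and the seed are arbitrary values assumed for the illustration.

```r
set.seed(2016)
n <- 1e4
eta <- log(5)     # linear predictor on the log scale (assumed value)
sigma <- 0.5      # SD of the observation-level effect (assumed value)

# Plain Poisson counts
y_pois <- rpois(n, exp(eta))

# Poisson counts with a latent, observation-level effect on the link scale
gamma <- rnorm(n, mean = 0, sd = sigma)
y_olre <- rpois(n, exp(eta + gamma))

# Variance-to-mean ratio: about 1 for the Poisson, clearly above 1 otherwise
c(poisson = var(y_pois) / mean(y_pois),
  olre    = var(y_olre) / mean(y_olre))
```

Fitting a random intercept per observation runs this logic in reverse: the extra spread in the counts is attributed to the estimated variance of the latent effect.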

We will use the same data as the previous section on Quasipoisson to demonstrate observation-level random effects. Consequently, the exploratory data analysis section is the same as above (and therefore not repeated here).

Model fitting or statistical analysis

In order to incorporate observation-level random effects, we must use generalized linear mixed effects modelling. For this example, we will use the glmmPQL() function of the MASS package (which fits the model via repeated calls to the lme() function of the nlme package). The most important (=commonly used) parameters/arguments for this function are:

- fixed: the linear model relating the response variable to the fixed component of the linear predictor

- random: the linear model relating the response variable to the random component of the linear predictor

- family: specification of the error distribution (and link function). Can be specified as either a string or as one of the family functions (which allows for the explicit declaration of the link function). For example: family="poisson" or equivalently family=poisson(link="log")

- data: the data frame containing the data

I will now fit the observation-level random effects Poisson regression: $$\log(\mu_i)=\beta_0+\beta_1x_i+\gamma_i, \hspace{1cm}\gamma_i\sim{}N(0,\sigma^2)$$ where $\gamma_i$ is the observation-level random effect.

    dat.qp$units <- 1:nrow(dat.qp)
    library(MASS)
    dat.qp.glmm <- glmmPQL(y~x, random=~1|units, data=dat.qp, family="poisson")
    #OR
    library(lme4)
    dat.qp.glmer <- glmer(y~x+(1|units), data=dat.qp, family="poisson")

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data. There are a number of extractor functions (functions that extract or derive specific information from a model) available including:

| Extractor | Description |
| --- | --- |
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| fixef() | Extracts the model coefficients of the fixed components |
| ranef() | Extracts the model coefficients of the random components |
| deviance() | Extracts the deviance from the model |
| summary() | Summarizes the important output and characteristics of the model |
| anova() | Computes an analysis of deviance or log-likelihood ratio test (LRT) from the model |
| plot() | Generates a series of diagnostic plots from the model |

Let's explore the diagnostics - particularly the residuals.

plot(dat.qp.glmm)

    dat.qp.resid <- sum(resid(dat.qp.glmm, type = "pearson")^2)
    1-pchisq(dat.qp.resid, dat.qp.glmm$fixDF$X[2])

[1] 0.703327

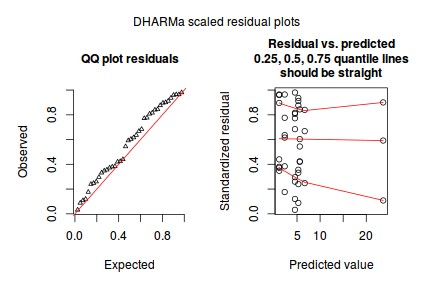

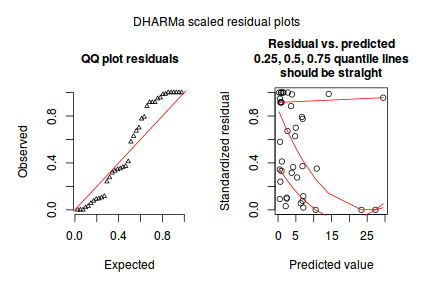

    library(DHARMa)
    dat.qp.glmer.sim <- simulateResiduals(dat.qp.glmer)
    plotSimulatedResiduals(dat.qp.glmer.sim)

    testUniformity(dat.qp.glmer.sim)

    One-sample Kolmogorov-Smirnov test
    data: simulationOutput$scaledResiduals
    D = 0.144, p-value = 0.8013
    alternative hypothesis: two-sided

    dat.qp.glmer.sim <- simulateResiduals(dat.qp.glmer, refit=T)
    testOverdispersion(dat.qp.glmer.sim)

    Overdispersion test via comparison to simulation under H0
    data: dat.qp.glmer.sim
    dispersion = 0.97779, p-value = 0.412
    alternative hypothesis: overdispersion

    testZeroInflation(dat.qp.glmer.sim)

    Zero-inflation test via comparison to expected zeros with simulation under H0
    data: dat.qp.glmer.sim
    ratioObsExp = 1.9841, p-value = 0.06
    alternative hypothesis: more

Conclusions:

- the qq-plot looks fine.

- although there is a bit of a pattern in the residuals, the data set is very small, and the pattern is really only due to a couple of points.

- no evidence of a lack of fit - based on Kolmogorov-Smirnov test of uniformity

- there is no evidence for overdispersion

- there is only weak evidence of zero-inflation

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the t-statistic ($t$) for the slope parameter.

summary(dat.qp.glmm)

    Linear mixed-effects model fit by maximum likelihood
     Data: dat.qp
      AIC BIC logLik
       NA  NA     NA

    Random effects:
     Formula: ~1 | units
            (Intercept) Residual
    StdDev:   0.3998288 1.503205

    Variance function:
     Structure: fixed weights
     Formula: ~invwt
    Fixed effects: y ~ x
                    Value Std.Error DF  t-value p-value
    (Intercept) 0.7366644 0.4999627 18 1.473439  0.1579
    x           0.1020621 0.0392409 18 2.600913  0.0181
     Correlation:
      (Intr)
    x -0.935

    Standardized Within-Group Residuals:
           Min         Q1        Med         Q3        Max
    -1.7186856 -0.9891204 -0.1497398  0.2234752  1.2896136

    Number of Observations: 20
    Number of Groups: 20

Conclusions: We would reject the null hypothesis (p<0.05). An increase in $x$ is associated with a significant linear increase (positive slope) in $\log y$ abundance. Every 1 unit increase in $x$ is associated with a 0.1021 unit increase in $\log y$ abundance. We usually express this in terms of $y$ rather than $\log y$, so every 1 unit increase in $x$ is associated with a ($e^{0.1021}=1.11$) 1.11-fold (11%) increase in $y$ abundance.

As indicated, P-values are widely acknowledged as a rather flawed means of inference (as is hypothesis testing in general). This is further exacerbated with mixed effects models. An alternative way of exploring the characteristics of parameter estimates is to calculate their confidence intervals. Confidence intervals that do not include the null hypothesis parameter value (e.g. an effect of 0) are an indication of a significant effect. Recall however, that in frequentist statistics a 95% confidence interval is interpreted as a range that would contain the true parameter value in 95 out of 100 (95%) repeated samples.

    library(gmodels)
    ci(dat.qp.glmm)

                 Estimate    CI lower  CI upper Std. Error DF    p-value
    (Intercept) 0.7366644 -0.31371824 1.7870471  0.4999627 18 0.15790575
    x           0.1020621  0.01962008 0.1845042  0.0392409 18 0.01806468

Conclusions: A 1 unit increase in $x$ is associated with a ($e^{0.1021}=1.11$) 1.11-fold (1.02,1.20) increase in $y$ abundance.

Compared to either the Quasi-Poisson or the simple Poisson models fitted above, the observation-level random effects model has larger standard errors and wider confidence intervals (in this case), reflecting the greater variability built into the model.

    summary(dat.p.glm<-glm(y~x,data=dat.qp, family="poisson"))

    Call:
    glm(formula = y ~ x, family = "poisson", data = dat.qp)

    Deviance Residuals:
        Min       1Q   Median       3Q      Max
    -3.3665  -1.7360  -0.1239   0.7436   3.9335

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept)  0.69965    0.24677   2.835  0.00458 **
    x            0.10048    0.01835   5.474 4.39e-08 ***
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 101.521  on 19  degrees of freedom
    Residual deviance:  69.987  on 18  degrees of freedom
    AIC: 134.88

    Number of Fisher Scoring iterations: 5

    ci(dat.p.glm)

                 Estimate   CI lower  CI upper Std. Error      p-value
    (Intercept) 0.6996503 0.18120562 1.2180949 0.24677006 4.579247e-03
    x           0.1004823 0.06192025 0.1390444 0.01835483 4.389110e-08

Finally, we will create a summary plot.

    par(mar = c(4, 5, 0, 0))
    plot(y ~ x, data = dat.qp, type = "n", ann = F, axes = F)
    points(y ~ x, data = dat.qp, pch = 16)
    xs <- seq(0, 20, l = 1000)
    coefs <- fixef(dat.qp.glmm)
    mm <- model.matrix(~x, data=data.frame(x=xs))
    ys <- as.vector(coefs %*% t(mm))
    se <- sqrt(diag(mm %*% vcov(dat.qp.glmm) %*% t(mm)))
    lwr <- exp(ys-qt(0.975,dat.qp.glmm$fixDF$X[2])*se)
    upr <- exp(ys+qt(0.975,dat.qp.glmm$fixDF$X[2])*se)
    lwr50 <- exp(ys-se)
    upr50 <- exp(ys+se)
    points(exp(ys) ~ xs, col = "black", type = "l")
    #lines(lwr ~ xs, col = "black", type = "l", lty = 2)
    #lines(upr ~ xs, col = "black", type = "l", lty = 2)
    lines(lwr50 ~ xs, col = "black", type = "l", lty = 2)
    lines(upr50 ~ xs, col = "black", type = "l", lty = 2)
    axis(1)
    mtext("X", 1, cex = 1.5, line = 3)
    axis(2, las = 2)
    mtext("Abundance of Y", 2, cex = 1.5, line = 3)
    box(bty = "l")

Negative binomial regression

Scenario and Data

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

Random data incorporating the following trends (effect parameters):

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of log $y$ when $x$ equals 0 (intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto the scale (0,$\infty$) by applying the inverse of the log link, $e^{linear~predictor}$

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as means when drawing random numbers from a negative binomial distribution (with size = 1). This step adds random noise to the expected $y$ values and returns only 0's and positive integers.

    set.seed(37) #16 #35
    #The number of samples
    n.x <- 20
    #Create x values that are uniformly distributed throughout the range of 1 to 20
    x <- sort(runif(n = n.x, min = 1, max =20))
    mm <- model.matrix(~x)
    intercept <- 0.6
    slope=0.1
    #The linear predictor
    linpred <- mm %*% c(intercept,slope)
    #Predicted y values
    lambda <- exp(linpred)
    #Add some noise and make negative binomial
    y <- rnbinom(n=n.x, mu=lambda, size=1)
    dat.nb <- data.frame(y,x)
    data.nb <- dat.nb
    write.table(data.nb, file='../downloads/data/data.nb.csv', quote=F, row.names=F, sep=',')
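With the mu/size parameterisation used by rnbinom() above, the variance is $\mu + \mu^2/size$, so a size of 1 gives considerably more spread than a Poisson with the same mean. A quick sketch to confirm this empirically (the mean of 5, the sample size and the seed are arbitrary choices for illustration):

```r
set.seed(37)
mu <- 5
size <- 1

# Large sample from the negative binomial in its mean/size form
draws <- rnbinom(1e5, mu = mu, size = size)

# Empirical moments alongside the theoretical variance mu + mu^2/size
c(mean = mean(draws), var = var(draws), theory_var = mu + mu^2 / size)
```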

Exploratory data analysis and initial assumption checking

The assumptions are:- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of positive integers response - a Poisson is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- The relationship between the linear predictor (right hand side of the regression formula) and the expected response on the link (log) scale should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

- Dispersion is either 1 or overdispersion is otherwise accounted for in the model

- The number of zeros is either not excessive or else they are specifically addressed by the model
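The dispersion assumption can be checked numerically once a Poisson model has been fitted. One common estimate is the sum of squared Pearson residuals divided by the residual degrees of freedom. The sketch below illustrates this on simulated, deliberately overdispersed counts; the data, seed and object names are assumptions made for illustration only.

```r
# Simulated, deliberately overdispersed counts (illustrative assumption)
set.seed(1)
n <- 200
x <- runif(n, min = 1, max = 20)
y <- rnbinom(n, mu = exp(0.6 + 0.1 * x), size = 1)

# Fit a Poisson GLM and estimate dispersion as Pearson chi-square / residual df
fit.pois <- glm(y ~ x, family = poisson)
pearson.chisq <- sum(resid(fit.pois, type = "pearson")^2)
dispersion <- pearson.chisq / df.residual(fit.pois)
dispersion  # values well above 1 suggest overdispersion
```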

When counts are all very large (not close to 0) and their ranges do not span orders of magnitude, they take on very Gaussian properties (symmetrical distribution and variance independent of the mean). Given that models based on the Gaussian distribution are more optimized and recognized than Generalized Linear Models, it can be prudent to adopt Gaussian models for such data. Hence it is a good idea to first explore whether a Poisson model is likely to be more appropriate than a standard Gaussian model.

Recall from Poisson regression that there are five main potential models that we could consider fitting to these data:

- Ordinary least squares regression (general linear model) - assumes normality of residuals

- Poisson regression - assumes mean=variance (dispersion=1)

- Quasi-poisson regression - a general solution to overdispersion. Assumes variance is a function of mean, dispersion estimated, however likelihood based statistics unavailable

- Negative binomial regression - a specific solution to overdispersion caused by clumping (due to an unmeasured latent variable). Scaling factor ($\omega$) is estimated along with the regression parameters.

- Zero-inflation model - a specific solution to overdispersion caused by excessive zeros (due to an unmeasured latent variable). Mixture of binomial and Poisson models.
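As a rough sketch of how the first four of these candidate models might be fitted in R, the following uses simulated counts (the data, seed and object names are assumptions for illustration). The zero-inflated model is left out of the runnable code because it needs an additional package such as pscl.

```r
library(MASS)  # provides glm.nb()

# Simulated counts for illustration only (assumed values)
set.seed(1)
n <- 200
x <- runif(n, min = 1, max = 20)
dat <- data.frame(x = x, y = rnbinom(n, mu = exp(0.6 + 0.1 * x), size = 2))

fit.lm <- lm(y ~ x, data = dat)                            # ordinary least squares
fit.pois <- glm(y ~ x, data = dat, family = poisson)       # Poisson
fit.qpois <- glm(y ~ x, data = dat, family = quasipoisson) # quasi-Poisson
fit.nb <- glm.nb(y ~ x, data = dat)                        # negative binomial
# A zero-inflated model would need an additional package, e.g. pscl::zeroinfl()

# The Poisson and quasi-Poisson fits share coefficients; only the
# dispersion (and hence the standard errors) differ
cbind(poisson = coef(fit.pois), quasipoisson = coef(fit.qpois))
summary(fit.qpois)$dispersion
```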

Confirm non-normality and explore clumping

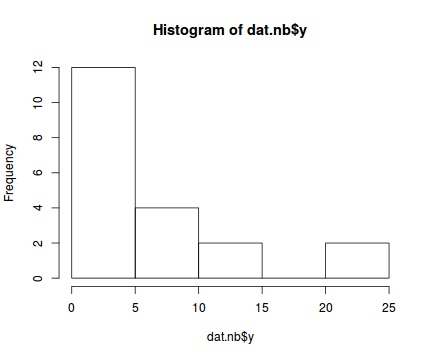

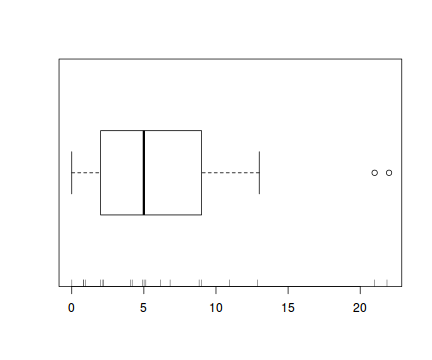

Check the distribution of the $y$ abundances

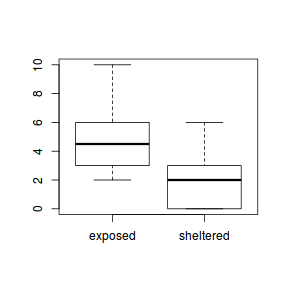

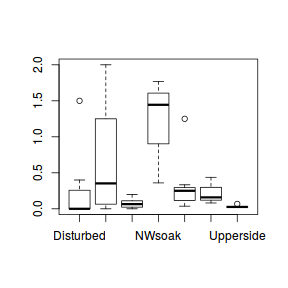

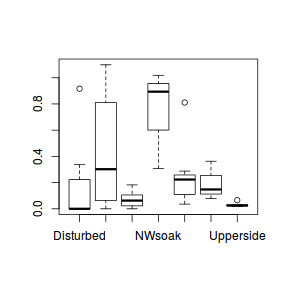

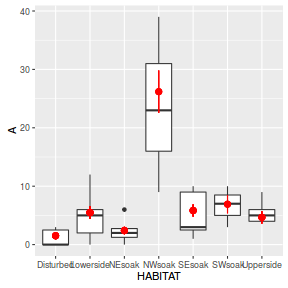

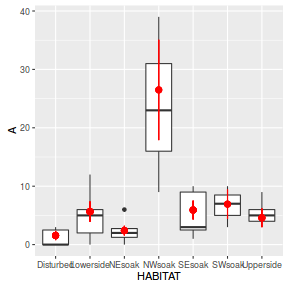

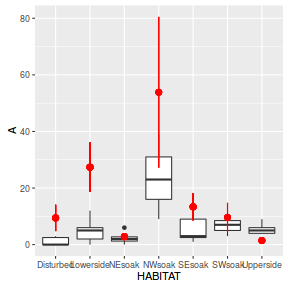

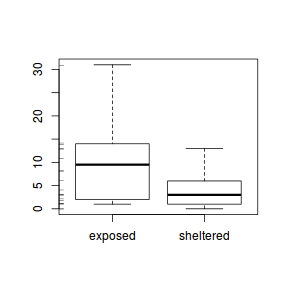

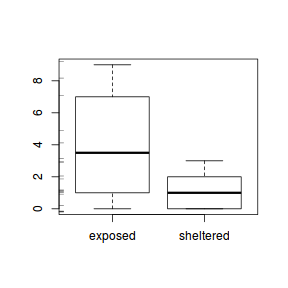

    hist(dat.nb$y)

    boxplot(dat.nb$y, horizontal=TRUE)
    rug(jitter(dat.nb$y))

There are definite signs of non-normality that would warrant Poisson models. Further to that, the rug on the boxplot is suggestive of clumping (observations are not evenly distributed and well spaced out). Together, the skewness and clumping could point to an overdispersed Poisson. Negative binomial models are the most effective at modeling such data.

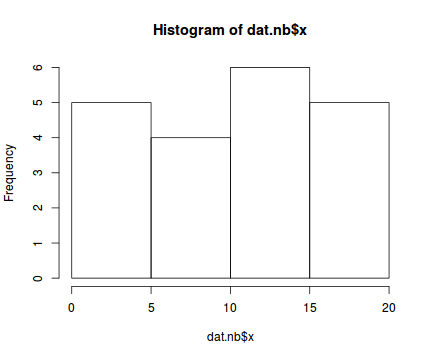

Confirm linearity

Let's now explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$).

    hist(dat.nb$x)

    #now for the scatterplot
    plot(y~x, dat.nb, log="y")
    with(dat.nb, lines(lowess(y~x)))

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a straight line and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables

Explore the distributional properties of the response

Let's explore the goodness of fit of the data to both Poisson and Negative Binomial distributions.

    library(vcd)
    fit <- goodfit(dat.nb$y, type='poisson')
    summary(fit)

         Goodness-of-fit test for poisson distribution

                          X^2 df     P(> X^2)
    Likelihood Ratio 73.64611 10 8.722665e-12

    rootogram(fit)

    fit <- goodfit(dat.nb$y, type='nbinom')
    summary(fit)

         Goodness-of-fit test for nbinomial distribution

                          X^2 df    P(> X^2)
    Likelihood Ratio 22.38866  9 0.007725515

    rootogram(fit)

    Ord_plot(dat.nb$y, tol=0.2)

    distplot(dat.nb$y, type='poisson')

    distplot(dat.nb$y, type="nbinom")

Conclusions - notwithstanding the very small sample size, it would appear that a Negative Binomial is a better fit to the data than a Poisson. Note, the Ord plot is likely to be suffering due to the small sample size and I am going to ignore it.

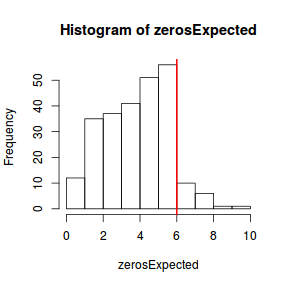

Explore zero inflation

Although we have already established that there are few zeros in the data (and thus zero-inflation is unlikely to be an issue), we can also explore this by comparing the number of zeros in the data to the number of zeros that would be expected from a Poisson distribution with a mean equal to the mean count of the data.
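The expected proportion of zeros does not have to be simulated: for a Poisson distribution with mean $\mu$, the probability of a zero is $e^{-\mu}$, which dpois() returns directly. The following minimal sketch uses a small hypothetical vector of counts (not the tutorial data) to show the comparison.

```r
# Hypothetical counts for illustration (not the tutorial data)
y <- c(0, 3, 1, 7, 2, 5, 4, 12, 6, 9)

# Observed proportion of zeros
prop.obs <- mean(y == 0)

# Proportion of zeros expected from a Poisson with the same mean: P(Y = 0) = exp(-mu)
mu <- mean(y)
prop.exp <- dpois(0, lambda = mu)

c(observed = prop.obs, expected = prop.exp)
```

An observed proportion far above the expected one would hint at zero-inflation.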

    #proportion of 0's in the data
    dat.nb.tab <- table(dat.nb$y==0)
    dat.nb.tab/sum(dat.nb.tab)

    FALSE  TRUE 
     0.95  0.05 

    #proportion of 0's expected from a Poisson distribution
    mu <- mean(dat.nb$y)
    cnts <- rpois(1000, mu)
    dat.nb.tabE <- table(cnts == 0)
    dat.nb.tabE/sum(dat.nb.tabE)

    FALSE 
        1 

In the above, the value under FALSE is the proportion of non-zero values and the value under TRUE is the proportion of zeros. In this example, the proportion of zeros observed (0.05) is only slightly higher than the proportion expected (none of the 1000 simulated Poisson counts were zero). Indeed, there was only a single zero observed. Hence, if there is overdispersion, it is unlikely to be due to excessive zeros.

Model fitting or statistical analysis

The boxplot of $y$ with the axis rug suggested that there might be some clumping (possibly due to some other unmeasured influence). We will therefore perform negative binomial regression using the glm.nb() function. The most important (=commonly used) parameters/arguments for negative binomial regression are:

- formula: the linear model relating the response variable to the linear predictor

- data: the data frame containing the data

I will now fit the negative binomial regression: $$log(\mu)=\beta_0+\beta_1x_1+\epsilon_i, \hspace{1cm}\epsilon\sim NB(\lambda, \phi)$$

    library(MASS)
    dat.nb.glm <- glm.nb(y~x, data=dat.nb)

Model evaluation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed fit the assumptions and was an appropriate fit to the data. There are a number of extractor functions (functions that extract or derive specific information from a model) available including:

| Extractor | Description |
| --- | --- |
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| deviance() | Extracts the deviance from the model |
| AIC() | Extracts the Akaike's Information Criterion from the model |
| extractAIC() | Extracts the generalized Akaike's Information Criterion from the model |
| summary() | Summarizes the important output and characteristics of the model |
| anova() | Computes an analysis of deviance or log-likelihood ratio test (LRT) from the model |
| plot() | Generates a series of diagnostic plots from the model |

Let's explore the diagnostics - particularly the residuals

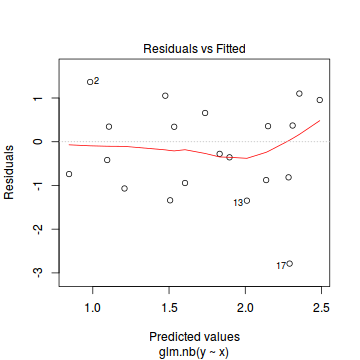

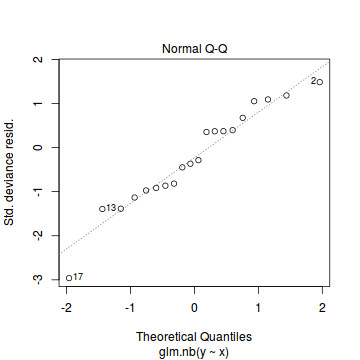

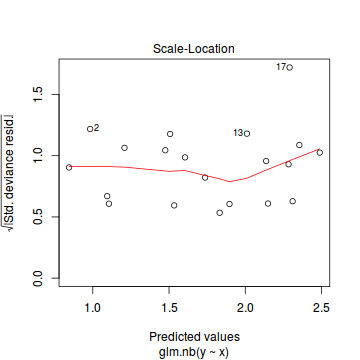

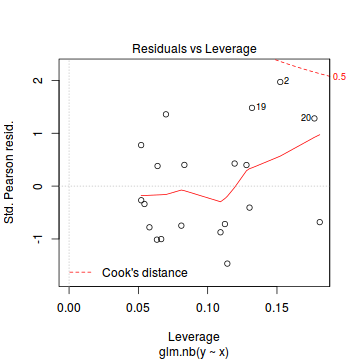

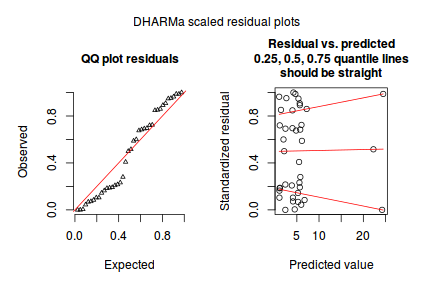

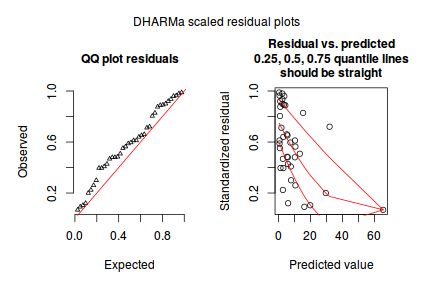

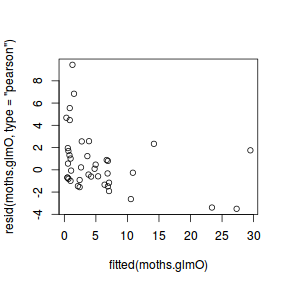

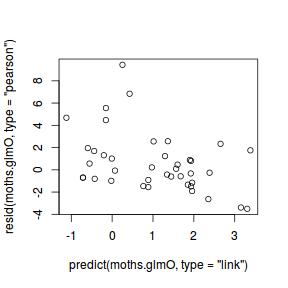

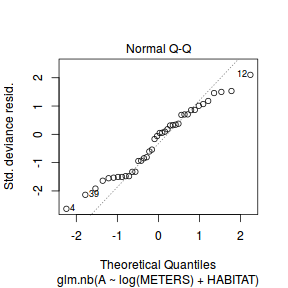

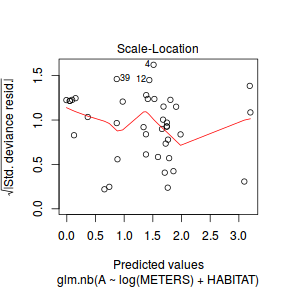

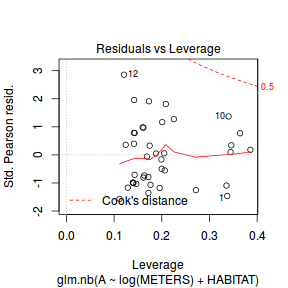

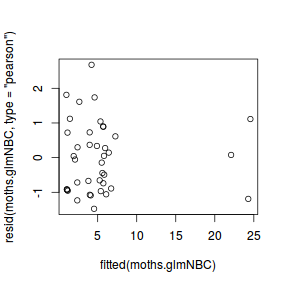

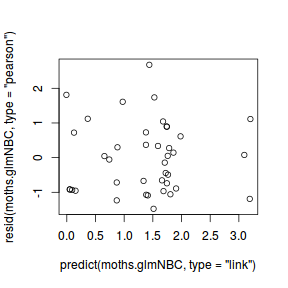

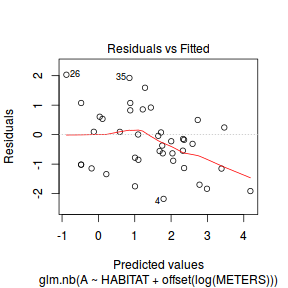

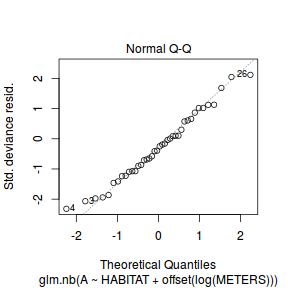

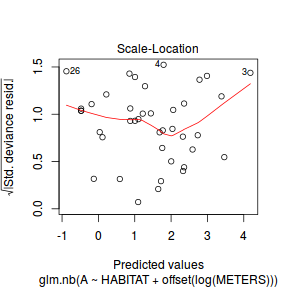

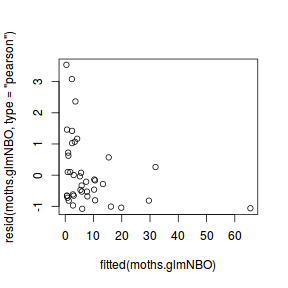

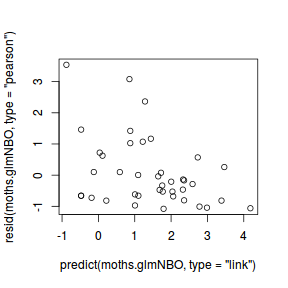

And now exploring the simulated residuals via the DHARMa package.plot(dat.nb.glm)

Conclusions: there is no obvious patterns in the residuals, or at least there are no obvious trends remaining that would be indicative of non-linearity.

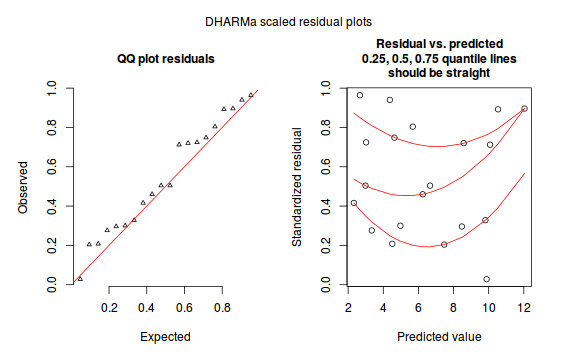

Conclusions: there is no obvious patterns in the residuals, or at least there are no obvious trends remaining that would be indicative of non-linearity.library(DHARMa)

dat.nb.sim <- simulateResiduals(dat.nb.glm) dat.nb.sim

[1] "Class DHARMa with simulated residuals based on 250 simulations with refit = FALSE" [1] "see ?DHARMa::simulateResiduals for help" [1] "-----------------------------" [1] 0.416 0.964 0.504 0.724 0.276 0.940 0.208 0.748 0.300 0.804 0.460 0.504 0.204 0.296 0.720 0.328 [17] 0.028 0.712 0.892 0.896

plotSimulatedResiduals(dat.nb.sim)

Exploring goodness-of-fit, overdispersion and zero-inflation

The negative binomial family should handle the majority of overdispersion. However, zero-inflation can still be an issue.

- Kolmogorov-Smirnov test for uniformity

    testUniformity(dat.nb.sim)

         One-sample Kolmogorov-Smirnov test

    data:  simulationOutput$scaledResiduals
    D = 0.162, p-value = 0.6702
    alternative hypothesis: two-sided

- Exploring overdispersion

    dat.nb.sim <- simulateResiduals(dat.nb.glm, refit=T)
    # deprecated in later DHARMa releases in favour of testDispersion()
    testOverdispersion(dat.nb.sim)

         Overdispersion test via comparison to simulation under H0

    data:  dat.nb.sim
    dispersion = 0.83369, p-value = 0.892
    alternative hypothesis: overdispersion

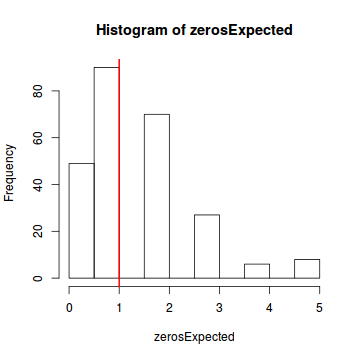

- Exploring zero-inflation

    testZeroInflation(dat.nb.sim)

         Zero-inflation test via comparison to expected zeros with simulation under H0

    data:  dat.nb.sim
    ratioObsExp = 0.66667, p-value = 0.444
    alternative hypothesis: more

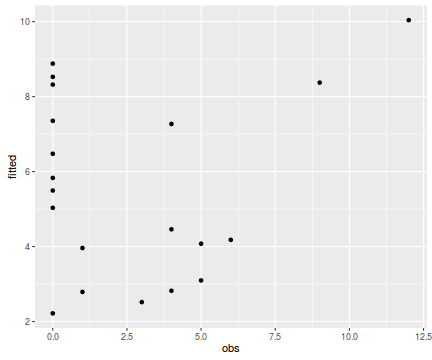

Comparing fitted values to observed counts

    tempdata <- data.frame(obs=dat.nb$y, fitted=fitted(dat.nb.glm))
    library(ggplot2)
    ggplot(tempdata, aes(y=fitted, x=obs)) + geom_point()

Conclusions - the relationship between fitted values and observed counts is reasonable without being spectacular.

In this demonstration we fitted the Negative binomial model. As well as estimating the regression coefficients, the negative binomial also estimates the dispersion parameter ($\phi$), thus requiring an additional degree of freedom. We could compare the fit of the negative binomial to that of a regular Poisson model (that assumes a dispersion of 1), using AIC.

    dat.glm <- glm(y~x, data=dat.nb, family="poisson")
    AIC(dat.nb.glm, dat.glm)

    Error in AIC.glm(dat.nb.glm, dat.glm): unused argument (dat.glm)

The error indicates that, in this session, the AIC() method dispatched for these models accepts only a single model (possibly a method supplied by another loaded package). Calling AIC() on each model separately (AIC(dat.nb.glm), then AIC(dat.glm)) should avoid the problem.

Conclusions: The AIC is smaller for the Negative binomial model, suggesting that it is a better fit than the Poisson model.
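If AIC() cannot be called with two models, each AIC can be computed directly from the log-likelihood as $-2\log L + 2k$, where $k$ is the number of estimated parameters. The following is a minimal sketch under the assumption that the data are regenerated with the simulation recipe used in this section; aic.manual() and the object names are hypothetical helpers introduced for illustration only.

```r
library(MASS)  # glm.nb()

# Regenerate data following the simulation recipe used in this section
set.seed(37)
n.x <- 20
x <- sort(runif(n = n.x, min = 1, max = 20))
y <- rnbinom(n = n.x, mu = exp(0.6 + 0.1 * x), size = 1)
dat <- data.frame(y, x)

fit.nb <- glm.nb(y ~ x, data = dat)
fit.pois <- glm(y ~ x, data = dat, family = poisson)

# AIC = -2 * log-likelihood + 2 * (number of estimated parameters)
aic.manual <- function(model) {
  ll <- logLik(model)
  -2 * as.numeric(ll) + 2 * attr(ll, "df")
}

c(negbin = aic.manual(fit.nb), poisson = aic.manual(fit.pois))
```

Note that the negative binomial model counts one more parameter than the Poisson model because the dispersion parameter is also estimated.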

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. The main statistic of interest is the Wald statistic ($z$) for the slope parameter.

    summary(dat.nb.glm)

    Call:
    glm.nb(formula = y ~ x, data = dat.nb, init.theta = 2.359878187, 
        link = log)

    Deviance Residuals: 
        Min       1Q   Median       3Q      Max  
    -2.7874  -0.8940  -0.3172   0.4420   1.3660  

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)   
    (Intercept)  0.74543    0.42534   1.753  0.07968 . 
    x            0.09494    0.03441   2.759  0.00579 **
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for Negative Binomial(2.3599) family taken to be 1)

        Null deviance: 30.443  on 19  degrees of freedom
    Residual deviance: 21.806  on 18  degrees of freedom
    AIC: 115.85

    Number of Fisher Scoring iterations: 1

                  Theta:  2.36 
              Std. Err.:  1.10 

     2 x log-likelihood:  -109.849

Conclusions: We would reject the null hypothesis (p<0.05). An increase in $x$ is associated with a significant linear increase (positive slope) in $\log y$ abundance. Every 1 unit increase in $x$ is associated with a 0.095 unit increase in $\log y$ abundance. We usually express this in terms of $y$ rather than $\log y$, so every 1 unit increase in $x$ is associated with a ($e^{0.095}=1.10$) 1.10-fold (approximately 10%) increase in $y$ abundance.

P-values are widely acknowledged as a rather flawed means of inference testing (as is hypothesis testing in general). An alternate way of exploring the characteristics of parameter estimates is to calculate their confidence intervals. Confidence intervals that do not overlap with the null hypothesis parameter value (e.g. effect of 0) are an indication of a significant effect. Recall however, that in frequentist statistics the confidence intervals are interpreted as the range of values that are likely to contain the true parameter value on 95 out of 100 (95%) repeated samples.

    library(gmodels)
    ci(dat.nb.glm)

                  Estimate    CI lower CI upper Std. Error     p-value
    (Intercept) 0.74542589 -0.14818316 1.639035 0.42534137 0.079681751
    x           0.09493852  0.02265303 0.167224 0.03440655 0.005792266

Conclusions: A 1 unit increase in $x$ is associated with a ($e^{0.095}=1.10$) 1.10-fold (95% CI: 1.02, 1.18) increase in $y$ abundance.
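Because the model uses a log link, the slope and its confidence limits are on the log scale and need to be exponentiated before they can be read as multiplicative changes in $y$. The short sketch below does this for the slope values printed by ci(); the object names are introduced here for illustration only.

```r
# Estimates for the slope (x) copied from the ci() output
slope <- c(estimate = 0.09493852, lower = 0.02265303, upper = 0.167224)

# Exponentiate to obtain the multiplicative change in y per unit of x
rate.ratio <- exp(slope)
round(rate.ratio, 2)

# Expressed as a percentage change per unit of x
round(100 * (rate.ratio - 1), 1)
```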

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$quasi R^2 = 1-\left(\frac{deviance}{null~deviance}\right)$$
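As a worked instance of this formula, plugging in the residual deviance (21.806) and null deviance (30.443) reported by summary(dat.nb.glm) gives:

$$quasi R^2 = 1-\left(\frac{21.806}{30.443}\right) \approx 1 - 0.716 = 0.284$$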

    1-(dat.nb.glm$deviance/dat.nb.glm$null.deviance)

    [1] 0.2836978

Conclusions: approximately 28.4% of the variability in abundance can be explained by its relationship to $x$. Technically, it is 28.4% of the variability in $\log y$ (the link function) that is explained by its relationship to $x$.

Finally, we will create a summary plot.

    par(mar = c(4, 5, 0, 0))
    plot(y ~ x, data = dat.nb, type = "n", ann = F, axes = F)
    points(y ~ x, data = dat.nb, pch = 16)
    xs <- seq(0, 20, l = 1000)
    ys <- predict(dat.nb.glm, newdata = data.frame(x = xs), type = "response", se = T)
    points(ys$fit ~ xs, col = "black", type = "l")
    lines(ys$fit - 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    lines(ys$fit + 1 * ys$se.fit ~ xs, col = "black", type = "l", lty = 2)
    axis(1)
    mtext("X", 1, cex = 1.5, line = 3)
    axis(2, las = 2)
    mtext("Abundance of Y", 2, cex = 1.5, line = 3)
    box(bty = "l")

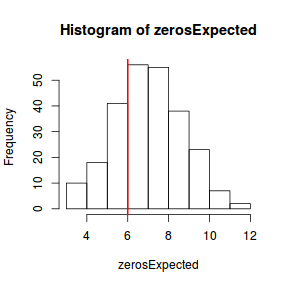

Zero-inflation Poisson (ZIP) regression

Scenario and Data

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

Random data incorporating the following trends (effect parameters):

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of $\log y$ when $x$ equals 0 (intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto the (0,$\infty$) scale by using the inverse of the log link, the exponential function $e^{linear~predictor}$

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as the means when drawing random numbers from a zero-inflated Poisson distribution in which each observation has a fixed probability of 0.4 of being an excess zero. This step adds random noise to the expected $y$ values and returns only 0's and positive integers.
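The mixture behind a zero-inflated Poisson can be sketched in base R without any extra packages: a binomial draw decides whether an observation is a structural (excess) zero, and otherwise the count comes from a Poisson. This is only an illustrative stand-in for a dedicated generator such as rZIP(); the sample size of 1000, the seed and the object names are assumptions made so that the proportions are stable.

```r
# Base-R stand-in for a zero-inflated Poisson generator (illustrative assumption)
set.seed(1)
n <- 1000
x <- runif(n, min = 1, max = 20)
lambda <- exp(0.6 + 0.1 * x)   # expected counts from the linear predictor
p.zero <- 0.4                  # probability of a structural (excess) zero

# Step 1: decide which observations are structural zeros
is.structural.zero <- rbinom(n, size = 1, prob = p.zero)
# Step 2: draw Poisson counts and zero out the structural zeros
y <- ifelse(is.structural.zero == 1, 0, rpois(n, lambda))

# Observed zeros versus zeros expected from the Poisson part alone
c(observed = mean(y == 0), poisson.only = mean(dpois(0, lambda)))
```

The gap between the two proportions is the excess of zeros that a plain Poisson model cannot accommodate.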

    set.seed(37) #34.5 #4 #10 #16 #17 #26
    # The number of samples
    n.x <- 20
    # Create x values that are uniformly distributed throughout the range of 1 to 20
    x <- sort(runif(n = n.x, min = 1, max = 20))
    mm <- model.matrix(~x)
    intercept <- 0.6
    slope <- 0.1
    # The linear predictor
    linpred <- mm %*% c(intercept, slope)
    # Predicted y values
    lambda <- exp(linpred)
    # Add some noise by drawing from a zero-inflated Poisson
    library(gamlss.dist)
    # fixed latent binomial
    y <- rZIP(n.x, lambda, 0.4)
    # latent binomial influenced by the linear predictor
    # y <- rZIP(n.x, lambda, 1-exp(linpred)/(1+exp(linpred)))
    dat.zip <- data.frame(y, x)
    data.zip <- dat.zip
    write.table(data.zip, file = '../downloads/data/data.zip.csv', quote = FALSE, row.names = FALSE, sep = ',')
    summary(glm(y~x, dat.zip, family="poisson"))

    Call:
    glm(formula = y ~ x, family = "poisson", data = dat.zip)

    Deviance Residuals: 
        Min       1Q   Median       3Q      Max  
    -2.5415  -2.3769  -0.9753   1.1736   3.6380  

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)  
    (Intercept)  0.67048    0.33018   2.031   0.0423 *
    x            0.02961    0.02663   1.112   0.2662  
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for poisson family taken to be 1)

        Null deviance: 85.469  on 19  degrees of freedom
    Residual deviance: 84.209  on 18  degrees of freedom
    AIC: 124.01

    Number of Fisher Scoring iterations: 6

    plot(glm(y~x, dat.zip, family="poisson"))

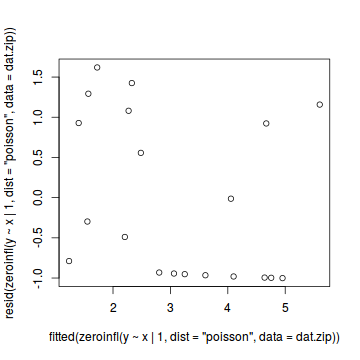

    library(pscl)
    summary(zeroinfl(y ~ x | 1, dist = "poisson", data = dat.zip))

    Call:
    zeroinfl(formula = y ~ x | 1, data = dat.zip, dist = "poisson")

    Pearson residuals:
        Min      1Q  Median      3Q     Max 
    -1.0015 -0.9556 -0.3932  0.9663  1.6195 

    Count model coefficients (poisson with log link):
                Estimate Std. Error z value Pr(>|z|)    
    (Intercept)  0.70474    0.31960   2.205 0.027449 *  
    x            0.08734    0.02532   3.449 0.000563 ***

    Zero-inflation model coefficients (binomial with logit link):
                Estimate Std. Error z value Pr(>|z|)
    (Intercept)  -0.2292     0.4563  -0.502    0.615
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 

    Number of iterations in BFGS optimization: 13 
    Log-likelihood: -36.17 on 3 Df

    plot(resid(zeroinfl(y ~ x | 1, dist = "poisson", data = dat.zip))~fitted(zeroinfl(y ~ x | 1, dist = "poisson", data = dat.zip)))
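The zero-inflation intercept in the zeroinfl() summary is on the logit scale and the count-model slope is on the log scale, so both need back-transforming before they can be compared with the values used to simulate the data (an excess-zero probability of 0.4 and a slope of 0.1). The sketch below performs the two back-transformations on the printed estimates; the object names are introduced here for illustration.

```r
# Coefficients copied from the zeroinfl() output
zero.intercept <- -0.2292   # zero-inflation intercept (logit scale)
count.slope <- 0.08734      # count-model slope (log scale)

# Inverse logit gives the estimated probability of an excess zero
p.excess.zero <- plogis(zero.intercept)
# Exponentiating the slope gives the multiplicative change in the count mean per unit of x
count.ratio <- exp(count.slope)

round(c(p.excess.zero = p.excess.zero, count.ratio = count.ratio), 3)
```

The estimated excess-zero probability comes out at roughly 0.44, reasonably close to the 0.4 used in the simulation, and the count-model slope is much closer to the simulated 0.1 than the slope from the plain Poisson fit.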

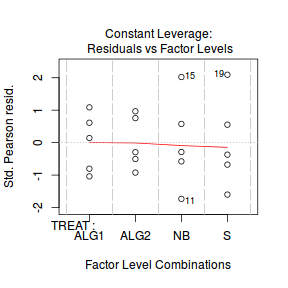

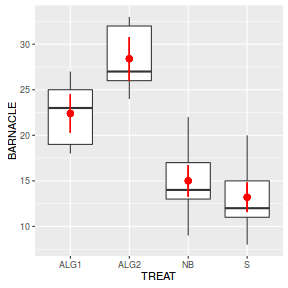

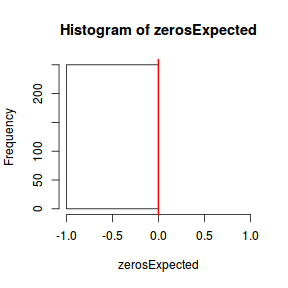

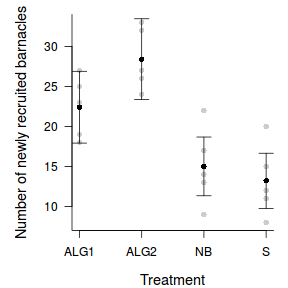

    library(gamlss)
    summary(gamlss(y~x,data=dat.zip, family=ZIP))