Tutorial 9.3b - Randomized Complete Block ANOVA (Bayesian)

24 Dec 2015

If you are completely on top of the conceptual issues pertaining to Randomized Complete Block (RCB) ANOVA, and just need to use this tutorial in order to learn about RCB ANOVA in R, you are invited to skip down to the section on RCB ANOVA in R.

Overview

You are strongly advised to review the information on the nested design in tutorial 9.3a. I am not going to duplicate the overview here.

Tutorial 9.2a (Nested ANOVA) introduced the concept of employing sub-replicates that are nested within the main treatment levels as a means of absorbing some of the unexplained variability that would otherwise arise from designs in which sampling units are selected from amongst highly heterogeneous conditions. Such (nested) designs are useful in circumstances where the levels of the main treatment (such as burnt and un-burnt sites) occur at a much larger temporal or spatial scale than the experimental/sampling units (e.g. vegetation monitoring quadrats).

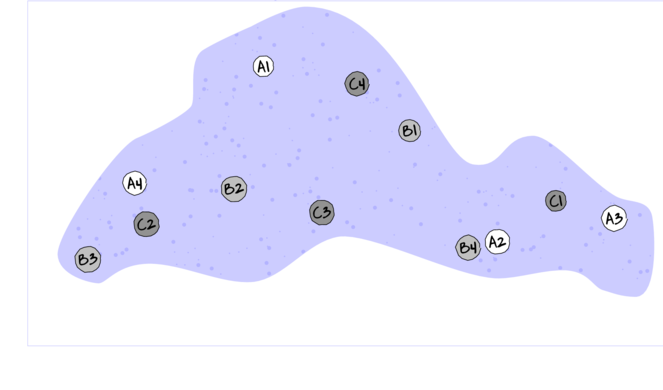

For circumstances in which the main treatments can be applied (or naturally occur) at the same scale as the sampling units (such as whether a stream rock is enclosed by a fish proof fence or not), an alternative design is available. In this design (randomized complete block design), the levels of the main treatment factor are grouped (blocked) together (in space and/or time). Therefore, whilst the conditions between the groups (referred to as 'blocks') might vary substantially, the conditions under which each of the levels of the treatment is tested within any given block are far more homogeneous (see Figure below).

If any differences between blocks (due to the heterogeneity) can account for some of the total variability between the sampling units (thereby reducing the amount of variability that the main treatment(s) failed to explain), then the main test of treatment effects will be more powerful/sensitive.

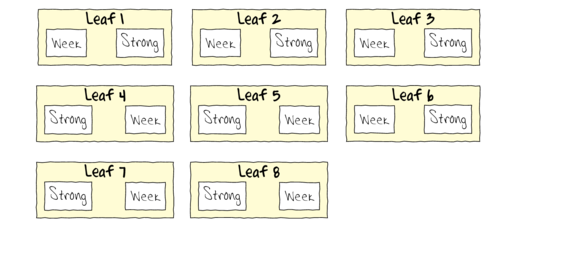

As a simple example of a randomized complete block (RCB) design, consider an investigation into the roles of different organism scales (microbial, macro invertebrate and vertebrate) on the breakdown of leaf debris packs within streams. An experiment could consist of four treatment levels - leaf packs protected by fish-proof mesh, leaf packs protected by fine macro invertebrate exclusion mesh, leaf packs protected by dissolving antibacterial tablets, and leaf packs relatively unprotected as controls.

As an acknowledgement that there are many other unmeasured factors that could influence leaf pack breakdown (such as flow velocity, light levels, etc) and that these are likely to vary substantially throughout a stream, the treatments are to be arranged into groups or 'blocks' (each containing a single control, microbial, macro invertebrate and fish protected leaf pack). Blocks of treatment sets are then secured in locations haphazardly selected throughout a particular reach of stream. Importantly, the arrangement of treatments in each block must be randomized to prevent the introduction of some systematic bias - such as light angle, current direction etc.
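The within-block randomization described above can be sketched in base R. This is only an illustration: the number of blocks (`nBlock`), the seed and the object name `layout` are assumptions made for the example, not part of the original study description.

```r
set.seed(42)                                   # arbitrary seed, for reproducibility only
treatments <- c("control", "microbial", "macroinvertebrate", "fish")
nBlock <- 6                                    # hypothetical number of blocks

# draw one independent random ordering of the four treatments for each block
layout <- t(replicate(nBlock, sample(treatments)))
dimnames(layout) <- list(Block = paste0("B", seq_len(nBlock)),
                         Position = seq_along(treatments))
layout                                         # rows = blocks, columns = positions within a block
```

Each row holds every treatment exactly once (the design is complete), whereas the order differs haphazardly from block to block, which is what guards against a systematic position effect.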

Blocking does however come at a cost. The blocks absorb both unexplained variability as well as degrees of freedom from the residuals. Consequently, if the amount of the total unexplained variation that is absorbed by the blocks is not sufficiently large to offset the reduction in degrees of freedom (which may result either from less than expected heterogeneity, or from the blocks being established at a scale that is inappropriate to explain much of the variation), then for a given number of sampling units (leaf packs), the tests of main treatment effects will suffer power reductions.
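To make the degrees of freedom cost concrete, the following sketch compares the residual degrees of freedom of a completely randomized design with those of an RCB design using the same number of units. The helper `resid.df()` and the choice of 4 treatments in 6 blocks are hypothetical and used only for illustration.

```r
# hypothetical helper: residual degrees of freedom for a single-factor design
# with a treatment levels and b blocks (one unit per treatment per block)
resid.df <- function(a, b) {
  n <- a * b
  c(unblocked = n - a,               # completely randomized: n - a
    blocked   = (a - 1) * (b - 1))   # RCB: n - 1 - (a - 1) - (b - 1)
}

rdf <- resid.df(a = 4, b = 6)
rdf                                  # 20 without blocking, 15 with blocking

# critical F values (alpha = 0.05) for the treatment test under each design
crit <- qf(0.95, df1 = 4 - 1, df2 = rdf)
crit
```

With fewer residual degrees of freedom the critical F value is larger, so blocking only pays off when the blocks remove enough residual variation to more than compensate.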

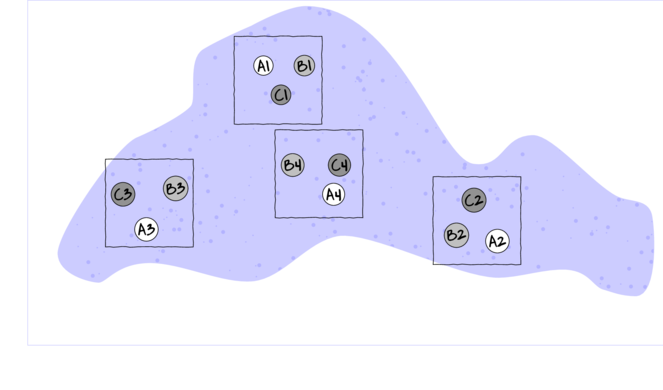

Treatments can also be applied sequentially or repeatedly at the scale of the entire block, such that at any single time, only a single treatment level is being applied (see the lower two sub-figures above). Such designs are called repeated measures. A repeated measures ANOVA is to a single factor ANOVA as a paired t-test is to an independent samples t-test.
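The analogy with the paired t-test can be checked numerically. In the sketch below (simulated data; all object names and parameter values are assumptions chosen for illustration), a design with two treatments per block is analysed both as a paired t-test and as an additive randomized block ANOVA.

```r
set.seed(1)
nBlock <- 10
block.effect <- rnorm(nBlock, 0, 12)           # large between-block noise
dat <- data.frame(Block = gl(nBlock, 2),       # blocks 1..10, two units each
                  A = gl(2, 1, 2 * nBlock, labels = c("a1", "a2")))
dat$y <- 40 + block.effect[as.integer(dat$Block)] +
  ifelse(dat$A == "a2", 10, 0) + rnorm(nrow(dat), 0, 5)

# paired t-test: differences are taken within each block
t.paired <- t.test(dat$y[dat$A == "a2"], dat$y[dat$A == "a1"], paired = TRUE)

# additive randomized block ANOVA on the same data
F.rcb <- anova(lm(y ~ Block + A, data = dat))["A", "F value"]

c(t.squared = unname(t.paired$statistic)^2, F = F.rcb)
```

The squared paired t statistic and the blocked F-ratio for the treatment coincide, since both analyses remove the between-block (between-subject) differences before testing the treatment.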

One example of a repeated measures analysis might be an investigation into the effects of five different diet drugs (four doses and a placebo) on the food intake of lab rats. Each of the rats ('subjects') is subjected to each of the five drugs (within subject effects), which are administered in a random order.

In another example, temporal recovery responses of sharks to by-catch entanglement stresses might be simulated by analyzing blood samples collected from captive sharks (subjects) every half hour for three hours following a stress inducing restraint. This repeated measures design allows the anticipated variability in stress tolerances between individual sharks to be accounted for in the analysis (so as to permit a more powerful test of the main treatments). Furthermore, by performing repeated measures on the same subjects, repeated measures designs reduce the number of subjects required for the investigation.

Essentially, this is a randomized complete block design except that the within subject (block) effect (e.g. time since stress exposure) cannot be randomized (the consequences of which are discussed in the section on Sphericity).

To suppress contamination effects resulting from the proximity of treatment sampling units within a block, units should be adequately spaced in time and space. For example, the leaf packs should not be so close to one another that the control packs are affected by the antibacterial tablets and there should be sufficient recovery time between subsequent drug administrations.

In addition, the order or arrangement of treatments within the blocks must be randomized so as to prevent both confounding as well as computational complications (Sphericity). Whilst this is relatively straightforward for the classic randomized complete block design (such as the leaf packs in streams), it is logically not possible for repeated measures designs.

Blocking factors are typically random factors (see the section on fixed versus random factors) that represent all the possible blocks that could be selected. As such, no individual block can truly be replicated. Randomized complete block and repeated measures designs can therefore also be thought of as un-replicated factorial designs in which there are two or more factors but in which the interactions between the blocks and all the within block factors are not replicated.
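The un-replicated nature of the design is easy to see by tabulating a design grid. The following is a minimal sketch with a hypothetical design of 3 treatments in 5 blocks (`design` and `cell.counts` are illustrative names).

```r
nTreat <- 3
nBlock <- 5                                     # hypothetical small design
design <- expand.grid(A = gl(nTreat, 1), Block = gl(nBlock, 1))

cell.counts <- with(design, table(Block, A))   # observations per Block x A cell
cell.counts

# residual df that would remain if the Block:A interaction were also fitted
nrow(design) - nBlock * nTreat
```

Every Block by A combination contains a single observation, so fitting the interaction would leave no residual degrees of freedom with which to test it.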

Linear models

The linear models for two and three factor randomized block designs are:

$$
\begin{align}
y_{ij}&=\mu+\beta_{i}+\alpha_j + \varepsilon_{ij} &\hspace{2em} \varepsilon_{ij} &\sim\mathcal{N}(0,\sigma^2), \hspace{1em}\sum{\beta}=0\\
y_{ijk}&=\mu+\beta_{i} + \alpha_j + \gamma_{k} + \beta\alpha_{ij} + \beta\gamma_{ik} + \alpha\gamma_{jk} + \beta\alpha\gamma_{ijk} + \varepsilon_{ijk} \hspace{2em} \text{(Model 1)}\\
y_{ijk}&=\mu+\beta_{i} + \alpha_j + \gamma_{k} + \alpha\gamma_{jk} + \varepsilon_{ijk} \hspace{2em} \text{(Model 2)}
\end{align}
$$

where $\mu$ is the overall mean, $\beta$ is the effect of the Blocking Factor B, $\alpha$ and $\gamma$ are the effects of within block Factor A and Factor C respectively, and $\varepsilon$ is the random unexplained or residual component.

Tests for the effects of blocks as well as effects within blocks assume that there are no interactions between blocks and the within block effects. That is, it is assumed that any effects are of similar nature within each of the blocks. Whilst this assumption may well hold for experiments that are able to consciously set the scale over which the blocking units are arranged, when designs utilize arbitrary or naturally occurring blocking units, the magnitude and even polarity of the main effects are likely to vary substantially between the blocks.

The preferred (non-additive or 'Model 1') approach to un-replicated factorial analysis of some bio-statisticians is to include the block by within subject effect interactions (e.g. $\beta\alpha$). Whilst these interaction effects cannot be formally tested, they can be used as the denominators in F-ratio calculations of their respective main effects tests (see the tables that follow).

Proponents argue that since these blocking interactions cannot be formally tested, there is no sound inferential basis for using these error terms separately. Alternatively, models can be fitted additively ('Model 2') whereby all the block by within subject effect interactions are pooled into a single residual term ($\varepsilon$). Although the latter approach is simpler, each of the within subject effects tests assumes that there are no interactions involving the blocks and, perhaps even more restrictively, that sphericity (see section Sphericity) holds across the entire design.

Assumptions

As with other ANOVA designs, the reliability of hypothesis tests is dependent on the residuals being:

- normally distributed. Boxplots using the appropriate scale of replication (reflecting the appropriate residuals/F-ratio denominator, see Tables above) should be used to explore normality. Scale transformations are often useful.

- equally varied. Boxplots and plots of means against variance (using the appropriate scale of replication) should be used to explore the spread of values. Residual plots should reveal no patterns. Scale transformations are often useful.

- independent of one another. Although the observations within a block may not strictly be independent, provided the treatments are applied or ordered randomly within each block or subject, within block proximity effects on the residuals should be random across all blocks and thus the residuals should still be independent of one another. Nevertheless, it is important that experimental units within blocks are adequately spaced in space and time so as to suppress contamination or carryover effects.

RCB in R (JAGS and STAN)

Simple RCB

Scenario and Data

Imagine we have designed an experiment in which we intend to measure a response ($y$) to a treatment with three levels ('a1', 'a2' and 'a3'). Unfortunately, the system that we intend to sample is spatially heterogeneous and thus will add a great deal of noise to the data that will make it difficult to detect a signal (impact of treatment).

Thus, in an attempt to constrain this variability, we decide to apply a design (RCB) in which each of the treatments is applied within each of 35 blocks dispersed randomly throughout the landscape. As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and this section can be safely skipped. The data are generated with the following properties:

- the number of treatments = 3

- the number of blocks containing treatments = 35

- the mean of the treatments = 40, 70 and 80 respectively

- the variability (standard deviation) between blocks of the same treatment = 12

- the variability (standard deviation) between treatments within blocks = 5

    library(plyr)
    set.seed(1)
    nTreat <- 3
    nBlock <- 35
    sigma <- 5
    sigma.block <- 12
    n <- nBlock*nTreat
    Block <- gl(nBlock, k=1)
    A <- gl(nTreat,k=1)
    dt <- expand.grid(A=A,Block=Block)
    #Xmat <- model.matrix(~Block + A + Block:A, data=dt)
    Xmat <- model.matrix(~-1+Block + A, data=dt)
    block.effects <- rnorm(n = nBlock, mean = 40, sd = sigma.block)
    A.effects <- c(30,40)
    all.effects <- c(block.effects,A.effects)
    lin.pred <- Xmat %*% all.effects

    # OR
    Xmat <- cbind(model.matrix(~-1+Block,data=dt),model.matrix(~-1+A,data=dt))
    ## Sum to zero block effects
    block.effects <- rnorm(n = nBlock, mean = 0, sd = sigma.block)
    A.effects <- c(40,70,80)
    all.effects <- c(block.effects,A.effects)
    lin.pred <- Xmat %*% all.effects

    ## the quadrat observations (within sites) are drawn from
    ## normal distributions with means according to the site means
    ## and standard deviations of 5
    y <- rnorm(n,lin.pred,sigma)
    data.rcb <- data.frame(y=y, expand.grid(A=A, Block=Block))
    head(data.rcb)  #print out the first six rows of the data set

             y A Block
    1 37.39761 1     1
    2 61.47033 2     1
    3 78.07370 3     1
    4 30.59803 1     2
    5 59.00035 2     2
    6 76.72575 3     2

Exploratory data analysis

Normality and Homogeneity of variance

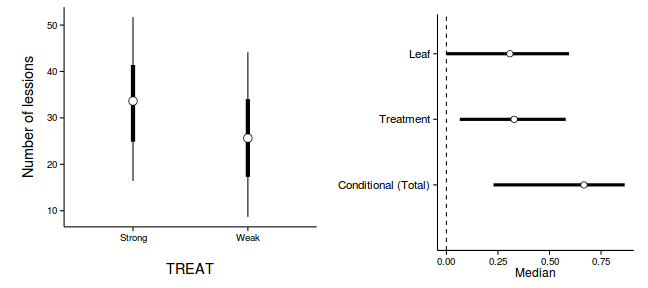

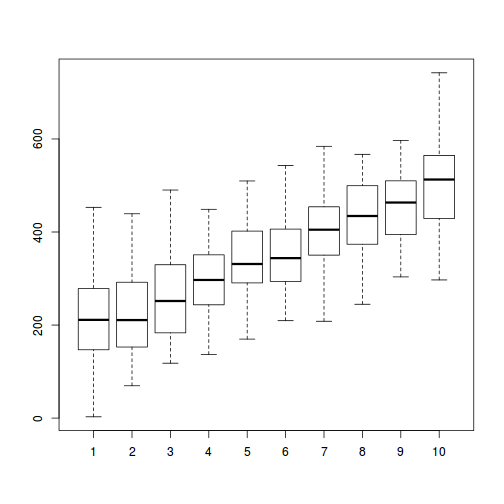

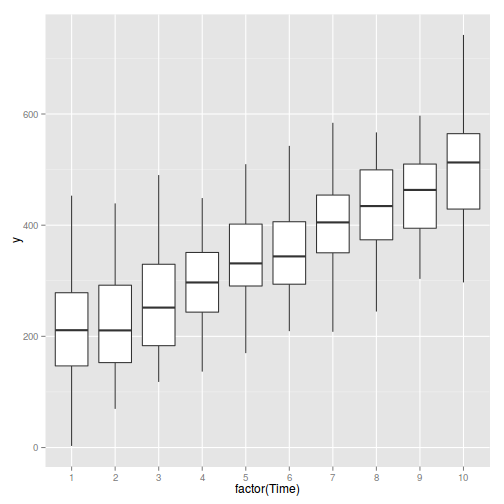

    boxplot(y~A, data.rcb)

Conclusions:

- there is no evidence that the response variable is consistently non-normal across all populations - each boxplot is approximately symmetrical

- there is no evidence that variance (as estimated by the height of the boxplots) differs between the three populations. More importantly, there is no evidence of a relationship between mean and variance - the height of boxplots does not increase with increasing position along the y-axis. Hence there is no evidence of non-homogeneity of variance.

- had there been obvious violations, they could have been addressed by transforming the scale of the response variable (to address normality etc). Note that transformations should be applied to the entire response variable (not just those populations that are skewed).
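The boxplot assessment of a mean-variance relationship can be complemented numerically. The sketch below is self-contained and therefore simulates its own stand-in data with the same design parameters as the scenario (it does not reproduce `data.rcb` exactly, and the names `dat` and `grp` are illustrative assumptions).

```r
set.seed(1)
nTreat <- 3
nBlock <- 35
dat <- expand.grid(A = gl(nTreat, 1), Block = gl(nBlock, 1))
# treatment means of 40, 70, 80; block sd of 12; residual sd of 5
dat$y <- c(40, 70, 80)[as.integer(dat$A)] +
  rnorm(nBlock, 0, 12)[as.integer(dat$Block)] + rnorm(nrow(dat), 0, 5)

# mean and variance of the response for each treatment level
grp <- data.frame(A    = levels(dat$A),
                  mean = as.vector(tapply(dat$y, dat$A, mean)),
                  var  = as.vector(tapply(dat$y, dat$A, var)))
grp
max(grp$var) / min(grp$var)    # ratio of largest to smallest group variance
```

If the variances climbed steadily with the means, a scale transformation (such as a log) would be worth considering; variances of similar size across groups support the boxplot-based conclusion.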

Block by within-Block interaction

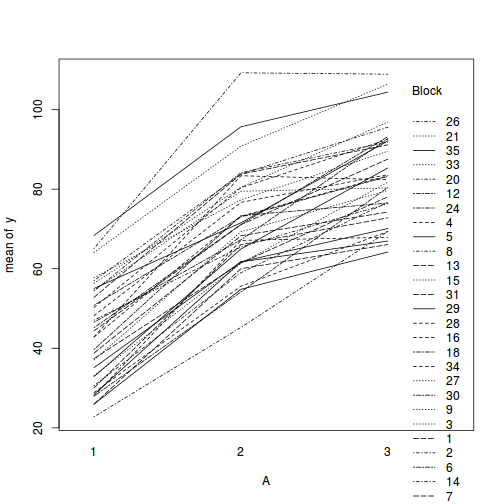

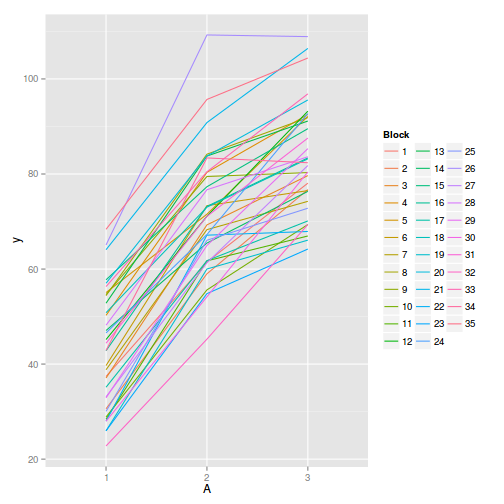

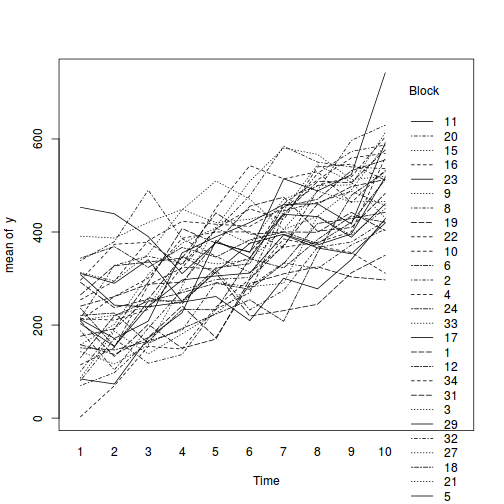

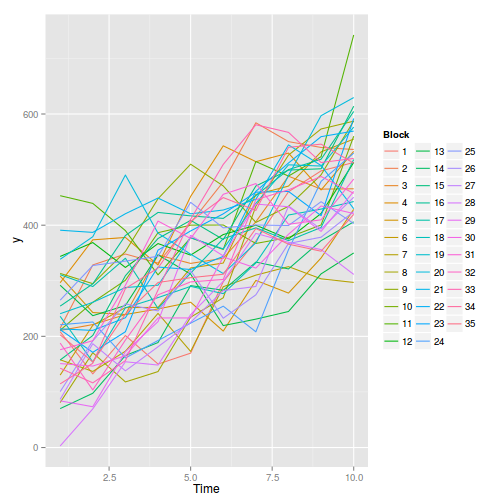

    library(car)
    with(data.rcb, interaction.plot(A,Block,y))

    #OR with ggplot
    library(ggplot2)
    ggplot(data.rcb, aes(y=y, x=A, group=Block,color=Block)) + geom_line() +
        guides(color=guide_legend(ncol=3))

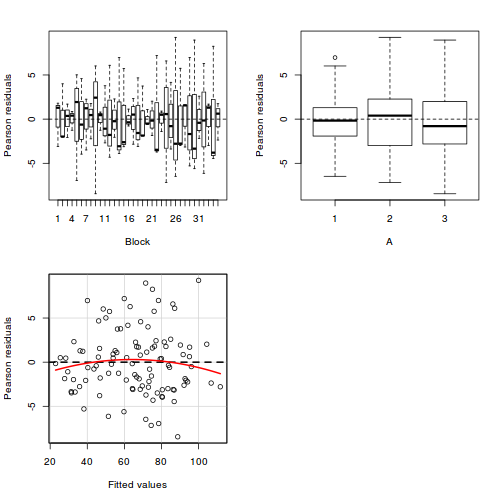

    library(car)
    residualPlots(lm(y~Block+A, data.rcb))

               Test stat Pr(>|t|)
    Block             NA       NA
    A                 NA       NA
    Tukey test    -0.885    0.376

    # the Tukey's non-additivity test by itself can be obtained via an internal function
    # within the car package
    car:::tukeyNonaddTest(lm(y~Block+A, data.rcb))

          Test     Pvalue
    -0.8854414  0.3759186

    # alternatively, there is also a Tukey's non-additivity test within the
    # asbio package
    library(asbio)
    with(data.rcb,tukey.add.test(y,A,Block))

    Tukey's one df test for additivity
    F = 0.7840065   Denom df = 67    p-value = 0.3790855

Conclusions:

- there is no visual or inferential evidence of any major interactions between Block and the within-Block effect (A). Any trends appear to be reasonably consistent between Blocks.
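For readers curious about what Tukey's one degree of freedom test does, it can be sketched in base R by adding the squared fitted values of the additive model as an extra predictor and testing that single term. The code below is a self-contained illustration on simulated stand-in data (not `data.rcb` itself), and the names `additive`, `augmented` and `fit2` are assumptions of this sketch rather than anything used by the car or asbio packages.

```r
set.seed(1)
nTreat <- 3
nBlock <- 35
dat <- expand.grid(A = gl(nTreat, 1), Block = gl(nBlock, 1))
dat$y <- c(40, 70, 80)[as.integer(dat$A)] +
  rnorm(nBlock, 0, 12)[as.integer(dat$Block)] + rnorm(nrow(dat), 0, 5)

additive <- lm(y ~ Block + A, data = dat)
dat$fit2 <- fitted(additive)^2                      # squared additive predictions
augmented <- lm(y ~ Block + A + fit2, data = dat)  # one extra df for non-additivity

tukey <- anova(additive, augmented)                 # F-test of the single extra term
tukey
```

The F-ratio has 1 numerator and (3-1)(35-1)-1 = 67 denominator degrees of freedom, matching the `Denom df = 67` reported by `tukey.add.test()`. It also appears consistent with the two outputs above, where F = 0.784 is approximately the square of the test statistic -0.885.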

Model fitting or statistical analysis

JAGS

| Full parameterization | Matrix parameterization | Hierarchical parameterization |
|---|---|---|
| $$ \begin{array}{rcl} y_{ij}&\sim&\mathcal{N}(\mu_{ij},\sigma^2)\\ \mu_{ij} &=& \beta_0 + \beta_{i} + \gamma_{j}\\ \gamma_{j}&\sim&\mathcal{N}(0,\sigma_{B}^2)\\ \beta_0, \beta_i&\sim&\mathcal{N}(0,1000000)\\ \sigma, \sigma_{B}&\sim&\mathcal{Cauchy}(0,25)\\ \end{array} $$ | $$ \begin{array}{rcl} y_{ij}&\sim&\mathcal{N}(\mu_{ij},\sigma^2)\\ \mu_{ij} &=& \beta\mathbf{X} + \gamma_{j}\\ \gamma_{j}&\sim&\mathcal{N}(0,\sigma_{B}^2)\\ \beta&\sim&\mathcal{MVN}(0,1000000)\\ \sigma, \sigma_{B}&\sim&\mathcal{Cauchy}(0,25)\\ \end{array} $$ | $$ \begin{array}{rcl} y_{ij}&\sim&\mathcal{N}(\mu_{ij},\sigma^2)\\ \mu_{ij} &=& \beta_0 + \beta_{i} + \gamma_{j}\\ \gamma_{j}&\sim&\mathcal{N}(0,\sigma_{B}^2)\\ \beta_0, \beta_i&\sim&\mathcal{N}(0, 1000000)\\ \sigma, \sigma_{B}&\sim&\mathcal{Cauchy}(0,25)\\ \end{array} $$ |

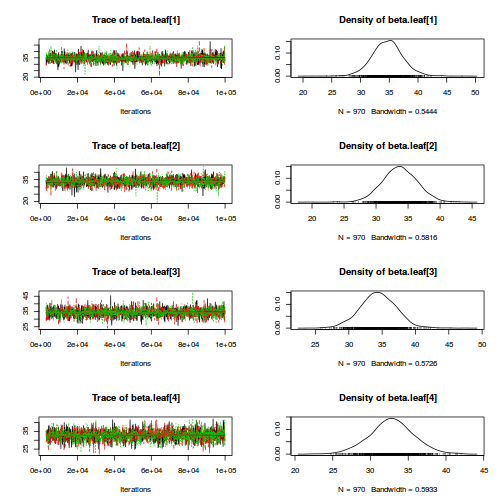

The full parameterization shows the effects parameterization in which there is an intercept ($\beta_0$) and two treatment effects ($\beta_i$, where $i$ is 1,2).
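As a small illustration of the effects parameterization, the sketch below builds the treatment-contrast design matrix for a three-level factor and shows how an intercept plus two effects maps onto the three treatment means. The values 40, 30 and 40 are taken from the data generation step above; the object names are illustrative.

```r
A <- gl(3, 1, labels = c("a1", "a2", "a3"))
X <- model.matrix(~A)          # columns: intercept, a2 - a1, a3 - a1
X

beta <- c(40, 30, 40)          # intercept (mean of a1) and the two treatment effects
drop(X %*% beta)               # implied treatment means: 40, 70 and 80
```

The first parameter is the mean of the reference level (a1) and the remaining parameters are differences from it, which is why the simulated treatment means of 40, 70 and 80 correspond to effects of 30 and 40.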

The matrix parameterization is a compressed notation. In this parameterization, there are three beta parameters: one representing the mean of treatment a1, and the other two representing the treatment effects (differences between a2 and a1 and between a3 and a1). In generating priors for each of these three beta parameters, we could loop through each and define a non-informative normal prior for each (as in the Full parameterization version). However, it turns out that it is more efficient (in terms of mixing and thus the number of necessary iterations) to define the priors from a multivariate normal distribution. This has as many means as there are parameters to estimate (3) and a 3x3 variance-covariance matrix with zeros off the diagonal and 1000000 on the diagonal. $$ \mu=\left[ \begin{array}{c} 0\\ 0\\ 0\\ \end{array} \right], \hspace{2em} \sigma^2=\left[ \begin{array}{ccc} 1000000&0&0\\ 0&1000000&0\\ 0&0&1000000\\ \end{array} \right] $$
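One practical detail worth sketching: JAGS parameterizes `dmnorm()` (like `dnorm()`) in terms of precision rather than variance, so the variance-covariance matrix described above needs to be inverted before being supplied as data. The object names `Sigma` and `Omega` below are illustrative assumptions.

```r
nBeta <- 3
a0 <- rep(0, nBeta)            # vector of prior means
Sigma <- diag(1e6, nBeta)      # prior variance-covariance matrix (variances of 1000000)
Omega <- solve(Sigma)          # precision matrix = inverse of the covariance matrix
diag(Omega)                    # 1e-06 on each diagonal element
```

A variance of 1000000 therefore corresponds to a precision of 1.0E-6, the same value used for the univariate `dnorm(0, 1.0E-6)` priors.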

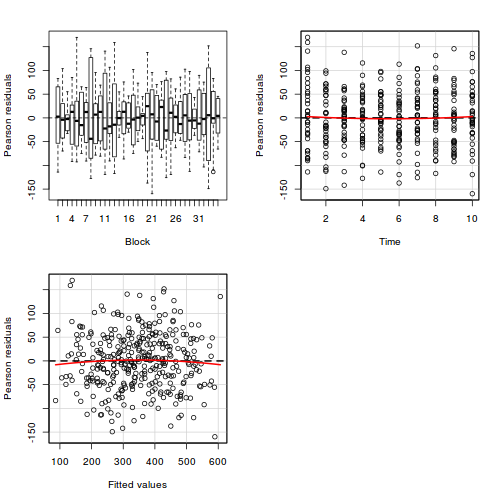

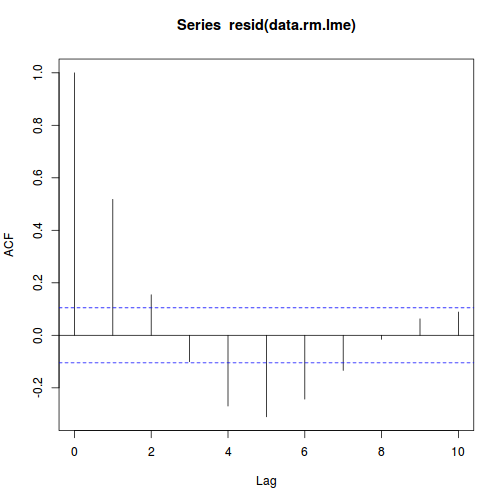

Rather than assume a specific variance-covariance structure, just like lme() we can incorporate an appropriate structure to account for different dependency/correlation structures in our data. In RCB designs, it is prudent to capture the residuals to allow checks that there are no outstanding dependency issues following model fitting.

Full effects parameterization

    modelString="
    model {
       #Likelihood
       for (i in 1:n) {
          y[i]~dnorm(mu[i],tau)
          mu[i] <- beta0 + beta[A[i]] + gamma[Block[i]]
          res[i] <- y[i]-mu[i]
       }

       #Priors
       beta0 ~ dnorm(0, 1.0E-6)
       beta[1] <- 0
       for (i in 2:nA) {
         beta[i] ~ dnorm(0, 1.0E-6) #prior
       }
       for (i in 1:nBlock) {
         gamma[i] ~ dnorm(0, tau.B) #prior
       }

       #half-Cauchy(0,25) prior on the residual standard deviation
       tau <- pow(sigma,-2)
       sigma <- z/sqrt(chSq)
       z ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
       chSq ~ dgamma(0.5, 0.5)

       #half-Cauchy(0,25) prior on the between-Block standard deviation
       tau.B <- pow(sigma.B,-2)
       sigma.B <- z.B/sqrt(chSq.B)
       z.B ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
       chSq.B ~ dgamma(0.5, 0.5)
     }
    "
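The construction `sigma <- z/sqrt(chSq)` in the model is a way of expressing a half-Cauchy prior with a scale of 25: a half-normal variate (standard deviation 25, hence precision 1/25^2 = 0.0016) divided by the square root of a chi-square variate with one degree of freedom. The following simulation is a rough check of that claim in plain R; the seed, the number of draws and the object names are arbitrary assumptions.

```r
set.seed(123)
nSim <- 100000
scale <- 25
z <- abs(rnorm(nSim, 0, scale))                  # half-normal with sd = 25
chSq <- rgamma(nSim, shape = 0.5, rate = 0.5)    # chi-square with 1 df
sigma.sim <- z / sqrt(chSq)                      # should behave like a half-Cauchy(0, 25)

# compare simulated quantiles with the theoretical half-Cauchy quantiles
probs <- c(0.25, 0.5, 0.75)
rbind(simulated   = quantile(sigma.sim, probs),
      theoretical = scale * tan(pi * probs / 2))
```

The two rows should agree closely; in particular the median of a half-Cauchy equals its scale parameter (25 here), which indicates how weakly informative this prior is relative to standard deviations of 5 and 12.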

    data.rcb.list <- with(data.rcb,
            list(y=y,
                 Block=as.numeric(Block),
                 A=as.numeric(A),
                 n=nrow(data.rcb),
                 nBlock=length(levels(Block)),
                 nA = length(levels(A))
                 )
    )
    params <- c("beta0","beta",'gamma',"sigma","sigma.B","res")
    burnInSteps = 3000
    nChains = 3
    numSavedSteps = 3000
    thinSteps = 10
    nIter = burnInSteps+ceiling((numSavedSteps * thinSteps)/nChains)

    library(R2jags)
    rnorm(1)

    [1] -1.523615

    jags.effects.f.time <- system.time(
    data.rcb.r2jags.f <- jags(data=data.rcb.list,
              inits=NULL,
              parameters.to.save=params,
              model.file=textConnection(modelString),
              n.chains=3,
              n.iter=nIter,
              n.burnin=burnInSteps,
              n.thin=thinSteps
              )
    )

    Compiling model graph
       Resolving undeclared variables
       Allocating nodes
       Graph Size: 583

    Initializing model

    jags.effects.f.time

       user  system elapsed
      9.997   0.056  10.096
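The arithmetic behind the chain settings can be checked directly. This short sketch simply re-evaluates the expressions used above to show how the number of iterations per chain and the total number of retained samples arise.

```r
burnInSteps <- 3000
nChains <- 3
numSavedSteps <- 3000
thinSteps <- 10

# iterations per chain: burn-in plus enough thinned draws to reach numSavedSteps
nIter <- burnInSteps + ceiling((numSavedSteps * thinSteps) / nChains)
nIter                                             # 13000 iterations per chain

savedPerChain <- (nIter - burnInSteps) / thinSteps
savedPerChain * nChains                           # 3000 samples retained in total
```

These values are what the model summary reports: 3 chains of 13000 iterations each, the first 3000 discarded, a thinning rate of 10 and 3000 saved simulations.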

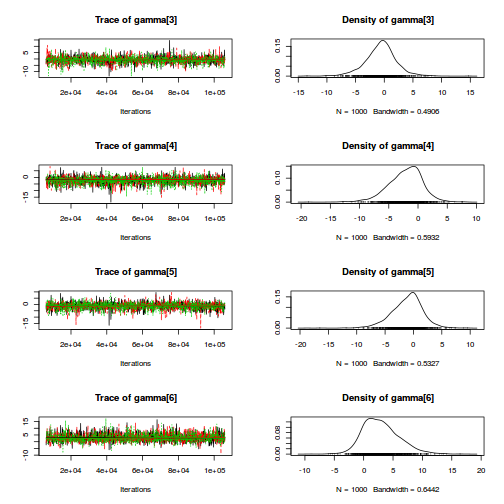

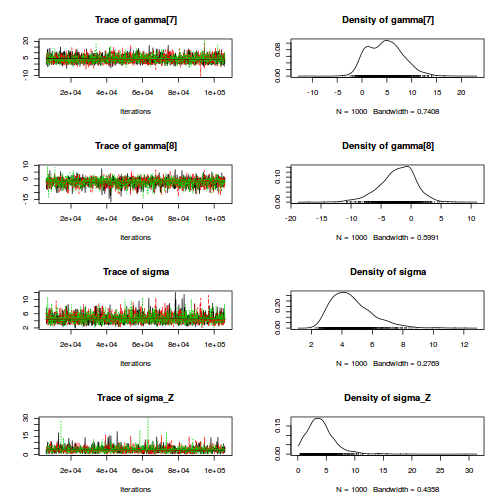

print(data.rcb.r2jags.f)

Inference for Bugs model at "5", fit using jags,

3 chains, each with 13000 iterations (first 3000 discarded), n.thin = 10

n.sims = 3000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta[1] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

beta[2] 28.469 1.126 26.153 27.751 28.488 29.220 30.719 1.002 1000

beta[3] 40.184 1.142 37.899 39.422 40.197 40.955 42.340 1.001 2400

beta0 43.037 2.081 38.975 41.670 42.995 44.422 47.240 1.001 2100

gamma[1] -6.545 3.277 -13.077 -8.749 -6.447 -4.305 -0.256 1.001 3000

gamma[2] -9.939 3.295 -16.422 -12.129 -9.923 -7.758 -3.491 1.001 3000

gamma[3] -3.657 3.200 -10.086 -5.802 -3.580 -1.443 2.429 1.001 3000

gamma[4] 8.098 3.218 1.748 5.947 8.174 10.221 14.243 1.001 3000

gamma[5] 6.653 3.248 0.236 4.426 6.749 8.818 12.743 1.001 3000

gamma[6] -2.505 3.247 -8.804 -4.673 -2.526 -0.333 3.916 1.002 1400

gamma[7] -5.171 3.227 -11.428 -7.280 -5.194 -3.033 1.165 1.001 3000

gamma[8] 10.231 3.286 3.708 8.154 10.241 12.387 16.709 1.001 3000

gamma[9] 5.248 3.181 -0.990 3.159 5.240 7.377 11.443 1.001 2700

gamma[10] -13.826 3.276 -20.135 -15.990 -13.849 -11.565 -7.473 1.001 3000

gamma[11] -12.819 3.222 -19.059 -15.027 -12.928 -10.603 -6.459 1.001 3000

gamma[12] 3.656 3.234 -2.720 1.541 3.714 5.793 9.919 1.001 3000

gamma[13] 9.356 3.252 2.986 7.195 9.343 11.525 15.670 1.001 2700

gamma[14] -2.748 3.261 -9.239 -4.968 -2.729 -0.488 3.448 1.001 3000

gamma[15] 8.406 3.250 1.930 6.245 8.416 10.576 14.647 1.001 3000

gamma[16] 0.543 3.287 -5.740 -1.666 0.548 2.720 7.044 1.002 1600

gamma[17] -9.671 3.297 -16.274 -11.779 -9.696 -7.482 -3.120 1.002 1700

gamma[18] 2.926 3.222 -3.310 0.778 2.863 5.093 9.110 1.001 3000

gamma[19] -14.431 3.318 -21.075 -16.618 -14.419 -12.220 -7.883 1.001 3000

gamma[20] 12.128 3.277 5.838 9.866 12.188 14.364 18.513 1.004 3000

gamma[21] 19.986 3.212 13.484 17.855 20.016 22.086 26.175 1.001 3000

gamma[22] -10.910 3.256 -17.414 -13.042 -10.904 -8.742 -4.613 1.003 680

gamma[23] -16.639 3.281 -22.930 -18.849 -16.679 -14.453 -10.149 1.002 1800

gamma[24] 2.792 3.222 -3.478 0.682 2.788 4.937 9.149 1.002 1800

gamma[25] -9.145 3.224 -15.343 -11.347 -9.171 -6.980 -2.877 1.001 3000

gamma[26] 26.933 3.213 20.648 24.800 26.915 29.148 33.162 1.001 3000

gamma[27] -6.852 3.194 -12.844 -9.012 -6.910 -4.652 -0.689 1.002 1200

gamma[28] 3.393 3.236 -3.175 1.312 3.323 5.569 9.801 1.002 1700

gamma[29] -4.603 3.256 -11.078 -6.800 -4.532 -2.391 1.601 1.001 3000

gamma[30] -11.052 3.274 -17.184 -13.362 -11.095 -8.746 -4.506 1.001 2100

gamma[31] 1.626 3.211 -4.607 -0.526 1.700 3.682 7.894 1.002 1900

gamma[32] -18.959 3.266 -25.345 -21.118 -18.922 -16.788 -12.651 1.001 3000

gamma[33] 11.276 3.220 4.864 9.069 11.224 13.512 17.440 1.001 3000

gamma[34] 3.350 3.214 -2.866 1.181 3.302 5.428 9.763 1.001 2200

gamma[35] 22.236 3.288 15.714 20.028 22.275 24.431 28.651 1.002 1400

res[1] 0.906 2.722 -4.421 -0.874 0.883 2.698 6.305 1.001 3000

res[2] -3.490 2.710 -8.813 -5.343 -3.513 -1.652 1.824 1.001 3000

res[3] 1.398 2.718 -3.720 -0.402 1.363 3.185 7.003 1.001 3000

res[4] -2.500 2.772 -8.090 -4.294 -2.464 -0.627 2.822 1.001 3000

res[5] -2.566 2.781 -8.090 -4.398 -2.537 -0.753 2.800 1.001 3000

res[6] 3.444 2.752 -2.045 1.664 3.453 5.220 8.840 1.001 3000

res[7] -2.308 2.747 -7.733 -4.123 -2.393 -0.395 2.968 1.001 2300

res[8] 1.445 2.728 -3.863 -0.395 1.398 3.327 6.838 1.001 3000

res[9] 0.096 2.731 -5.327 -1.743 0.082 1.988 5.416 1.001 3000

res[10] -0.882 2.736 -6.231 -2.713 -0.877 0.940 4.363 1.001 3000

res[11] 0.754 2.744 -4.604 -1.004 0.760 2.521 6.107 1.001 3000

res[12] 1.206 2.730 -4.164 -0.593 1.207 3.023 6.522 1.001 3000

res[13] 5.359 2.695 0.038 3.542 5.321 7.107 10.648 1.002 1800

res[14] -6.618 2.681 -11.749 -8.360 -6.666 -4.816 -1.213 1.001 3000

res[15] 2.254 2.693 -2.929 0.440 2.214 4.034 7.574 1.001 3000

res[16] -0.841 2.707 -6.181 -2.626 -0.870 0.938 4.364 1.004 540

res[17] 4.340 2.762 -1.007 2.517 4.286 6.136 9.900 1.002 1000

res[18] -4.211 2.726 -9.462 -6.025 -4.200 -2.389 1.039 1.003 780

res[19] 0.944 2.696 -4.547 -0.786 0.993 2.725 6.147 1.001 3000

res[20] 1.961 2.695 -3.592 0.232 2.032 3.736 7.243 1.001 3000

res[21] -3.803 2.703 -9.307 -5.520 -3.795 -2.018 1.359 1.001 3000

res[22] 1.135 2.745 -4.483 -0.677 1.193 3.017 6.519 1.001 2600

res[23] 2.429 2.740 -3.107 0.657 2.442 4.279 7.780 1.001 3000

res[24] -1.588 2.730 -6.896 -3.360 -1.551 0.245 3.776 1.001 3000

res[25] 6.330 2.696 1.076 4.448 6.339 8.154 11.768 1.001 3000

res[26] 2.719 2.695 -2.601 0.901 2.715 4.540 8.096 1.001 3000

res[27] -8.172 2.704 -13.495 -9.958 -8.193 -6.360 -2.940 1.001 3000

res[28] -0.343 2.765 -5.837 -2.189 -0.298 1.453 5.075 1.002 1300

res[29] -2.068 2.760 -7.584 -3.885 -1.988 -0.220 3.364 1.001 3000

res[30] -0.028 2.751 -5.527 -1.872 -0.004 1.821 5.361 1.001 2400

res[31] -1.809 2.735 -7.302 -3.599 -1.796 0.011 3.367 1.002 1300

res[32] 3.034 2.719 -2.556 1.310 3.069 4.915 8.268 1.001 3000

res[33] -3.446 2.727 -9.016 -5.177 -3.400 -1.683 1.761 1.001 2600

res[34] -1.527 2.724 -7.069 -3.317 -1.525 0.289 3.849 1.001 3000

res[35] -4.059 2.701 -9.400 -5.833 -4.075 -2.242 1.201 1.001 3000

res[36] 6.335 2.717 0.896 4.564 6.361 8.154 11.681 1.001 3000

res[37] 0.413 2.690 -4.816 -1.409 0.447 2.232 5.717 1.001 3000

res[38] 2.911 2.711 -2.391 1.087 2.910 4.708 8.236 1.002 3000

res[39] -1.434 2.709 -6.944 -3.272 -1.466 0.460 3.779 1.001 3000

res[40] 6.774 2.711 1.664 4.888 6.706 8.625 12.169 1.001 3000

res[41] -3.285 2.705 -8.297 -5.213 -3.312 -1.492 2.117 1.001 3000

res[42] -4.130 2.703 -9.197 -6.032 -4.196 -2.288 1.163 1.001 3000

res[43] 6.292 2.704 1.020 4.478 6.287 8.059 11.568 1.002 1500

res[44] -2.591 2.682 -7.877 -4.418 -2.563 -0.833 2.610 1.001 3000

res[45] -2.090 2.670 -7.294 -3.906 -2.060 -0.348 3.112 1.001 2300

res[46] -0.766 2.756 -6.077 -2.633 -0.773 1.078 4.543 1.002 1300

res[47] 1.129 2.772 -4.371 -0.676 1.107 2.901 6.573 1.002 1600

res[48] -0.382 2.761 -5.747 -2.237 -0.366 1.429 5.037 1.002 1500

res[49] 1.761 2.748 -3.654 -0.114 1.792 3.620 7.126 1.002 1400

res[50] -0.065 2.759 -5.450 -1.891 -0.089 1.808 5.350 1.002 1100

res[51] -3.424 2.746 -8.714 -5.276 -3.342 -1.578 1.927 1.002 1300

res[52] 4.845 2.700 -0.535 3.015 4.890 6.622 10.024 1.001 3000

res[53] -1.411 2.689 -6.681 -3.247 -1.407 0.467 3.718 1.001 3000

res[54] -2.952 2.686 -8.183 -4.759 -2.886 -1.168 2.234 1.001 3000

res[55] -2.659 2.751 -8.104 -4.518 -2.571 -0.821 2.757 1.001 3000

res[56] 2.936 2.755 -2.469 1.120 2.946 4.782 8.360 1.001 3000

res[57] -2.710 2.738 -8.279 -4.528 -2.659 -0.893 2.589 1.001 3000

res[58] 1.844 2.766 -3.497 0.050 1.823 3.664 7.223 1.001 3000

res[59] 0.155 2.790 -5.201 -1.718 0.118 1.970 5.796 1.001 3000

res[60] 0.226 2.754 -5.017 -1.690 0.237 2.080 5.671 1.001 3000

res[61] 1.043 2.731 -4.118 -0.805 1.014 2.869 6.475 1.001 2200

res[62] -0.672 2.717 -5.930 -2.542 -0.690 1.175 4.672 1.001 3000

res[63] 3.215 2.712 -1.901 1.373 3.187 4.994 8.558 1.001 3000

res[64] -4.125 2.681 -9.346 -5.880 -4.184 -2.306 1.166 1.002 1100

res[65] 6.530 2.707 1.270 4.686 6.502 8.365 11.787 1.004 560

res[66] -4.400 2.692 -9.636 -6.180 -4.409 -2.565 0.745 1.003 690

res[67] -0.432 2.719 -5.912 -2.194 -0.461 1.361 4.916 1.001 3000

res[68] -0.037 2.754 -5.676 -1.816 -0.011 1.792 5.376 1.002 1900

res[69] -2.372 2.731 -8.020 -4.098 -2.428 -0.523 2.843 1.001 2600

res[70] 0.723 2.715 -4.664 -1.099 0.707 2.501 6.099 1.003 830

res[71] -7.033 2.728 -12.237 -8.859 -7.074 -5.268 -1.462 1.002 1500

res[72] 6.706 2.697 1.459 4.915 6.671 8.521 12.203 1.002 1200

res[73] -3.838 2.731 -9.182 -5.619 -3.848 -2.028 1.580 1.001 3000

res[74] 3.701 2.720 -1.666 1.841 3.689 5.516 9.142 1.001 3000

res[75] -1.277 2.694 -6.652 -3.189 -1.300 0.554 4.038 1.001 3000

res[76] -4.904 2.739 -10.180 -6.773 -5.040 -3.003 0.637 1.001 2700

res[77] 10.817 2.739 5.596 8.895 10.702 12.682 16.279 1.001 3000

res[78] -1.247 2.740 -6.351 -3.123 -1.378 0.641 4.242 1.001 3000

res[79] -3.087 2.718 -8.356 -4.926 -3.133 -1.277 2.291 1.003 950

res[80] -3.328 2.709 -8.700 -5.154 -3.341 -1.532 1.944 1.002 1100

res[81] 5.411 2.735 -0.102 3.656 5.331 7.204 10.614 1.002 1000

res[82] 1.754 2.731 -3.572 -0.076 1.729 3.506 7.222 1.002 1400

res[83] 1.787 2.761 -3.617 0.001 1.804 3.662 7.121 1.002 1200

res[84] -2.984 2.742 -8.260 -4.829 -2.992 -1.193 2.432 1.002 1400

res[85] -5.535 2.729 -10.735 -7.426 -5.494 -3.718 -0.253 1.001 3000

res[86] -1.943 2.729 -7.217 -3.797 -1.940 -0.086 3.384 1.001 3000

res[87] 6.718 2.719 1.510 4.918 6.678 8.506 12.149 1.001 3000

res[88] -4.010 2.699 -9.338 -5.803 -4.018 -2.163 1.241 1.003 890

res[89] -6.295 2.727 -11.728 -8.105 -6.278 -4.386 -0.958 1.002 1700

res[90] 8.258 2.723 2.866 6.453 8.232 10.145 13.584 1.002 1300

res[91] -0.272 2.643 -5.598 -2.014 -0.311 1.493 4.848 1.001 3000

res[92] -2.059 2.638 -7.269 -3.796 -2.156 -0.282 3.194 1.002 2000

res[93] 2.711 2.667 -2.466 0.991 2.653 4.461 7.965 1.001 3000

res[94] -1.305 2.781 -6.717 -3.187 -1.250 0.519 4.101 1.001 2600

res[95] -7.302 2.755 -12.539 -9.135 -7.397 -5.428 -1.979 1.001 3000

res[96] 5.109 2.778 -0.259 3.251 5.119 6.951 10.524 1.001 3000

res[97] 1.998 2.708 -3.334 0.141 2.025 3.817 7.305 1.002 1400

res[98] -2.318 2.765 -7.704 -4.198 -2.341 -0.418 2.966 1.001 3000

res[99] 2.367 2.704 -2.953 0.584 2.401 4.164 7.680 1.001 3000

res[100] -3.510 2.681 -8.765 -5.295 -3.527 -1.747 1.878 1.004 560

res[101] 8.523 2.700 3.195 6.714 8.518 10.366 13.790 1.002 1200

res[102] -4.203 2.729 -9.719 -5.965 -4.206 -2.422 1.158 1.003 920

res[103] 3.084 2.756 -2.397 1.232 3.070 4.940 8.509 1.001 3000

res[104] 1.944 2.773 -3.604 0.122 1.974 3.758 7.409 1.002 1400

res[105] -1.056 2.807 -6.634 -2.988 -1.048 0.729 4.291 1.002 2000

sigma 4.692 0.414 3.993 4.403 4.657 4.951 5.599 1.002 1400

sigma.B 11.583 1.513 9.043 10.493 11.427 12.520 14.839 1.001 2700

deviance 619.973 11.360 600.077 611.961 618.909 627.051 644.698 1.002 1800

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 64.5 and DIC = 684.5

DIC is an estimate of expected predictive error (lower deviance is better).
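The DIC figures in the summary can be reproduced from the deviance row using the stated rule (pD = var(deviance)/2). The numbers below are copied from the printed summary; only the object names are introduced for this sketch.

```r
deviance.mean <- 619.973       # mu.vect for deviance in the summary above
deviance.sd   <- 11.360        # sd.vect for deviance in the summary above

pD  <- deviance.sd^2 / 2       # effective number of parameters
DIC <- deviance.mean + pD      # mean deviance penalized by pD
round(c(pD = pD, DIC = DIC), 1)   # pD = 64.5, DIC = 684.5
```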

data.rcb.mcmc.list.f <- as.mcmc(data.rcb.r2jags.f)

[1] "beta0" "beta[1]" "beta[2]" "beta[3]" "deviance" "gamma[1]" "gamma[10]" "gamma[11]" "gamma[12]" "gamma[13]" "gamma[14]" "gamma[15]" "gamma[16]" "gamma[17]" "gamma[18]" "gamma[19]" [17] "gamma[2]" "gamma[20]" "gamma[21]" "gamma[22]" "gamma[23]" "gamma[24]" "gamma[25]" "gamma[26]" "gamma[27]" "gamma[28]" "gamma[29]" "gamma[3]" "gamma[30]" "gamma[31]" "gamma[32]" "gamma[33]" [33] "gamma[34]" "gamma[35]" "gamma[4]" "gamma[5]" "gamma[6]" "gamma[7]" "gamma[8]" "gamma[9]" "res[1]" "res[10]" "res[100]" "res[101]" "res[102]" "res[103]" "res[104]" "res[105]" [49] "res[11]" "res[12]" "res[13]" "res[14]" "res[15]" "res[16]" "res[17]" "res[18]" "res[19]" "res[2]" "res[20]" "res[21]" "res[22]" "res[23]" "res[24]" "res[25]" [65] "res[26]" "res[27]" "res[28]" "res[29]" "res[3]" "res[30]" "res[31]" "res[32]" "res[33]" "res[34]" "res[35]" "res[36]" "res[37]" "res[38]" "res[39]" "res[4]" [81] "res[40]" "res[41]" "res[42]" "res[43]" "res[44]" "res[45]" "res[46]" "res[47]" "res[48]" "res[49]" "res[5]" "res[50]" "res[51]" "res[52]" "res[53]" "res[54]" [97] "res[55]" "res[56]" "res[57]" "res[58]" "res[59]" "res[6]" "res[60]" "res[61]" "res[62]" "res[63]" "res[64]" "res[65]" "res[66]" "res[67]" "res[68]" "res[69]" [113] "res[7]" "res[70]" "res[71]" "res[72]" "res[73]" "res[74]" "res[75]" "res[76]" "res[77]" "res[78]" "res[79]" "res[8]" "res[80]" "res[81]" "res[82]" "res[83]" [129] "res[84]" "res[85]" "res[86]" "res[87]" "res[88]" "res[89]" "res[9]" "res[90]" "res[91]" "res[92]" "res[93]" "res[94]" "res[95]" "res[96]" "res[97]" "res[98]" [145] "res[99]" "sigma" "sigma.B"


Matrix parameterization

    modelString="
    model {
       #Likelihood
       for (i in 1:n) {
          y[i]~dnorm(mu[i],tau)
          mu[i] <- inprod(beta[],X[i,]) + gamma[Block[i]]
          res[i] <- y[i]-mu[i]
       }

       #Priors
       beta ~ dmnorm(a0,A0)
       for (i in 1:nBlock) {
         gamma[i] ~ dnorm(0, tau.B) #prior
       }

       #half-Cauchy(0,25) prior on the residual standard deviation
       tau <- pow(sigma,-2)
       sigma <- z/sqrt(chSq)
       z ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
       chSq ~ dgamma(0.5, 0.5)

       #half-Cauchy(0,25) prior on the between-Block standard deviation
       tau.B <- pow(sigma.B,-2)
       sigma.B <- z.B/sqrt(chSq.B)
       z.B ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
       chSq.B ~ dgamma(0.5, 0.5)
     }
    "

    A.Xmat <- model.matrix(~A,data.rcb)
    data.rcb.list <- with(data.rcb,
            list(y=y,
                 Block=as.numeric(Block),
                 X=A.Xmat,
                 n=nrow(data.rcb),
                 nBlock=length(levels(Block)),
                 nA = ncol(A.Xmat),
                 a0=rep(0,3),          #prior means
                 A0=diag(1.0E-6,3)     #prior precision matrix (variances of 1000000)
                 )
    )
    params <- c("beta",'gamma',"sigma","sigma.B","res")
    adaptSteps = 1000
    burnInSteps = 3000
    nChains = 3
    numSavedSteps = 3000
    thinSteps = 10
    nIter = burnInSteps+ceiling((numSavedSteps * thinSteps)/nChains)

    library(R2jags)
    rnorm(1)

    [1] 1.174783

    jags.effects.m.time <- system.time(
    data.rcb.r2jags.m <- jags(data=data.rcb.list,
              inits=NULL,
              parameters.to.save=params,
              model.file=textConnection(modelString),
              n.chains=3,
              n.iter=nIter,
              n.burnin=burnInSteps,
              n.thin=thinSteps
              )
    )

    Compiling model graph
       Resolving undeclared variables
       Allocating nodes
       Graph Size: 910

    Initializing model

    jags.effects.m.time

       user  system elapsed
      9.888   0.060   9.989

print(data.rcb.r2jags.m)

Inference for Bugs model at "5", fit using jags,

3 chains, each with 13000 iterations (first 3000 discarded), n.thin = 10

n.sims = 3000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta[1] 43.004 2.154 38.742 41.589 43.024 44.450 47.311 1.002 1200

beta[2] 28.490 1.128 26.296 27.745 28.468 29.232 30.687 1.001 3000

beta[3] 40.174 1.119 37.894 39.445 40.217 40.945 42.305 1.001 3000

gamma[1] -6.523 3.316 -13.224 -8.720 -6.491 -4.331 -0.002 1.002 3000

gamma[2] -9.928 3.262 -16.347 -12.141 -9.928 -7.750 -3.371 1.002 1100

gamma[3] -3.790 3.261 -10.171 -5.930 -3.819 -1.648 2.568 1.001 3000

gamma[4] 8.056 3.294 1.682 5.791 8.029 10.306 14.360 1.001 3000

gamma[5] 6.700 3.285 0.285 4.408 6.772 8.851 13.058 1.001 3000

gamma[6] -2.556 3.281 -9.064 -4.748 -2.501 -0.465 4.022 1.002 1100

gamma[7] -5.123 3.245 -11.542 -7.238 -5.119 -3.038 1.359 1.002 1400

gamma[8] 10.357 3.230 4.101 8.183 10.293 12.512 16.746 1.001 3000

gamma[9] 5.189 3.293 -1.058 2.988 5.164 7.294 11.634 1.001 3000

gamma[10] -13.734 3.234 -19.824 -15.957 -13.783 -11.585 -7.237 1.001 3000

gamma[11] -12.787 3.274 -19.356 -15.003 -12.764 -10.485 -6.646 1.001 3000

gamma[12] 3.699 3.334 -3.009 1.446 3.721 6.013 10.243 1.002 1800

gamma[13] 9.537 3.285 3.082 7.277 9.515 11.773 16.179 1.004 700

gamma[14] -2.810 3.270 -9.311 -4.922 -2.875 -0.661 3.646 1.001 3000

gamma[15] 8.485 3.284 2.103 6.273 8.488 10.717 14.932 1.001 3000

gamma[16] 0.504 3.244 -5.816 -1.783 0.427 2.753 6.886 1.002 1700

gamma[17] -9.719 3.298 -15.908 -11.982 -9.843 -7.419 -3.233 1.002 3000

gamma[18] 2.954 3.271 -3.455 0.784 2.929 5.090 9.405 1.001 3000

gamma[19] -14.297 3.222 -20.502 -16.454 -14.387 -12.157 -7.738 1.002 1600

gamma[20] 12.195 3.241 5.871 10.051 12.263 14.406 18.318 1.001 3000

gamma[21] 20.036 3.334 13.391 17.852 20.066 22.230 26.832 1.002 3000

gamma[22] -10.911 3.278 -17.106 -13.208 -10.912 -8.698 -4.470 1.001 3000

gamma[23] -16.691 3.292 -23.152 -18.860 -16.741 -14.495 -10.125 1.002 3000

gamma[24] 2.696 3.284 -3.611 0.453 2.633 4.875 9.190 1.002 1100

gamma[25] -9.063 3.257 -15.351 -11.338 -9.052 -6.797 -2.875 1.001 3000

gamma[26] 26.953 3.339 20.622 24.648 26.899 29.207 33.513 1.002 1700

gamma[27] -6.736 3.322 -13.246 -8.906 -6.761 -4.509 -0.271 1.001 3000

gamma[28] 3.366 3.222 -3.119 1.210 3.379 5.481 9.746 1.001 3000

gamma[29] -4.626 3.287 -10.929 -6.910 -4.653 -2.364 1.806 1.001 3000

gamma[30] -11.090 3.275 -17.542 -13.353 -11.037 -8.833 -4.581 1.001 2200

gamma[31] 1.669 3.216 -4.783 -0.406 1.680 3.742 7.875 1.001 3000

gamma[32] -18.987 3.313 -25.508 -21.173 -18.976 -16.840 -12.251 1.003 870

gamma[33] 11.323 3.319 4.720 9.175 11.341 13.511 17.752 1.001 3000

gamma[34] 3.424 3.291 -2.896 1.251 3.394 5.548 10.024 1.002 1100

gamma[35] 22.371 3.309 15.878 20.171 22.370 24.577 28.991 1.001 3000

res[1] 0.917 2.759 -4.620 -0.940 0.880 2.729 6.493 1.001 3000

res[2] -3.500 2.772 -9.057 -5.434 -3.469 -1.693 2.092 1.001 3000

res[3] 1.418 2.760 -3.833 -0.468 1.381 3.256 6.862 1.001 3000

res[4] -2.478 2.737 -7.717 -4.410 -2.502 -0.647 2.944 1.001 3000

res[5] -2.566 2.743 -7.906 -4.443 -2.506 -0.741 2.697 1.001 2500

res[6] 3.475 2.705 -1.710 1.623 3.469 5.252 8.817 1.001 2000

res[7] -2.142 2.748 -7.596 -3.953 -2.146 -0.258 3.177 1.002 1700

res[8] 1.590 2.732 -3.843 -0.189 1.586 3.427 6.863 1.001 2300

res[9] 0.271 2.731 -5.077 -1.570 0.257 2.108 5.552 1.001 2700

res[10] -0.807 2.734 -6.303 -2.632 -0.735 1.028 4.582 1.001 3000

res[11] 0.807 2.737 -4.645 -0.994 0.806 2.604 6.060 1.001 3000

res[12] 1.290 2.734 -4.196 -0.559 1.311 3.144 6.653 1.001 3000

res[13] 5.344 2.750 -0.121 3.514 5.395 7.155 10.637 1.001 2600

res[14] -6.654 2.756 -12.035 -8.496 -6.678 -4.773 -1.251 1.001 3000

res[15] 2.249 2.726 -3.186 0.477 2.292 4.046 7.596 1.001 3000

res[16] -0.758 2.716 -6.013 -2.563 -0.808 1.092 4.477 1.001 3000

res[17] 4.403 2.726 -0.922 2.628 4.407 6.222 9.669 1.001 3000

res[18] -4.118 2.690 -9.356 -5.966 -4.124 -2.291 1.188 1.001 2800

res[19] 0.928 2.715 -4.362 -0.893 0.905 2.764 6.150 1.001 3000

res[20] 1.924 2.724 -3.450 0.100 1.859 3.752 7.179 1.001 3000

res[21] -3.809 2.713 -9.068 -5.634 -3.852 -2.023 1.562 1.001 3000

res[22] 1.041 2.731 -4.382 -0.753 1.098 2.864 6.299 1.001 2000

res[23] 2.314 2.716 -3.093 0.462 2.315 4.160 7.565 1.001 2700

res[24] -1.671 2.672 -6.945 -3.462 -1.615 0.091 3.339 1.001 3000

res[25] 6.421 2.734 1.089 4.552 6.410 8.200 11.946 1.001 3000

res[26] 2.789 2.737 -2.520 0.959 2.773 4.604 8.298 1.001 3000

res[27] -8.071 2.760 -13.435 -9.908 -8.100 -6.224 -2.329 1.001 3000

res[28] -0.402 2.701 -5.890 -2.202 -0.416 1.396 4.854 1.001 2000

res[29] -2.148 2.759 -7.566 -3.919 -2.174 -0.263 3.280 1.001 3000

res[30] -0.077 2.712 -5.592 -1.880 -0.072 1.667 5.222 1.001 3000

res[31] -1.809 2.691 -6.979 -3.644 -1.847 0.028 3.370 1.001 3000

res[32] 3.014 2.712 -2.336 1.175 2.948 4.859 8.457 1.001 3000

res[33] -3.436 2.731 -8.874 -5.345 -3.414 -1.597 2.086 1.001 3000

res[34] -1.538 2.743 -6.945 -3.379 -1.541 0.244 3.892 1.001 3000

res[35] -4.090 2.793 -9.334 -5.995 -4.088 -2.291 1.354 1.001 3000

res[36] 6.334 2.782 0.936 4.507 6.327 8.081 11.939 1.001 3000

res[37] 0.265 2.754 -5.047 -1.548 0.266 2.126 5.733 1.002 1900

res[38] 2.742 2.772 -2.723 0.984 2.748 4.623 8.056 1.002 1500

res[39] -1.573 2.758 -6.879 -3.416 -1.575 0.216 3.851 1.002 1400

res[40] 6.868 2.728 1.621 5.028 6.878 8.687 12.302 1.002 1500

res[41] -3.211 2.745 -8.689 -4.989 -3.264 -1.441 2.212 1.001 2100

res[42] -4.026 2.721 -9.412 -5.821 -4.026 -2.205 1.360 1.001 2400

res[43] 6.246 2.717 0.781 4.427 6.248 8.067 11.439 1.001 2700

res[44] -2.659 2.727 -8.028 -4.536 -2.625 -0.803 2.647 1.001 3000

res[45] -2.127 2.716 -7.465 -3.957 -2.154 -0.267 3.152 1.001 3000

res[46] -0.695 2.655 -5.883 -2.499 -0.605 1.073 4.420 1.001 2900

res[47] 1.179 2.677 -4.102 -0.615 1.197 2.956 6.499 1.001 3000

res[48] -0.301 2.679 -5.713 -2.050 -0.302 1.490 4.819 1.001 3000

res[49] 1.841 2.673 -3.491 0.047 1.930 3.609 7.021 1.001 3000

res[50] -0.006 2.680 -5.260 -1.870 0.040 1.822 5.162 1.001 3000

res[51] -3.334 2.673 -8.610 -5.127 -3.320 -1.547 1.788 1.001 3000

res[52] 4.850 2.717 -0.588 3.073 4.817 6.666 10.211 1.002 1300

res[53] -1.428 2.741 -6.836 -3.233 -1.452 0.417 3.954 1.002 1700

res[54] -2.937 2.704 -8.170 -4.720 -2.979 -1.154 2.298 1.002 1900

res[55] -2.761 2.652 -8.288 -4.517 -2.653 -1.001 2.233 1.001 3000

res[56] 2.814 2.694 -2.730 1.043 2.871 4.574 8.034 1.001 3000

res[57] -2.802 2.708 -8.353 -4.600 -2.691 -1.065 2.261 1.001 3000

res[58] 1.809 2.657 -3.322 -0.003 1.768 3.576 7.132 1.002 1200

res[59] 0.100 2.641 -4.964 -1.773 0.148 1.823 5.472 1.002 1500

res[60] 0.202 2.682 -4.849 -1.690 0.178 1.986 5.448 1.002 1800

res[61] 1.025 2.743 -4.220 -0.775 0.975 2.807 6.519 1.001 3000

res[62] -0.710 2.753 -5.840 -2.514 -0.770 1.069 4.743 1.001 3000

res[63] 3.207 2.731 -2.133 1.472 3.140 4.902 8.842 1.001 3000

res[64] -4.092 2.763 -9.609 -5.864 -4.053 -2.264 1.299 1.001 3000

res[65] 6.544 2.760 1.168 4.741 6.624 8.420 11.809 1.001 3000

res[66] -4.356 2.758 -9.929 -6.218 -4.308 -2.483 0.912 1.001 3000

res[67] -0.348 2.725 -5.659 -2.204 -0.316 1.523 4.917 1.001 3000

res[68] 0.026 2.708 -5.254 -1.860 0.123 1.905 5.243 1.001 3000

res[69] -2.278 2.710 -7.547 -4.109 -2.232 -0.488 3.060 1.001 3000

res[70] 0.851 2.695 -4.547 -0.884 0.848 2.640 6.153 1.001 3000

res[71] -6.925 2.721 -12.373 -8.670 -6.913 -5.141 -1.537 1.001 2600

res[72] 6.844 2.700 1.617 5.034 6.911 8.645 12.018 1.001 2200

res[73] -3.887 2.687 -8.936 -5.741 -3.932 -2.088 1.403 1.001 2900

res[74] 3.630 2.716 -1.602 1.849 3.575 5.459 9.102 1.001 3000

res[75] -1.316 2.710 -6.421 -3.129 -1.360 0.506 4.159 1.001 3000

res[76] -4.892 2.723 -9.983 -6.830 -4.919 -3.012 0.316 1.001 3000

res[77] 10.808 2.755 5.424 8.899 10.797 12.654 16.243 1.002 3000

res[78] -1.225 2.731 -6.517 -3.100 -1.273 0.665 4.258 1.001 3000

res[79] -3.170 2.752 -8.477 -5.005 -3.163 -1.317 2.249 1.002 1000

res[80] -3.432 2.756 -8.813 -5.308 -3.443 -1.555 1.853 1.002 1200

res[81] 5.338 2.762 0.002 3.510 5.327 7.194 10.680 1.002 1300

res[82] 1.814 2.727 -3.565 0.011 1.780 3.641 7.008 1.001 3000

res[83] 1.827 2.672 -3.589 0.115 1.861 3.604 6.985 1.001 3000

res[84] -2.914 2.718 -8.320 -4.720 -2.929 -1.077 2.320 1.001 3000

res[85] -5.479 2.751 -10.911 -7.350 -5.467 -3.666 -0.052 1.001 3000

res[86] -1.907 2.756 -7.162 -3.749 -1.938 -0.055 3.639 1.001 3000

res[87] 6.784 2.762 1.416 4.956 6.769 8.564 12.313 1.001 3000

res[88] -3.940 2.671 -9.110 -5.748 -3.987 -2.149 1.568 1.001 3000

res[89] -6.246 2.658 -11.470 -7.983 -6.301 -4.433 -1.107 1.001 3000

res[90] 8.338 2.668 3.224 6.599 8.287 10.080 13.656 1.001 3000

res[91] -0.283 2.687 -5.429 -2.159 -0.251 1.541 5.005 1.001 3000

res[92] -2.091 2.696 -7.290 -3.904 -2.072 -0.279 3.203 1.001 3000

res[93] 2.710 2.713 -2.517 0.875 2.760 4.515 8.115 1.001 3000

res[94] -1.244 2.716 -6.596 -3.068 -1.210 0.573 3.872 1.001 2700

res[95] -7.262 2.699 -12.509 -9.113 -7.225 -5.472 -2.189 1.002 1800

res[96] 5.180 2.706 -0.225 3.303 5.205 7.010 10.342 1.002 1600

res[97] 1.984 2.763 -3.475 0.153 1.960 3.775 7.552 1.002 2200

res[98] -2.353 2.755 -7.896 -4.114 -2.375 -0.530 3.125 1.001 2900

res[99] 2.363 2.764 -3.222 0.566 2.345 4.230 7.936 1.001 3000

res[100] -3.551 2.761 -8.907 -5.415 -3.585 -1.684 1.863 1.001 2700

res[101] 8.461 2.732 3.307 6.641 8.435 10.293 13.842 1.001 2000

res[102] -4.234 2.725 -9.536 -6.071 -4.277 -2.390 1.048 1.002 1700

res[103] 2.981 2.747 -2.332 1.113 2.987 4.808 8.341 1.001 3000

res[104] 1.821 2.769 -3.515 -0.091 1.789 3.685 7.272 1.001 3000

res[105] -1.148 2.757 -6.483 -3.016 -1.161 0.685 4.305 1.001 3000

sigma 4.682 0.404 3.967 4.400 4.658 4.938 5.551 1.001 3000

sigma.B 11.616 1.495 9.132 10.567 11.435 12.537 15.014 1.003 980

deviance 619.768 11.408 600.405 611.767 618.725 626.775 643.972 1.002 1400

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 65.0 and DIC = 684.8

DIC is an estimate of expected predictive error (lower deviance is better).

data.rcb.mcmc.list.m <- as.mcmc(data.rcb.r2jags.m)
Data.Rcb.mcmc.list.m <- data.rcb.mcmc.list.m

modelString="
model {
   #Likelihood
   for (i in 1:n) {
      y[i]~dnorm(mu[i],tau)
      mu[i] <- inprod(beta[],X[i,]) + inprod(gamma[], Z[i,])
      res[i] <- y[i] - mu[i]
   }

   #Priors
   beta ~ dmnorm(a0,A0)
   for (i in 1:nZ) {
      gamma[i] ~ dnorm(0, tau.B) #prior
   }
   #Half-Cauchy (scale=25) prior on the residual standard deviation
   tau <- pow(sigma,-2)
   sigma <- z/sqrt(chSq)
   z ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
   chSq ~ dgamma(0.5, 0.5)

   #Half-Cauchy (scale=25) prior on the between-block standard deviation
   tau.B <- pow(sigma.B,-2)
   sigma.B <- z.B/sqrt(chSq.B)
   z.B ~ dnorm(0, 0.0016)I(0,)  #1/25^2 = 0.0016
   chSq.B ~ dgamma(0.5, 0.5)
}
"
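This second matrix form differs from the first only in how the block effect enters the linear predictor: gamma[Block[i]] is replaced by inprod(gamma[], Z[i,]), where Z is a matrix of block indicator variables. The sketch below uses a small made-up design (3 blocks, 2 treatment levels; the toy data frame and the gamma values are hypothetical) to show that the two expressions select the same block effect for every observation.

```r
# Hypothetical toy design: 3 blocks, each containing 2 treatment levels
toy <- data.frame(Block = factor(rep(c("B1", "B2", "B3"), each = 2)),
                  A     = factor(rep(c("1", "2"), times = 3)))

# One indicator (dummy) column per block, no intercept
Zmat  <- model.matrix(~ -1 + Block, toy)
gamma <- c(-1.5, 0.5, 2.0)                  # made-up block effects

via.index  <- gamma[as.numeric(toy$Block)]  # gamma[Block[i]]
via.inprod <- as.vector(Zmat %*% gamma)     # inprod(gamma[], Z[i,]) for each row i

cbind(toy, via.index, via.inprod)
```

Since the two forms describe the same model, the choice between them is mostly one of convenience and speed. The Graph Size and elapsed times reported for the two fits in this section suggest that the indexing form is the cheaper of the two for this design.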

A.Xmat <- model.matrix(~A,data.rcb)
Zmat <- model.matrix(~-1+Block, data.rcb)
data.rcb.list <- with(data.rcb,
        list(y=y,
             X=A.Xmat,
             n=nrow(data.rcb),
             Z=Zmat, nZ=ncol(Zmat),
             nA = ncol(A.Xmat),
             a0=rep(0,3), A0=diag(0,3)
        )
)
params <- c("beta","gamma","sigma","sigma.B",'res')
burnInSteps = 3000
nChains = 3
numSavedSteps = 3000
thinSteps = 10
nIter = burnInSteps+ceiling((numSavedSteps * thinSteps)/nChains)

library(R2jags)
rnorm(1)

[1] -0.2973848

jags.effects.m2.time <- system.time(
data.rcb.r2jags.m2 <- jags(data=data.rcb.list,
          inits=NULL,
          parameters.to.save=params,
          model.file=textConnection(modelString),
          n.chains=3,
          n.iter=nIter,
          n.burnin=burnInSteps,
          n.thin=thinSteps
          )
)

Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 4621

Initializing model

jags.effects.m2.time

   user  system elapsed
 28.221   0.104  28.487

print(data.rcb.r2jags.m2)

Inference for Bugs model at "6", fit using jags,

3 chains, each with 13000 iterations (first 3000 discarded), n.thin = 10

n.sims = 3000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta[1] 43.074 2.115 38.783 41.715 43.067 44.446 47.249 1.002 1800

beta[2] 28.408 1.132 26.197 27.679 28.396 29.153 30.612 1.002 1400

beta[3] 40.127 1.121 37.966 39.394 40.112 40.884 42.261 1.002 1100

gamma[1] -6.667 3.325 -13.075 -8.904 -6.612 -4.499 -0.171 1.003 920

gamma[2] -9.884 3.264 -16.376 -12.029 -9.956 -7.709 -3.347 1.003 870

gamma[3] -3.780 3.240 -10.314 -5.931 -3.792 -1.646 2.595 1.003 950

gamma[4] 7.991 3.195 2.062 5.811 7.901 10.155 14.234 1.002 1900

gamma[5] 6.589 3.313 -0.059 4.437 6.627 8.769 12.997 1.001 3000

gamma[6] -2.549 3.207 -8.875 -4.747 -2.498 -0.389 3.616 1.002 1000

gamma[7] -5.185 3.308 -11.838 -7.415 -5.187 -2.967 1.175 1.001 3000

gamma[8] 10.322 3.301 3.840 8.052 10.361 12.493 16.938 1.001 3000

gamma[9] 5.307 3.282 -1.027 3.054 5.281 7.495 11.819 1.002 1400

gamma[10] -13.863 3.244 -20.343 -16.028 -13.793 -11.735 -7.595 1.001 2700

gamma[11] -12.894 3.289 -19.431 -15.060 -12.864 -10.684 -6.426 1.001 2900

gamma[12] 3.683 3.354 -2.647 1.389 3.607 5.961 10.515 1.001 3000

gamma[13] 9.408 3.294 2.830 7.205 9.442 11.594 15.889 1.002 1300

gamma[14] -2.811 3.278 -9.311 -5.032 -2.805 -0.621 3.792 1.001 2900

gamma[15] 8.458 3.329 2.028 6.190 8.355 10.700 15.089 1.001 2800

gamma[16] 0.494 3.250 -5.636 -1.745 0.430 2.671 7.074 1.001 3000

gamma[17] -9.665 3.212 -16.259 -11.844 -9.547 -7.521 -3.477 1.004 540

gamma[18] 2.969 3.249 -3.621 0.861 2.913 5.171 9.384 1.001 3000

gamma[19] -14.382 3.254 -20.722 -16.477 -14.365 -12.317 -7.966 1.001 3000

gamma[20] 12.242 3.282 5.882 10.045 12.274 14.373 18.782 1.002 1700

gamma[21] 20.046 3.341 13.551 17.772 19.968 22.289 26.473 1.001 3000

gamma[22] -10.907 3.272 -17.547 -13.073 -10.864 -8.779 -4.412 1.001 3000

gamma[23] -16.597 3.290 -23.064 -18.759 -16.587 -14.428 -10.232 1.002 1400

gamma[24] 2.726 3.227 -3.601 0.516 2.655 4.887 9.086 1.001 3000

gamma[25] -9.107 3.273 -15.445 -11.276 -9.195 -6.920 -2.539 1.001 3000

gamma[26] 26.961 3.260 20.632 24.757 26.954 29.123 33.400 1.001 3000

gamma[27] -6.803 3.228 -13.194 -8.882 -6.781 -4.698 -0.386 1.002 1400

gamma[28] 3.400 3.174 -2.655 1.241 3.424 5.586 9.404 1.001 3000

gamma[29] -4.577 3.245 -10.940 -6.738 -4.623 -2.357 1.739 1.001 3000

gamma[30] -11.103 3.260 -17.536 -13.304 -11.104 -8.933 -4.649 1.001 2300

gamma[31] 1.673 3.205 -4.774 -0.412 1.683 3.800 8.200 1.001 3000

gamma[32] -19.083 3.277 -25.486 -21.280 -19.145 -16.890 -12.623 1.001 3000

gamma[33] 11.267 3.306 4.944 9.043 11.298 13.471 17.724 1.001 3000

gamma[34] 3.393 3.297 -3.147 1.169 3.406 5.624 9.659 1.001 3000

gamma[35] 22.310 3.306 16.073 20.040 22.294 24.502 29.067 1.001 2400

res[1] 0.991 2.763 -4.476 -0.842 0.987 2.852 6.364 1.003 860

res[2] -3.345 2.733 -8.826 -5.139 -3.346 -1.501 1.964 1.002 1600

res[3] 1.540 2.756 -3.922 -0.278 1.568 3.352 6.954 1.002 1500

res[4] -2.592 2.682 -7.946 -4.446 -2.577 -0.765 2.776 1.002 1800

res[5] -2.597 2.704 -7.857 -4.417 -2.611 -0.798 2.682 1.002 1500

res[6] 3.409 2.682 -1.903 1.595 3.420 5.197 8.791 1.002 1100

res[7] -2.222 2.724 -7.516 -4.062 -2.296 -0.402 3.018 1.002 1000

res[8] 1.592 2.725 -3.658 -0.245 1.604 3.349 6.991 1.001 2000

res[9] 0.240 2.699 -5.083 -1.534 0.227 2.017 5.469 1.002 1700

res[10] -0.812 2.704 -6.071 -2.699 -0.795 1.109 4.262 1.002 1700

res[11] 0.884 2.738 -4.545 -1.002 0.936 2.795 6.111 1.001 3000

res[12] 1.333 2.703 -4.006 -0.550 1.321 3.278 6.428 1.001 3000

res[13] 5.386 2.733 0.046 3.497 5.424 7.262 10.938 1.001 3000

res[14] -6.530 2.742 -11.959 -8.372 -6.498 -4.749 -1.034 1.001 3000

res[15] 2.339 2.724 -2.835 0.440 2.337 4.140 7.880 1.001 3000

res[16] -0.834 2.743 -6.166 -2.698 -0.860 1.012 4.419 1.003 740

res[17] 4.408 2.726 -0.777 2.572 4.380 6.245 9.796 1.002 1500

res[18] -4.147 2.710 -9.534 -5.991 -4.136 -2.298 1.002 1.002 1500

res[19] 0.921 2.770 -4.377 -0.954 0.917 2.791 6.523 1.002 1500

res[20] 1.998 2.744 -3.170 0.146 1.967 3.864 7.473 1.001 3000

res[21] -3.768 2.774 -9.105 -5.655 -3.759 -1.955 1.747 1.001 3000

res[22] 1.008 2.709 -4.180 -0.818 1.022 2.772 6.392 1.001 3000

res[23] 2.362 2.713 -2.912 0.550 2.359 4.073 7.764 1.001 3000

res[24] -1.657 2.713 -7.029 -3.399 -1.703 0.080 3.783 1.001 3000

res[25] 6.233 2.704 0.886 4.381 6.296 8.055 11.477 1.002 1100

res[26] 2.683 2.727 -2.719 0.861 2.660 4.488 8.025 1.001 2800

res[27] -8.210 2.690 -13.525 -9.999 -8.205 -6.352 -3.031 1.001 2600

res[28] -0.342 2.717 -5.804 -2.172 -0.313 1.506 4.967 1.001 3000

res[29] -2.007 2.689 -7.343 -3.855 -2.027 -0.192 3.319 1.001 3000

res[30] 0.031 2.705 -5.273 -1.763 -0.041 1.869 5.263 1.001 3000

res[31] -1.771 2.718 -6.997 -3.637 -1.820 0.062 3.467 1.001 3000

res[32] 3.133 2.721 -2.123 1.321 3.079 4.966 8.433 1.001 3000

res[33] -3.350 2.719 -8.587 -5.227 -3.329 -1.513 2.071 1.001 3000

res[34] -1.591 2.753 -7.170 -3.417 -1.606 0.279 3.576 1.001 3000

res[35] -4.062 2.784 -9.632 -5.942 -4.026 -2.158 1.378 1.001 3000

res[36] 6.328 2.772 0.919 4.546 6.369 8.180 11.578 1.001 3000

res[37] 0.324 2.760 -5.057 -1.528 0.288 2.171 5.821 1.001 2300

res[38] 2.884 2.737 -2.526 1.098 2.857 4.658 8.231 1.001 3000

res[39] -1.465 2.767 -6.875 -3.291 -1.491 0.381 3.995 1.001 2900

res[40] 6.800 2.713 1.531 5.001 6.809 8.575 12.149 1.001 2600

res[41] -3.198 2.726 -8.514 -5.026 -3.158 -1.394 2.237 1.001 3000

res[42] -4.046 2.719 -9.413 -5.893 -4.029 -2.283 1.506 1.001 3000

res[43] 6.203 2.780 0.688 4.337 6.258 8.068 11.586 1.001 3000

res[44] -2.620 2.776 -8.081 -4.488 -2.621 -0.728 2.777 1.001 3000

res[45] -2.122 2.801 -7.630 -3.982 -2.096 -0.165 3.232 1.001 3000

res[46] -0.754 2.706 -6.194 -2.582 -0.768 1.127 4.512 1.001 3000

res[47] 1.202 2.726 -4.100 -0.626 1.208 3.112 6.430 1.001 3000

res[48] -0.312 2.717 -5.815 -2.091 -0.285 1.541 4.798 1.001 3000

res[49] 1.718 2.706 -3.537 -0.076 1.695 3.518 7.185 1.004 610

res[50] -0.048 2.725 -5.247 -1.874 -0.083 1.764 5.404 1.003 900

res[51] -3.410 2.701 -8.600 -5.222 -3.462 -1.614 2.077 1.003 750

res[52] 4.766 2.709 -0.518 2.929 4.779 6.527 10.146 1.002 1600

res[53] -1.430 2.734 -6.804 -3.201 -1.369 0.393 3.868 1.001 3000

res[54] -2.974 2.710 -8.302 -4.740 -2.998 -1.149 2.447 1.001 3000

res[55] -2.745 2.715 -8.144 -4.553 -2.812 -0.908 2.608 1.001 3000

res[56] 2.911 2.717 -2.442 1.143 2.883 4.705 8.267 1.001 3000

res[57] -2.738 2.719 -8.141 -4.530 -2.756 -0.910 2.721 1.001 3000

res[58] 1.693 2.738 -3.826 -0.132 1.758 3.514 6.930 1.001 2300

res[59] 0.064 2.776 -5.562 -1.818 0.117 1.961 5.396 1.001 3000

res[60] 0.133 2.764 -5.378 -1.693 0.198 1.969 5.549 1.001 2500

res[61] 0.946 2.802 -4.530 -0.924 0.960 2.837 6.333 1.002 1400

res[62] -0.708 2.813 -6.209 -2.573 -0.753 1.157 4.840 1.001 2500

res[63] 3.176 2.782 -2.266 1.334 3.136 5.049 8.635 1.001 3000

res[64] -4.165 2.709 -9.420 -5.987 -4.225 -2.381 1.145 1.001 3000

res[65] 6.552 2.660 1.316 4.784 6.539 8.286 11.674 1.001 3000

res[66] -4.382 2.690 -9.714 -6.166 -4.396 -2.602 0.776 1.001 3000

res[67] -0.511 2.754 -6.040 -2.327 -0.546 1.419 4.867 1.002 1300

res[68] -0.055 2.757 -5.724 -1.859 -0.079 1.845 5.428 1.003 700

res[69] -2.393 2.764 -7.893 -4.168 -2.369 -0.549 3.114 1.003 700

res[70] 0.753 2.718 -4.439 -1.030 0.707 2.546 6.266 1.001 3000

res[71] -6.943 2.712 -11.989 -8.803 -7.001 -5.190 -1.477 1.001 3000

res[72] 6.793 2.690 1.519 5.020 6.778 8.581 12.054 1.001 3000

res[73] -3.912 2.749 -9.547 -5.813 -3.874 -1.969 1.402 1.001 3000

res[74] 3.687 2.781 -1.830 1.846 3.706 5.569 8.909 1.001 3000

res[75] -1.294 2.728 -6.736 -3.132 -1.283 0.504 3.972 1.001 3000

res[76] -4.970 2.702 -10.044 -6.802 -4.959 -3.167 0.467 1.001 3000

res[77] 10.812 2.681 5.668 8.906 10.841 12.565 16.311 1.001 3000

res[78] -1.255 2.683 -6.487 -3.043 -1.297 0.495 4.065 1.001 3000

res[79] -3.173 2.712 -8.569 -4.945 -3.122 -1.316 1.945 1.002 1300

res[80] -3.352 2.693 -8.672 -5.162 -3.289 -1.527 1.882 1.001 3000

res[81] 5.383 2.716 0.135 3.576 5.406 7.204 10.612 1.001 3000

res[82] 1.711 2.669 -3.425 -0.086 1.686 3.520 6.877 1.001 3000

res[83] 1.805 2.649 -3.315 -0.002 1.763 3.645 6.896 1.001 3000

res[84] -2.970 2.641 -8.114 -4.783 -2.952 -1.228 2.196 1.001 3000

res[85] -5.598 2.741 -10.945 -7.442 -5.620 -3.755 -0.136 1.002 1300

res[86] -1.944 2.759 -7.379 -3.811 -1.929 -0.059 3.442 1.001 2200

res[87] 6.713 2.729 1.416 4.901 6.749 8.503 12.166 1.001 3000

res[88] -3.996 2.682 -9.294 -5.794 -3.958 -2.231 1.295 1.001 3000

res[89] -6.220 2.722 -11.648 -7.989 -6.183 -4.396 -0.984 1.001 3000

res[90] 8.330 2.700 2.973 6.604 8.334 10.121 13.526 1.001 3000

res[91] -0.355 2.690 -5.527 -2.151 -0.390 1.456 4.916 1.001 3000

res[92] -2.082 2.685 -7.274 -3.834 -2.156 -0.275 3.514 1.001 3000

res[93] 2.685 2.701 -2.625 0.890 2.690 4.461 8.277 1.001 3000

res[94] -1.218 2.759 -6.666 -3.017 -1.219 0.550 4.149 1.001 3000

res[95] -7.154 2.750 -12.652 -8.963 -7.228 -5.307 -1.734 1.001 3000

res[96] 5.254 2.780 -0.209 3.395 5.239 7.035 10.904 1.001 3000

res[97] 1.971 2.751 -3.399 0.120 1.989 3.804 7.443 1.002 1800

res[98] -2.284 2.732 -7.683 -4.131 -2.302 -0.416 3.174 1.001 3000

res[99] 2.398 2.737 -2.896 0.595 2.394 4.171 7.697 1.001 3000

res[100] -3.589 2.768 -8.965 -5.497 -3.564 -1.749 1.979 1.001 3000

res[101] 8.505 2.725 3.183 6.651 8.534 10.315 13.906 1.001 3000

res[102] -4.225 2.733 -9.489 -6.026 -4.236 -2.442 1.038 1.001 3000

res[103] 2.973 2.745 -2.387 1.160 3.003 4.793 8.346 1.001 3000

res[104] 1.894 2.757 -3.501 0.038 1.879 3.743 7.260 1.001 3000

res[105] -1.108 2.764 -6.620 -2.942 -1.108 0.771 4.185 1.001 3000

sigma 4.680 0.408 3.969 4.389 4.649 4.942 5.544 1.001 2100

sigma.B 11.682 1.534 9.146 10.592 11.534 12.562 15.177 1.001 3000

deviance 619.919 11.149 600.770 612.087 619.098 626.943 644.105 1.001 3000

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 62.2 and DIC = 682.1

DIC is an estimate of expected predictive error (lower deviance is better).

data.rcb.mcmc.list.m2 <- as.mcmc(data.rcb.r2jags.m2)
Data.Rcb.mcmc.list.m2 <- data.rcb.mcmc.list.m2

Hierarchical parameterization

For a simple model with only two hierarchical levels, the model is the same as above.

$R^2$ and finite population standard deviations

modelString="
model {
   #Likelihood (estimating block means (gamma))
   for (i in 1:n) {
      y[i]~dnorm(mu[i],tau)
      mu[i] <- gamma[Block[i]] + inprod(beta[], X[i,])
      y.err[i]<- mu[i]-y[i]
   }
   for (i in 1:nBlock) {
      gamma[i] ~ dnorm(0, tau.block)
   }

   #Priors
   for (i in 1:nX) {
      beta[i] ~ dnorm(0, 1.0E-6) #prior
   }
   sigma ~ dunif(0, 100)
   tau <- 1 / (sigma * sigma)
   sigma.block ~ dunif(0, 100)
   tau.block <- 1 / (sigma.block * sigma.block)

   #Finite-population standard deviations
   sd.y <- sd(y.err)
   sd.block <- sd(gamma)
}
"
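The model above tracks two different block-level standard deviations. sigma.block is the standard deviation of the distribution from which block effects are assumed to be drawn, whereas sd.block <- sd(gamma) is the standard deviation of the block effects actually realized in the sampled blocks (the finite-population quantity). The simulation below is only a rough illustration of that distinction, with a hypothetical value of sigma.block and an arbitrary seed. It draws many sets of 35 block effects and records the standard deviation of each set.

```r
# Sketch: realized (finite-population) sd of block effects vs the
# superpopulation sd they are drawn from. All values are hypothetical.
set.seed(2)            # arbitrary seed (assumption)
sigma.block <- 12      # hypothetical superpopulation standard deviation
nBlock      <- 35      # number of blocks, as in this example

# For each of 2000 hypothetical sets of blocks, draw the block effects
# and compute their standard deviation
sd.block <- replicate(2000, sd(rnorm(nBlock, mean = 0, sd = sigma.block)))

summary(sd.block)
```

The realized standard deviation varies from one set of blocks to the next around sigma.block. In a fitted model the situation is reversed: the blocks are fixed, so sd.block describes only those blocks, while sigma.block must also account for blocks that were never observed. That is one reason the posterior for sd.block is typically narrower than the posterior for sigma.block.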

library(plyr)   #for ddply() and catcolwise()
A.Xmat <- model.matrix(~A,ddply(data.rcb,~Block,catcolwise(unique)))
data.rcb.list <- with(data.rcb,
        list(y=y,
             Block=as.numeric(Block),   #integer block index, as in the earlier models
             X= A.Xmat,
             n=nrow(data.rcb),
             nBlock=length(levels(Block)),
             nX = ncol(A.Xmat)
        )
)
params <- c("beta","sd.y",'sd.block','sigma','sigma.block')
burnInSteps = 3000
nChains = 3
numSavedSteps = 3000
thinSteps = 10
nIter = burnInSteps+ceiling((numSavedSteps * thinSteps)/nChains)

library(R2jags)
rnorm(1)

[1] -1.321587

jags.SD.time <- system.time(
data.rcb.r2jagsSD <- jags(data=data.rcb.list,
          inits=NULL,
          parameters.to.save=params,
          model.file=textConnection(modelString),
          n.chains=3,
          n.iter=nIter,
          n.burnin=burnInSteps,
          n.thin=thinSteps
          )
)

Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 899

Initializing model

jags.SD.time

   user  system elapsed
  3.972   0.008   3.996

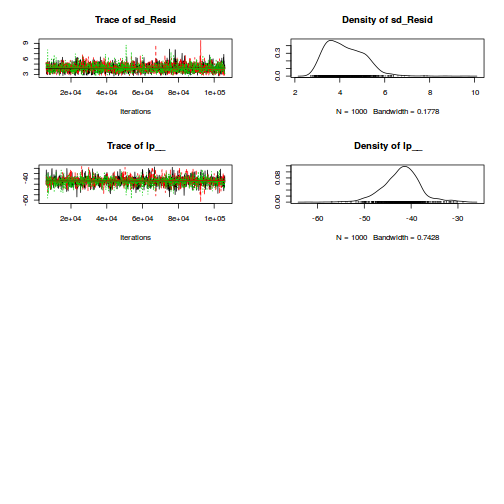

print(data.rcb.r2jagsSD)

Inference for Bugs model at "5", fit using jags,

3 chains, each with 13000 iterations (first 3000 discarded), n.thin = 10

n.sims = 3000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta[1] 43.008 2.193 38.860 41.550 42.966 44.443 47.378 1.001 2800

beta[2] 28.447 1.121 26.275 27.654 28.436 29.224 30.645 1.001 3000

beta[3] 40.177 1.134 37.950 39.428 40.190 40.941 42.370 1.001 2500

sd.block 11.499 0.472 10.584 11.180 11.497 11.827 12.400 1.001 3000

sd.y 4.605 0.232 4.220 4.438 4.580 4.751 5.113 1.002 1700

sigma 4.659 0.410 3.937 4.372 4.635 4.911 5.565 1.001 3000

sigma.block 11.967 1.611 9.316 10.865 11.819 12.883 15.607 1.001 2100

deviance 619.453 10.667 601.034 611.788 618.614 626.518 642.120 1.001 3000

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 56.9 and DIC = 676.3

DIC is an estimate of expected predictive error (lower deviance is better).

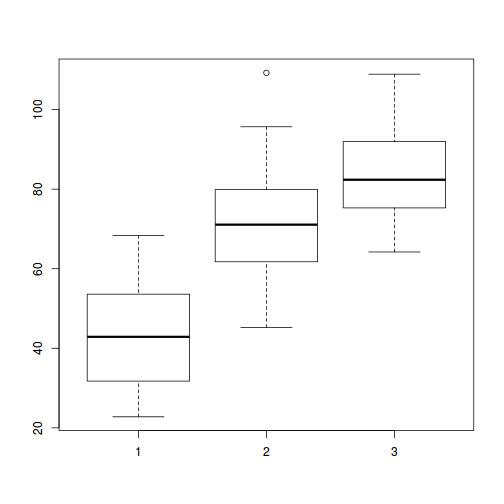

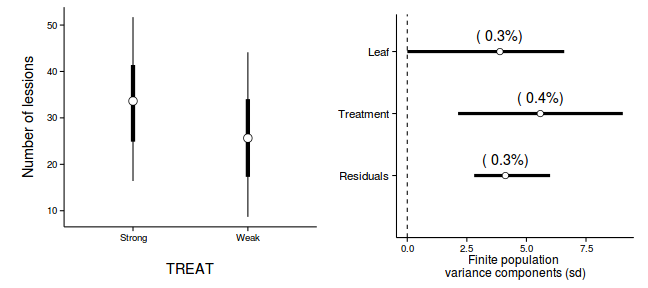

data.rcb.mcmc.listSD <- as.mcmc(data.rcb.r2jagsSD)

#Variance attributable to the fixed effects (one value per MCMC draw)
Xmat <- model.matrix(~A, data.rcb)
coefs <- data.rcb.r2jagsSD$BUGSoutput$sims.list[['beta']]
fitted <- coefs %*% t(Xmat)
X.var <- aaply(fitted,1,function(x){var(x)})
#Block and residual variances from the finite-population standard deviations
Z.var <- data.rcb.r2jagsSD$BUGSoutput$sims.list[['sd.block']]^2
R.var <- data.rcb.r2jagsSD$BUGSoutput$sims.list[['sd.y']]^2

R2.marginal <- (X.var)/(X.var+Z.var+R.var)
R2.marginal <- data.frame(Mean=mean(R2.marginal), Median=median(R2.marginal),
                          HPDinterval(as.mcmc(R2.marginal)))
R2.conditional <- (X.var+Z.var)/(X.var+Z.var+R.var)
R2.conditional <- data.frame(Mean=mean(R2.conditional), Median=median(R2.conditional),
                             HPDinterval(as.mcmc(R2.conditional)))
R2.block <- (Z.var)/(X.var+Z.var+R.var)
R2.block <- data.frame(Mean=mean(R2.block), Median=median(R2.block),
                       HPDinterval(as.mcmc(R2.block)))
R2.res<-(R.var)/(X.var+Z.var+R.var)
R2.res <- data.frame(Mean=mean(R2.res), Median=median(R2.res),
                     HPDinterval(as.mcmc(R2.res)))

rbind(R2.block=R2.block, R2.marginal=R2.marginal, R2.res=R2.res, R2.conditional=R2.conditional)

                     Mean     Median      lower     upper
R2.block       0.30002983 0.29979922 0.25796679 0.3362629
R2.marginal    0.65172770 0.65224634 0.61326493 0.6888135
R2.res         0.04824247 0.04754536 0.03857305 0.0586949
R2.conditional 0.95175753 0.95245464 0.94130510 0.9614270
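Because all four quantities are ratios over the same total (X.var + Z.var + R.var), two identities hold for every MCMC draw: the conditional $R^2$ is the sum of the marginal and block components, and the residual component is one minus the conditional $R^2$. Means are linear, so the identities carry over to the posterior means (though not, in general, to medians or interval limits). The quick check below uses the Mean column reported above; the vector name R2 is introduced here only for illustration.

```r
# Posterior means copied from the Mean column of the output above
R2 <- c(block       = 0.30002983,
        marginal    = 0.65172770,
        res         = 0.04824247,
        conditional = 0.95175753)

# conditional = marginal + block, and conditional = 1 - res
c(marginal.plus.block = unname(R2["marginal"] + R2["block"]),
  one.minus.res       = unname(1 - R2["res"]),
  conditional         = unname(R2["conditional"]))
```

Read this way, the treatment accounts for roughly 65% of the variance, the blocks for roughly 30%, and about 5% is left unexplained.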

Rstan

Cell means parameterization

rstanString="
data{
   int n;
   int nA;
   int nB;
   vector [n] y;
   int A[n];
   int B[n];
}

parameters{
   real alpha[nA];
   real<lower=0> sigma;
   vector [nB] beta;
   real<lower=0> sigma_B;
}

model{
   real mu[n];

   // Priors
   alpha ~ normal( 0 , 100 );
   beta ~ normal( 0 , sigma_B );
   sigma_B ~ cauchy( 0 , 25 );
   sigma ~ cauchy( 0 , 25 );

   for ( i in 1:n ) {
      mu[i] <- alpha[A[i]] + beta[B[i]];
   }
   y ~ normal( mu , sigma );
}
"

data.rcb.list <- with(data.rcb, list(y=y, A=as.numeric(A), B=as.numeric(Block),
  n=nrow(data.rcb), nB=length(levels(Block)),nA=length(levels(A))))
burnInSteps = 3000
nChains = 3
numSavedSteps = 3000
thinSteps = 10
nIter = burnInSteps+ceiling((numSavedSteps * thinSteps)/nChains)

library(rstan)
rstan.c.time <- system.time(
data.rcb.rstan.c <- stan(data=data.rcb.list,
           model_code=rstanString,
           pars=c('alpha','sigma','sigma_B'),
           chains=nChains,
           iter=nIter,
           warmup=burnInSteps,
           thin=thinSteps,
           save_dso=TRUE
           )
)

TRANSLATING MODEL 'rstanString' FROM Stan CODE TO C++ CODE NOW.
COMPILING THE C++ CODE FOR MODEL 'rstanString' NOW.

SAMPLING FOR MODEL 'rstanString' NOW (CHAIN 1).
Iteration:     1 / 13000 [  0%]  (Warmup)
Iteration:  1300 / 13000 [ 10%]  (Warmup)
Iteration:  2600 / 13000 [ 20%]  (Warmup)
Iteration:  3001 / 13000 [ 23%]  (Sampling)
Iteration:  4300 / 13000 [ 33%]  (Sampling)
Iteration:  5600 / 13000 [ 43%]  (Sampling)
Iteration:  6900 / 13000 [ 53%]  (Sampling)
Iteration:  8200 / 13000 [ 63%]  (Sampling)
Iteration:  9500 / 13000 [ 73%]  (Sampling)
Iteration: 10800 / 13000 [ 83%]  (Sampling)
Iteration: 12100 / 13000 [ 93%]  (Sampling)
Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.4 seconds (Warm-up)
#                1.14 seconds (Sampling)
#                1.54 seconds (Total)

SAMPLING FOR MODEL 'rstanString' NOW (CHAIN 2).
Iteration:     1 / 13000 [  0%]  (Warmup)
Iteration:  1300 / 13000 [ 10%]  (Warmup)
Iteration:  2600 / 13000 [ 20%]  (Warmup)
Iteration:  3001 / 13000 [ 23%]  (Sampling)
Iteration:  4300 / 13000 [ 33%]  (Sampling)
Iteration:  5600 / 13000 [ 43%]  (Sampling)
Iteration:  6900 / 13000 [ 53%]  (Sampling)
Iteration:  8200 / 13000 [ 63%]  (Sampling)
Iteration:  9500 / 13000 [ 73%]  (Sampling)
Iteration: 10800 / 13000 [ 83%]  (Sampling)
Iteration: 12100 / 13000 [ 93%]  (Sampling)
Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.37 seconds (Warm-up)
#                1.26 seconds (Sampling)
#                1.63 seconds (Total)

SAMPLING FOR MODEL 'rstanString' NOW (CHAIN 3).
Iteration:     1 / 13000 [  0%]  (Warmup)
Iteration:  1300 / 13000 [ 10%]  (Warmup)
Iteration:  2600 / 13000 [ 20%]  (Warmup)
Iteration:  3001 / 13000 [ 23%]  (Sampling)
Iteration:  4300 / 13000 [ 33%]  (Sampling)
Iteration:  5600 / 13000 [ 43%]  (Sampling)
Iteration:  6900 / 13000 [ 53%]  (Sampling)
Iteration:  8200 / 13000 [ 63%]  (Sampling)
Iteration:  9500 / 13000 [ 73%]  (Sampling)
Iteration: 10800 / 13000 [ 83%]  (Sampling)
Iteration: 12100 / 13000 [ 93%]  (Sampling)
Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.42 seconds (Warm-up)
#                1.3 seconds (Sampling)
#                1.72 seconds (Total)

print(data.rcb.rstan.c)

Inference for Stan model: rstanString.

3 chains, each with iter=13000; warmup=3000; thin=10;

post-warmup draws per chain=1000, total post-warmup draws=3000.

mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

alpha[1] 42.89 0.05 2.24 38.52 41.39 42.90 44.37 47.27 2125 1

alpha[2] 71.38 0.05 2.21 66.97 69.88 71.41 72.89 75.72 2166 1

alpha[3] 83.08 0.05 2.20 78.74 81.57 83.10 84.53 87.42 2114 1

sigma 4.67 0.01 0.41 3.93 4.38 4.63 4.92 5.55 2939 1

sigma_B 11.90 0.03 1.56 9.24 10.82 11.71 12.85 15.41 3000 1

lp__ -313.76 0.11 5.45 -324.89 -317.25 -313.37 -309.85 -304.44 2691 1

Samples were drawn using NUTS(diag_e) at Mon Mar 9 09:10:22 2015.

For each parameter, n_eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor on split chains (at

convergence, Rhat=1).

data.rcb.rstan.c.df <-as.data.frame(extract(data.rcb.rstan.c))
head(data.rcb.rstan.c.df)

  alpha.1 alpha.2 alpha.3 sigma sigma_B   lp__
1   39.66   68.98   81.29 3.990  12.033 -302.8
2   42.15   70.92   81.55 4.437  15.084 -321.2
3   43.65   72.31   85.08 4.874   9.853 -316.7
4   46.98   75.78   88.41 4.893  11.365 -326.1
5   41.91   69.89   81.54 4.678  14.699 -322.2
6   40.61   68.60   79.92 4.293  15.213 -309.9

data.rcb.mcmc.c<-rstan:::as.mcmc.list.stanfit(data.rcb.rstan.c)
plyr:::adply(as.matrix(data.rcb.rstan.c.df),2,MCMCsum)

       X1   Median      X0.     X25.     X50.     X75.    X100.    lower    upper  lower.1  upper.1
1 alpha.1   42.900   34.146   41.385   42.900   44.371   51.119   38.613   47.342   40.641   45.058
2 alpha.2   71.405   62.558   69.880   71.405   72.889   79.280   66.819   75.389   69.261   73.589
3 alpha.3   83.102   75.224   81.569   83.102   84.526   91.045   78.704   87.371   80.878   85.183
4   sigma    4.634    3.519    4.376    4.634    4.922    6.221    3.849    5.434    4.203    4.991
5 sigma_B   11.709    7.877   10.820   11.709   12.848   21.071    9.041   15.084   10.200   13.095
6    lp__ -313.369 -340.392 -317.248 -313.369 -309.850 -298.056 -324.061 -303.851 -318.308 -307.557

Full effects parameterization

rstanString="
data{
   int n;
   int nB;
   vector [n] y;
   int A2[n];
   int A3[n];
   int B[n];
}

parameters{
   real alpha0;
   real alpha2;
   real alpha3;
   real<lower=0> sigma;
   vector [nB] beta;
   real<lower=0> sigma_B;
}

model{
   real mu[n];

   // Priors
   alpha0 ~ normal( 0 , 1000 );
   alpha2 ~ normal( 0 , 1000 );
   alpha3 ~ normal( 0 , 1000 );
   beta ~ normal( 0 , sigma_B );
   sigma_B ~ cauchy( 0 , 25 );
   sigma ~ cauchy( 0 , 25 );

   for ( i in 1:n ) {
      mu[i] <- alpha0 + alpha2*A2[i] + alpha3*A3[i] + beta[B[i]];
   }
   y ~ normal( mu , sigma );
}
"

#Indicator (dummy) variables for treatment levels 2 and 3
A2 <- ifelse(data.rcb$A=='2',1,0)
A3 <- ifelse(data.rcb$A=='3',1,0)
data.rcb.list <- with(data.rcb, list(y=y, A2=A2, A3=A3, B=as.numeric(Block),
  n=nrow(data.rcb), nB=length(levels(Block))))
burnInSteps = 3000
nChains = 3
numSavedSteps = 3000
thinSteps = 10
nIter = burnInSteps + ceiling((numSavedSteps * thinSteps)/nChains)

library(rstan)
rstan.f.time <- system.time(
data.rcb.rstan.f <- stan(data=data.rcb.list,
           model_code=rstanString,
           pars=c('alpha0','alpha2','alpha3','sigma','sigma_B'),
           chains=nChains,
           iter=nIter,
           warmup=burnInSteps,
           thin=thinSteps,
           save_dso=TRUE
           )
)

SAMPLING FOR MODEL 'e6b26e59c453ce19af522e475363a98a' NOW (CHAIN 1).
Chain 1, Iteration:     1 / 13000 [  0%]  (Warmup)
Chain 1, Iteration:  1300 / 13000 [ 10%]  (Warmup)
Chain 1, Iteration:  2600 / 13000 [ 20%]  (Warmup)
Chain 1, Iteration:  3001 / 13000 [ 23%]  (Sampling)
Chain 1, Iteration:  4300 / 13000 [ 33%]  (Sampling)
Chain 1, Iteration:  5600 / 13000 [ 43%]  (Sampling)
Chain 1, Iteration:  6900 / 13000 [ 53%]  (Sampling)
Chain 1, Iteration:  8200 / 13000 [ 63%]  (Sampling)
Chain 1, Iteration:  9500 / 13000 [ 73%]  (Sampling)
Chain 1, Iteration: 10800 / 13000 [ 83%]  (Sampling)
Chain 1, Iteration: 12100 / 13000 [ 93%]  (Sampling)
Chain 1, Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.687363 seconds (Warm-up)
#                1.86471 seconds (Sampling)
#                2.55208 seconds (Total)

SAMPLING FOR MODEL 'e6b26e59c453ce19af522e475363a98a' NOW (CHAIN 2).
Chain 2, Iteration:     1 / 13000 [  0%]  (Warmup)
Chain 2, Iteration:  1300 / 13000 [ 10%]  (Warmup)
Chain 2, Iteration:  2600 / 13000 [ 20%]  (Warmup)
Chain 2, Iteration:  3001 / 13000 [ 23%]  (Sampling)
Chain 2, Iteration:  4300 / 13000 [ 33%]  (Sampling)
Chain 2, Iteration:  5600 / 13000 [ 43%]  (Sampling)
Chain 2, Iteration:  6900 / 13000 [ 53%]  (Sampling)
Chain 2, Iteration:  8200 / 13000 [ 63%]  (Sampling)
Chain 2, Iteration:  9500 / 13000 [ 73%]  (Sampling)
Chain 2, Iteration: 10800 / 13000 [ 83%]  (Sampling)
Chain 2, Iteration: 12100 / 13000 [ 93%]  (Sampling)
Chain 2, Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.64299 seconds (Warm-up)
#                1.78891 seconds (Sampling)
#                2.4319 seconds (Total)

SAMPLING FOR MODEL 'e6b26e59c453ce19af522e475363a98a' NOW (CHAIN 3).
Chain 3, Iteration:     1 / 13000 [  0%]  (Warmup)
Chain 3, Iteration:  1300 / 13000 [ 10%]  (Warmup)
Chain 3, Iteration:  2600 / 13000 [ 20%]  (Warmup)
Chain 3, Iteration:  3001 / 13000 [ 23%]  (Sampling)
Chain 3, Iteration:  4300 / 13000 [ 33%]  (Sampling)
Chain 3, Iteration:  5600 / 13000 [ 43%]  (Sampling)
Chain 3, Iteration:  6900 / 13000 [ 53%]  (Sampling)
Chain 3, Iteration:  8200 / 13000 [ 63%]  (Sampling)
Chain 3, Iteration:  9500 / 13000 [ 73%]  (Sampling)
Chain 3, Iteration: 10800 / 13000 [ 83%]  (Sampling)
Chain 3, Iteration: 12100 / 13000 [ 93%]  (Sampling)
Chain 3, Iteration: 13000 / 13000 [100%]  (Sampling)
#  Elapsed Time: 0.721642 seconds (Warm-up)
#                1.92995 seconds (Sampling)
#                2.65159 seconds (Total)

print(data.rcb.rstan.f)

Inference for Stan model: e6b26e59c453ce19af522e475363a98a.
3 chains, each with iter=13000; warmup=3000; thin=10;
post-warmup draws per chain=1000, total post-warmup draws=3000.

           mean se_mean    sd    2.5%     25%     50%     75%   97.5% n_eff Rhat
alpha0    43.01    0.06  2.20   38.71   41.51   43.00   44.41   47.41  1445    1
alpha2    28.45    0.02  1.13   26.24   27.69   28.45   29.21   30.71  3000    1
alpha3    40.15    0.02  1.14   37.97   39.40   40.15   40.92   42.40  2663    1
sigma      4.65    0.01  0.42    3.92    4.36    4.63    4.92    5.52  3000    1
sigma_B   11.90    0.03  1.59    9.24   10.75   11.75   12.89   15.52  2700    1
lp__    -313.00    0.10  5.38 -324.44 -316.37 -312.61 -309.11 -303.95  2811    1

Samples were drawn using NUTS(diag_e) at Wed Dec 23 11:14:07 2015.
For each parameter, n_eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor on split chains (at
convergence, Rhat=1).
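The iteration counts reported in this summary follow directly from the sampler settings. As a quick check, here is the same arithmetic in plain R, using only the values assigned before the `stan()` call (the name `savedPerChain` is introduced here purely for illustration):

```r
# Sampler settings, as assigned before the stan() call
burnInSteps   <- 3000
nChains       <- 3
numSavedSteps <- 3000
thinSteps     <- 10

# Total iterations per chain (warmup plus sampling)
nIter <- burnInSteps + ceiling((numSavedSteps * thinSteps) / nChains)

# Draws retained per chain once warmup is discarded and thinning applied
savedPerChain <- (nIter - burnInSteps) / thinSteps

nIter                    # 13000
savedPerChain            # 1000
savedPerChain * nChains  # 3000
```

These agree with the `iter=13000`, `post-warmup draws per chain=1000` and `total post-warmup draws=3000` lines of the printed summary.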

data.rcb.rstan.f.df <- as.data.frame(extract(data.rcb.rstan.f))
head(data.rcb.rstan.f.df)

     alpha0    alpha2    alpha3     sigma   sigma_B      lp__
1  45.34963  28.86244  41.28095  4.288403  11.64362 -316.1950
2  46.56000  29.03809  40.02504  4.589700  11.16517 -309.2829
3  41.02639  29.06624  40.60771  4.816826  10.61045 -307.8916
4  43.12900  28.36760  39.66213  4.574166  12.08069 -313.3190
5  43.42910  28.56547  41.53942  4.432488  12.23545 -304.2449
6  44.52987  26.68965  39.34490  4.134544  11.10949 -311.3038

data.rcb.mcmc.f <- rstan:::as.mcmc.list.stanfit(data.rcb.rstan.f)
plyr::adply(as.matrix(data.rcb.rstan.f.df),2,MCMCsum)

       X1      Median         X0.        X25.        X50.        X75.       X100.       lower       upper     lower.1     upper.1
1  alpha0   42.998651   34.792884   41.513957   42.998651   44.405610   50.294911   38.811920   47.497702   40.593886   44.830394
2  alpha2   28.447276   23.922377   27.693890   28.447276   29.208311   32.080887   26.313779   30.740973   27.139039   29.394033
3  alpha3   40.151930   35.955333   39.398216   40.151930   40.916647   44.493609   38.084372   42.500434   39.034976   41.300031
4   sigma    4.626867    3.510477    4.358376    4.626867    4.921509    7.065056    3.874188    5.429956    4.195516    5.005088
5 sigma_B   11.753132    7.543985   10.749614   11.753132   12.887957   19.570931    9.016837   15.113263   10.095304   13.135502
6    lp__ -312.610598 -334.649842 -316.366239 -312.610598 -309.105863 -297.718740 -323.717718 -303.285639 -317.279913 -306.961175

Matrix effects parameterization

rstanString="
data{
   int n;
   int nX;
   int nB;
   vector [n] y;
   matrix [n,nX] X;
   int B[n];
}

parameters{
  vector [nX] beta;
  real<lower=0> sigma;
  vector [nB] gamma;
  real<lower=0> sigma_B;
}
transformed parameters {
  vector[n] mu;

  mu <- X*beta;
  for (i in 1:n) {
    mu[i] <- mu[i] + gamma[B[i]];
  }
}
model{
  // Priors
  beta ~ normal( 0 , 100 );
  gamma ~ normal( 0 , sigma_B );
  sigma_B ~ cauchy( 0 , 25 );
  sigma ~ cauchy( 0 , 25 );

  y ~ normal( mu , sigma );
}
"
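To see what the `transformed parameters` block of this model computes, the same linear predictor can be sketched in plain R. This is only an illustration on a toy design: the values of `beta` and `gamma` below are hypothetical, not estimates from the fitted model.

```r
# Hypothetical toy design: 3 treatment levels in each of 2 blocks
A <- factor(rep(c("1", "2", "3"), times = 2))
B <- rep(1:2, each = 3)        # block index of each observation

X     <- model.matrix(~A)      # fixed-effects design matrix
beta  <- c(40, 30, 45)         # assumed intercept and treatment effects
gamma <- c(-5, 5)              # assumed block effects, one per block

# mu = X * beta, then each observation is shifted by its block's effect
mu <- as.vector(X %*% beta) + gamma[B]
mu
```

With these assumed values, every observation in block 2 sits 10 units above its counterpart in block 1, which is the role `gamma[B[i]]` plays in the Stan loop.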

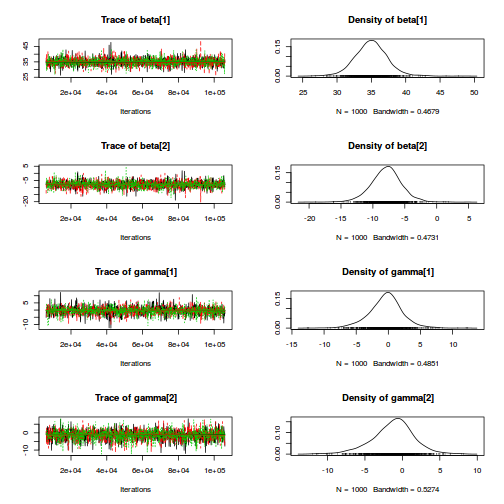

Xmat <- model.matrix(~A, data=data.rcb)
data.rcb.list <- with(data.rcb, list(y=y, X=Xmat, nX=ncol(Xmat),
    B=as.numeric(Block),
    n=nrow(data.rcb), nB=length(levels(Block))))
library(rstan)
rstan.d.time <- system.time(
    data.rcb.rstan.d <- stan(data=data.rcb.list,
        model_code=rstanString,
        pars=c('beta','sigma','sigma_B'),
        chains=3,
        iter=3000,
        warmup=1000,
        thin=2,
        save_dso=TRUE
    )
)
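The matrix parameterization relies on `model.matrix()` producing the same indicator columns that the effects parameterization builds by hand with `ifelse()`. A minimal sketch on a hypothetical toy factor (not the tutorial data), assuming R's default treatment contrasts:

```r
# Hypothetical toy factor with three levels
A <- factor(c("1", "2", "3", "1", "2", "3"))

# Hand-coded dummy variables, as used in the effects parameterization
A2 <- ifelse(A == "2", 1, 0)
A3 <- ifelse(A == "3", 1, 0)

# Design matrix under treatment contrasts (the default for unordered factors)
Xmat <- model.matrix(~A)

# Columns 2 and 3 of the design matrix match the hand-coded dummies
colnames(Xmat)
all(Xmat[, "A2"] == A2)
all(Xmat[, "A3"] == A3)
```

Under that assumption, `beta[1]`, `beta[2]` and `beta[3]` play the same roles as `alpha0`, `alpha2` and `alpha3`, which is consistent with the two fits giving very similar summaries.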

SAMPLING FOR MODEL '18e6498c61bcea7cdfdc0535e9da24c2' NOW (CHAIN 1).
Chain 1, Iteration:    1 / 3000 [  0%]  (Warmup)
Chain 1, Iteration:  300 / 3000 [ 10%]  (Warmup)
Chain 1, Iteration:  600 / 3000 [ 20%]  (Warmup)
Chain 1, Iteration:  900 / 3000 [ 30%]  (Warmup)
Chain 1, Iteration: 1001 / 3000 [ 33%]  (Sampling)
Chain 1, Iteration: 1300 / 3000 [ 43%]  (Sampling)
Chain 1, Iteration: 1600 / 3000 [ 53%]  (Sampling)
Chain 1, Iteration: 1900 / 3000 [ 63%]  (Sampling)
Chain 1, Iteration: 2200 / 3000 [ 73%]  (Sampling)
Chain 1, Iteration: 2500 / 3000 [ 83%]  (Sampling)
Chain 1, Iteration: 2800 / 3000 [ 93%]  (Sampling)
Chain 1, Iteration: 3000 / 3000 [100%]  (Sampling)
#  Elapsed Time: 0.453177 seconds (Warm-up)
#                0.62562 seconds (Sampling)
#                1.0788 seconds (Total)

SAMPLING FOR MODEL '18e6498c61bcea7cdfdc0535e9da24c2' NOW (CHAIN 2).
Chain 2, Iteration:    1 / 3000 [  0%]  (Warmup)
Chain 2, Iteration:  300 / 3000 [ 10%]  (Warmup)
Chain 2, Iteration:  600 / 3000 [ 20%]  (Warmup)
Chain 2, Iteration:  900 / 3000 [ 30%]  (Warmup)
Chain 2, Iteration: 1001 / 3000 [ 33%]  (Sampling)
Chain 2, Iteration: 1300 / 3000 [ 43%]  (Sampling)
Chain 2, Iteration: 1600 / 3000 [ 53%]  (Sampling)
Chain 2, Iteration: 1900 / 3000 [ 63%]  (Sampling)
Chain 2, Iteration: 2200 / 3000 [ 73%]  (Sampling)
Chain 2, Iteration: 2500 / 3000 [ 83%]  (Sampling)
Chain 2, Iteration: 2800 / 3000 [ 93%]  (Sampling)
Chain 2, Iteration: 3000 / 3000 [100%]  (Sampling)
#  Elapsed Time: 0.545787 seconds (Warm-up)
#                0.602373 seconds (Sampling)
#                1.14816 seconds (Total)

SAMPLING FOR MODEL '18e6498c61bcea7cdfdc0535e9da24c2' NOW (CHAIN 3).
Chain 3, Iteration:    1 / 3000 [  0%]  (Warmup)
Chain 3, Iteration:  300 / 3000 [ 10%]  (Warmup)
Chain 3, Iteration:  600 / 3000 [ 20%]  (Warmup)
Chain 3, Iteration:  900 / 3000 [ 30%]  (Warmup)
Chain 3, Iteration: 1001 / 3000 [ 33%]  (Sampling)
Chain 3, Iteration: 1300 / 3000 [ 43%]  (Sampling)
Chain 3, Iteration: 1600 / 3000 [ 53%]  (Sampling)
Chain 3, Iteration: 1900 / 3000 [ 63%]  (Sampling)
Chain 3, Iteration: 2200 / 3000 [ 73%]  (Sampling)
Chain 3, Iteration: 2500 / 3000 [ 83%]  (Sampling)
Chain 3, Iteration: 2800 / 3000 [ 93%]  (Sampling)
Chain 3, Iteration: 3000 / 3000 [100%]  (Sampling)
#  Elapsed Time: 0.475119 seconds (Warm-up)
#                0.637245 seconds (Sampling)
#                1.11236 seconds (Total)

print(data.rcb.rstan.d)

Inference for Stan model: 18e6498c61bcea7cdfdc0535e9da24c2.
3 chains, each with iter=3000; warmup=1000; thin=2;
post-warmup draws per chain=1000, total post-warmup draws=3000.

           mean se_mean    sd    2.5%     25%     50%     75%   97.5% n_eff Rhat
beta[1]   42.98    0.11  2.08   38.70   41.64   42.99   44.37   46.96   333 1.01
beta[2]   28.42    0.02  1.12   26.23   27.65   28.41   29.18   30.62  2244 1.00
beta[3]   40.12    0.02  1.12   37.90   39.40   40.12   40.90   42.20  2351 1.00
sigma      4.65    0.01  0.40    3.97    4.36    4.61    4.90    5.51  1901 1.00
sigma_B   11.93    0.03  1.60    9.26   10.79   11.79   12.90   15.57  2405 1.00
lp__    -312.99    0.14  5.47 -324.51 -316.52 -312.72 -309.00 -303.73  1441 1.01

Samples were drawn using NUTS(diag_e) at Wed Dec 23 11:24:24 2015.
For each parameter, n_eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor on split chains (at
convergence, Rhat=1).

data.rcb.rstan.d.df <- as.data.frame(extract(data.rcb.rstan.d))
head(data.rcb.rstan.d.df)

     beta.1    beta.2    beta.3     sigma   sigma_B      lp__
1  39.14560  31.09324  39.65505  4.573291 10.759842 -314.6550
2  44.86351  27.03882  39.13673  5.144527 10.361909 -311.5803
3  43.57541  29.97864  40.73320  5.101668  9.780195 -318.3110
4  45.90323  27.41146  39.27068  4.899846 12.238562 -313.2588
5  40.54387  27.54918  41.86662  4.718828 13.027700 -317.1884
6  41.80010  27.94253  40.45006  4.865833  9.818217 -321.6274

data.rcb.mcmc.d <- rstan:::as.mcmc.list.stanfit(data.rcb.rstan.d)
plyr::adply(as.matrix(data.rcb.rstan.d.df),2,MCMCsum)

       X1      Median         X0.        X25.        X50.        X75.       X100.       lower       upper     lower.1     upper.1
1  beta.1   42.990618   35.807843   41.644381   42.990618   44.365448   50.508390   38.929137   47.081249    41.26412   45.269817
2  beta.2   28.414124   23.722028   27.647317   28.414124   29.179416   32.416893   26.251619   30.623911    27.25986   29.452460
3  beta.3   40.121429   35.797484   39.399052   40.121429   40.900593   44.350100   38.055674   42.353027    39.11139   41.327864
4   sigma    4.612858    3.455549    4.355802    4.612858    4.898886    6.464649    3.939388    5.481554     4.17415    4.957871
5 sigma_B   11.792348    7.897597   10.788851   11.792348   12.897968   18.886186    9.090628   15.144342    10.14461   13.213148
6    lp__ -312.723587 -339.349432 -316.520568 -312.723587 -308.998518 -299.367259 -324.529867 -303.740895  -317.55765 -306.738854

Planned comparisons and pairwise tests

Because any number and type of comparisons can be derived from the posteriors without restriction, Bayesian analyses provide a natural framework for exploring additional contrasts and comparisons. For example, to compare all pairs of levels of A:

coefs <- data.rcb.r2jags.m$BUGSoutput$sims.list[[c('beta')]]
head(coefs)

         [,1]     [,2]     [,3]
[1,] 42.36122 28.03856 41.06767
[2,] 43.91985 28.54764 39.03881
[3,] 42.39406 27.01535 38.90414
[4,] 42.33675 28.00178 40.57500
[5,] 39.45086 29.89651 40.68564
[6,] 42.20516 25.63713 38.38961

newdata <- data.frame(A=levels(data.rcb$A))
# A Tukeys contrast matrix
library(multcomp)
tuk.mat <- contrMat(n=table(newdata$A), type="Tukey")
Xmat <- model.matrix(~A, data=newdata)
pairwise.mat <- tuk.mat %*% Xmat
pairwise.mat

      (Intercept) A2 A3
2 - 1           0  1  0
3 - 1           0  0  1
3 - 2           0 -1  1
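The structure of this matrix is easier to see when the Tukey contrasts are written out by hand. The sketch below does this in base R for a three-level factor; the hand-built matrix `tuk` is an illustrative stand-in for the `contrMat()` result, not a replacement for it.

```r
# Design matrix with one row per level of A (treatment contrasts)
lev  <- c("1", "2", "3")
Xmat <- model.matrix(~A, data = data.frame(A = factor(lev)))

# Pairwise differences between levels, written out by hand
tuk <- rbind("2 - 1" = c(-1, 1, 0),
             "3 - 1" = c(-1, 0, 1),
             "3 - 2" = c(0, -1, 1))

# Differences between rows of the design matrix:
# the intercept column cancels, leaving only the effect columns
pairwise <- tuk %*% Xmat
pairwise
```

Each row says which model coefficients must be combined to obtain that comparison. For example, `3 - 2` is the third coefficient minus the second.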

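library(coda)  # as.mcmc() and HPDinterval() come from coda
comps <- coefs %*% t(pairwise.mat)
MCMCsum <- function(x) {
    # median, quantiles, and 95% and 50% HPD intervals of a vector of draws
    data.frame(Median=median(x, na.rm=TRUE), t(quantile(x,na.rm=TRUE)),
               HPDinterval(as.mcmc(x)), HPDinterval(as.mcmc(x),prob=0.5))
}
(comps <- plyr::adply(comps,2,MCMCsum))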

     X1   Median       X0.     X25.     X50.     X75.    X100.     lower    upper  lower.1  upper.1
1 2 - 1 28.46831 23.861253 27.74467 28.46831 29.23208 32.74363 26.360184 30.73643 27.73428 29.21728
2 3 - 1 40.21721 35.148536 39.44456 40.21721 40.94479 45.57754 38.033142 42.42591 39.63044 41.10973
3 3 - 2 11.70102  8.092086 10.91325 11.70102 12.46882 15.67819  9.546795 14.05773 11.01591 12.56149
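Because each comparison is just a vector of posterior draws, other summaries are equally easy to obtain, such as the posterior probability that a difference exceeds zero. The sketch below uses simulated draws as a hypothetical stand-in for `coefs` (they are not the tutorial's MCMC output) and uses quantile intervals rather than the HPD intervals computed by `MCMCsum`.

```r
set.seed(1)
# Hypothetical stand-in for posterior draws of (Intercept, A2, A3);
# simulated here, NOT the tutorial's MCMC output
coefs <- cbind(rnorm(1000, 43, 2), rnorm(1000, 28, 1), rnorm(1000, 40, 1))

# Contrast "3 - 2" expressed on the model coefficients
contr <- c(0, -1, 1)

# One value of the contrast per posterior draw
diff32 <- as.vector(coefs %*% contr)

# Median, 95% quantile interval, and P(difference > 0)
median(diff32)
quantile(diff32, c(0.025, 0.975))
mean(diff32 > 0)
```

The same pattern applies to any row of the pairwise matrix, or to any other linear combination of the coefficients.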

library(ggplot2)
library(gridExtra)
library(grid)  # provides unit(); without it theme() fails with: could not find function "unit"
ggplot(comps, aes(x=X1, y=Median)) + coord_flip()+
    geom_hline(yintercept=0, linetype=2)+   # reference line at zero effect
    geom_errorbar(aes(ymin=lower, ymax=upper),width=0)+                # 95% HPD interval
    geom_errorbar(aes(ymin=lower.1, ymax=upper.1),width=0, size=1.25)+ # 50% HPD interval
    geom_point()+
    scale_y_continuous("Effect size (median)")+
    scale_x_discrete("Comparison (A)")+
    theme(panel.grid.major=element_blank(),
          panel.grid.minor=element_blank(),
          panel.background=element_blank(),
          panel.border = element_blank(),
          axis.line = element_line(),
          axis.line.y=element_blank(),
          axis.title.y=element_text(size=17, vjust=2,angle=90),
          axis.text.y=element_text(size=12),
          axis.title.x=element_text(size=17,vjust=-2),
          axis.text.x=element_text(size=10),
          strip.background=element_rect(fill="transparent", colour="black"),
          plot.margin=unit(c(0.5,0.5,2,2),"lines")
    )

RCB (repeated measures) ANOVA in R - continuous within

Scenario and Data

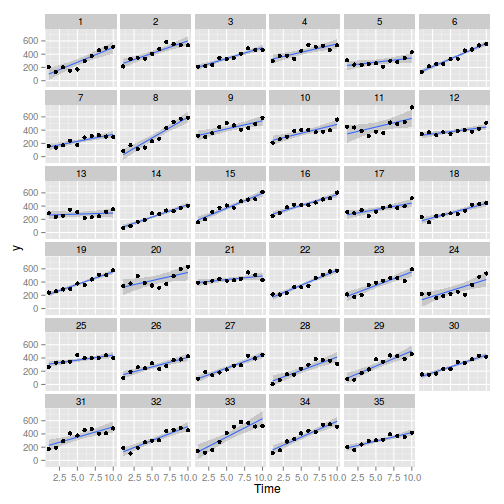

Imagine now that we have designed an experiment to investigate the effects of a continuous predictor ($x$, for example time) on a response ($y$). Again, the system that we intend to sample is spatially heterogeneous and thus will add a great deal of noise to the data that will make it difficult to detect a signal (impact of treatment).

Thus, in an attempt to constrain this variability, we again decide to apply a design (RCB) in which each of the levels of X (such as time) is measured within each of 35 blocks dispersed randomly throughout the landscape. As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. Consequently, I have folded (toggled) this section away.

- the number of times = 10

- the number of blocks containing treatments = 35

- mean slope (rate of change in response over time) = 60

- mean intercept (value of response at time 0) = 200

- the variability (standard deviation) between blocks of the same treatment = 12

- the variability (standard deviation) in slope = 5