Tutorial 10.1 - Frequency analysis

22 Jul 2018

Simple frequency analyses

The analyses described in previous tutorials and workshops have all involved response variables that implicitly represent normally distributed and continuous population responses. In this context, continuous indicates that (at least in theory), any value of measurement down to an infinite number of decimal places is possible.

Population responses can also be categorical such that the values could be logically or experimentally constrained to a set number of discrete possibilities. For example, individuals in a population can be categorized as either male or female, reaches in a stream could be classified as either riffles, runs or pools and salinity levels of sites might be categorized as either high, medium or low.

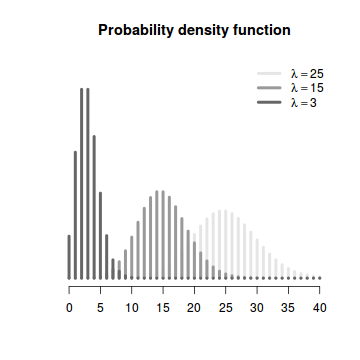

Typically, categorical response variables are tallied up to generate the frequency of replicates in each of the possible categories. From above, we would tally up the frequency of males and females, the number of riffles, runs and pools and the number of high, medium and low salinity sites. Hence, rather than model data in which a response was measured from each replicate in the sample (as was the case for previous analyses in this series), frequency analyses model data on the frequency of replicates in each possible category. Furthermore, frequency data follow a Poisson distribution rather than a normal distribution. The Poisson distribution is an asymmetrical (right-skewed) distribution in which only non-negative integer values are possible and whose variance is equal to its mean.

The Poisson distribution is defined as: $$p(x) = \frac{\lambda^x~e^{-\lambda}}{x!}$$ Note that the location AND shape are both determined by a single parameter ($\lambda$).

Since the mean and variance of a Poisson distribution are equal, distributions with higher expected values are shorter and wider than those with smaller means. Note that a Poisson distribution with an expected value less than 5 will be obviously asymmetrical, as a Poisson distribution is bounded to the left by zero. This has important implications for the reliability of frequency analyses when sample sizes are low.
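The relationship between the expected value and the shape of a Poisson distribution can be checked numerically. The following is a minimal sketch using only the Python standard library; the helper names (`poisson_pmf`, `poisson_summary`) and the truncation point `upper` are illustrative assumptions rather than part of this tutorial series.

```python
import math

def poisson_pmf(x, lam):
    """Probability of exactly x events when the expected count is lam."""
    # Work on the log scale so that large x does not overflow the factorial.
    return math.exp(x * math.log(lam) - lam - math.lgamma(x + 1))

def poisson_summary(lam, upper=200):
    """Mean, variance and skewness computed numerically from the pmf.

    `upper` truncates the (infinite) support; it is an illustrative choice
    that is ample for the small expected values used here.
    """
    probs = [poisson_pmf(x, lam) for x in range(upper)]
    mean = sum(x * p for x, p in enumerate(probs))
    var = sum((x - mean) ** 2 * p for x, p in enumerate(probs))
    skew = sum((x - mean) ** 3 * p for x, p in enumerate(probs)) / var ** 1.5
    return mean, var, skew

for lam in (2, 5, 25):
    mean, var, skew = poisson_summary(lam)
    print(f"lambda={lam}: mean={mean:.3f} variance={var:.3f} skewness={skew:.3f}")
```

The mean and variance should agree for each value of $\lambda$, while the skewness shrinks as $\lambda$ grows, which is why small expected frequencies are the problematic case.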

The frequencies expected for each category are determined by the size of the sample and the nature of the (null) hypothesis. For example, if the null hypothesis is that there are three times as many females as males in a population (ratio of 3:1), then a sample of 110 individuals would be expected to yield $0.75 \times 110 = 82.5$ females and $0.25 \times 110 = 27.5$ males.

The chi-square statistic

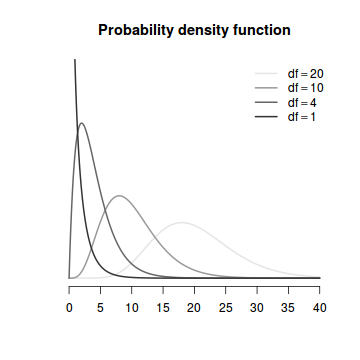

The degree of difference between the observed ($o$) and expected ($e$) sample category frequencies is represented by the chi-square ($\chi^2$) statistic. $$\chi^2 = \sum\frac{(o-e)^2}{e}$$ This is a relative measure that is standardized by the magnitude of the expected frequencies. When the null hypothesis is true (typically this represents the situation when there are no effects or patterns of interest in the population response category frequencies), and we have sampled in an unbiased manner, we might expect the observed category frequencies in the sample to be very similar (if not equal) to the expected frequencies and thus, the chi-square value should be close to zero. Likewise, repeated sampling from such a population is likely to yield chi-square values close to zero and large chi-square values should be relatively rare. As such, the chi-square statistic approximately follows a $\chi^2$ distribution (see figure below), a mathematical probability distribution representing the frequency (and thus probability) of all possible ranges of chi-square statistics that could result when the null hypothesis is true.
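As a rough illustration of the calculation, the sketch below derives the expected frequencies for the 3:1 example and then computes the chi-square statistic. The observed counts (90 females, 20 males) are hypothetical values invented for the example, and the function names are assumptions.

```python
def expected_frequencies(ratio, total):
    """Expected counts for a sample of size `total` under a null ratio such as (3, 1)."""
    ratio_sum = sum(ratio)
    return [total * r / ratio_sum for r in ratio]

def chi_square(observed, expected):
    """Sum of (o - e)^2 / e over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [90, 20]  # hypothetical counts of females and males (n = 110)
expected = expected_frequencies([3, 1], sum(observed))  # 82.5 and 27.5
statistic = chi_square(observed, expected)
print(expected, round(statistic, 3))
```

Because each squared deviation is divided by its expected frequency, the same deviation of 7.5 contributes far more for the males (expected 27.5) than for the females (expected 82.5).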

The $\chi^2$ distribution is defined as: $$p(x) = \frac{1}{2^{\frac{n}{2}}~\Gamma\left(\frac{n}{2}\right)}~x^{\frac{n}{2}-1}~e^{-\frac{x}{2}}$$ Note that the location AND shape are both determined by a single parameter (the degrees of freedom, $n$, calculated as the number of categories minus 1).

The $\chi^2$ distribution is an asymmetrical distribution bounded by zero and infinity and whose exact shape is determined by the degrees of freedom (calculated as the total number of categories minus 1). Note also that the peak of a chi-square distribution is not actually at zero unless there are two or fewer degrees of freedom; with more degrees of freedom, the peak lies at the degrees of freedom minus 2. Initially, this might seem counterintuitive. We might expect that when a null hypothesis is true, the most common chi-square value will be zero. However, the $\chi^2$ distribution takes into account the expected natural variability in a population as well as the nature of sampling (in which multiple samples should yield slightly different results). The more categories there are, the more likely that the observed and expected values will differ. It could be argued that when there are a large number of categories, samples in which all the observed frequencies are very close to the expected frequencies are a little suspicious and may represent dishonesty on the part of the researcher (indeed, the extraordinary conformity of Gregor Mendel’s pea experiments has been subjected to such skepticism).

By comparing any given sample chi-square statistic to its appropriate $\chi^2$ distribution, the probability that the observed category frequencies could have been collected from a population with a specific ratio of frequencies (for example 3:1) can be estimated. As is the case for most hypothesis tests, probabilities lower than 0.05 (5%) are considered unlikely and suggest that the sample is unlikely to have come from a population characterized by the null hypothesis. Chi-squared tests are typically one-tailed tests focusing on the right-hand tail, as we are primarily interested in the probability of obtaining large chi-square values. Nevertheless, it is also possible to focus on the left-hand tail so as to investigate whether the observed values are "too good to be true".
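The right-hand tail probability can be sketched without any statistical library by using the standard recurrence for the regularized upper incomplete gamma function. The helper `chi2_sf` below is a hypothetical name, and the statistic of 2.727 comes from an invented sample (90 females and 20 males tested against a 3:1 ratio); in practice a statistics package would normally supply this function.

```python
import math

def chi2_sf(statistic, df):
    """Right-hand tail probability P(X >= statistic) for a chi-square distribution.

    Starts from the closed forms for df = 1 (via erfc) or df = 2 (via exp) and
    steps the shape parameter up by one (two degrees of freedom) at a time.
    """
    if statistic <= 0:
        return 1.0
    z = statistic / 2.0
    if df % 2 == 1:
        a, q = 0.5, math.erfc(math.sqrt(z))
    else:
        a, q = 1.0, math.exp(-z)
    for _ in range((df - 1) // 2):
        q += z ** a * math.exp(-z) / math.gamma(a + 1)
        a += 1.0
    return q

statistic, df = 2.727, 1  # hypothetical two-category example, df = 2 - 1
p_right = chi2_sf(statistic, df)  # probability of a value this large or larger
p_left = 1.0 - p_right            # "too good to be true" tail
print(round(p_right, 4), round(p_left, 4))
```

For this invented sample the right-hand tail probability exceeds 0.05, so the null hypothesis of a 3:1 ratio would not be rejected.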

Assumptions

A chi-square statistic will approximately follow a $\chi^2$ distribution provided:

- All observations are classified independently of one another. The classification of one replicate should not be influenced by or related to the classification of other replicates. Random sampling should address this.

- No more than 20% of the expected frequencies are less than five. $\chi^2$ distributions do not reliably approximate the distribution of all possible chi-square values under those circumstances (expected frequencies less than five result in asymmetrical sampling distributions, since they must be truncated at zero, and thus potentially unrepresentative $\chi^2$ distributions). Since the expected values are a function of sample sizes, meeting this assumption is a matter of ensuring sufficient replication. When sample sizes or other circumstances beyond control lead to a violation of this assumption, numerous options are available (see the section on small sample sizes below).

Goodness of fit tests

Homogeneous frequencies tests

Homogeneous frequencies tests (often referred to as goodness of fit tests) are used to test null hypotheses that the category frequencies observed within a single variable could arise from a population displaying a specific ratio of frequencies. The null hypothesis (H0) is that the observed frequencies come from a population with a specific ratio of frequencies.

Distributional conformity - Kolmogorov-Smirnov tests

Strictly, goodness of fit tests are used to examine whether a frequency/sampling distribution is homogeneous with some declared distribution. For example, we might use a goodness of fit test to formally investigate whether the distribution of a response variable deviates substantially from a normal distribution. In this case, frequencies of responses in a set of pre-defined bin ranges are compared to those frequencies expected according to the mathematical model of a normal distribution. Since calculations of these expected frequencies also involve estimates of population mean and variance (both required to determine the mathematical formula), a two degree of freedom loss is incurred (hence $df=n-2$).

Contingency tables

Contingency tables are used to investigate the associations between two or more categorical variables. That is, they test whether the patterns of frequencies in one categorical variable differ between different levels of other categorical variable(s), or whether the variables could be independent of one another. In this way, they are analogous to interactions in factorial linear models (such as factorial ANOVA).

Contingency tables test the null hypothesis (H0) that the categorical variables are independent of (not associated with) one another. Note that analyses of contingency tables do not empirically distinguish between response and predictor variables (analogous to correlation), yet causality can be implied when logical and justified by interpretation. As an example, contingency tables could be used to investigate whether incidences of hair and eye color in a population are associated with one another (is one hair color type more commonly observed with a certain eye color?). In this case, neither hair color nor eye color influences the other; their incidences are both controlled by a separate set of unmeasured factors. By contrast, an association between the presence or absence of a species of frog and the level of salinity (high, medium or low) could imply that salinity affects the distribution of that species of frog - but not vice versa.

Sample replicates are cross-classified according to the levels (categories) of multiple categorical variables. The data are conceptualized as a table (hence the name) with the rows representing the levels of one variable and the columns the levels of the other variable(s) such that the cells represent the category combinations. The expected frequency of any given cell is calculated as: $$\frac{(row~total) \times (column~total)}{grand~total}$$ Thereafter, the chi-square statistic is calculated as described above and compared to a $\chi^2$ distribution with $(r-1)(c-1)$ degrees of freedom, where $r$ and $c$ are the number of rows and columns respectively.
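The expected cell frequencies, the chi-square statistic and the degrees of freedom for a two-way table can be sketched as follows. The table of frog presence/absence by salinity level contains hypothetical counts invented for the example, and the function names are assumptions.

```python
def expected_table(table):
    """Expected cell counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]

def contingency_chi_square(table):
    """Chi-square statistic and degrees of freedom for a two-way table."""
    expected = expected_table(table)
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df

# Hypothetical counts. Rows: frogs present, frogs absent.
# Columns: high, medium, low salinity.
frogs = [[4, 11, 17],
         [16, 9, 3]]
statistic, df = contingency_chi_square(frogs)
print(expected_table(frogs), round(statistic, 3), df)
```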

Contingency tables involving more than two variables have multiple interaction levels and thus multiple potential sources of non-independence. For example, in a three-way contingency table between variables A, B and C, there are four interactions (A:B, A:C, B:C and A:B:C). Such designs are arguably more appropriately analysed using log-linear models (see Tutorial 10.4 - Generalized linear models).

Odds ratios

The chi-square test provides an indication of whether or not the occurrences in one set of categories are likely to be associated with other sets of categories (an interaction between two or more categorical variables), yet does not provide any indication of how strongly the variables are associated (magnitude of the effect). Furthermore, for variables with more than two categories (e.g. high, medium, low), there is no indication of which category combinations contribute most to the associations. This role is provided by odds ratios which are essentially a measure of effect size.

Odds refer to the likelihood of a specific event or outcome occurring (such as a species being present) versus the likelihood of it not occurring (and thus the occurrence of an alternative outcome) and are calculated as $\pi_j/(1 - \pi_j)$ where $\pi_j$ refers to the probability of the event occurring. For example, we could calculate the odds of frogs being present in highly saline habitats as the probability of frogs being present divided by the probability of them being absent. Similarly, we could calculate the likelihood of frog presence (odds) within low salinity habitats.

The ratio of two of these likelihoods (odds ratio) can then be used to compare whether the likelihood of one outcome (frog presence) is the same for both categories (salinity levels). For example, is the likelihood of frogs being present in highly saline habitats the same as the likelihood of them being present in habitats with low levels of salinity? In so doing, the odds ratio is a measure of effect size that describes the strength of an association between pairs of cross-classification levels.

Although odds and thus odds ratios ($\theta$) are technically derived from probabilities, they can also be estimated using cell frequencies ($n$). \begin{align*} \theta &= \frac{n_{11}n_{22}}{n_{12}n_{21}} \hspace{1cm} OR~alternatively\\ \theta &= \frac{(n_{11}+0.5)(n_{22}+0.5)}{(n_{12}+0.5)(n_{21}+0.5)} \end{align*} where 0.5 is a small constant added to prevent division by zero. An odds ratio of one indicates that the event or occurrence (presence of frogs) is equally likely in both categories (high and low salinity habitats). Odds ratios greater than one signify that the event or occurrence is more likely in the first than in the second category, and vice versa for odds ratios less than one. For example, when comparing the presence/absence of frogs in low versus high salinity habitats, an odds ratio of 5.8 would suggest that the odds of frogs being present are 5.8 times higher in low salinity habitats than in those that are highly saline.
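Both forms of the estimate can be sketched in a few lines. The cell counts below are hypothetical and were chosen so that the uncorrected odds ratio equals the 5.8 used in the example; the function name and the `constant` argument are assumptions.

```python
def odds_ratio(table, constant=0.0):
    """Odds ratio for a 2 x 2 table [[n11, n12], [n21, n22]].

    `constant` is added to every cell; 0.5 gives the corrected form that
    avoids division by zero when a cell is empty.
    """
    (n11, n12), (n21, n22) = table
    return ((n11 + constant) * (n22 + constant)) / ((n12 + constant) * (n21 + constant))

# Hypothetical counts. Rows: low salinity, high salinity.
# Columns: frogs present, frogs absent.
frogs = [[29, 5],
         [10, 10]]
print(odds_ratio(frogs))                 # uncorrected estimate
print(odds_ratio(frogs, constant=0.5))   # corrected estimate

# A table with an empty cell is only estimable with the constant added.
print(odds_ratio([[8, 0], [3, 5]], constant=0.5))
```

With `constant=0.0`, a table containing a zero in cell $n_{12}$ or $n_{21}$ raises a `ZeroDivisionError`, which is the situation the 0.5 adjustment is intended to avoid.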

The distribution of odds ratios (which range from 0 to $\infty$) is not symmetrical around the null position (1), thereby precluding direct confidence interval and standard error calculations. Instead, these measures are calculated from log transformed (natural log) odds ratios (the distribution of which is approximately normal and centered around 0 when the null hypothesis is true) and then converted back into a linear scale by anti-logging. Odds ratios can only be calculated between category pairs from two variables and therefore from 2 x 2 contingency tables (tables with only two rows and two columns). However, tables with more rows and columns can be accommodated by splitting the table up into partial tables of specific category pair combinations. Odds ratios (and confidence intervals) are then calculated from each pairing, notwithstanding their lack of independence. For example, if there were three levels of salinity (high, medium and low), the odds ratios from three partial tables (high vs medium, high vs low, medium vs low) could be calculated.
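The log-scale calculation can be sketched as below. The standard error used here, $\sqrt{1/n_{11}+1/n_{12}+1/n_{21}+1/n_{22}}$, is the usual large-sample approximation for a log odds ratio; it is not given in this tutorial and is introduced as an assumption, as are the function name and the hypothetical cell counts.

```python
import math
from statistics import NormalDist

def odds_ratio_ci(table, level=0.95):
    """Odds ratio with a confidence interval built on the natural log scale.

    Assumes the large-sample standard error sqrt(sum of 1/n over the four cells),
    so every cell must be non-zero.
    """
    (n11, n12), (n21, n22) = table
    log_theta = math.log((n11 * n22) / (n12 * n21))
    se = math.sqrt(1 / n11 + 1 / n12 + 1 / n21 + 1 / n22)
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    # Anti-log the limits to return to the odds ratio scale.
    return (math.exp(log_theta),
            math.exp(log_theta - z * se),
            math.exp(log_theta + z * se))

# Hypothetical counts. Rows: low, high salinity. Columns: present, absent.
theta, lower, upper = odds_ratio_ci([[29, 5], [10, 10]])
print(round(theta, 2), round(lower, 2), round(upper, 2))
```

The resulting interval is symmetrical around the estimate on the log scale but asymmetrical on the odds ratio scale, with the upper limit much further from the estimate than the lower limit.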

Multi-way tables

Since odds ratios only explore pairwise patterns within two-way interactions, odds ratios for multi-way (three or more variables) tables are considerably more complex to calculate and interpret. Partial tables between two of the variables (e.g. frog presence/absence and high/low salinity) are constructed for each level of a third (season: summer/winter). This essentially removes the effect of the third variable by holding it constant. Associations in partial tables are therefore referred to as conditional associations, since the outcomes (associated or independent) from each partial table are explicitly conditional on the level of the third variable at which they were tested.
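A minimal sketch of conditional associations is to compute one odds ratio per partial table. The seasonal counts below are hypothetical values invented for the example, and the function names are assumptions.

```python
def odds_ratio(table):
    """Odds ratio for a 2 x 2 table [[n11, n12], [n21, n22]]."""
    (n11, n12), (n21, n22) = table
    return (n11 * n22) / (n12 * n21)

def conditional_odds_ratios(partial_tables):
    """One odds ratio per level of the third variable (the dictionary keys)."""
    return {level: odds_ratio(table) for level, table in partial_tables.items()}

# Hypothetical partial tables, one per season.
# Rows: low, high salinity. Columns: frogs present, frogs absent.
by_season = {
    "summer": [[20, 5], [10, 10]],
    "winter": [[6, 6], [4, 8]],
}
print(conditional_odds_ratios(by_season))
```

Each value describes the salinity by presence association within a single season only, so differing values across seasons would hint that the association depends on the third variable.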

Residuals

Specific contributions to a lack of independence (significant associations) can also be investigated by exploring the residuals. Recall that residuals are the difference between the observed values (frequencies) and those predicted or expected when the null hypothesis is true (no association between variables). Hence the magnitude of each residual indicates how much each of the cross classification combinations differs from what is expected. The residuals are typically standardized (by dividing by the square root of the expected frequencies) to enable individual residuals to be compared relative to one another. Large residuals (in magnitude) indicate large deviations from what is expected when the null hypothesis is true and thus also indicate large influences (contributions) to the overall association. The sign (+ or -) of the residual indicates whether the frequencies were higher or lower than expected.
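Standardized residuals of this kind, $(o-e)/\sqrt{e}$, can be sketched as follows. The table of counts is hypothetical and the function name is an assumption.

```python
import math

def standardized_residuals(table):
    """(observed - expected) / sqrt(expected) for every cell of a two-way table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    residuals = []
    for i, row in enumerate(table):
        residual_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            residual_row.append((observed - expected) / math.sqrt(expected))
        residuals.append(residual_row)
    return residuals

# Hypothetical counts. Rows: frogs present, frogs absent.
# Columns: high, medium, low salinity.
frogs = [[4, 11, 17],
         [16, 9, 3]]
for row in standardized_residuals(frogs):
    print([round(r, 2) for r in row])
```

The squared residuals sum to the chi-square statistic, so the cells with the largest absolute residuals are those contributing most to the overall association.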

G-test

An alternative to the chi-square test for goodness of fit and contingency table analyses is the G-test. The G-test is based on a log likelihood-ratio test. A log likelihood ratio is the natural log of the ratio of the maximum likelihoods of the alternative and null hypotheses. More simply, a log likelihood ratio test essentially examines how likely the collected data are under the alternative hypothesis (representing an effect) compared to how likely they are under the null hypothesis (no effect).

The $G^2$ statistic is calculated as: $$G^2=2\sum o~\ln\left(\frac{o}{e}\right)$$ where $o$ and $e$ are the observed and expected sample category frequencies respectively and $\ln$ denotes the natural logarithm (base e).
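A minimal sketch of the calculation, placed alongside the chi-square statistic for comparison, is given below. The observed counts are hypothetical (90 females and 20 males tested against a 3:1 ratio) and the function names are assumptions. Categories with an observed frequency of zero are treated as contributing nothing to the sum.

```python
import math

def g_statistic(observed, expected):
    """G^2 = 2 * sum(o * ln(o / e)); cells with o = 0 contribute zero."""
    return 2 * sum(o * math.log(o / e) for o, e in zip(observed, expected) if o > 0)

def chi_square(observed, expected):
    """Sum of (o - e)^2 / e, for comparison with G^2."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [90, 20]      # hypothetical counts of females and males
expected = [82.5, 27.5]  # 3:1 ratio applied to n = 110
print(round(g_statistic(observed, expected), 3),
      round(chi_square(observed, expected), 3))
```

The two statistics are similar but not identical for this sample, and both would be compared to a $\chi^2$ distribution with one degree of freedom.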

When the null hypothesis is true, the $G^2$ statistic approximately follows a theoretical $\chi^2$ distribution with the same degrees of freedom as the corresponding chi-square statistic. The $G^2$ statistic (which is twice the value of the log-likelihood ratio) is arguably more appropriate than the chi-square statistic as it is closely aligned with the theoretical basis of the $\chi^2$ distribution (for which the chi-squared statistic is a convenient approximation). For large sample sizes, the $G^2$ and $\chi^2$ statistics are equivalent. However, the former is a better approximation of the theoretical $\chi^2$ distribution when the difference between the observed and expected frequencies is less than the expected frequencies (i.e. $|o-e|\lt e$). Nevertheless, G-tests operate under the same assumptions as the chi-square statistic and thus very small sample sizes (expected values less than 5) are still problematic. G-tests have the additional advantage that they can be used additively with more complex designs and are thus more extensible than the chi-squared statistic.

Small sample sizes

As discussed previously, both the $\chi^2$ and $G^2$ statistics are poor approximations of theoretical $\chi^2$ distributions when sample sizes are very small. Under these circumstances a number of alternative options are available:

- If the issue has arisen due to a large number of category levels in one or more of the variables, some categories could be combined together.

- Fisher's exact test, which essentially calculates the probability of obtaining the cell frequencies given the observed marginal totals in 2 x 2 tables. The calculations involved in such tests are extremely tedious as they involve calculating probabilities from hypergeometric distributions (discrete distributions describing the number of successes from sequences of samples drawn without replacement) for all combinations of cell values that result in the given marginal totals.

- Yates' continuity correction calculates the test statistic after adding 0.5 to observed values that are less than their expected values and subtracting 0.5 from observed values that are greater than their expected values. Yates' correction can only be applied to designs with a single degree of freedom (goodness-of-fit designs with two categories or 2 x 2 tables) and for goodness-of-fit tests provides p-values that are closer to those of an exact binomial. However, it typically yields overinflated p-values in contingency tables and so has gone out of favour.

- Williams' correction is applied by dividing the test statistic by $1+\frac{p^2-1}{6nv}$, where $p$ is the number of categories, $n$ is the total sample size (total of observed frequencies) and $v$ is the number of degrees of freedom $(p - 1)$. Williams' corrections can be applied to designs with greater than one degree of freedom, and are considered marginally more appropriate than Yates' corrections if corrections are insisted upon.

- Randomization tests in which the sample test statistic (either $\chi^2$ or $G^2$) is compared to a probability distribution generated by repeatedly calculating the test statistic from an equivalent number of observations drawn from a population (sampling with replacement) with the specific ratio of category frequencies defined by the null hypothesis. Significance is thereafter determined by the proportion of the randomized test statistic values that are greater than or equal to the value of the statistic that is based on observed data.

- Log-linear modelling (as a form of generalized linear model)
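The randomization option listed above can be sketched for a goodness of fit design as follows. This is an illustration under stated assumptions rather than a definitive implementation: the observed counts are hypothetical, and the function names, the number of iterations and the random seed are arbitrary choices.

```python
import random

def chi_square(observed, expected):
    """Sum of (o - e)^2 / e over all categories."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

def randomization_p_value(observed, ratio, n_iter=2000, seed=1):
    """Proportion of simulated statistics >= the observed statistic.

    Each iteration draws sum(observed) replicates (with replacement) from a
    population whose category ratio is the one stated by the null hypothesis.
    """
    rng = random.Random(seed)
    total = sum(observed)
    ratio_sum = sum(ratio)
    expected = [total * r / ratio_sum for r in ratio]
    observed_stat = chi_square(observed, expected)
    categories = range(len(ratio))
    extreme = 0
    for _ in range(n_iter):
        draws = rng.choices(categories, weights=ratio, k=total)
        counts = [draws.count(c) for c in categories]
        if chi_square(counts, expected) >= observed_stat:
            extreme += 1
    return extreme / n_iter

# Hypothetical sample of 90 females and 20 males tested against a 3:1 ratio.
p_value = randomization_p_value([90, 20], [3, 1])
print(p_value)
```

Replacing `chi_square` with a $G^2$ calculation would give the equivalent randomization test for the G statistic, since only the test statistic changes and not the resampling scheme.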