Tutorial 9.4a - Split-plot and complex repeated measures ANOVA

27 Jul 2018

Overview

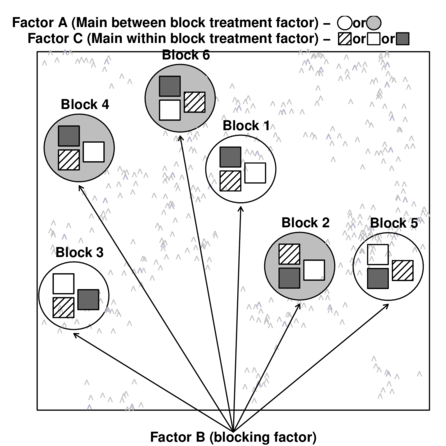

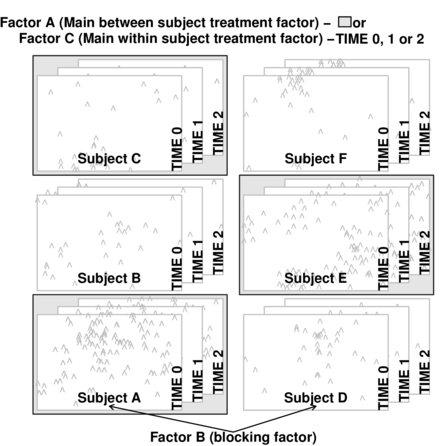

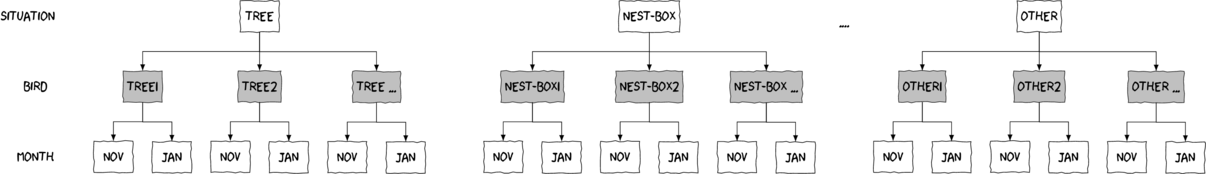

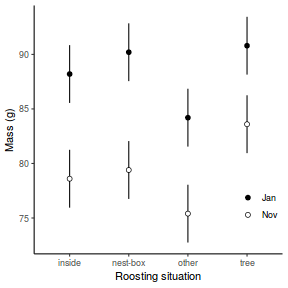

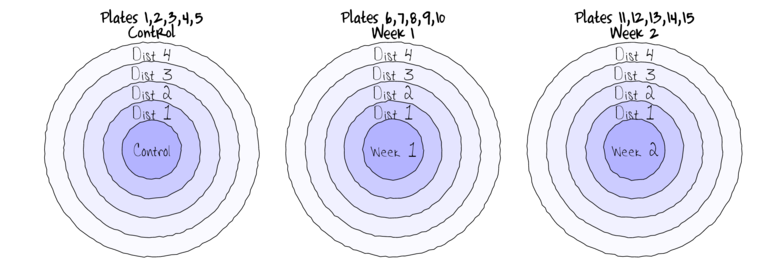

Split-plot designs (plots refer to agricultural field plots for which these designs were originally devised) extend unreplicated factorial (randomized complete block and simple repeated measures) designs by incorporating an additional factor whose levels are applied to entire blocks. Similarly, complex repeated measures designs are repeated measures designs in which there are different types of subjects. Split-plot and complex repeated measures designs are depicted diagrammatically in the following figure.


Consider the example of a randomized complete block presented at the start of Tutorial 9.3a. Blocks of four treatments (representing leaf packs subject to different aquatic taxa) were secured in numerous locations throughout a potentially heterogeneous stream. If some of those blocks had been placed in riffles, some in runs and some in pool habitats of the stream, the design becomes a split-plot design incorporating a between block factor (stream region: runs, riffles or pools) and a within block factor (leaf pack exposure type: microbial, macro invertebrate or vertebrate).

Furthermore, the design would enable us to investigate whether the roles that different organism scales play on the breakdown of leaf material in stream are consistent across each of the major regions of a stream (interaction between region and exposure type). Alternatively (or in addition), shading could be artificially applied to half of the blocks, thereby introducing a between block effect (whether the block is shaded or not).

Extending the repeated measures examples from Tutorial 9.3a, there might have been different populations (such as different species or histories) of rats or sharks. Any single subject (such as an individual shark or rat) can belong to only one of the population types, and thus this additional factor represents a between subject effect.

Null hypotheses

There are separate null hypotheses associated with each of the main factors (and interactions), although typically, null hypotheses associated with the random blocking factors are of little interest.

Factor A - the main between block treatment effect

Fixed (typical case)

H$_0(A)$: $\mu_1=\mu_2=...=\mu_i=\mu$ (the population group means of A are all equal)

The mean of population 1 is equal to that of population 2 and so on, and thus all population means are equal to an overall mean. No effect of A.

If the effect of the $i^{th}$ group is the difference between the $i^{th}$ group mean and the overall mean ($\alpha_i = \mu_i - \mu$) then the H$_0$ can alternatively be written as:

H$_0(A)$: $\alpha_1 = \alpha_2 = ... = \alpha_i = 0$ (the effect of each group equals zero)

If one or more of the $\alpha_i$ are different from zero (the response mean for this treatment differs from the overall response mean), the null hypothesis is not true indicating that the treatment does affect the response variable.
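The effects parameterisation above can be sketched numerically. The group means below are made-up values chosen purely for illustration (they are not estimates from any data set), and the overall mean is taken as the simple average of the group means, which assumes a balanced design.

```r
# Hypothetical population means for three levels of Factor A
mu_i <- c(a1 = 40, a2 = 70, a3 = 80)

# Overall mean (balanced case: the simple average of the group means)
mu <- mean(mu_i)

# Effect of each group: alpha_i = mu_i - mu
alpha <- mu_i - mu
print(round(alpha, 2))

# The effects sum to zero by construction; H0(A) states that each one is zero
sum_alpha <- sum(alpha)
```

Under H$_0(A)$ every element of `alpha` would be zero; here they clearly are not, so these hypothetical populations would differ.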

Random

H$_0(A)$: $\sigma_\alpha^2=0$ (population variance equals zero)

There is no added variance due to all possible levels of A.

Factor B - the blocking factor

Random (typical case)

H$_0(B)$: $\sigma_\beta^2=0$ (population variance equals zero)

There is no added variance due to all possible levels of B.

Fixed

H$_0(B)$: $\mu_{1}=\mu_{2}=...=\mu_{i}=\mu$ (the population group means of B are all equal)

H$_0(B)$: $\beta_{1} = \beta_{2}= ... = \beta_{i} = 0$ (the effect of each chosen B group equals zero)

Factor C - the main within block treatment effect

Fixed (typical case)

H$_0(C)$: $\mu_1=\mu_2=...=\mu_k=\mu$ (the population group means of C (pooling B) are all equal)

The mean of population 1 (pooling blocks) is equal to that of population 2 and so on, and thus all population means are equal to an overall mean. No effect of C within each block (Model 2) or over and above the effect of blocks.

If the effect of the $k^{th}$ group is the difference between the $k^{th}$ group mean and the overall mean ($\gamma_k = \mu_k - \mu$) then the H$_0$ can alternatively be written as:

H$_0(C)$: $\gamma_1 = \gamma_2 = ... = \gamma_k = 0$ (the effect of each group equals zero)

If one or more of the $\gamma_k$ are different from zero (the response mean for this treatment differs from the overall response mean), the null hypothesis is not true indicating that the treatment does affect the response variable.

Random

H$_0(C)$: $\sigma_\gamma^2=0$ (population variance equals zero)

There is no added variance due to all possible levels of C (pooling B).

Factor AC interaction - the main within block interaction effect

Fixed (typical case)

H$_0(A\times C)$: $\mu_{ik}-\mu_i-\mu_k+\mu=0$ (the population means of the AC combinations (pooling B) deviate from the overall mean only by the main effects of A and C)

There are no effects in addition to the main effects and the overall mean.

If the effect of the $ik^{th}$ group is the difference between the $ik^{th}$ group mean and the value expected from the main effects and the overall mean ($\alpha\gamma_{ik} = \mu_{ik} - \mu_i - \mu_k + \mu$) then the H$_0$ can alternatively be written as:

H$_0(AC)$: $\alpha\gamma_{11} = \alpha\gamma_{12} = ... = \alpha\gamma_{ik} = 0$ (the interaction is equal to zero)
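As a rough numerical sketch of what the interaction effects represent, the cell means used for the simulated split-plot data later in this tutorial can be decomposed by hand. Treating those cell means as known population means (and the design as balanced) is an assumption made only for this illustration.

```r
# Cell means from the simulation later in this tutorial (rows = A, columns = C)
AC.means <- rbind(c(40, 70, 80), c(35, 50, 70), c(35, 40, 45))

mu   <- mean(AC.means)       # overall mean
mu_i <- rowMeans(AC.means)   # marginal means of A
mu_k <- colMeans(AC.means)   # marginal means of C

# Interaction effects: mu_ik - mu_i - mu_k + mu
AC.int <- sweep(sweep(AC.means, 1, mu_i), 2, mu_k) + mu
print(round(AC.int, 2))
```

Each row and each column of `AC.int` sums to zero. Under H$_0(AC)$ every entry would be zero; the non-zero entries here reflect the interaction that was built into the simulated cell means.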

Random

H$_0(AC)$: $\sigma_{\alpha\gamma}^2=0$ (population variance equals zero)

There is no added variance due to any interaction effects (pooling B).

Factor BC interaction - the block by within block interaction effect

Random (typical case)

H$_0(BC)$: $\sigma_{\beta\gamma}^2=0$ (population variance equals zero)

There is no added variance due to any block by within block interaction effects. That is, the patterns amongst the levels of C are consistent across all the blocks.

Unless each of the levels of Factor C is replicated (occurs more than once) within each block, the null hypothesis about this effect cannot be tested.

Linear models

The linear models for three and four factor partly nested designs are:

One between ($\alpha$), one within ($\gamma$) block effect

$y_{ijkl}=\mu+\alpha_i+\beta_{j}+\gamma_k+\alpha\gamma_{ik}+\beta\gamma_{jk} + \varepsilon_{ijkl}$

Two between ($\alpha$, $\gamma$), one within ($\delta$) block effect

\begin{align*}
y_{ijklm}=&\mu+\alpha_i+\gamma_j+\alpha\gamma_{ij}+\beta_{k}+\delta_l+\alpha\delta_{il}+\gamma\delta_{jl} + \alpha\gamma\delta_{ijl}+\varepsilon_{ijklm} &\text{(Model 2 - Additive)}\\
y_{ijklm}=&\mu+\alpha_i+\gamma_j+\alpha\gamma_{ij}+\beta_{k}+\delta_l+\alpha\delta_{il}+\gamma\delta_{jl} +\alpha\gamma\delta_{ijl} + \\
&\beta\delta_{kl}+\beta\alpha\delta_{kil}+\beta\gamma\delta_{kjl}+\beta\alpha\gamma\delta_{kijl}+\varepsilon_{ijklm} &\text{(Model 1 - Non-additive)}
\end{align*}

One between ($\alpha$), two within ($\gamma$, $\delta$) block effects

\begin{align*}
y_{ijklm}=&\mu+\alpha_i+\beta_{j}+\gamma_{k}+\delta_l+\gamma\delta_{kl}+\alpha\gamma_{ik}+\alpha\delta_{il}+\alpha\gamma\delta_{ikl}+\varepsilon_{ijklm} &\text{(Model 2 - Additive)}\\
y_{ijklm}=&\mu+\alpha_i+\beta_{j}+\gamma_{k}+\beta\gamma_{jk}+\delta_l+\beta\delta_{jl}+\gamma\delta_{kl}+\beta\gamma\delta_{jkl}+\alpha\gamma_{ik}+\\
&\alpha\delta_{il}+\alpha\gamma\delta_{ikl}+\varepsilon_{ijklm} &\text{(Model 1 - Non-additive)}
\end{align*}

where $\mu$ is the overall mean, $\beta$ is the effect of the Blocking Factor B and $\varepsilon$ is the random unexplained or residual component.

Analysis of Variance

The construction of appropriate F-ratios generally follows the rules and conventions established in Tutorial 8.7a to Tutorial 9.3a, albeit with additional complexity. The following tables document the (classically considered) appropriate numerator and denominator mean squares and degrees of freedom for each null hypothesis for a range of two and three factor partly nested designs. As stated in previous tutorials, there is considerable debate as to what the appropriate denominator degrees of freedom should be and indeed whether it is even possible to estimate the denominator degrees of freedom in hierarchical designs.

F-ratios (each entry gives numerator/denominator mean squares by row number)

| | Factor | d.f | A&C fixed, B random (Restricted) | A&C fixed, B random (Unrestricted) | A fixed, B&C random (Restricted) | A fixed, B&C random (Unrestricted) | C fixed, A&B random (Restricted) | C fixed, A&B random (Unrestricted) | A,B&C random |
|---|---|---|---|---|---|---|---|---|---|
| 1 | A | $a-1$ | 1/2 | 1/2 | 1/(2+4-5) | 1/(2+4-5) | 1/2 | ? | 1/(2+4-5) |
| 2 | B$^\prime$(A) | $(b-1)a$ | 2/5 | 2/5 | 2/5 | 2/5 | 2/5 | | |
| 3 | C | $(c-1)$ | 3/5 | 3/5 | 3/5 | 3/4 | 3/4 | 3/4 | 3/4 |
| 4 | AC | $(c-1)(a-1)$ | 4/5 | 4/5 | 4/5 | 4/5 | 4/5 | 4/5 | 4/5 |
| 5 | Resid (=CxB$^\prime$(A)) | $(c-1)(b-1)a$ | | | | | | | |

R syntax (A&C fixed, B random)

Balanced

summary(aov(y~A*C+Error(B), data))

Balanced or unbalanced

library(nlme)
summary(lme(y~A*C, random=~1|B, data))
summary(lme(y~A*C, random=~1|B, data), correlation=...)
anova(lme(y~A*C, random=~1|B, data))
#OR
library(lme4)
summary(lmer(y~(1|B)+A*C, data))

Variance components

library(nlme)
summary(lme(y~1, random=~1|B/(A*C), data))
#OR
library(lme4)
summary(lmer(y~(1|B)+(1|A*C), data))
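To make the F-ratio table more concrete, the following is a minimal sketch (base R only) that fits the balanced A&C fixed, B random case to a small simulated data set and rebuilds the F-ratios 1/2, 3/5 and 4/5 from the mean squares in each error stratum. The design sizes, effect sizes and object names (`dat`, `between`, `within`) are assumptions made up for this illustration.

```r
set.seed(42)
# Hypothetical balanced design: 3 levels of A, 4 blocks per level of A, 3 levels of C
dat <- expand.grid(C = factor(1:3), Block = factor(1:12))
dat$A <- factor(rep(1:3, each = 4)[as.integer(dat$Block)])
block.eff <- rnorm(nlevels(dat$Block), sd = 12)
dat$y <- 40 + 10 * as.integer(dat$A) + 5 * as.integer(dat$C) +
  block.eff[as.integer(dat$Block)] + rnorm(nrow(dat), sd = 5)

fit <- aov(y ~ A * C + Error(Block), data = dat)
tabs <- summary(fit)
between <- tabs[["Error: Block"]][[1]]   # rows: A, Residuals (= B'(A))
within  <- tabs[["Error: Within"]][[1]]  # rows: C, A:C, Residuals

# F-ratios rebuilt from the mean squares (table rows 1/2, 3/5 and 4/5)
F_A  <- between[1, "Mean Sq"] / between[2, "Mean Sq"]
F_C  <- within[1, "Mean Sq"] / within[3, "Mean Sq"]
F_AC <- within[2, "Mean Sq"] / within[3, "Mean Sq"]
print(c(A = F_A, C = F_C, AC = F_AC))
```

The hand-built ratios should agree with the `F value` column reported by `summary()`, which illustrates that the between block effect (A) is tested against the variation among blocks rather than against the within block residual.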

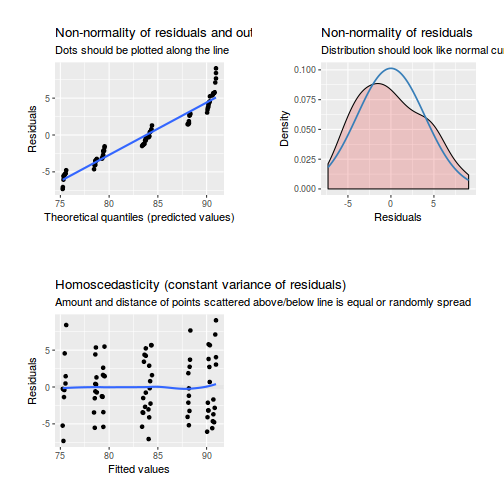

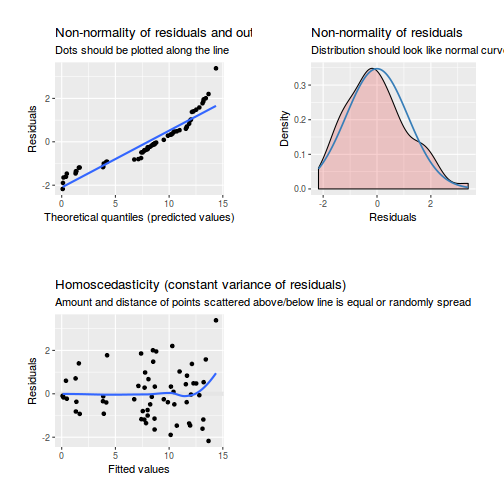

Assumptions

As partly nested designs share elements in common with each of nested, factorial and unreplicated factorial designs, they also share similar assumptions and implications to these other designs. Readers should also consult the sections on assumptions in Tutorial 7.6a, Tutorial 9.2a and Tutorial 9.3a. Specifically, hypothesis tests assume that:

- the appropriate residuals are normally distributed. Boxplots using the appropriate scale of replication (reflecting the appropriate residuals/F-ratio denominator, see Tables above) should be used to explore normality. Scale transformations are often useful.

- the appropriate residuals are equally varied. Boxplots and plots of means against variance (using the appropriate scale of replication) should be used to explore the spread of values. Residual plots should reveal no patterns. Scale transformations are often useful.

- the appropriate residuals are independent of one another. Critically, experimental units within blocks/subjects should be adequately spaced temporally and spatially to restrict contamination or carryover effects. Non-independence resulting from the hierarchical design should be accounted for.

- the variance/covariance matrix displays sphericity (strictly, the variance-covariance matrix must display a very specific pattern of sphericity in which both variances and covariances are equal (compound symmetry); however, an F-ratio will still reliably follow an F distribution provided basic sphericity holds). This assumption is likely to be met only if the treatment levels within each block can be randomly ordered. This assumption can be managed by either adjusting the sensitivity of the affected F-ratios or applying linear mixed effects modelling to the design.

- there are no block by within block interactions. Such interactions render non-significant within block effects difficult to interpret. Unless we assume that there are no block by within block interactions, non-significant within block effects could be due to either an absence of a treatment effect or opposing effects within different blocks. As these block by within block interactions are unreplicated, they can neither be formally tested nor is it possible to perform main effects tests to diagnose non-significant within block effects.

$R^2$ approximations

Whilst $R^2$ is a popular goodness of fit metric in simple linear models, its use is rarely extended to (generalized) linear mixed effects models. The reasons for this include:

- there are numerous ways that $R^2$ could be defined for mixed effects models, some of which can result in values that are either difficult to interpret or illogical (for example negative $R^2$).

- perhaps as a consequence, software implementation is also largely lacking.

Nakagawa and Schielzeth (2013) discuss the issues associated with $R^2$ calculations and suggest a series of simple calculations to yield sensible $R^2$ values from mixed effects models.

An $R^2$ value quantifies the proportion of variance explained by a model (or by terms in a model) - the higher the value, the better the model (or term) fit.

Nakagawa and Schielzeth (2013) offered up two $R^2$ for mixed effects models:

- Marginal $R^2$ - the proportion of total variance explained by the fixed effects. $$ \text{Marginal}~R^2 = \frac{\sigma^2_f}{\sigma^2_f + \sum^z_l{\sigma^2_l} + \sigma^2_d + \sigma^2_e} $$ where $\sigma^2_f$ is the variance of the fitted values (i.e. $\sigma^2_f = var(\mathbf{X\beta})$) on the link scale, $\sum^z_l{\sigma^2_l}$ is the sum of the variance components of the $z$ random effects, $\sigma^2_e$ is the residual (additive dispersion) variance and $\sigma^2_d$ is the distribution-specific variance that applies when using non-Gaussian distributions.

- Conditional $R^2$ - the proportion of the total variance collectively explained by the fixed and random factors $$ \text{Conditional}~R^2 = \frac{\sigma^2_f + \sum^z_l{\sigma^2_l}}{\sigma^2_f + \sum^z_l{\sigma^2_l} + \sigma^2_d + \sigma^2_e} $$
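For a Gaussian random intercepts model these two quantities can be calculated by hand. The following is a rough sketch using `nlme` on a small simulated data set; it assumes that $\sigma^2_e$ is the residual variance, that $\sigma^2_d = 0$ (Gaussian errors) and that there is a single random effect (Block). The data and object names are made up for illustration.

```r
library(nlme)

set.seed(1)
# Hypothetical split-plot style data: 12 blocks, A between blocks, C within blocks
dat <- expand.grid(C = factor(1:3), Block = factor(1:12))
dat$A <- factor(rep(1:3, each = 4)[as.integer(dat$Block)])
dat$y <- 40 + 10 * as.integer(dat$A) + 5 * as.integer(dat$C) +
  rnorm(12, sd = 12)[as.integer(dat$Block)] + rnorm(nrow(dat), sd = 5)

fit <- lme(y ~ A * C, random = ~1 | Block, data = dat)

# Variance of the fixed-effects predictions, var(X %*% beta)
sigma2_f <- var(predict(fit, level = 0))
# Variance of the random intercepts (Block)
sigma2_block <- as.numeric(VarCorr(fit)["(Intercept)", "Variance"])
# Residual variance
sigma2_e <- fit$sigma^2

total <- sigma2_f + sigma2_block + sigma2_e
R2_marginal <- sigma2_f / total
R2_conditional <- (sigma2_f + sigma2_block) / total
print(c(marginal = R2_marginal, conditional = R2_conditional))
```

The conditional value can never be smaller than the marginal value, since it adds the block variance to the numerator.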

Split-plot and complex repeated analysis in R

Split-plot design

Scenario and Data

Imagine we have designed an experiment in which we intend to measure a response ($y$) to a treatment (three levels; 'a1', 'a2' and 'a3'). Unfortunately, the system that we intend to sample is spatially heterogeneous and thus will add a great deal of noise to the data that will make it difficult to detect a signal (impact of treatment).

Thus in an attempt to constrain this variability you decide to apply a design (RCB) in which each of the treatments is applied within each of 36 blocks dispersed randomly throughout the landscape. As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

- the number of between block treatments (A) = 3

- the number of blocks = 36

- the number of within block treatments (C) = 3

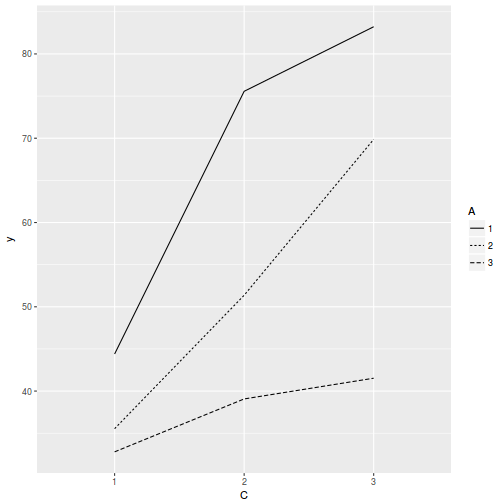

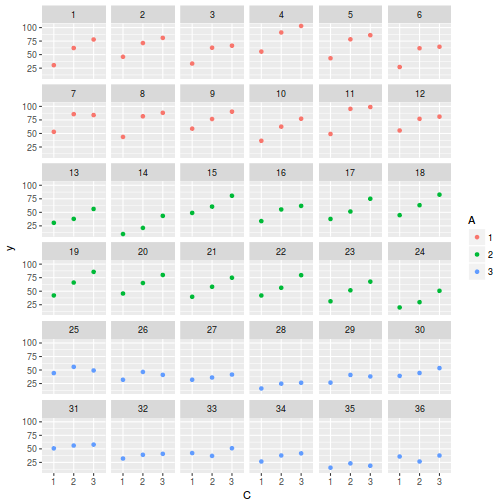

- the mean of the treatments = 40, 70 and 80 respectively

- the variability (standard deviation) between blocks of the same treatment = 12

- the variability (standard deviation) between treatments within blocks = 5

library(ggplot2)

set.seed(1)
nA <- 3
nC <- 3
nBlock <- 36
sigma <- 5
sigma.block <- 12
n <- nBlock * nC
Block <- gl(nBlock, k = 1)
C <- gl(nC, k = 1)
## Specify the cell means
AC.means <- (rbind(c(40, 70, 80), c(35, 50, 70), c(35, 40, 45)))
## Convert these to effects
X <- model.matrix(~A * C, data = expand.grid(A = gl(3, k = 1), C = gl(3, k = 1)))
AC <- as.vector(AC.means)
AC.effects <- solve(X, AC)
A <- gl(nA, nBlock, n)
dt <- expand.grid(C = C, Block = Block)
dt <- data.frame(dt, A)
Xmat <- cbind(model.matrix(~-1 + Block, data = dt), model.matrix(~A * C, data = dt))
block.effects <- rnorm(n = nBlock, mean = 0, sd = sigma.block)
all.effects <- c(block.effects, AC.effects)
lin.pred <- Xmat %*% all.effects
## the quadrat observations (within sites) are drawn from normal distributions
## with means according to the site means and standard deviations of 5
y <- rnorm(n, lin.pred, sigma)
data.splt <- data.frame(y = y, A = A, dt)
head(data.splt)  #print out the first six rows of the data set

         y A C Block A.1
1 30.51110 1 1     1   1
2 62.18599 1 2     1   1
3 77.98268 1 3     1   1
4 46.01960 1 1     2   1
5 71.38110 1 2     2   1
6 80.93691 1 3     2   1

tapply(data.splt$y, data.splt$A, mean)

       1        2        3 
67.73243 52.25684 37.79359 

tapply(data.splt$y, data.splt$C, mean)

       1        2        3 
37.57486 55.33468 64.87331 

replications(y ~ A * C + Error(Block), data.splt)

  A   C A:C 
 36  36  12 

ggplot(data.splt, aes(y = y, x = C, linetype = A, group = A)) + geom_line(stat = "summary", fun.y = mean)

ggplot(data.splt, aes(y = y, x = C, color = A)) + geom_point() + facet_wrap(~Block)

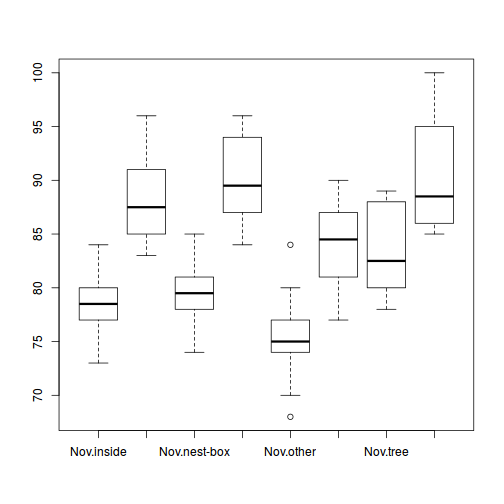

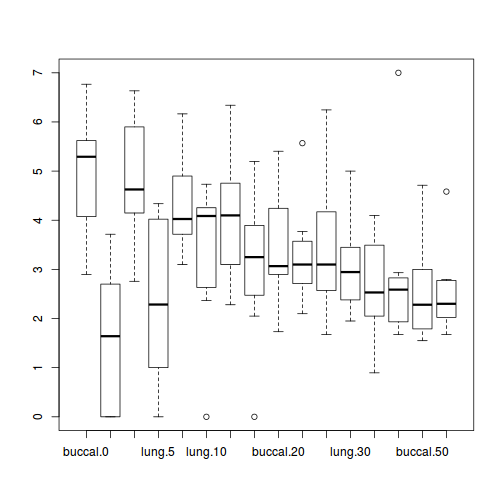

Exploratory data analysis

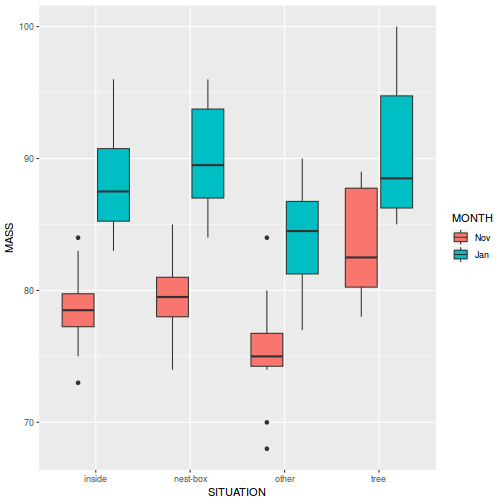

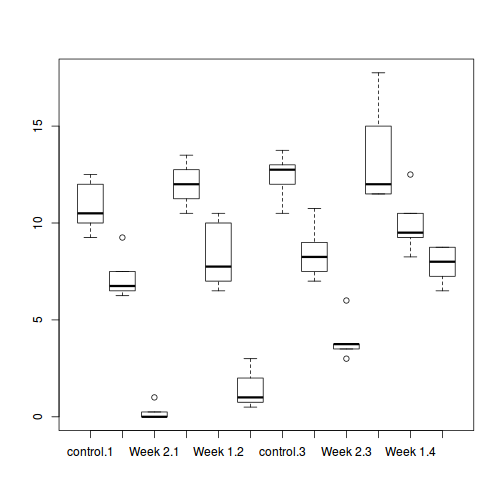

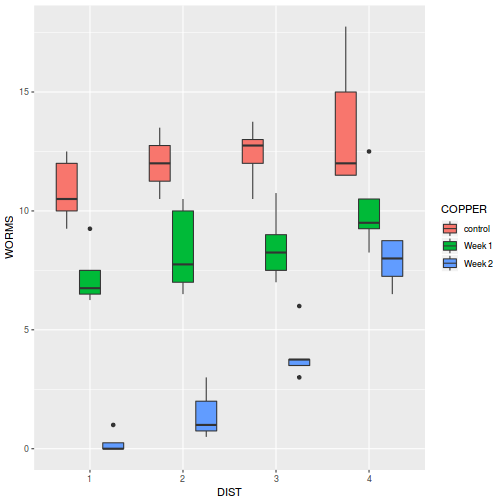

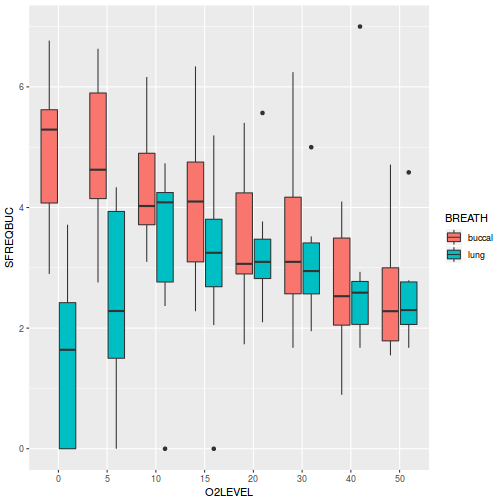

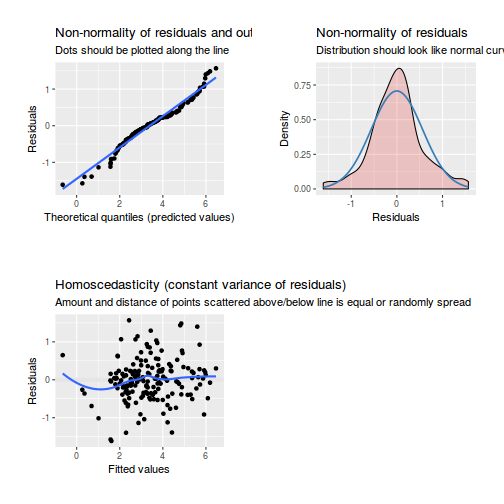

Normality and Homogeneity of variance

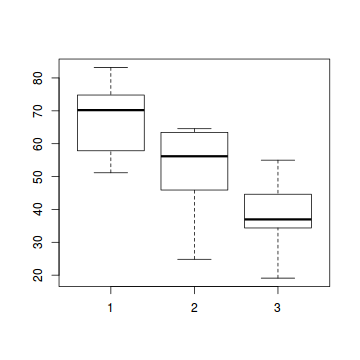

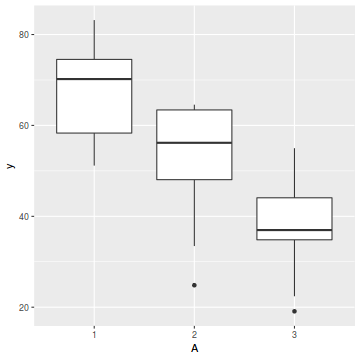

# check between plot effects
library(plyr)
boxplot(y ~ A, ddply(data.splt, ~A + Block, summarise, y = mean(y)))

# OR
library(ggplot2)
ggplot(ddply(data.splt, ~A + Block, summarise, y = mean(y)), aes(y = y, x = A)) + geom_boxplot()

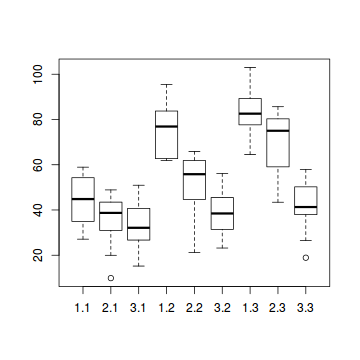

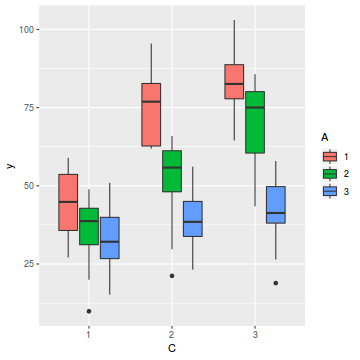

# check within plot effects
boxplot(y ~ A * C, data.splt)

# OR
ggplot(data.splt, aes(y = y, x = C, fill = A)) + geom_boxplot()

Conclusions:

- there is no evidence that the response variable is consistently non-normal across all populations - each boxplot is approximately symmetrical

- there is no evidence that variance (as estimated by the height of the boxplots) differs between the populations. More importantly, there is no evidence of a relationship between mean and variance - the height of boxplots does not increase with increasing position along the y-axis. Hence there is no evidence of non-homogeneity

- had there been evidence of non-normality or non-homogeneity, we could transform the scale of the response variable (to address normality etc). Note transformations should be applied to the entire response variable (not just those populations that are skewed).
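The mean-variance relationship that the boxplots are used to judge can also be summarised numerically. The sketch below (base R, simulated data with made-up sizes and effects) calculates the mean and variance of each A by C cell and then the correlation between them; a strong positive correlation would hint that variance increases with the mean.

```r
set.seed(7)
# Hypothetical data: 12 replicates of each A x C combination, constant sd of 5
dat <- expand.grid(rep = 1:12, A = factor(1:3), C = factor(1:3))
dat$y <- rnorm(nrow(dat),
               mean = 30 + 10 * as.integer(dat$A) + 5 * as.integer(dat$C),
               sd = 5)

# Cell means and cell variances (3 x 3 tables, rows = A, columns = C)
cell.mean <- with(dat, tapply(y, list(A = A, C = C), mean))
cell.var  <- with(dat, tapply(y, list(A = A, C = C), var))

# Correlation between cell means and cell variances
mv.cor <- cor(as.vector(cell.mean), as.vector(cell.var))
print(round(mv.cor, 2))
```

With only nine cells the correlation is a crude guide at best, so it is probably most useful alongside (rather than instead of) the boxplots.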

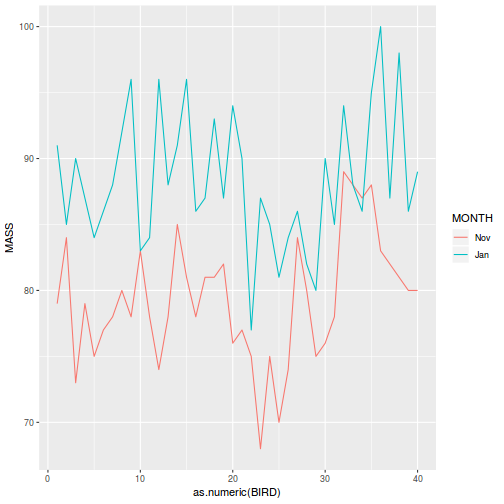

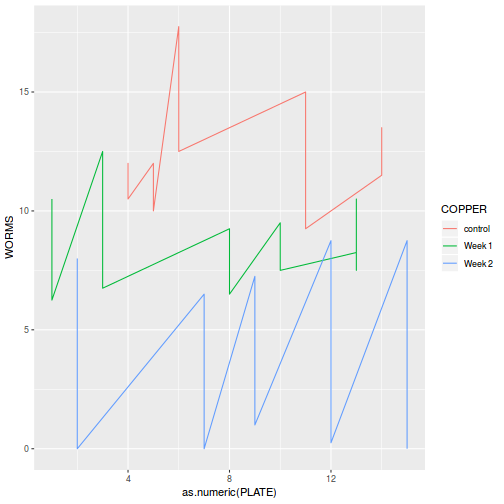

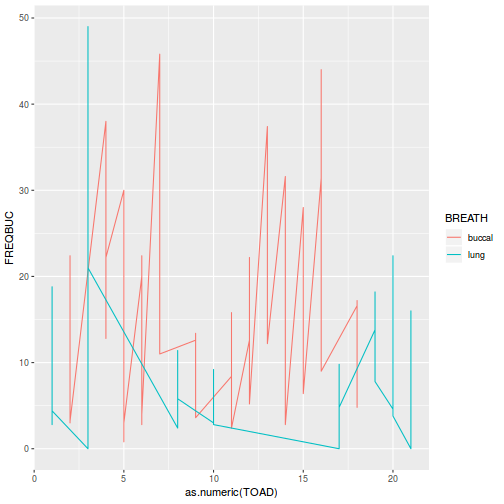

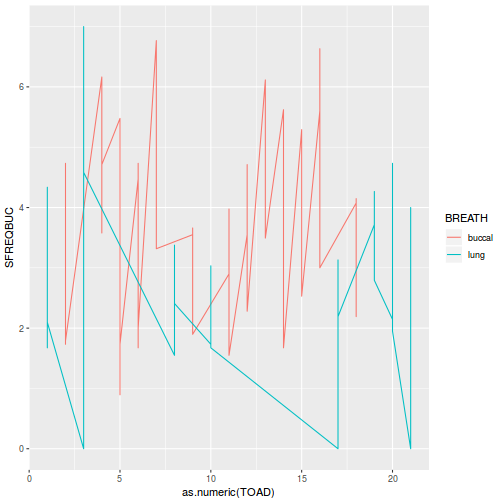

Block by within-Block interaction

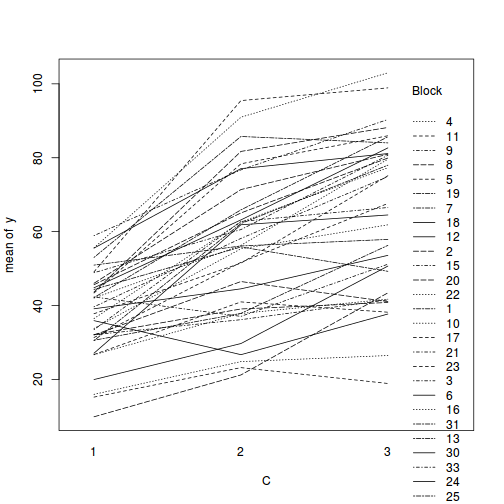

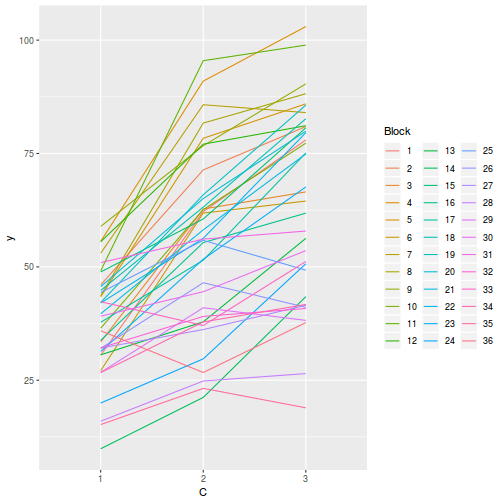

library(car)
with(data.splt, interaction.plot(C, Block, y))

# OR with ggplot
library(ggplot2)
ggplot(data.splt, aes(y = y, x = C, group = Block, color = Block)) + geom_line() + guides(color = guide_legend(ncol = 3))

library(car)
residualPlots(lm(y ~ Block + A * C, data.splt))

           Test stat Pr(>|t|)
Block             NA       NA
A                 NA       NA
C                 NA       NA
Tukey test     1.539    0.124

# the Tukey's non-additivity test by itself can be obtained via an internal function
# within the car package
car:::tukeyNonaddTest(lm(y ~ Block + A * C, data.splt))

     Test    Pvalue 
1.5394292 0.1236996 

Conclusions:

- there is no visual or inferential evidence of any major interactions between Block and the within-Block effect (C). Any trends appear to be reasonably consistent between Blocks.
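For readers curious about what Tukey's test for non-additivity is doing, it can be approximated in base R by adding the squared fitted values of the additive model as a single extra predictor and testing that one degree of freedom term. This is only a sketch of the general idea on simulated data (the data and object names are hypothetical), and it reports an F test, whereas `car` reports the equivalent single-term test on a different scale.

```r
set.seed(3)
# Hypothetical blocked data with purely additive Block and C effects
dat <- expand.grid(C = factor(1:3), Block = factor(1:12))
dat$y <- 50 + 5 * as.integer(dat$C) +
  rnorm(12, sd = 12)[as.integer(dat$Block)] + rnorm(nrow(dat), sd = 5)

# Additive model, then the same model plus the squared fitted values
additive <- lm(y ~ Block + C, data = dat)
dat$fit.sq <- fitted(additive)^2
nonadditive <- lm(y ~ Block + C + fit.sq, data = dat)

# One degree of freedom test for non-additivity
tukey <- anova(additive, nonadditive)
p.value <- tukey[2, "Pr(>F)"]
print(tukey)
```

A small p-value would suggest that the Block and C effects do not combine additively, that is, that there is some block by within block interaction.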

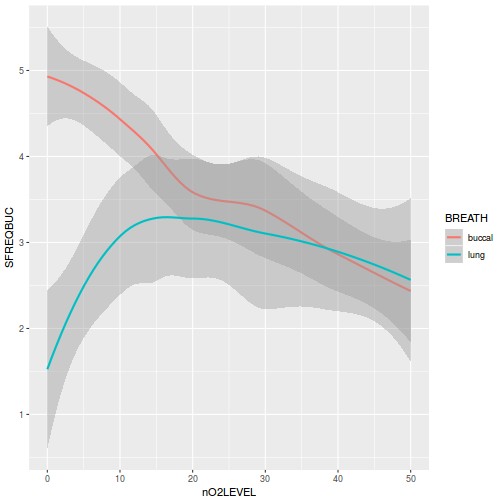

Sphericity

Prior to the use of maximum likelihood and mixed effects models (that permit specifying alternative variance-covariance structures), randomized block and repeated measures ANOVAs assumed that the variance-covariance matrix followed a specific pattern called 'Sphericity'. For repeated measures designs in which the within subject effects could not be randomized, the variance-covariance matrix was unlikely to meet sphericity. The only way of compensating for this was to estimate the degree to which sphericity was violated (via an epsilon value) and then use this epsilon to reduce the degrees of freedom of the model (thereby making p-values more conservative).

Since the levels of C in the current example were randomly assigned within each Block, we have no reason to expect that the variance-covariance will deviate substantially from sphericity. Nevertheless, we can calculate the epsilon sphericity values to confirm this. Recall that the closer the epsilon value is to 1, the greater the degree of sphericity compliance.

Note that sphericity only applies to within block effects.

library(biology)

Error in library(biology): there is no package called 'biology'

epsi.GG.HF(aov(y ~ Error(Block) + C, data = data.splt))

Error in eval(expr, envir, enclos): could not find function "epsi.GG.HF"

Conclusions:

- Both the Greenhouse-Geisser and Huynh-Feldt epsilons are reasonably close to one (they are both greater than 0.8), hence there is no evidence of correlation dependency structures.
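Since the `biology` package may not be available, the Greenhouse-Geisser epsilon can also be calculated directly from the variance-covariance matrix of the within block levels. The following is a minimal sketch in base R; the function name `gg_epsilon` and the simulated data are my own assumptions, and for a real split-plot the covariance matrix would more properly be pooled within the levels of the between block factor.

```r
# Greenhouse-Geisser epsilon from a k x k covariance matrix S
gg_epsilon <- function(S) {
  k <- ncol(S)
  # orthonormal contrasts: Helmert contrasts with columns scaled to unit length
  H <- contr.helmert(k)
  H <- sweep(H, 2, sqrt(colSums(H^2)), "/")
  V <- t(H) %*% S %*% H
  sum(diag(V))^2 / ((k - 1) * sum(V^2))
}

# Compound symmetry (equal variances, equal covariances) gives epsilon = 1
S_cs <- matrix(4, 3, 3)
diag(S_cs) <- 10
eps_cs <- gg_epsilon(S_cs)

# Hypothetical wide-format data: 36 blocks (rows) by 3 levels of C (columns)
set.seed(11)
wide <- matrix(rnorm(36 * 3, sd = 5), ncol = 3) + rnorm(36, sd = 12)
eps_hat <- gg_epsilon(cov(wide))
print(c(compound.symmetry = eps_cs, simulated = eps_hat))
```

Epsilon is bounded between $1/(k-1)$ (maximal departure from sphericity) and 1 (sphericity holds), where $k$ is the number of within block levels.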

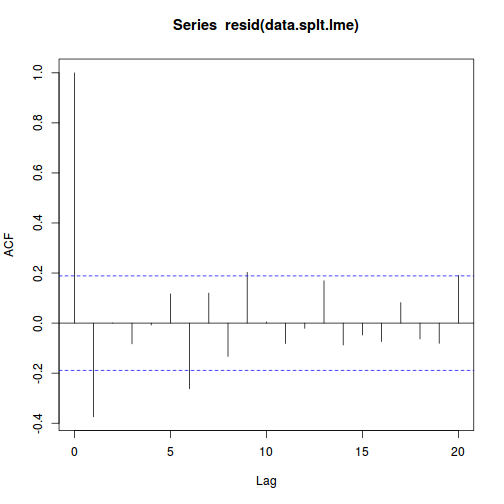

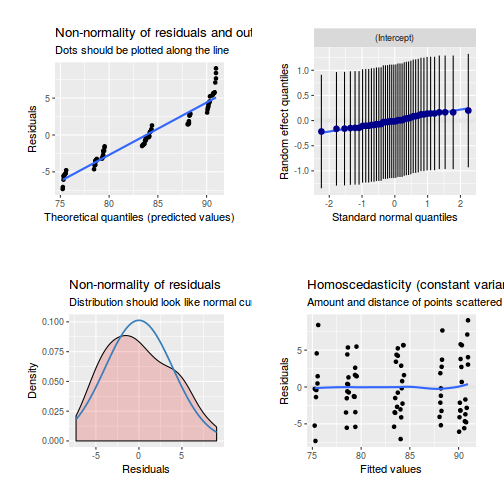

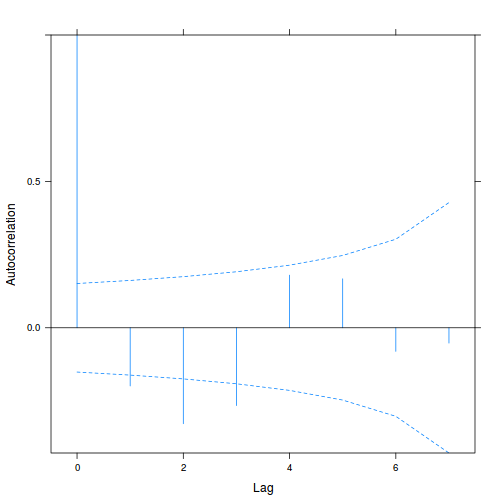

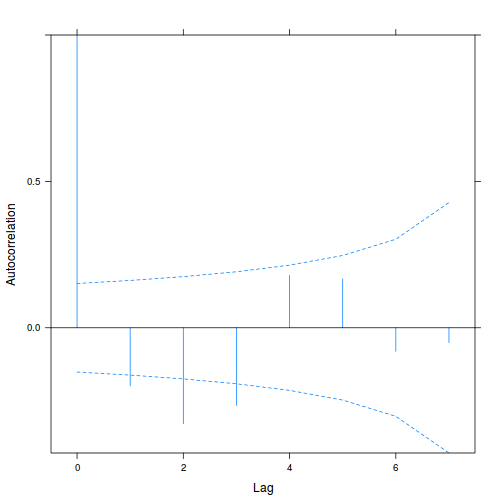

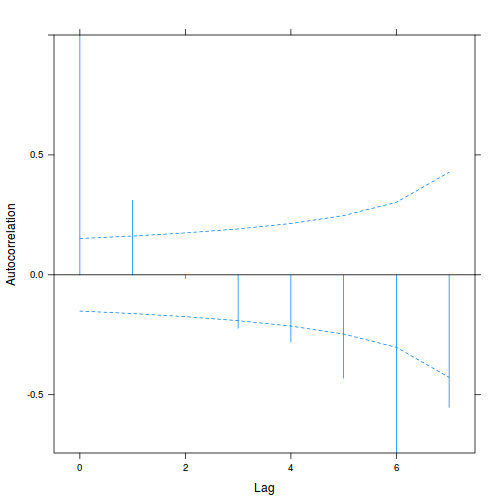

Alternatively (and preferentially), we can explore whether there are any autocorrelation patterns in the residuals.

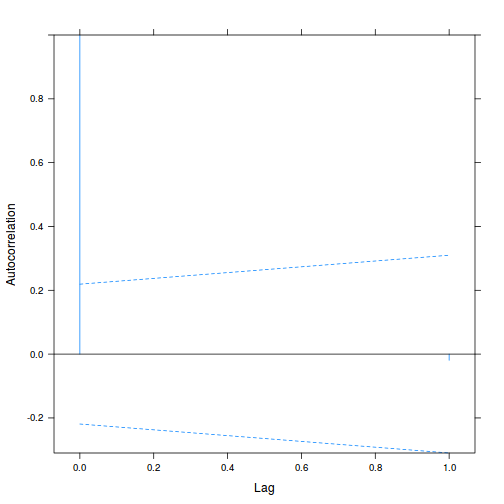

library(nlme)
data.splt.lme <- lme(y ~ A * C, random = ~1 | Block, data = data.splt)
acf(resid(data.splt.lme))

Conclusions:

- The autocorrelation function (ACF), at a range of lags up to 20, indicates that there is no strong pattern of a contagious structure running through the residuals. Note the ACF at lag 0 will always be 1 - the correlation of residuals with themselves must be 100%.
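To clarify what each bar of an ACF plot represents, the lag 1 autocorrelation can be reproduced by hand. This sketch uses a vector of simulated independent values (a stand-in for model residuals, not the residuals of the model above) and compares the manual calculation against `acf()`.

```r
set.seed(5)
r <- rnorm(108)   # hypothetical stand-in for a vector of residuals
n <- length(r)
m <- mean(r)

# Lag 1 autocorrelation: covariance between values one step apart,
# divided by the overall sum of squares
lag1.manual <- sum((r[-1] - m) * (r[-n] - m)) / sum((r - m)^2)

# The same quantity as calculated by acf() (element 1 is lag 0, element 2 is lag 1)
lag1.acf <- acf(r, plot = FALSE)$acf[2]
print(c(manual = lag1.manual, acf = lag1.acf))
```

Note that the order of the residuals matters: the ACF is only meaningful when consecutive rows correspond to consecutive positions in time (or space) within blocks.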

Model fitting or statistical analysis

There are numerous ways of fitting split-plot models in R.

Linear mixed effects modelling via the lme() function. This method is one of the original implementations in which separate variance-covariance matrices are incorporated into an iterative sequence of (generalized least squares) and maximum likelihood (actually REML) estimates of 'fixed' and 'random' effects.

Rather than fit just a single, simple random intercepts model, it is common to fit other related alternative models and explore which model fits the data best. For example, we could also fit a random intercepts and slope model. We could also explore other variance-covariance structures (autocorrelation or heterogeneity).

library(nlme)
# random intercept
data.splt.lme <- lme(y ~ A * C, random = ~1 | Block, data.splt, method = "REML")
# random intercept/slope
data.splt.lme1 <- lme(y ~ A * C, random = ~A | Block, data.splt, method = "REML")
anova(data.splt.lme, data.splt.lme1)

               Model df      AIC      BIC    logLik   Test   L.Ratio p-value
data.splt.lme      1 11 714.3519 742.8982 -346.1759                         
data.splt.lme1     2 16 723.5937 765.1156 -345.7968 1 vs 2 0.7582165  0.9796
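The likelihood ratio test in this table can be reconstructed from the reported log-likelihoods and degrees of freedom. The short sketch below simply re-uses the numbers printed above (the variable names are my own) and, allowing for rounding, should reproduce the L.Ratio, p-value and AIC columns.

```r
# Values taken from the anova() table above
logLik0 <- -346.1759; df0 <- 11   # random intercept model
logLik1 <- -345.7968; df1 <- 16   # random intercept/slope model

# Likelihood ratio statistic and its chi-square p-value
L.Ratio <- 2 * (logLik1 - logLik0)
p.value <- pchisq(L.Ratio, df = df1 - df0, lower.tail = FALSE)

# AIC = -2 * logLik + 2 * (number of estimated parameters)
AIC0 <- -2 * logLik0 + 2 * df0
AIC1 <- -2 * logLik1 + 2 * df1

print(round(c(L.Ratio = L.Ratio, p.value = p.value, AIC0 = AIC0, AIC1 = AIC1), 4))
```

The five extra parameters of the random intercept/slope model buy almost no improvement in log-likelihood, which is why both the likelihood ratio test and AIC favour the simpler random intercepts model.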

More modern linear mixed effects modelling via the lmer() function. In contrast to the lme() function, the lmer() function supports more complex combinations of random effects (such as crossed random effects). Unfortunately, it does not yet (and probably never will) have a mechanism to support specifying the alternative covariance structures needed to accommodate spatial and temporal autocorrelation.

library(lme4)
data.splt.lmer <- lmer(y ~ A * C + (1 | Block), data.splt, REML = TRUE)  #random intercept
data.splt.lmer1 <- lmer(y ~ A * C + (A | Block), data.splt, REML = TRUE,
    control = lmerControl(check.nobs.vs.nRE = "ignore"))  #random intercept/slope
anova(data.splt.lmer, data.splt.lmer1)

Data: data.splt

Models:

data.splt.lmer: y ~ A * C + (1 | Block)

data.splt.lmer1: y ~ A * C + (A | Block)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

data.splt.lmer 11 743.50 773.00 -360.75 721.50

data.splt.lmer1 16 752.67 795.59 -360.34 720.67 0.8271 5 0.9753

Mixed effects models can also be fit using the Template Model Builder automatic differentiation engine via the glmmTMB() function from a package with the same name. glmmTMB is able to fit similar models to lmer, yet can also incorporate more complex features such as zero inflation and temporal autocorrelation. Random effects are assumed to be Gaussian on the scale of the linear predictor and are integrated out via Laplace approximation. On the downside, at the time of writing REML is not available for this technique and nor is Gauss-Hermite quadrature (which can be useful when dealing with small sample sizes and non-Gaussian errors).

library(glmmTMB)
data.splt.glmmTMB <- glmmTMB(y ~ A * C + (1 | Block), data.splt)  #random intercept
data.splt.glmmTMB1 <- glmmTMB(y ~ A * C + (A | Block), data.splt)  #random intercept/slope
anova(data.splt.glmmTMB, data.splt.glmmTMB1)

Data: data.splt

Models:

data.splt.glmmTMB: y ~ A * C + (1 | Block), zi=~0, disp=~1

data.splt.glmmTMB1: y ~ A * C + (A | Block), zi=~0, disp=~1

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

data.splt.glmmTMB 11 743.5 773 -360.75 721.5

data.splt.glmmTMB1 16 5

Traditional OLS with multiple error strata using the aov() function. The aov() function is actually a wrapper for a specialized lm() call that defines multiple residual terms and thus adds some properties and class attributes to the fitted model that modify the output. This option is illustrated purely as a link to the past; it is no longer considered as robust or flexible as more modern techniques.

data.splt.aov <- aov(y ~ A * C + Error(Block), data.splt)

Model evaluation

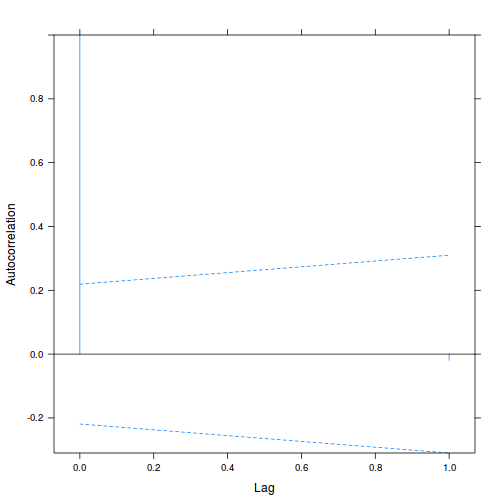

Temporal autocorrelation

Before proceeding any further we should really explore whether we are likely to have an issue with temporal autocorrelation. The models assume that there are no temporal dependency issues. A good way to explore this is to examine the autocorrelation function. Essentially, this involves looking at the degree of correlation between residuals associated with times of incrementally greater temporal lags.

In the model comparisons above, adding random slopes did not improve on the model with just random intercepts. Had the random intercept/slope model fit better, it is possible that this would have been due to temporal autocorrelation. Such a model might fit temporally autocorrelated data better, but it would not actually address the underlying issue. Consequently, it is important to explore autocorrelation for both models and, if there is any evidence of temporal autocorrelation, refit both models.

We can visualize the issue via linear mixed model formulation: $$ \begin{align} y_i &\sim{} N(\mu_i, \sigma^2)\\ \mu_i &= \mathbf{X}\boldsymbol{\beta} + \mathbf{Z}\mathbf{b}\\ \mathbf{b} &\sim{} MVN(0, \Sigma)\\ \end{align} $$ With a bit of rearranging, $\mathbf{b}$ and $\Sigma$ can represent a combination of random intercepts ($\mathbf{b}_0$) and autocorrelated residuals ($\Sigma_{AR}$): $$ \begin{align} b_{ij} &= \mathbf{b}_{0,ij} + \varepsilon_{ij}\\ \varepsilon_i &\sim{} \Sigma_{AR}\\ \end{align} $$ where $i$ are the blocks and $j$ are the observations.

The current simulated data has only three observations within each Block (one for each of the C treatments). Consequently, temporal autocorrelation is not that meaningful. Nevertheless, for other data sets it could be an issue and should be investigated. Tutorial 9.3a provides a demonstration on how to explore temporal autocorrelation.
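To give a feel for what a combination of random intercepts and first order autoregressive (AR1) residuals implies, the following sketch builds the implied variance-covariance matrix for the three observations within a single block. The standard deviations (12 between blocks, 5 within blocks) are those used to simulate the data in this tutorial; the autocorrelation of 0.6 and the helper name `ar1_cor` are purely hypothetical.

```r
# AR1 correlation matrix: correlation decays as rho^(lag between observations)
ar1_cor <- function(n, rho) rho^abs(outer(seq_len(n), seq_len(n), "-"))

sigma.block <- 12   # sd of the random intercepts (blocks)
sigma <- 5          # sd of the residuals
rho <- 0.6          # hypothetical lag 1 autocorrelation

R <- ar1_cor(3, rho)

# Covariance of the three observations within a block:
# shared block variance plus AR1 structured residual variance
V <- sigma.block^2 * matrix(1, 3, 3) + sigma^2 * R
print(R)
print(V)
```

Observations that are further apart within a block share less residual covariance, whereas the block variance contributes equally to every pair. With `rho = 0` the matrix reduces to compound symmetry.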

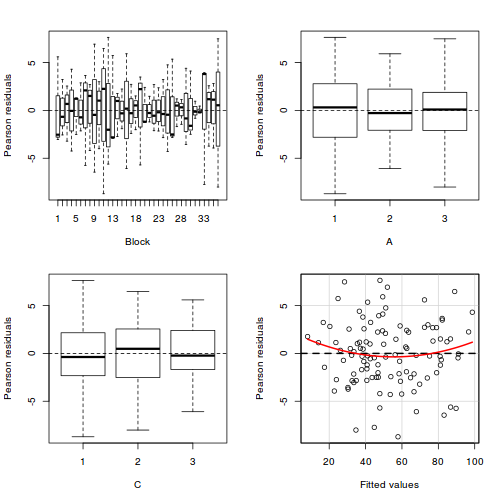

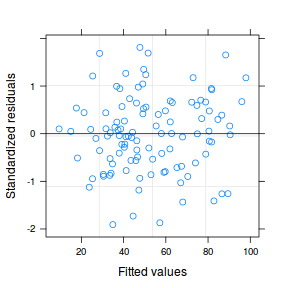

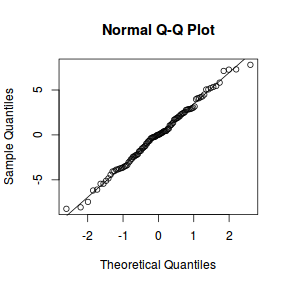

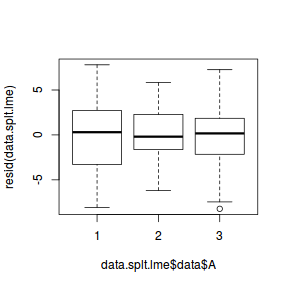

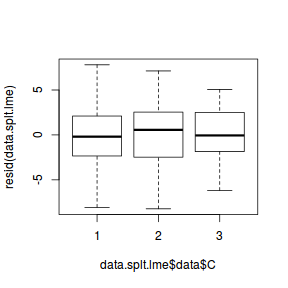

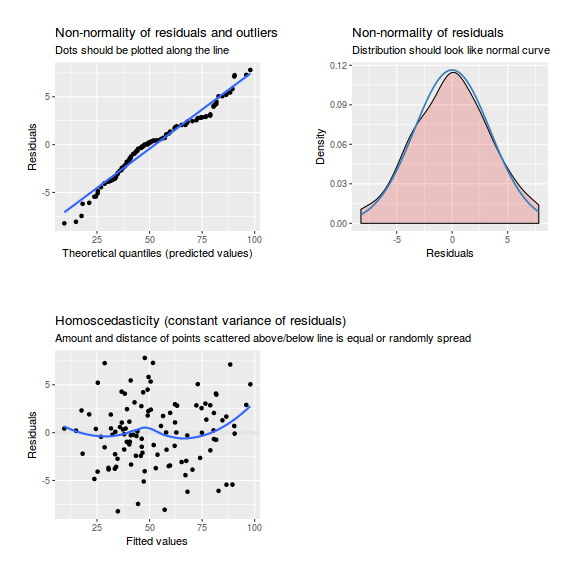

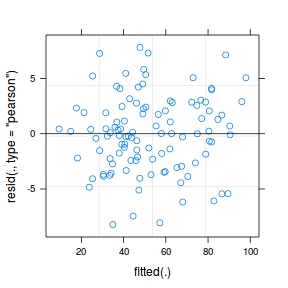

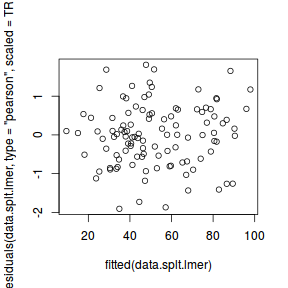

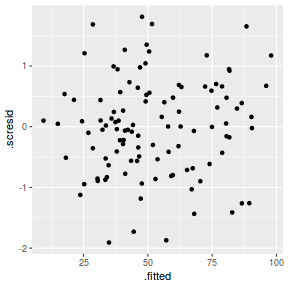

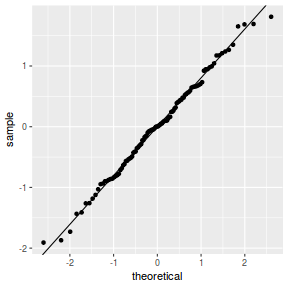

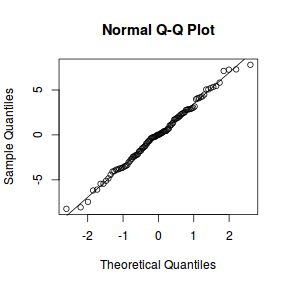

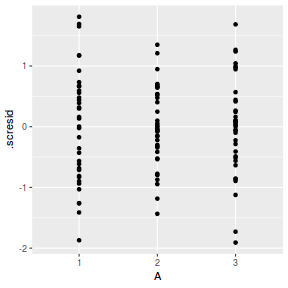

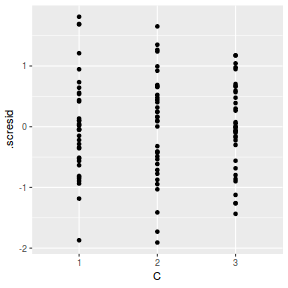

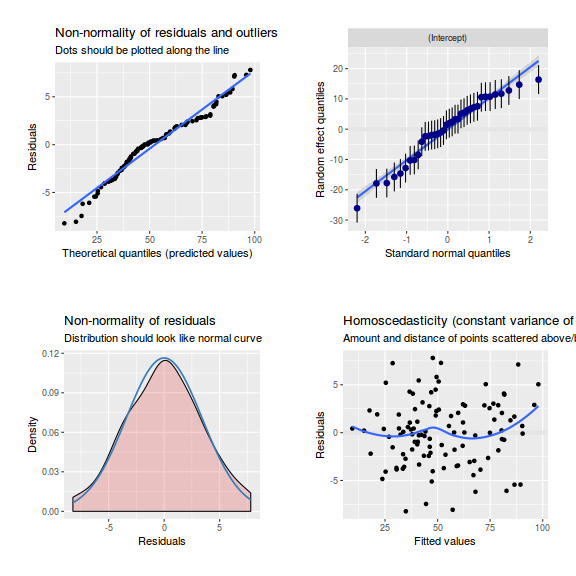

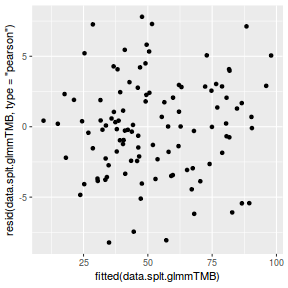

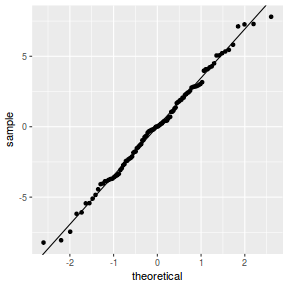

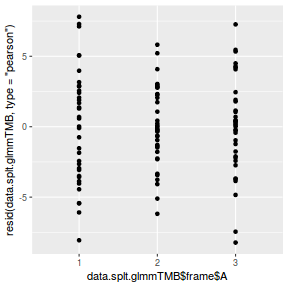

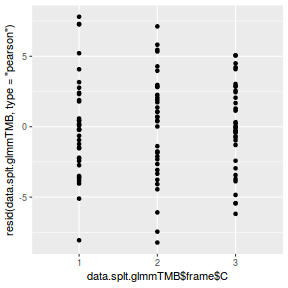

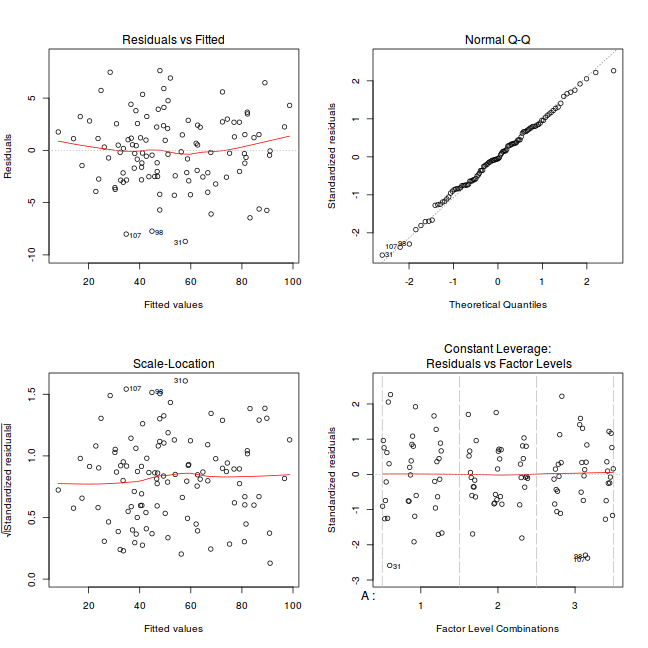

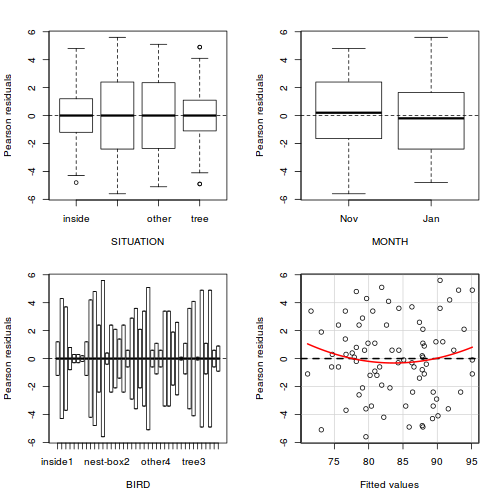

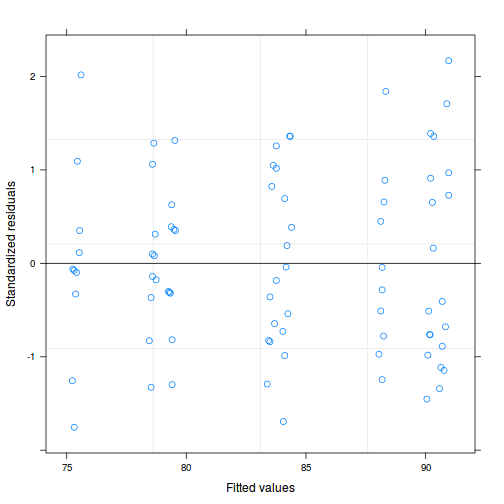

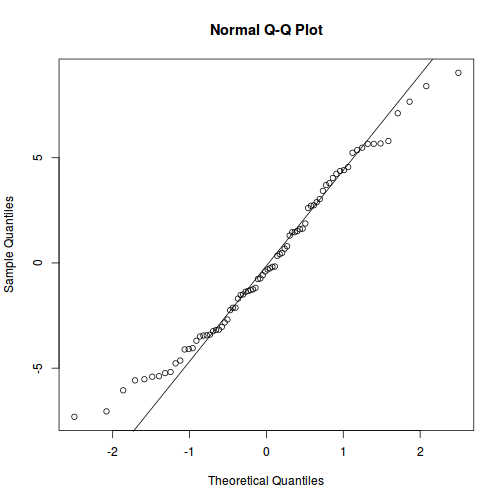

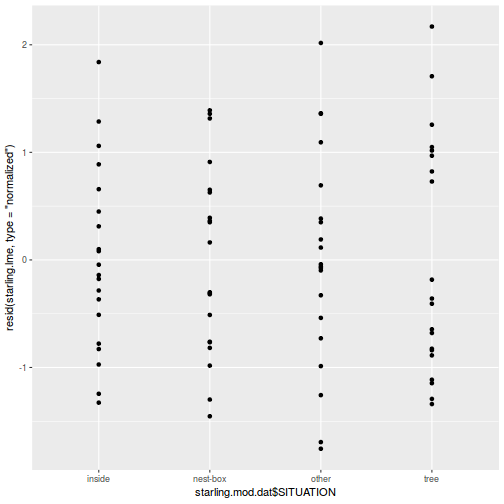

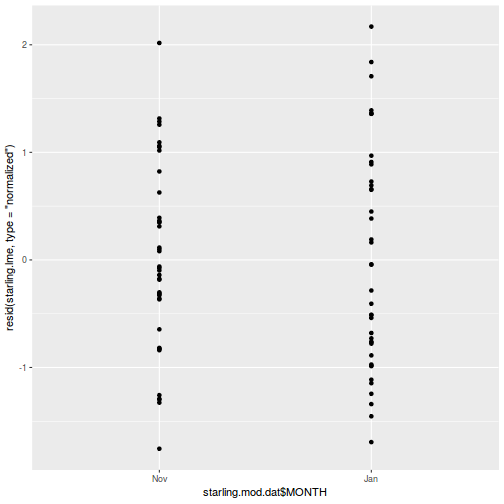

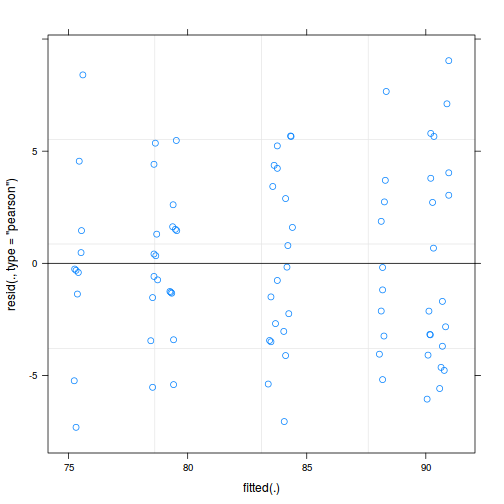

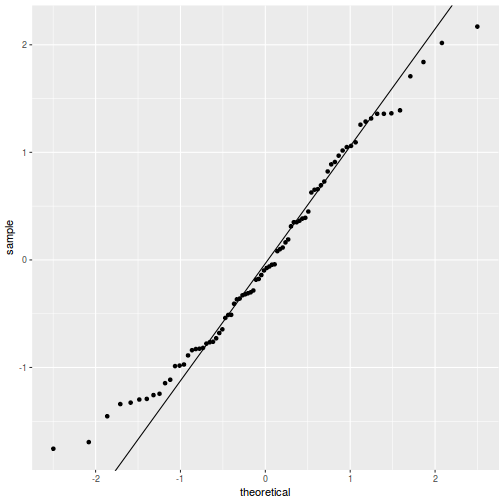

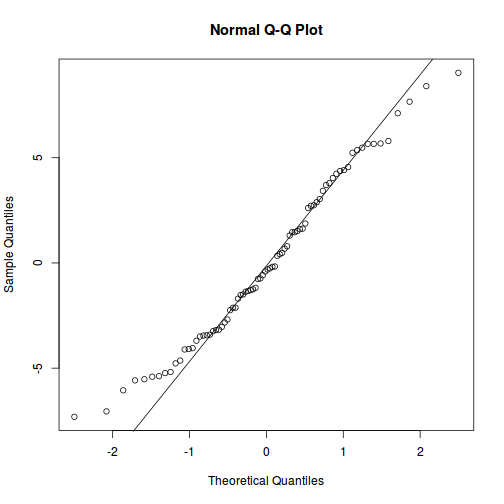

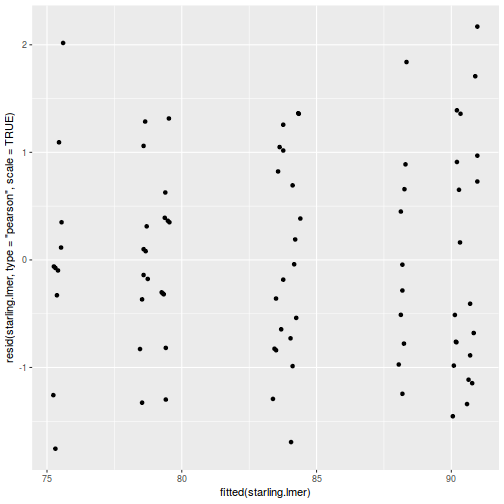

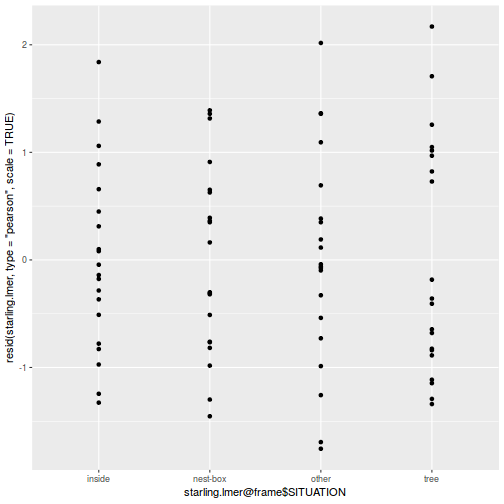

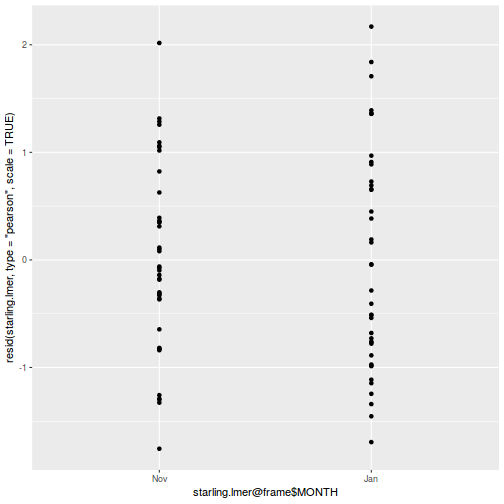

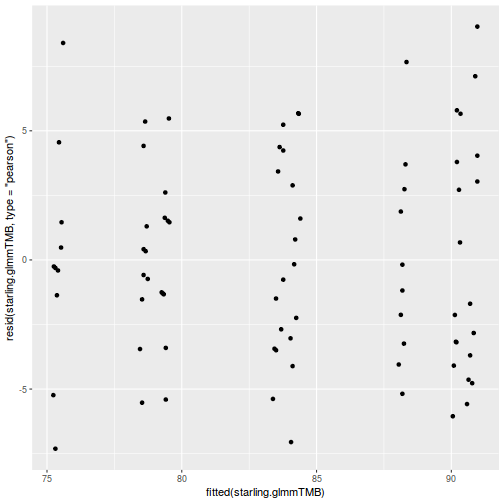

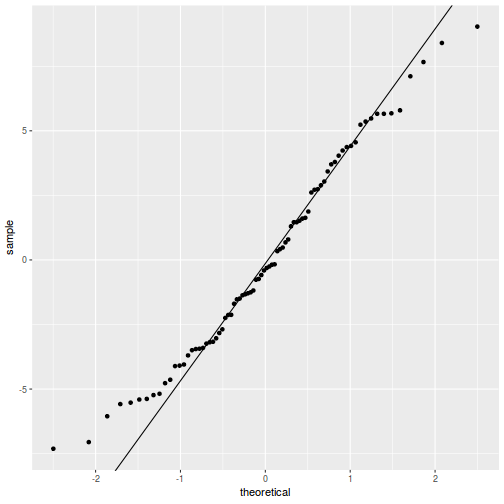

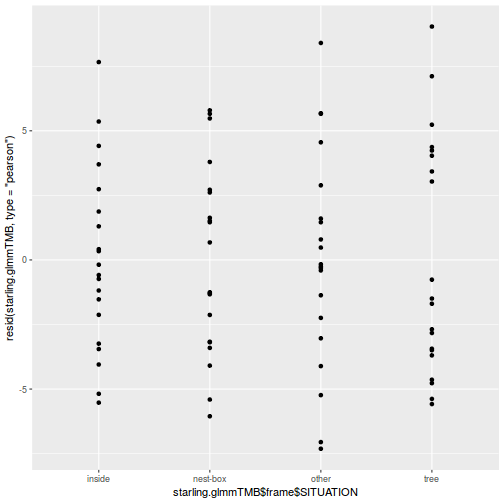

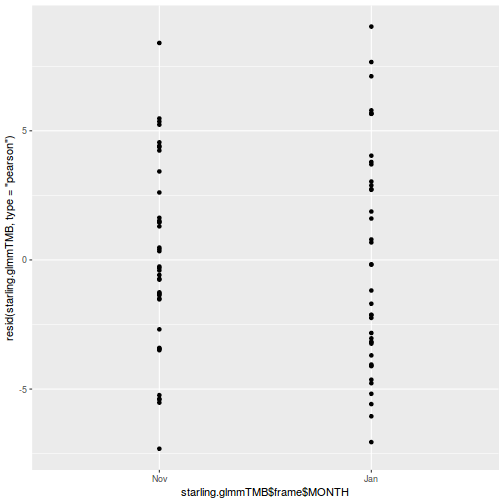

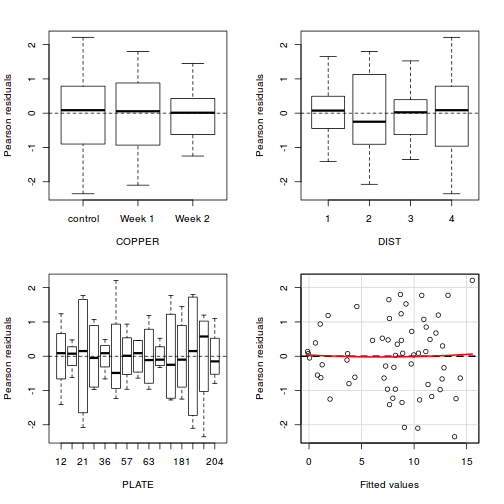

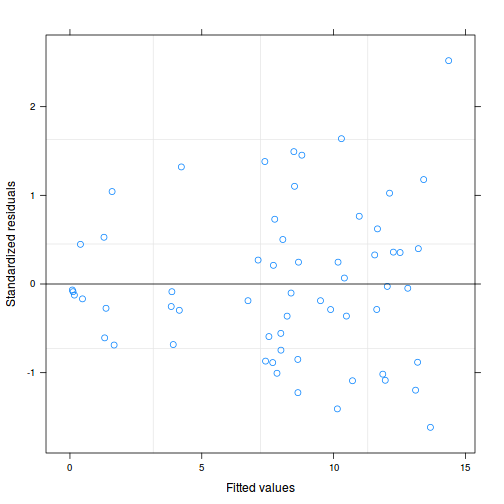

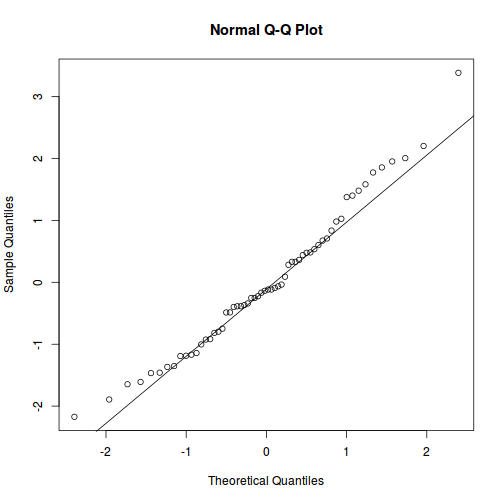

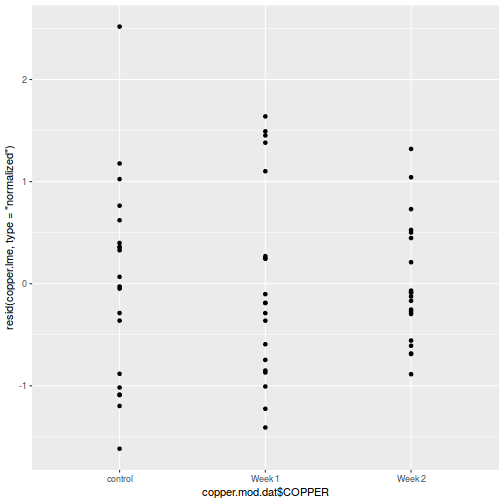

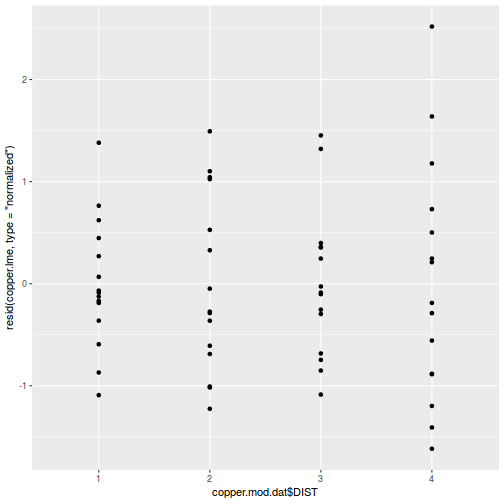

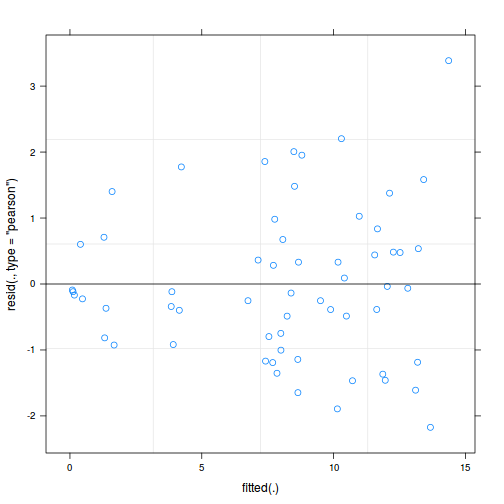

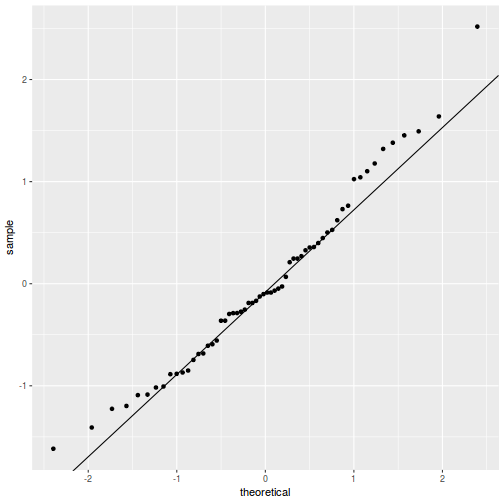

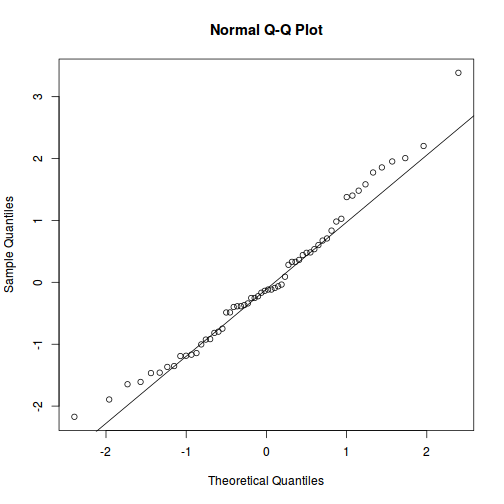

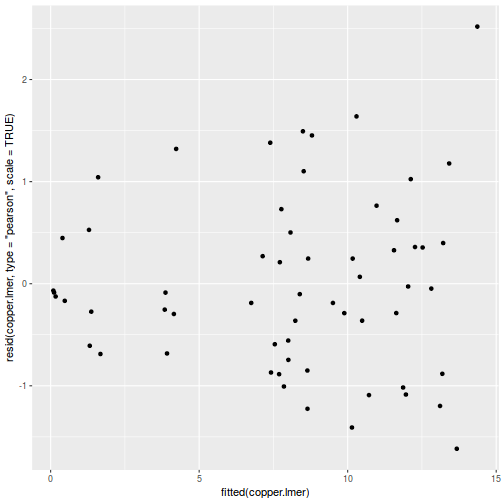

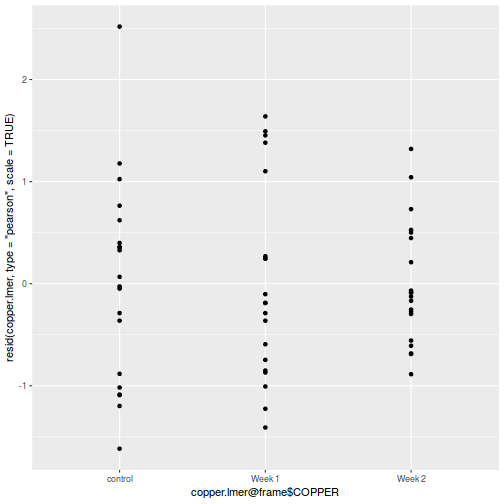

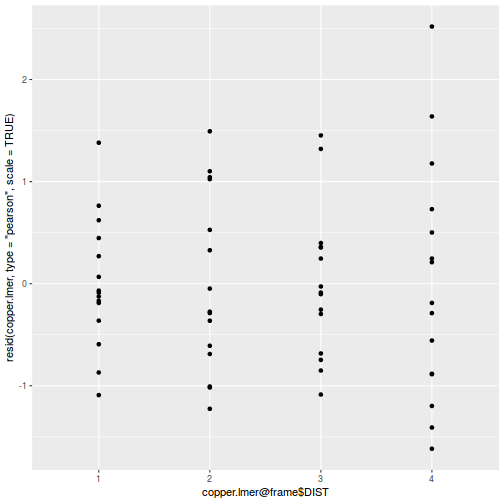

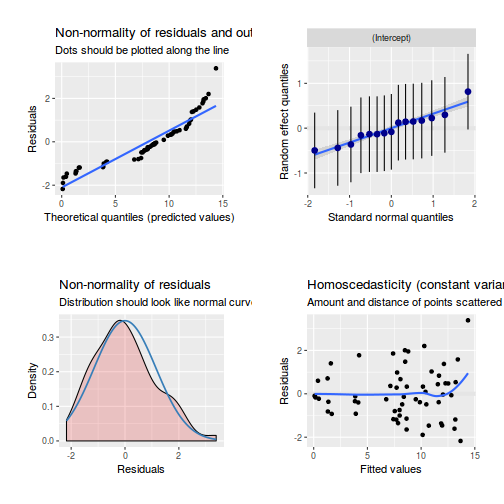

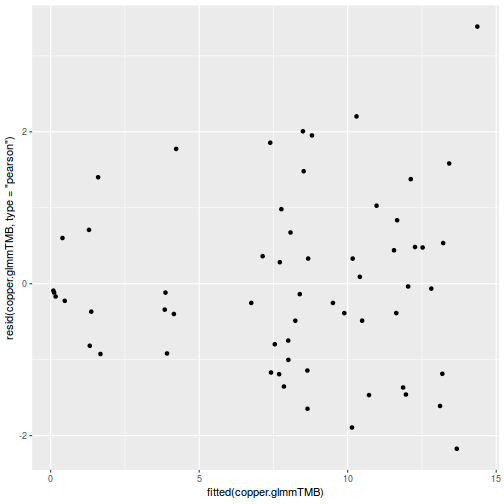

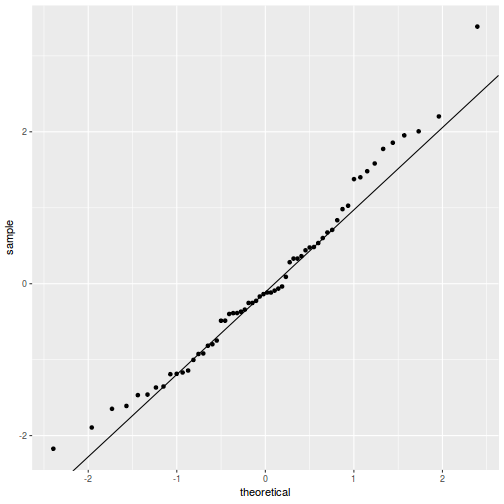

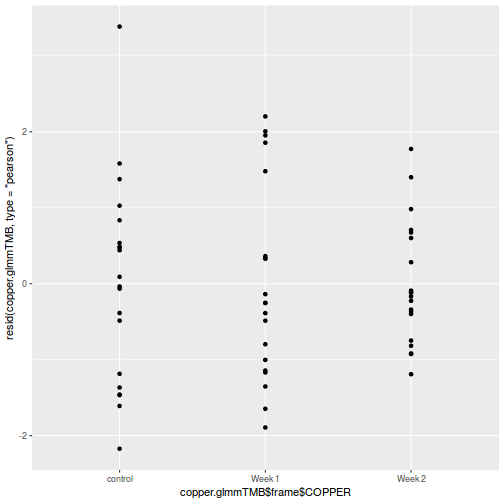

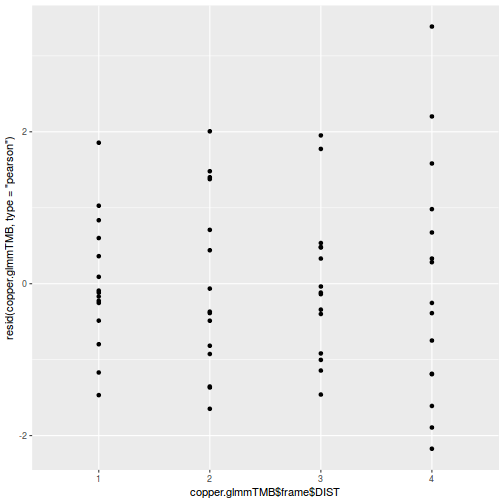

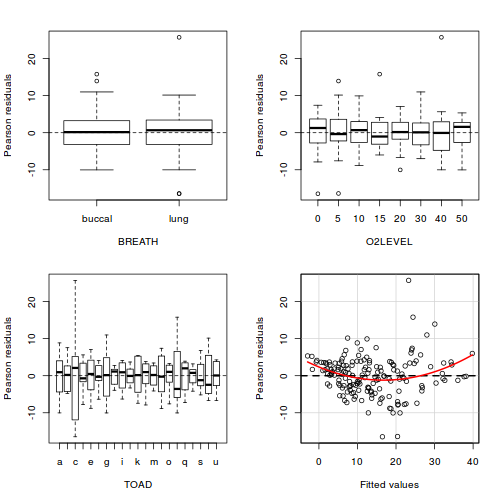

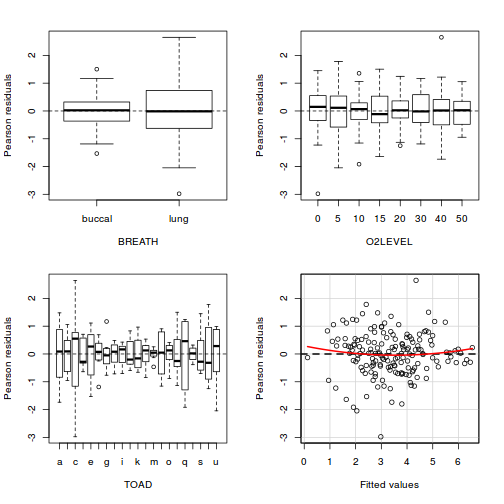

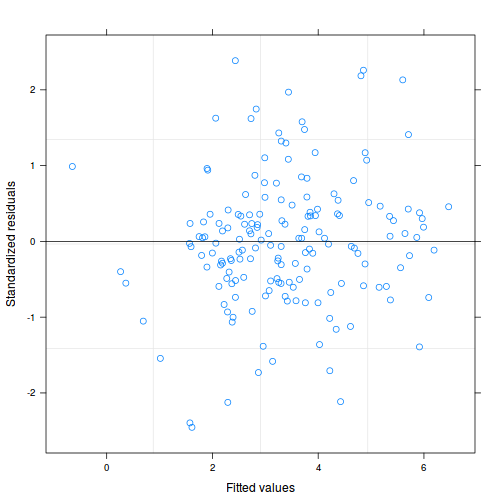

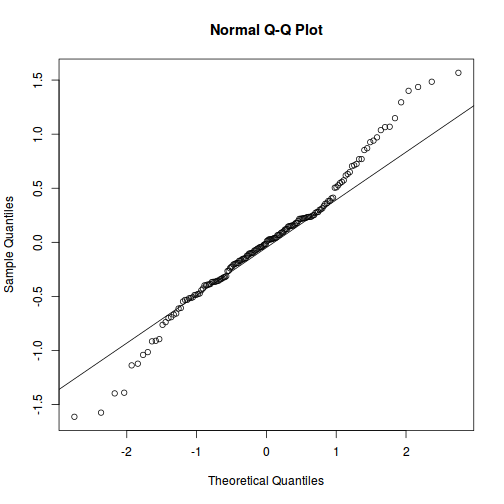

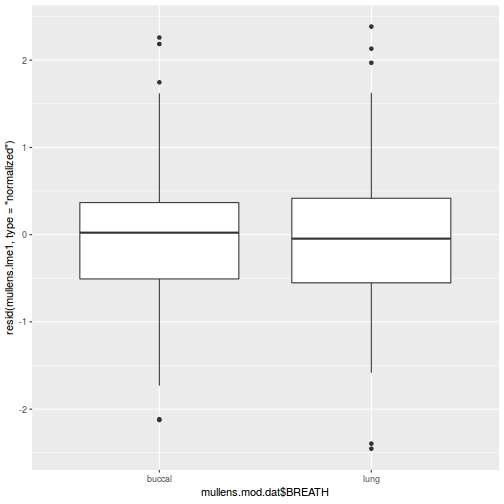

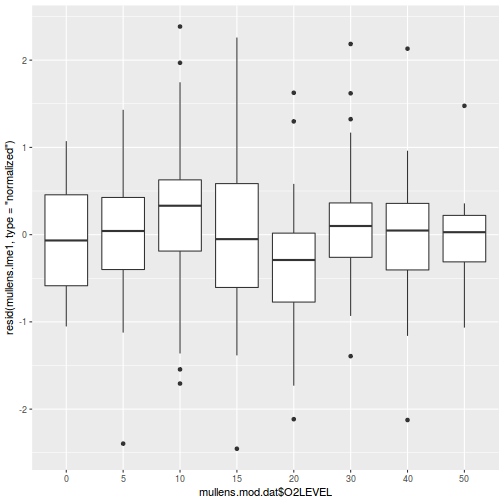

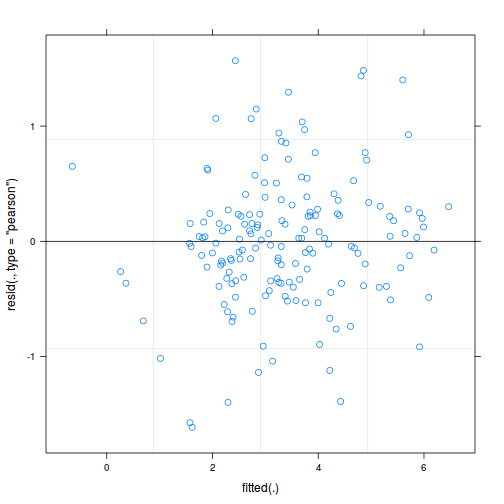

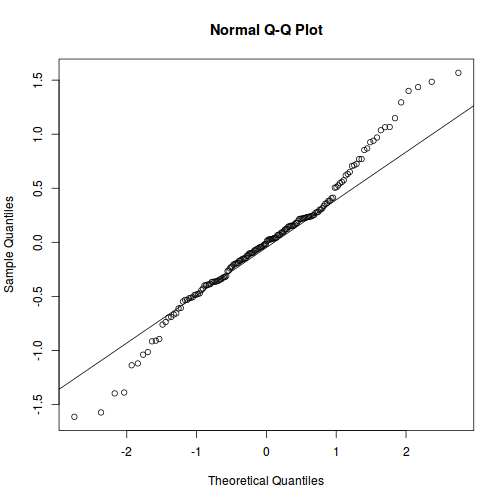

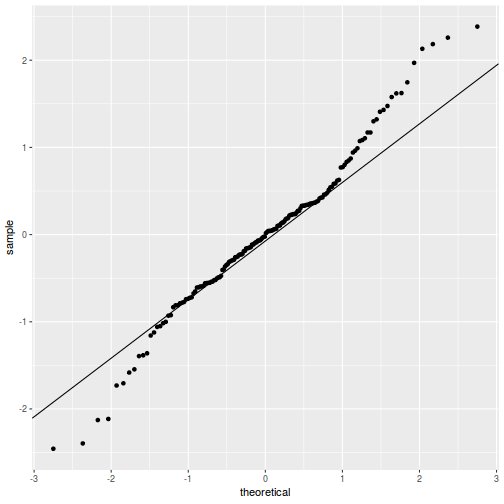

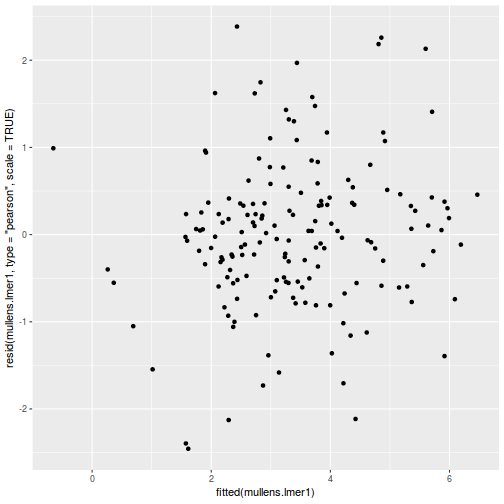

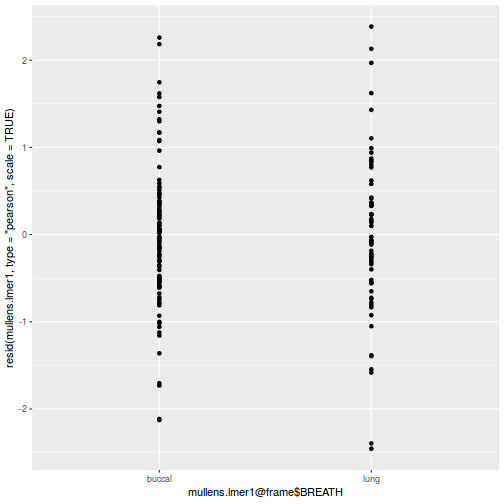

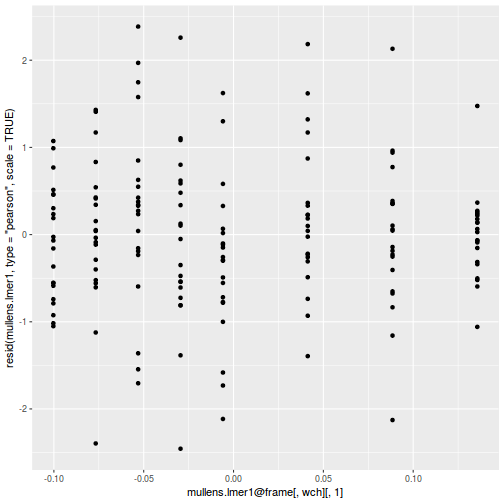

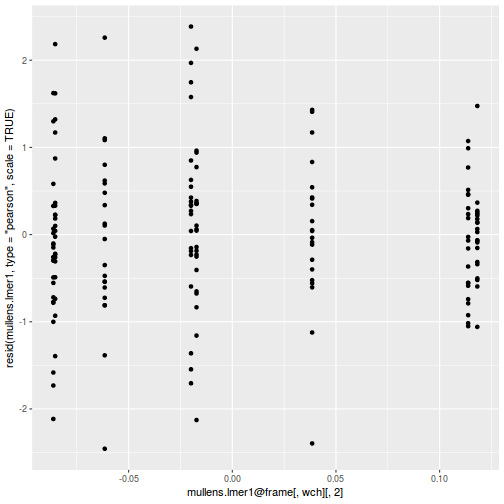

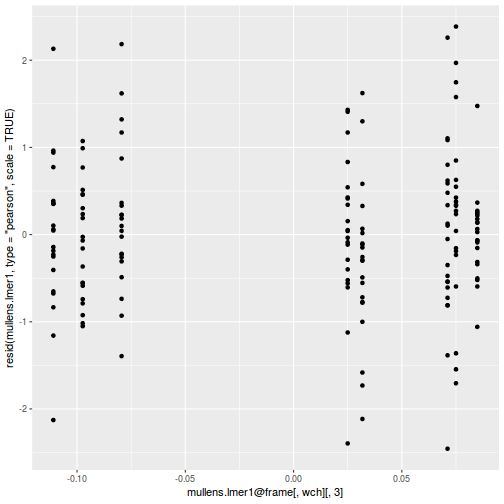

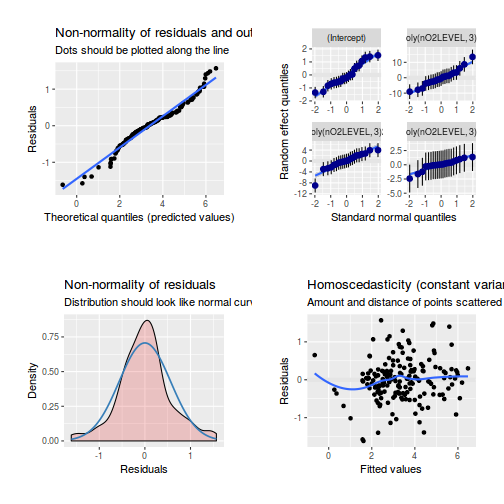

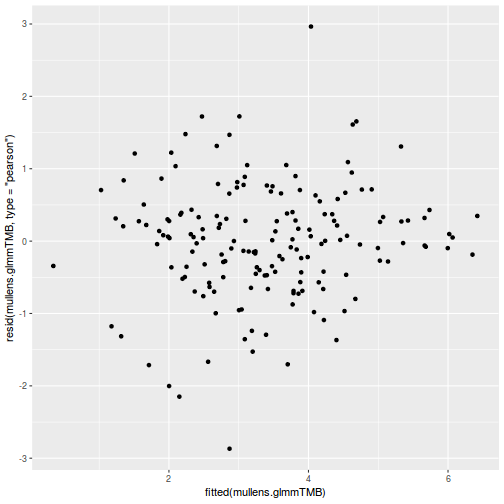

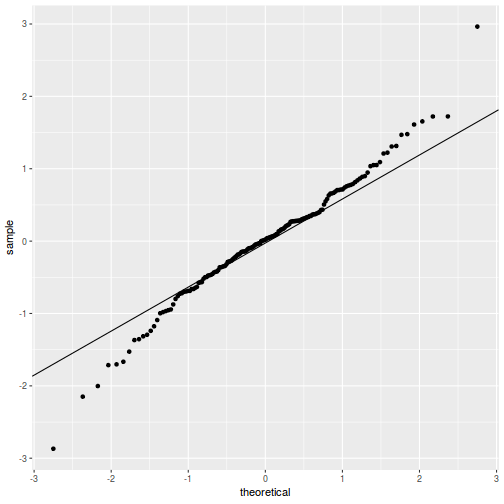

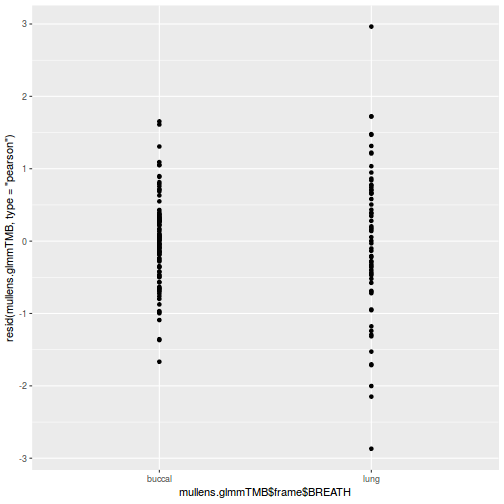

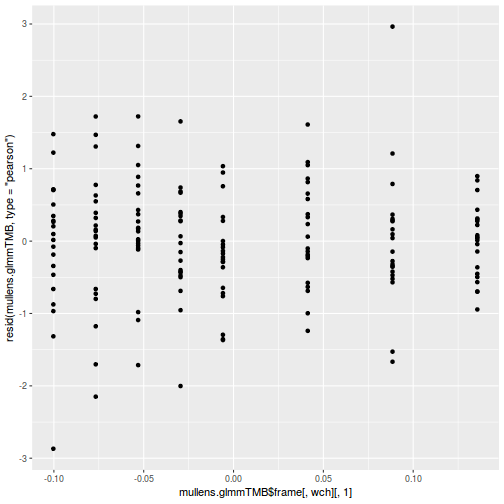

Residuals

As always, exploring the residuals can reveal issues of heteroscedasticity, non-linearity and potential issues with autocorrelation. Note that for lme() and lmer(), residual plots should use standardized (normalized) residuals rather than raw residuals, as the former reflect changes to the variance-covariance matrix whereas the latter do not.

The following function will be used for the production of some of the qqnormal plots.

qq.line = function(x) {
    # following four lines from base R's qqline()
    y <- quantile(x[!is.na(x)], c(0.25, 0.75))
    x <- qnorm(c(0.25, 0.75))
    slope <- diff(y)/diff(x)
    int <- y[1L] - slope * x[1L]
    return(c(int = int, slope = slope))
}

plot(data.splt.lme)

qqnorm(resid(data.splt.lme))
qqline(resid(data.splt.lme))

## plot residuals against each of the fixed effects
plot(resid(data.splt.lme) ~ data.splt.lme$data$A)

plot(resid(data.splt.lme) ~ data.splt.lme$data$C)

library(sjPlot)
plot_grid(plot_model(data.splt.lme, type = "diag"))

plot(data.splt.lmer)

plot(fitted(data.splt.lmer), residuals(data.splt.lmer, type = "pearson", scaled = TRUE))

ggplot(fortify(data.splt.lmer), aes(y = .scresid, x = .fitted)) + geom_point()

QQline = qq.line(fortify(data.splt.lmer)$.scresid)
ggplot(fortify(data.splt.lmer), aes(sample = .scresid)) + stat_qq() + geom_abline(intercept = QQline[1], slope = QQline[2])

qqnorm(resid(data.splt.lmer))
qqline(resid(data.splt.lmer))

## plot residuals against each of the fixed effects
ggplot(fortify(data.splt.lmer), aes(y = .scresid, x = A)) + geom_point()

ggplot(fortify(data.splt.lmer), aes(y = .scresid, x = C)) + geom_point()

library(sjPlot)
plot_grid(plot_model(data.splt.lmer, type = "diag"))

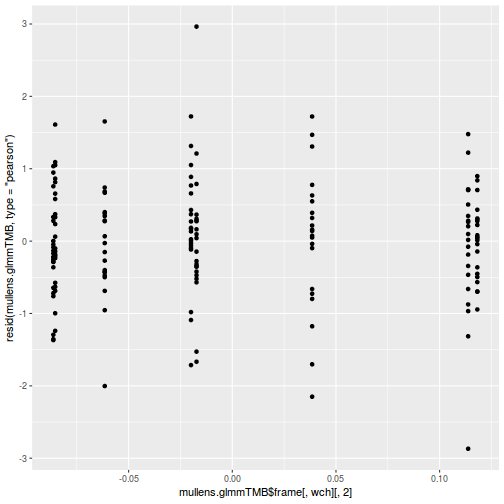

ggplot(data = NULL, aes(y = resid(data.splt.glmmTMB, type = "pearson"), x = fitted(data.splt.glmmTMB))) + geom_point()

QQline = qq.line(resid(data.splt.glmmTMB, type = "pearson"))
ggplot(data = NULL, aes(sample = resid(data.splt.glmmTMB, type = "pearson"))) + stat_qq() + geom_abline(intercept = QQline[1], slope = QQline[2])

ggplot(data = NULL, aes(y = resid(data.splt.glmmTMB, type = "pearson"), x = data.splt.glmmTMB$frame$A)) + geom_point()

ggplot(data = NULL, aes(y = resid(data.splt.glmmTMB, type = "pearson"), x = data.splt.glmmTMB$frame$C)) + geom_point()

library(sjPlot)
plot_grid(plot_model(data.splt.glmmTMB, type = "diag"))  #not working yet - bug

Error in UseMethod("rstudent"): no applicable method for 'rstudent' applied to an object of class "glmmTMB"

par(mfrow = c(2, 2))
plot(lm(data.splt.aov))

Exploring model parameters

If there was any evidence that the assumptions had been violated, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics. As I had elected to illustrate multiple techniques for analysing this nested design, I will also deal with the summaries etc separately.

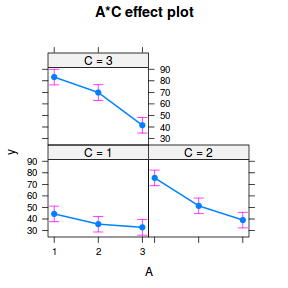

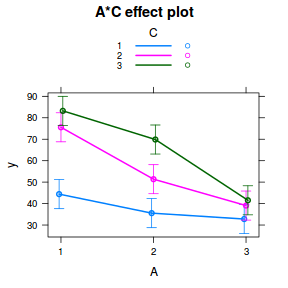

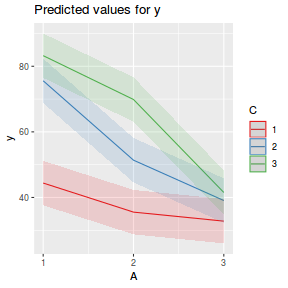

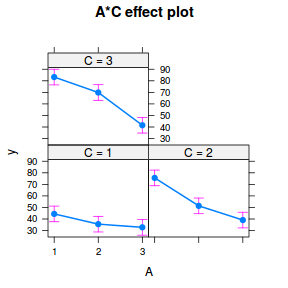

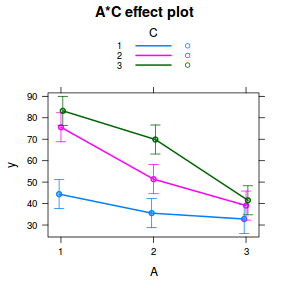

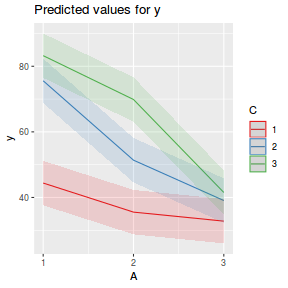

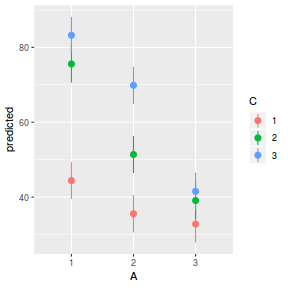

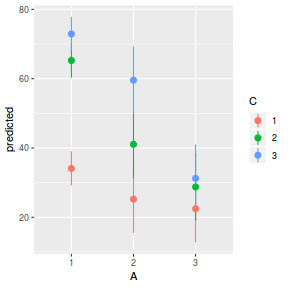

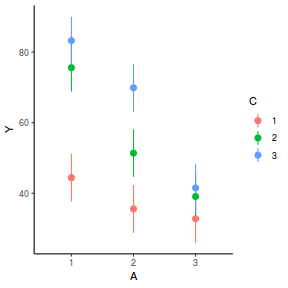

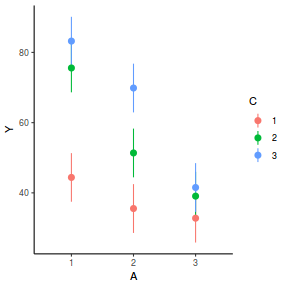

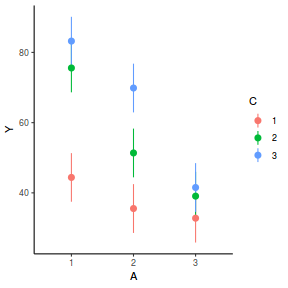

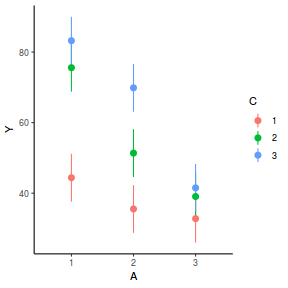

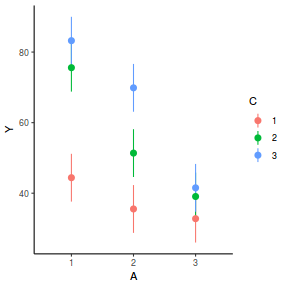

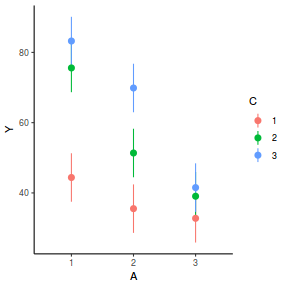

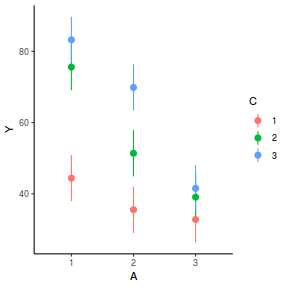

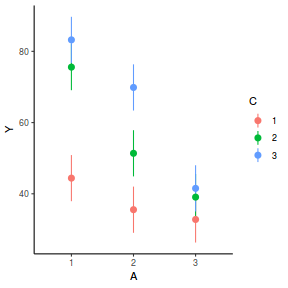

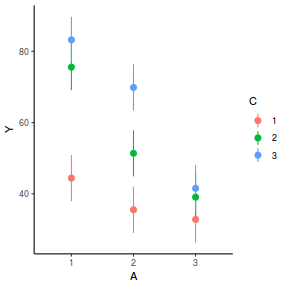

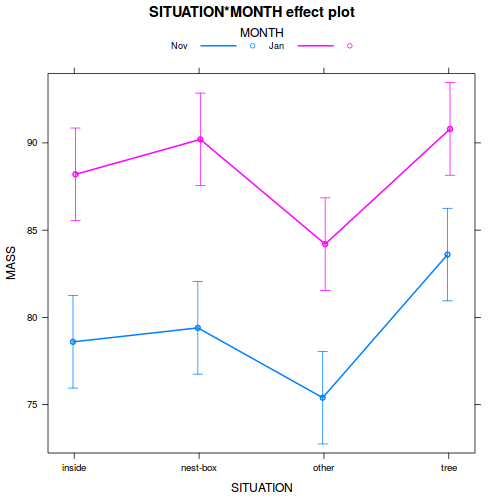

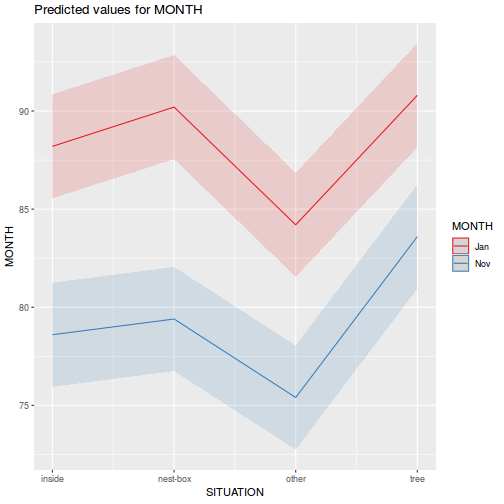

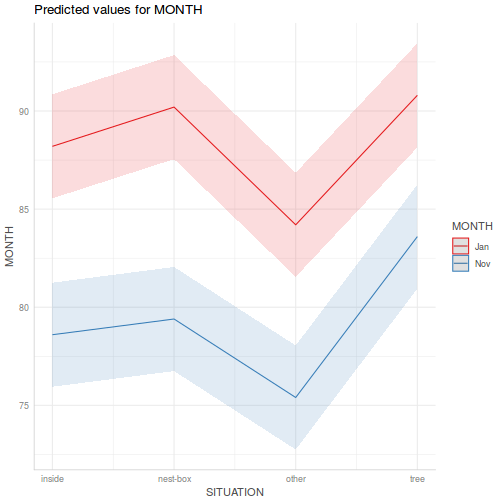

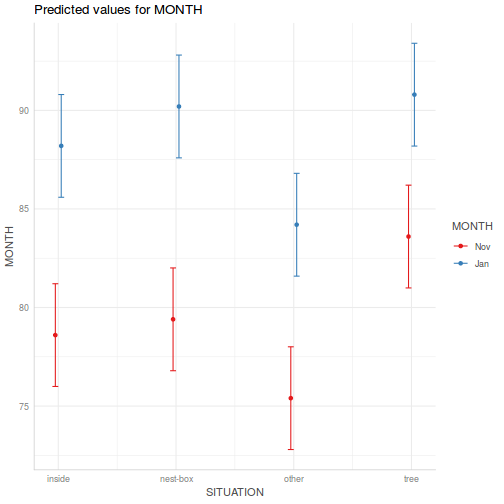

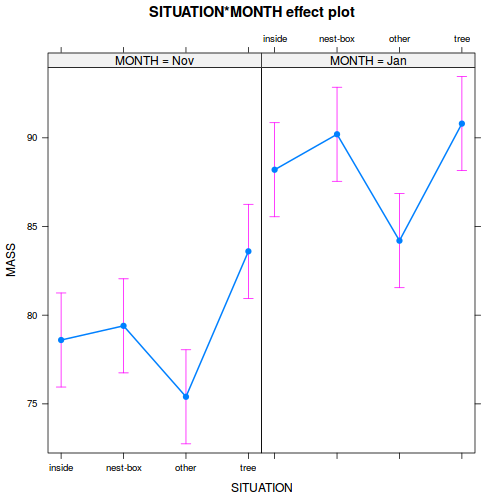

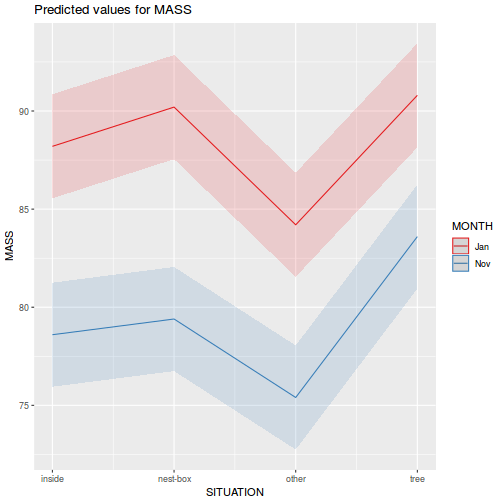

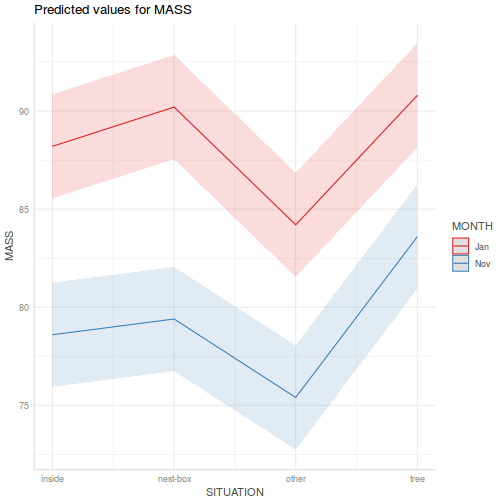

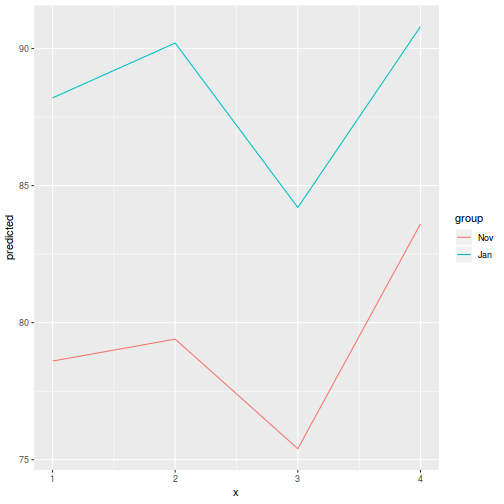

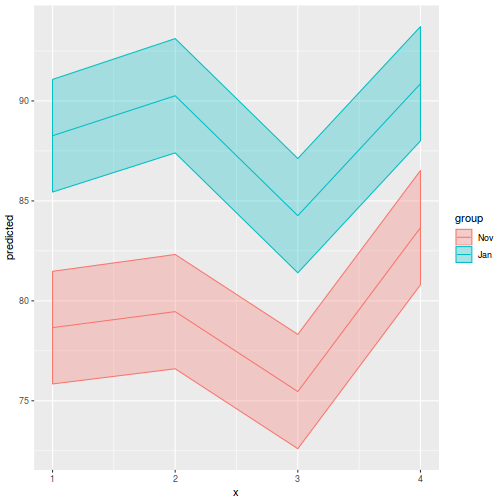

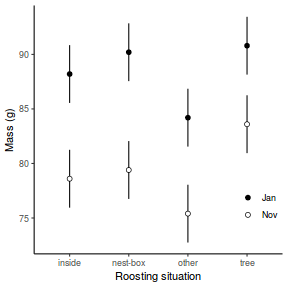

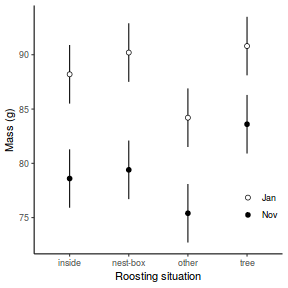

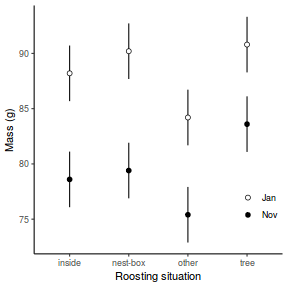

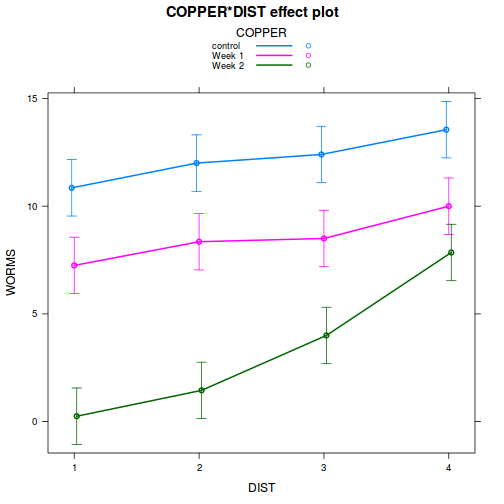

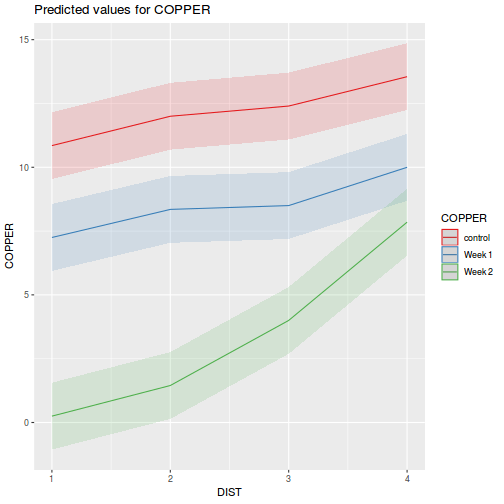

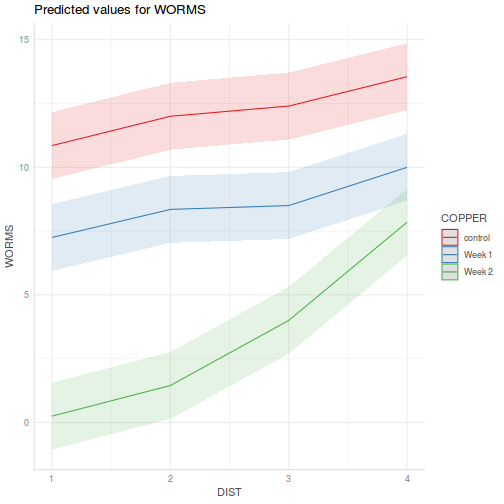

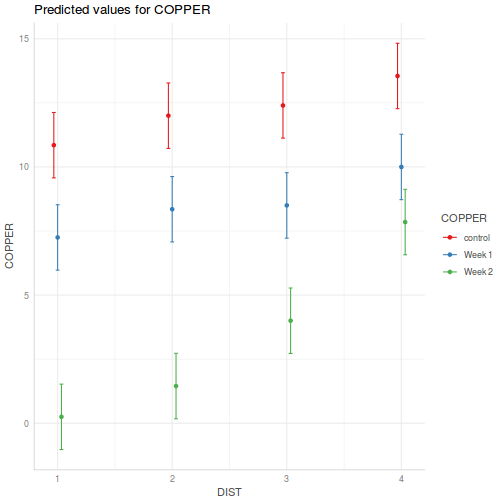

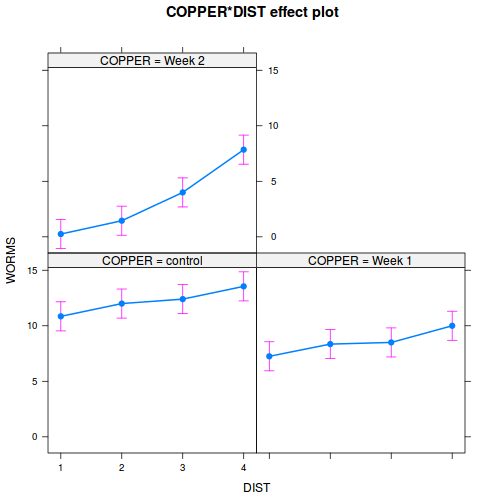

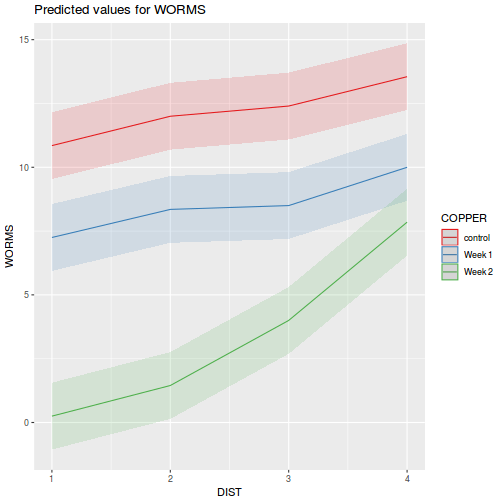

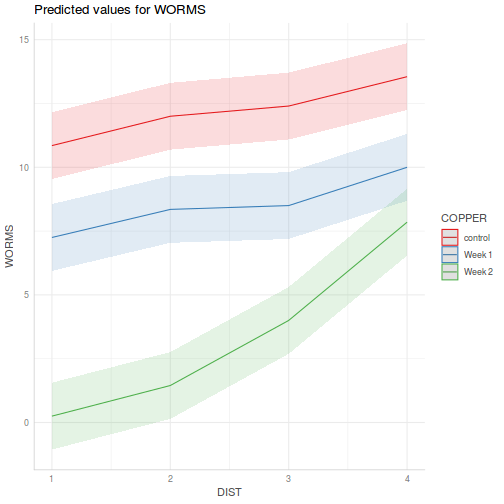

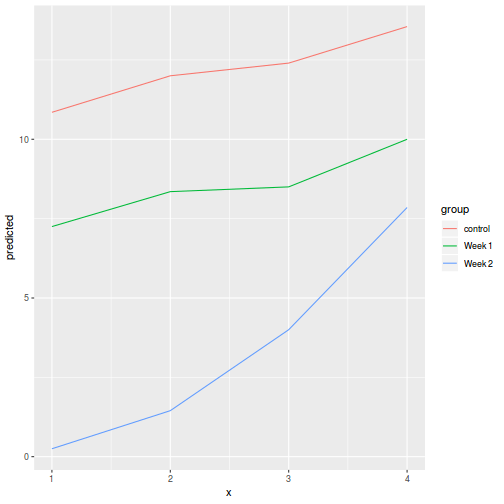

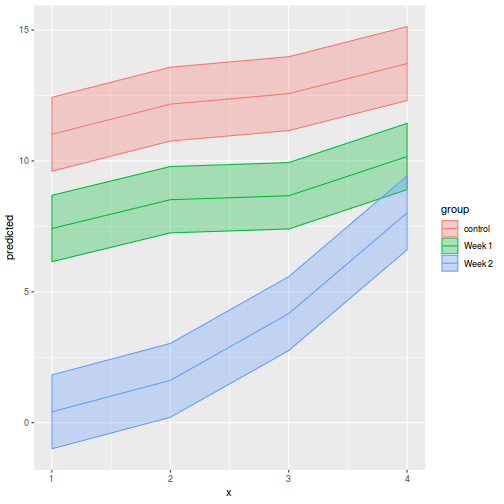

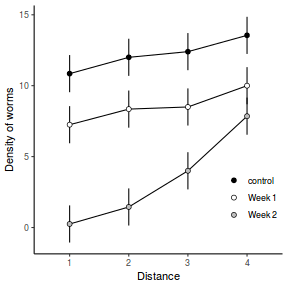

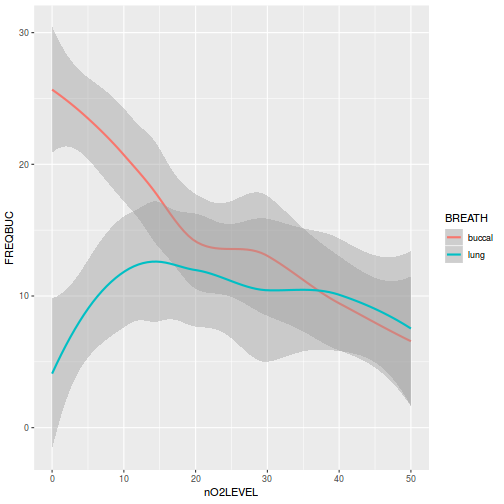

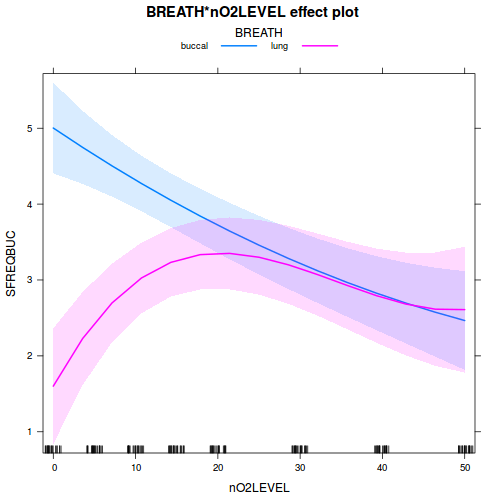

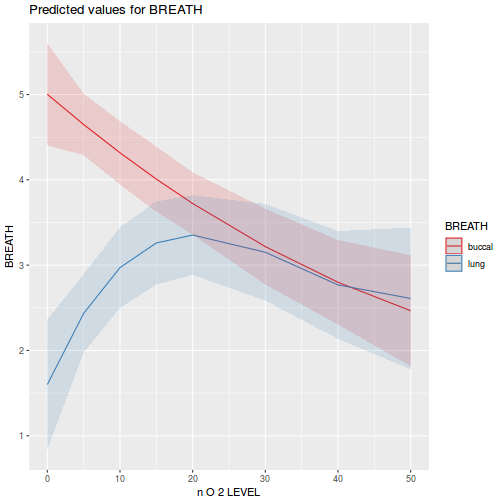

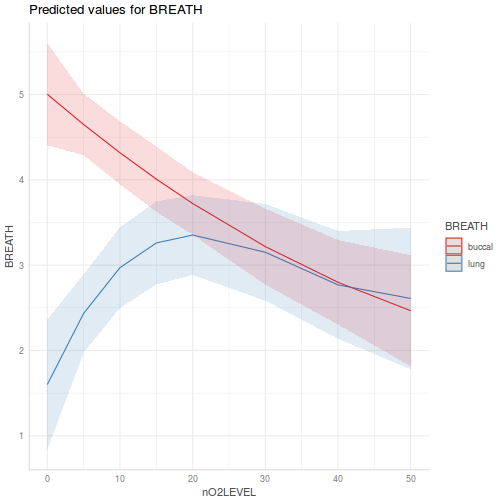

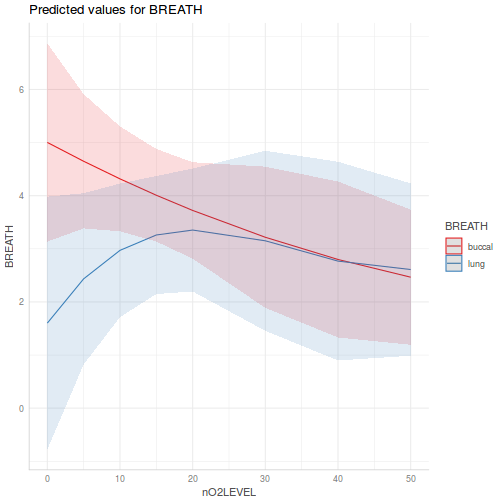

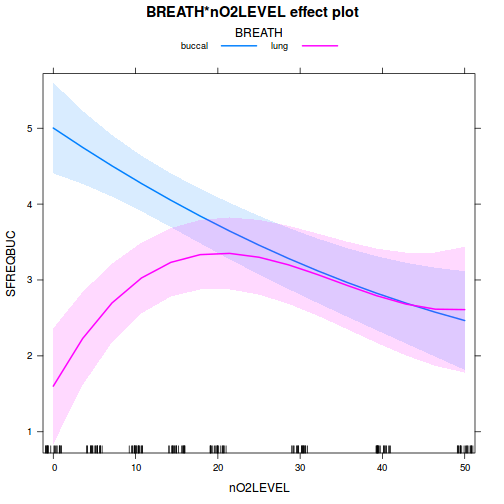

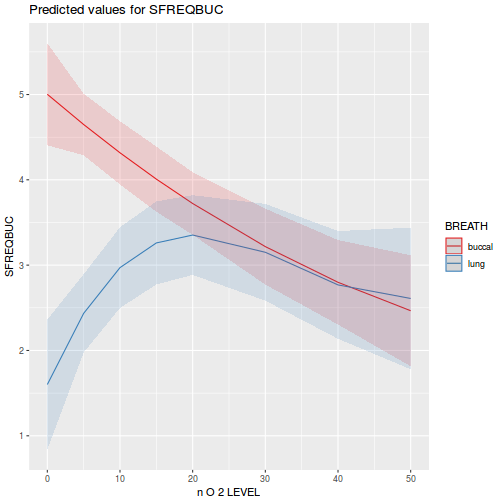

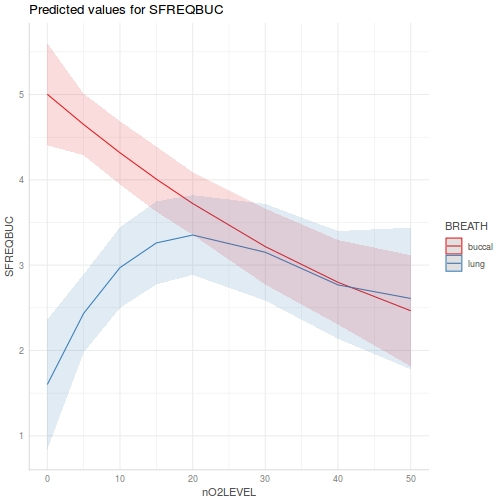

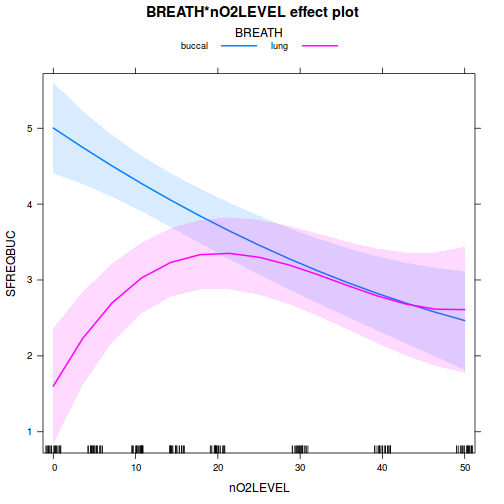

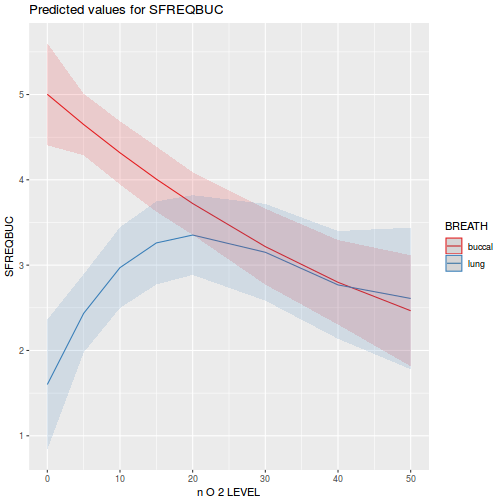

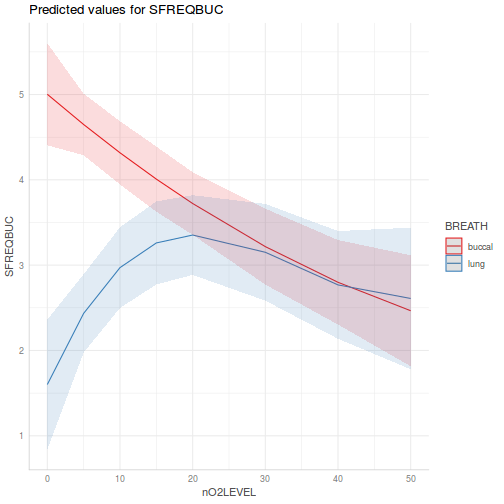

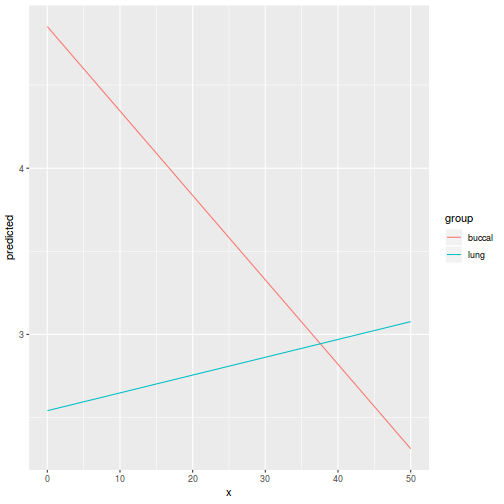

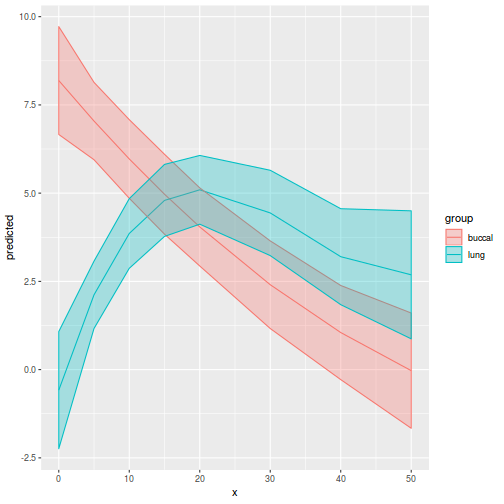

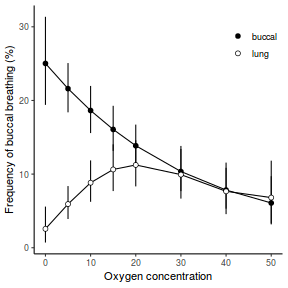

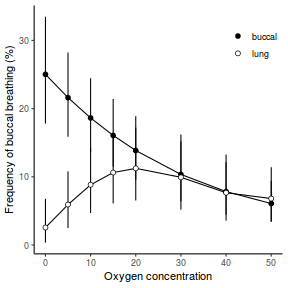

Partial effects plots

It is often useful to visualize partial effects plots while exploring the parameter estimates. Having a graphical representation of the partial effects typically makes it a lot easier to interpret the parameter estimates and inferences.

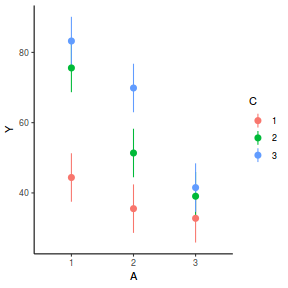

library(effects)

plot(allEffects(data.splt.lme))

plot(allEffects(data.splt.lme), lines = list(multiline = TRUE), confint = list(style = "bars"))

library(sjPlot)

plot_model(data.splt.lme, type = "eff", terms = c("A", "C"))

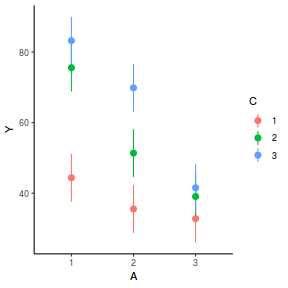

library(effects)

plot(allEffects(data.splt.lmer))

plot(allEffects(data.splt.lmer), lines = list(multiline = TRUE), confint = list(style = "bars"))

library(sjPlot)

plot_model(data.splt.lmer, type = "eff", terms = c("A", "C"))

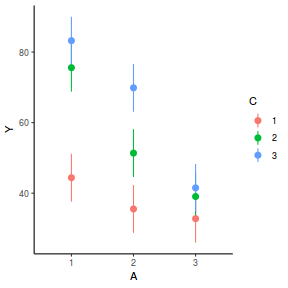

library(ggeffects)

# observation level effects averaged across margins

p = ggaverage(data.splt.glmmTMB, terms = c("A", "C"), x.as.factor = TRUE)

p = p %>% dplyr::rename(A = x, C = group)

ggplot(p, aes(y = predicted, x = A, color = C)) + geom_pointrange(aes(ymin = conf.low, ymax = conf.high))

# marginal effects

p = ggpredict(data.splt.glmmTMB, terms = c("A", "C"), x.as.factor = TRUE)

p = p %>% dplyr::rename(A = x, C = group)

ggplot(p, aes(y = predicted, x = A, color = C)) + geom_pointrange(aes(ymin = conf.low, ymax = conf.high))

Extractor functions

There are a number of extractor functions (functions that extract or derive specific information from a model) available, including:

| Extractor | Description |
|---|---|
| residuals() | Extracts the residuals from the model |
| fitted() | Extracts the predicted (expected) response values (on the response scale) at the observed levels of the linear predictor |
| predict() | Extracts the predicted (expected) response values (on either the link, response or terms (linear predictor) scale) |
| coef() | Extracts the model coefficients |
| confint() | Calculates confidence intervals for the model coefficients |
| summary() | Summarizes the important output and characteristics of the model |
| anova() | Computes an analysis of variance (variance partitioning) from the model |
| VarCorr() | Computes variance components (of random effects) from the model |
| AIC() | Computes the Akaike Information Criterion from the model |
| plot() | Generates a series of diagnostic plots from the model |
| effect() | effects package - estimates the marginal (partial) effects of a factor (useful for plotting) |
| avPlot() | car package - generates partial regression plots |

Parameter estimates

summary(data.splt.lme)

Linear mixed-effects model fit by REML

Data: data.splt

AIC BIC logLik

714.3519 742.8982 -346.1759

Random effects:

Formula: ~1 | Block

(Intercept) Residual

StdDev: 11.00689 4.309761

Fixed effects: y ~ A * C

Value Std.Error DF t-value p-value

(Intercept) 44.39871 3.412303 66 13.011362 0.0000

A2 -8.86091 4.825726 33 -1.836182 0.0754

A3 -11.61064 4.825726 33 -2.405988 0.0219

C2 31.17007 1.759453 66 17.715775 0.0000

C3 38.83108 1.759453 66 22.069979 0.0000

A2:C2 -15.33891 2.488242 66 -6.164556 0.0000

A3:C2 -24.89184 2.488242 66 -10.003787 0.0000

A2:C3 -4.50515 2.488242 66 -1.810574 0.0748

A3:C3 -30.09278 2.488242 66 -12.093993 0.0000

Correlation:

(Intr) A2 A3 C2 C3 A2:C2 A3:C2 A2:C3

A2 -0.707

A3 -0.707 0.500

C2 -0.258 0.182 0.182

C3 -0.258 0.182 0.182 0.500

A2:C2 0.182 -0.258 -0.129 -0.707 -0.354

A3:C2 0.182 -0.129 -0.258 -0.707 -0.354 0.500

A2:C3 0.182 -0.258 -0.129 -0.354 -0.707 0.500 0.250

A3:C3 0.182 -0.129 -0.258 -0.354 -0.707 0.250 0.500 0.500

Standardized Within-Group Residuals:

Min Q1 Med Q3 Max

-1.908000844 -0.541899250 0.003782048 0.542865052 1.810720228

Number of Observations: 108

Number of Groups: 36

intervals(data.splt.lme)

Approximate 95% confidence intervals

Fixed effects:

lower est. upper

(Intercept) 37.585829 44.398712 51.2115953

A2 -18.678921 -8.860908 0.9571044

A3 -21.428649 -11.610637 -1.7926243

C2 27.657207 31.170068 34.6829285

C3 35.318224 38.831084 42.3439451

A2:C2 -20.306842 -15.338907 -10.3709713

A3:C2 -29.859778 -24.891843 -19.9239073

A2:C3 -9.473081 -4.505146 0.4627894

A3:C3 -35.060715 -30.092780 -25.1248447

attr(,"label")

[1] "Fixed effects:"

Random Effects:

Level: Block

lower est. upper

sd((Intercept)) 8.540243 11.00689 14.18598

Within-group standard error:

lower est. upper

3.633843 4.309761 5.111405

anova(data.splt.lme)

numDF denDF F-value p-value

(Intercept) 1 66 781.9956 <.0001

A 2 33 21.1243 <.0001

C 2 66 372.0035 <.0001

A:C 4 66 52.5514 <.0001

library(broom)

tidy(data.splt.lme, effects = "fixed", conf.int = TRUE)

# A tibble: 9 x 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 44.4 3.41 13.0 6.94e-20

2 A2 -8.86 4.83 -1.84 7.54e- 2

3 A3 -11.6 4.83 -2.41 2.19e- 2

4 C2 31.2 1.76 17.7 8.88e-27

5 C3 38.8 1.76 22.1 3.55e-32

6 A2:C2 -15.3 2.49 -6.16 4.80e- 8

7 A3:C2 -24.9 2.49 -10.0 7.43e-15

8 A2:C3 -4.51 2.49 -1.81 7.48e- 2

9 A3:C3 -30.1 2.49 -12.1 2.12e-18

glance(data.splt.lme)

# A tibble: 1 x 5

sigma logLik AIC BIC deviance

<dbl> <dbl> <dbl> <dbl> <lgl>

1 4.31 -346. 714. 743. NA

The output comprises:

- various information criteria (for model comparison)

- the random effects variance components

  - the estimated standard deviation between Blocks is 11.006894

  - the estimated standard deviation within treatments is 4.309761

  - Blocks represent 71.8622571% of the variability (based on SD).

- the fixed effects

  - The effects parameter estimates along with their hypothesis tests

  - There is evidence of an interaction between $A$ and $C$ - the nature of the trends between $A1$, $A2$ and $A3$ is not consistent between all levels of $C$ (and vice versa).
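The percentage quoted above can be reproduced by hand from the two standard deviations in the summary() output. The following is a minimal base R sketch with the values typed in manually (the object names are illustrative only). It also reports the variance-based proportion (the intraclass correlation), which is an addition that the tutorial itself does not compute.

```r
# Standard deviations copied from the summary(data.splt.lme) output
sd.block <- 11.006894  # between Blocks
sd.resid <- 4.309761   # within Blocks (residual)

# Proportion of variability attributed to Blocks, based on SD (as in the text)
prop.sd <- sd.block / (sd.block + sd.resid)

# Variance-based equivalent (intraclass correlation); an assumption-free
# alternative, but not a quantity reported in the original tutorial
prop.var <- sd.block^2 / (sd.block^2 + sd.resid^2)

round(100 * c(SD = prop.sd, Variance = prop.var), 2)
```

The two proportions differ because variances, not standard deviations, are additive; which one to report is a matter of convention.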

summary(data.splt.lmer)

Linear mixed model fit by REML ['lmerMod']

Formula: y ~ A * C + (1 | Block)

Data: data.splt

REML criterion at convergence: 692.4

Scaled residuals:

Min 1Q Median 3Q Max

-1.90800 -0.54190 0.00378 0.54287 1.81072

Random effects:

Groups Name Variance Std.Dev.

Block (Intercept) 121.15 11.01

Residual 18.57 4.31

Number of obs: 108, groups: Block, 36

Fixed effects:

Estimate Std. Error t value

(Intercept) 44.399 3.412 13.011

A2 -8.861 4.826 -1.836

A3 -11.611 4.826 -2.406

C2 31.170 1.759 17.716

C3 38.831 1.759 22.070

A2:C2 -15.339 2.488 -6.165

A3:C2 -24.892 2.488 -10.004

A2:C3 -4.505 2.488 -1.811

A3:C3 -30.093 2.488 -12.094

Correlation of Fixed Effects:

(Intr) A2 A3 C2 C3 A2:C2 A3:C2 A2:C3

A2 -0.707

A3 -0.707 0.500

C2 -0.258 0.182 0.182

C3 -0.258 0.182 0.182 0.500

A2:C2 0.182 -0.258 -0.129 -0.707 -0.354

A3:C2 0.182 -0.129 -0.258 -0.707 -0.354 0.500

A2:C3 0.182 -0.258 -0.129 -0.354 -0.707 0.500 0.250

A3:C3 0.182 -0.129 -0.258 -0.354 -0.707 0.250 0.500 0.500

confint(data.splt.lmer)

2.5 % 97.5 %

.sig01 8.383249 13.6705563

.sigma 3.534158 4.9042634

(Intercept) 37.848710 50.9487139

A2 -18.124010 0.4021934

A3 -20.873738 -2.3475353

C2 27.823884 34.5162514

C3 35.484901 42.1772680

A2:C2 -20.071125 -10.6066884

A3:C2 -29.624061 -20.1596244

A2:C3 -9.237364 0.2270724

A3:C3 -34.824998 -25.3605618

anova(data.splt.lmer)

Analysis of Variance Table

Df Sum Sq Mean Sq F value

A 2 784.7 392.4 21.124

C 2 13819.2 6909.6 372.003

A:C 4 3904.4 976.1 52.551

library(broom)

tidy(data.splt.lmer, effects = "fixed", conf.int = TRUE)

# A tibble: 9 x 6

term estimate std.error statistic conf.low conf.high

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 44.4 3.41 13.0 37.7 51.1

2 A2 -8.86 4.83 -1.84 -18.3 0.597

3 A3 -11.6 4.83 -2.41 -21.1 -2.15

4 C2 31.2 1.76 17.7 27.7 34.6

5 C3 38.8 1.76 22.1 35.4 42.3

6 A2:C2 -15.3 2.49 -6.16 -20.2 -10.5

7 A3:C2 -24.9 2.49 -10.0 -29.8 -20.0

8 A2:C3 -4.51 2.49 -1.81 -9.38 0.372

9 A3:C3 -30.1 2.49 -12.1 -35.0 -25.2

glance(data.splt.lmer)

# A tibble: 1 x 6

sigma logLik AIC BIC deviance df.residual

<dbl> <dbl> <dbl> <dbl> <dbl> <int>

1 4.31 -346. 714. 744. 721. 97

As a result of disagreement and discontent concerning the appropriate residual degrees of freedom, lmer() does not provide p-values in summary or anova tables. For hypothesis testing, the following options exist:

- Confidence intervals on the estimated parameters.

confint(data.splt.lmer)

2.5 % 97.5 %

.sig01 8.383249 13.6705563

.sigma 3.534158 4.9042634

(Intercept) 37.848710 50.9487139

A2 -18.124010 0.4021934

A3 -20.873738 -2.3475353

C2 27.823884 34.5162514

C3 35.484901 42.1772680

A2:C2 -20.071125 -10.6066884

A3:C2 -29.624061 -20.1596244

A2:C3 -9.237364 0.2270724

A3:C3 -34.824998 -25.3605618

- Likelihood Ratio Test (LRT). Note, as this is contrasting a fixed component, the models need to be fitted with ML rather than REML.

mod1 = update(data.splt.lmer, REML = FALSE)

mod2 = update(data.splt.lmer, ~. - A, REML = FALSE)

anova(mod1, mod2)

Data: data.splt

Models:

mod1: y ~ A * C + (1 | Block)

mod2: y ~ C + (1 | Block) + A:C

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

mod1 11 743.5 773 -360.75 721.5

mod2 11 743.5 773 -360.75 721.5 0 0 1

Note that both models have 11 parameters and identical log-likelihoods: removing A while retaining the A:C interaction merely reparameterizes the model, so this particular comparison does not test the effect of A.

- Adopt the Satterthwaite or Kenward-Roger methods to approximate the denominator degrees of freedom (as used in SAS). This approach requires the lmerTest and pbkrtest packages and requires that they be loaded before fitting the model (update() will suffice).

Note just because these are the approaches adopted by SAS, this does not mean that they are 'correct'.
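To see why the choice of denominator degrees of freedom matters, the same t-value can be referred to different reference distributions. The sketch below is base R only and uses the t-value for the A2 coefficient from the summaries in this section; the df of 33 is the value lme() reports and 39.543 is the Satterthwaite value reported by lmerTest. The object names are illustrative.

```r
# t-value for the A2 coefficient, copied from the summary output
t.A2 <- -1.836182

# Two-tailed p-values under different reference distributions
p.values <- c(
  containment   = 2 * pt(-abs(t.A2), df = 33),      # df used by lme()
  satterthwaite = 2 * pt(-abs(t.A2), df = 39.543),  # df reported by lmerTest
  normal        = 2 * pnorm(-abs(t.A2))             # infinite df (Wald z)
)
round(p.values, 4)
```

The larger the assumed degrees of freedom, the smaller the p-value, which is why estimates near the 0.05 threshold can change conclusions depending on the method adopted.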

library(lmerTest)

data.splt.lmer <- update(data.splt.lmer)

summary(data.splt.lmer)

Linear mixed model fit by REML t-tests use Satterthwaite approximations to degrees of freedom [lmerMod]

Formula: y ~ A * C + (1 | Block)

Data: data.splt

REML criterion at convergence: 692.4

Scaled residuals:

Min 1Q Median 3Q Max

-1.90800 -0.54190 0.00378 0.54287 1.81072

Random effects:

Groups Name Variance Std.Dev.

Block (Intercept) 121.15 11.01

Residual 18.57 4.31

Number of obs: 108, groups: Block, 36

Fixed effects:

Estimate Std. Error df t value Pr(>|t|)

(Intercept) 44.399 3.412 39.543 13.011 6.66e-16 ***

A2 -8.861 4.826 39.543 -1.836 0.0739 .

A3 -11.611 4.826 39.543 -2.406 0.0209 *

C2 31.170 1.759 66.000 17.716 < 2e-16 ***

C3 38.831 1.759 66.000 22.070 < 2e-16 ***

A2:C2 -15.339 2.488 66.000 -6.165 4.80e-08 ***

A3:C2 -24.892 2.488 66.000 -10.004 7.55e-15 ***

A2:C3 -4.505 2.488 66.000 -1.811 0.0748 .

A3:C3 -30.093 2.488 66.000 -12.094 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) A2 A3 C2 C3 A2:C2 A3:C2 A2:C3

A2 -0.707

A3 -0.707 0.500

C2 -0.258 0.182 0.182

C3 -0.258 0.182 0.182 0.500

A2:C2 0.182 -0.258 -0.129 -0.707 -0.354

A3:C2 0.182 -0.129 -0.258 -0.707 -0.354 0.500

A2:C3 0.182 -0.258 -0.129 -0.354 -0.707 0.500 0.250

A3:C3 0.182 -0.129 -0.258 -0.354 -0.707 0.250 0.500 0.500

anova(data.splt.lmer) # Satterthwaite denominator df method

Analysis of Variance Table of type III with Satterthwaite approximation for degrees of freedom

Sum Sq Mean Sq NumDF DenDF F.value Pr(>F)

A 784.7 392.4 2 33 21.12 1.24e-06 ***

C 13819.2 6909.6 2 66 372.00 < 2.2e-16 ***

A:C 3904.4 976.1 4 66 52.55 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

anova(data.splt.lmer, ddf = "Kenward-Roger")

Analysis of Variance Table of type III with Kenward-Roger approximation for degrees of freedom

Sum Sq Mean Sq NumDF DenDF F.value Pr(>F)

A 784.7 392.4 2 33 21.12 1.24e-06 ***

C 13819.2 6909.6 2 66 372.00 < 2.2e-16 ***

A:C 3904.4 976.1 4 66 52.55 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

The output comprises:

- various information criteria (for model comparison)

- the random effects variance components

  - the estimated standard deviation between Blocks is 11.0068942

  - the estimated standard deviation within treatments is 4.3097613

  - Blocks represent 71.8622559% of the variability (based on SD).

- the fixed effects

  - The effects parameter estimates along with their hypothesis tests

  - There is evidence of an interaction between $A$ and $C$ - the nature of the trends between $A1$, $A2$ and $A3$ is not consistent between all levels of $C$ (and vice versa).

summary(data.splt.glmmTMB)

Family: gaussian ( identity )

Formula: y ~ A * C + (1 | Block)

Data: data.splt

AIC BIC logLik deviance df.resid

743.5 773.0 -360.7 721.5 97

Random effects:

Conditional model:

Groups Name Variance Std.Dev.

Block (Intercept) 111.06 10.538

Residual 17.03 4.126

Number of obs: 108, groups: Block, 36

Dispersion estimate for gaussian family (sigma^2): 17

Conditional model:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 44.399 3.267 13.590 < 2e-16 ***

A2 -8.861 4.620 -1.918 0.0551 .

A3 -11.611 4.620 -2.513 0.0120 *

C2 31.170 1.685 18.504 < 2e-16 ***

C3 38.831 1.685 23.051 < 2e-16 ***

A2:C2 -15.339 2.382 -6.439 1.21e-10 ***

A3:C2 -24.892 2.382 -10.449 < 2e-16 ***

A2:C3 -4.505 2.382 -1.891 0.0586 .

A3:C3 -30.093 2.382 -12.632 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

confint(data.splt.glmmTMB)

2.5 % 97.5 % Estimate

cond.(Intercept) 37.995459 50.8019809 44.398720

cond.A2 -17.916505 0.1946518 -8.860926

cond.A3 -20.666227 -2.5550708 -11.610649

cond.C2 27.868417 34.4717213 31.170069

cond.C3 35.529441 42.1327460 38.831094

cond.A2:C2 -20.008147 -10.6696636 -15.338905

cond.A3:C2 -29.561085 -20.2226021 -24.891844

cond.A2:C3 -9.174404 0.1640792 -4.505162

cond.A3:C3 -34.762032 -25.4235487 -30.092790

cond.Std.Dev.Block.(Intercept) 8.265449 13.4361251 10.538292

sigma 3.504495 4.8583895 4.126282

The output comprises:

- various information criteria (for model comparison)

- the random effects variance components

  - the estimated standard deviation between Blocks is 10.538292

  - the estimated standard deviation within treatments is 4.126282

  - Blocks represent 71.86% of the variability (based on SD).

- the fixed effects

  - The effects parameter estimates along with their hypothesis tests

  - There is evidence of an interaction between $A$ and $C$ - the nature of the trends between $A1$, $A2$ and $A3$ is not consistent between all levels of $C$ (and vice versa).

summary(data.splt.aov)

Error: Block

Df Sum Sq Mean Sq F value Pr(>F)

A 2 16140 8070 21.12 1.24e-06 ***

Residuals 33 12607 382

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Error: Within

Df Sum Sq Mean Sq F value Pr(>F)

C 2 13819 6910 372.00 <2e-16 ***

A:C 4 3904 976 52.55 <2e-16 ***

Residuals 66 1226 19

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
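The two error strata in the aov() output are what distinguish a split-plot analysis: the between-block factor (A) is tested against the Block residual, whereas the within-block terms (C and A:C) are tested against the Within residual. As a rough check, the F-ratios can be rebuilt in base R from the (rounded) sums of squares printed above, so small rounding differences are to be expected. The object names are illustrative.

```r
# Mean square = sum of squares / degrees of freedom
ms <- function(ss, df) ss / df

# Values copied from summary(data.splt.aov)
ms.A      <- ms(16140, 2)    # A, between-Block stratum
ms.block  <- ms(12607, 33)   # Block residual: error term for A
ms.C      <- ms(13819, 2)    # C, within-Block stratum
ms.AC     <- ms(3904, 4)     # A:C, within-Block stratum
ms.within <- ms(1226, 66)    # Within residual: error term for C and A:C

# Each term is divided by the residual of its own stratum
F.ratios <- c(A = ms.A / ms.block,
              C = ms.C / ms.within,
              `A:C` = ms.AC / ms.within)

# Upper-tail p-value for A on 2 and 33 degrees of freedom
p.A <- pf(F.ratios[["A"]], df1 = 2, df2 = 33, lower.tail = FALSE)

round(F.ratios, 2)
```

Testing A against the Within residual instead would treat the three observations per block as independent replicates of A and overstate the evidence.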

Planned comparisons and pairwise post-hoc tests

Similar to Tutorial 7.6a, we could apply manual adjustments to separate simple main effects tests or refit the model with modified interaction terms in order to explore the main effect of one factor at different levels of the other factor. Alternatively, we could just apply different contrasts to the existing fitted model.

As with non-hierarchical models, we can incorporate alternative contrasts for the fixed effects (other than the default treatment contrasts). The random factors must be sum-to-zero contrasts in order to ensure that the model is identifiable (possible to estimate true values of the parameters).
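As a small illustration of what "alternative contrasts" means in practice, the base R sketch below prints the default treatment contrasts and the sum-to-zero contrasts for a three-level factor, and shows how a different contrast matrix can be attached to a factor before a model is (re)fitted. The factor used here is a hypothetical stand-in, not the tutorial's data.

```r
# Hypothetical three-level factor standing in for a fixed factor such as A
A <- factor(rep(1:3, each = 2))

# Default treatment contrasts: each level is compared with the first level
contr.treatment(3)

# Sum-to-zero contrasts: every column sums to zero
contr.sum(3)

# Attach alternative contrasts to the factor; a model fitted afterwards
# would use these instead of the default treatment contrasts
contrasts(A) <- contr.sum(3)
contrasts(A)
```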

Likewise, post-hoc tests such as Tukey's tests can be performed.

For this demonstration, we have the extra complication - an interaction between A and C. For this reason, we will explore comparisons in A separately for each level of C (although we could do this the other way around and explore contrasts in C for each level of A).
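To make the interaction concrete, the cell means and the effects of A within each level of C can be rebuilt from the treatment-contrast coefficients. This is a minimal base R sketch with the fixed-effect estimates typed in from the summary output above; the object names are illustrative.

```r
# Fixed-effect estimates copied from summary(data.splt.lme)
coefs <- c(`(Intercept)` = 44.39871, A2 = -8.86091, A3 = -11.61064,
           C2 = 31.17007, C3 = 38.83108,
           `A2:C2` = -15.33891, `A3:C2` = -24.89184,
           `A2:C3` = -4.50515, `A3:C3` = -30.09278)

# All nine A x C combinations and the matching design matrix
newdata <- expand.grid(A = factor(1:3), C = factor(1:3))
Xmat <- model.matrix(~A * C, data = newdata)

# Predicted cell means, with A in rows and C in columns
cell.means <- matrix(Xmat %*% coefs, nrow = 3,
                     dimnames = list(paste0("A", 1:3), paste0("C", 1:3)))

# Simple effects of A (relative to A1) within each level of C
simple.effects <- sweep(cell.means[-1, ], 2, cell.means[1, ])
round(simple.effects, 3)
```

If there were no interaction, each row of simple.effects would be constant across the columns; here the A2 and A3 differences from A1 clearly change with the level of C.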

In the absence of interactions, we could use glht() (which in this case, will perform the Tukey's test for A at the first level of C - as it is assumed in the absence of an interaction that this will be the same for all levels of C).

library(multcomp)

summary(glht(data.splt.lme, linfct = mcp(A = "Tukey")))

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

2 - 1 == 0 -8.861 4.826 -1.836 0.1578

3 - 1 == 0 -11.611 4.826 -2.406 0.0427 *

3 - 2 == 0 -2.750 4.826 -0.570 0.8362

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lme, linfct = mcp(A = "Tukey")))

Simultaneous Confidence Intervals

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.3441

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

2 - 1 == 0 -8.8609 -20.1729 2.4511

3 - 1 == 0 -11.6106 -22.9227 -0.2986

3 - 2 == 0 -2.7497 -14.0617 8.5623

In this case, you will notice a message that warns us that we specified a model with an interaction term and that the above might not be appropriate. One alternative might be to perform the Tukey's test for A marginalizing (averaging) over all the levels of C.
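Marginalizing over C amounts to averaging the simple effects of A across the levels of C with equal weights. As a rough base R illustration, the differences below are the A1 - A2 and A1 - A3 estimates within each level of C, typed in from the per-level emmeans contrasts reported further on in this section; the object names are illustrative.

```r
# Differences between levels of A within each level of C
diff.A1.A2 <- c(C1 = 8.860908, C2 = 24.199815, C3 = 13.366054)
diff.A1.A3 <- c(C1 = 11.610637, C2 = 36.502479, C3 = 41.703417)

# Equal-weight averages over the levels of C
avg.A1.A2 <- mean(diff.A1.A2)
avg.A1.A3 <- mean(diff.A1.A3)

round(c(`1 - 2` = avg.A1.A2, `1 - 3` = avg.A1.A3), 5)
```

These averages should agree (apart from sign) with the estimates from the interaction-averaged Tukey comparisons, but note that in the presence of a strong interaction a single averaged difference hides how much the effect of A varies among the levels of C.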

As an alternative to the glht() function, we can also use the emmeans() function from the package of the same name. This package computes 'estimated marginal means' and is an adaptation of the least-squares (predicted marginal) means routine popularized by SAS. For lmer models, this routine uses the Kenward-Roger (or optionally, the Satterthwaite) method of calculating degrees of freedom; for the lme model used below, the output reports the containment method. Either way, it will yield slightly different confidence intervals and p-values from glht(). Note, the emmeans package has replaced the lsmeans package.

library(multcomp)

summary(glht(data.splt.lme, linfct = mcp(A = "Tukey", interaction_average = TRUE)))

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

2 - 1 == 0 -15.476 4.607 -3.359 0.00220 **

3 - 1 == 0 -29.939 4.607 -6.499 < 0.001 ***

3 - 2 == 0 -14.463 4.607 -3.139 0.00497 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lme, linfct = mcp(A = "Tukey", interaction_average = TRUE)))

Simultaneous Confidence Intervals

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.3431

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

2 - 1 == 0 -15.4756 -26.2702 -4.6810

3 - 1 == 0 -29.9388 -40.7334 -19.1443

3 - 2 == 0 -14.4633 -25.2578 -3.6687

## OR

library(emmeans)

emmeans(data.splt.lme, pairwise ~ A)

$emmeans

A emmean SE df lower.CL upper.CL

1 67.73243 3.257595 35 61.11916 74.34570

2 52.25684 3.257595 33 45.62921 58.88446

3 37.79359 3.257595 33 31.16596 44.42121

Results are averaged over the levels of: C

Degrees-of-freedom method: containment

Confidence level used: 0.95

$contrasts

contrast estimate SE df t.ratio p.value

1 - 2 15.47559 4.606934 33 3.359 0.0055

1 - 3 29.93884 4.606934 33 6.499 <.0001

2 - 3 14.46325 4.606934 33 3.139 0.0097

Results are averaged over the levels of: C

P value adjustment: tukey method for comparing a family of 3 estimates

confint(emmeans(data.splt.lme, pairwise ~ A))

$emmeans

A emmean SE df lower.CL upper.CL

1 67.73243 3.257595 35 61.11916 74.34570

2 52.25684 3.257595 33 45.62921 58.88446

3 37.79359 3.257595 33 31.16596 44.42121

Results are averaged over the levels of: C

Degrees-of-freedom method: containment

Confidence level used: 0.95

$contrasts

contrast estimate SE df lower.CL upper.CL

1 - 2 15.47559 4.606934 33 4.171122 26.78006

1 - 3 29.93884 4.606934 33 18.634374 41.24331

2 - 3 14.46325 4.606934 33 3.158782 25.76772

Results are averaged over the levels of: C

Confidence level used: 0.95

Conf-level adjustment: tukey method for comparing a family of 3 estimates

Arguably, it would be better to perform the Tukey's test for A separately for each level of C.

library(emmeans)

emmeans(data.splt.lme, pairwise ~ A | C)

$emmeans

C = 1:

A emmean SE df lower.CL upper.CL

1 44.39871 3.412303 35 37.47137 51.32606

2 35.53780 3.412303 33 28.59542 42.48019

3 32.78808 3.412303 33 25.84569 39.73046

C = 2:

A emmean SE df lower.CL upper.CL

1 75.56878 3.412303 35 68.64144 82.49612

2 51.36897 3.412303 33 44.42658 58.31135

3 39.06630 3.412303 33 32.12392 46.00868

C = 3:

A emmean SE df lower.CL upper.CL

1 83.22980 3.412303 35 76.30245 90.15714

2 69.86374 3.412303 33 62.92136 76.80613

3 41.52638 3.412303 33 34.58400 48.46876

Degrees-of-freedom method: containment

Confidence level used: 0.95

$contrasts

C = 1:

contrast estimate SE df t.ratio p.value

1 - 2 8.860908 4.825726 33 1.836 0.1737

1 - 3 11.610637 4.825726 33 2.406 0.0555

2 - 3 2.749729 4.825726 33 0.570 0.8370

C = 2:

contrast estimate SE df t.ratio p.value

1 - 2 24.199815 4.825726 33 5.015 0.0001

1 - 3 36.502479 4.825726 33 7.564 <.0001

2 - 3 12.302665 4.825726 33 2.549 0.0404

C = 3:

contrast estimate SE df t.ratio p.value

1 - 2 13.366054 4.825726 33 2.770 0.0242

1 - 3 41.703417 4.825726 33 8.642 <.0001

2 - 3 28.337363 4.825726 33 5.872 <.0001

P value adjustment: tukey method for comparing a family of 3 estimates

confint(emmeans(data.splt.lme, pairwise ~ A | C))

$emmeans

C = 1:

A emmean SE df lower.CL upper.CL

1 44.39871 3.412303 35 37.47137 51.32606

2 35.53780 3.412303 33 28.59542 42.48019

3 32.78808 3.412303 33 25.84569 39.73046

C = 2:

A emmean SE df lower.CL upper.CL

1 75.56878 3.412303 35 68.64144 82.49612

2 51.36897 3.412303 33 44.42658 58.31135

3 39.06630 3.412303 33 32.12392 46.00868

C = 3:

A emmean SE df lower.CL upper.CL

1 83.22980 3.412303 35 76.30245 90.15714

2 69.86374 3.412303 33 62.92136 76.80613

3 41.52638 3.412303 33 34.58400 48.46876

Degrees-of-freedom method: containment

Confidence level used: 0.95

$contrasts

C = 1:

contrast estimate SE df lower.CL upper.CL

1 - 2 8.860908 4.825726 33 -2.9804308 20.70225

1 - 3 11.610637 4.825726 33 -0.2307022 23.45198

2 - 3 2.749729 4.825726 33 -9.0916102 14.59107

C = 2:

contrast estimate SE df lower.CL upper.CL

1 - 2 24.199815 4.825726 33 12.3584757 36.04115

1 - 3 36.502479 4.825726 33 24.6611403 48.34382

2 - 3 12.302665 4.825726 33 0.4613257 24.14400

C = 3:

contrast estimate SE df lower.CL upper.CL

1 - 2 13.366054 4.825726 33 1.5247149 25.20739

1 - 3 41.703417 4.825726 33 29.8620777 53.54476

2 - 3 28.337363 4.825726 33 16.4960239 40.17870

Confidence level used: 0.95

Conf-level adjustment: tukey method for comparing a family of 3 estimates

## For those who like their ANOVA

test(emmeans(data.splt.lme, specs = ~A | C), joint = TRUE)

C df1 df2 F.ratio p.value

1 3 35 123.363 <.0001

2 3 NA 282.713 <.0001

3 3 NA 387.404 <.0001

## OR

library(multcomp)

summary(glht(data.splt.lme, linfct = lsm(tukey ~ A | C)))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 8.861 4.826 1.836 0.1737

1 - 3 == 0 11.611 4.826 2.406 0.0556 .

2 - 3 == 0 2.750 4.826 0.570 0.8370

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 24.200 4.826 5.015 <0.001 ***

1 - 3 == 0 36.502 4.826 7.564 <0.001 ***

2 - 3 == 0 12.303 4.826 2.549 0.0403 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 3`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 13.366 4.826 2.770 0.0242 *

1 - 3 == 0 41.703 4.826 8.642 <0.001 ***

2 - 3 == 0 28.337 4.826 5.872 <0.001 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

## OR

summary(glht(data.splt.lme, linfct = lsm(pairwise ~ A | C)))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 8.861 4.826 1.836 0.1737

1 - 3 == 0 11.611 4.826 2.406 0.0556 .

2 - 3 == 0 2.750 4.826 0.570 0.8370

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 24.200 4.826 5.015 <0.001 ***

1 - 3 == 0 36.502 4.826 7.564 <0.001 ***

2 - 3 == 0 12.303 4.826 2.549 0.0404 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 3`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 13.366 4.826 2.770 0.0241 *

1 - 3 == 0 41.703 4.826 8.642 <0.001 ***

2 - 3 == 0 28.337 4.826 5.872 <0.001 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lme, linfct = lsm(tukey ~ A | C)))

$`C = 1`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.4539

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 8.8609 -2.9809 20.7028

1 - 3 == 0 11.6106 -0.2312 23.4525

2 - 3 == 0 2.7497 -9.0921 14.5916

$`C = 2`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.4539

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 24.1998 12.3580 36.0416

1 - 3 == 0 36.5025 24.6607 48.3442

2 - 3 == 0 12.3027 0.4609 24.1444

$`C = 3`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.4532

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 13.3661 1.5278 25.2043

1 - 3 == 0 41.7034 29.8651 53.5417

2 - 3 == 0 28.3374 16.4991 40.1757

Comp1: Group 2 vs Group 3

Comp2: Group 1 vs (Group 2,3)

## Planned contrasts 1.(A2 vs A3) 2.(A1 vs A2,A3)

contr.A = cbind(c(0, 1, -1), c(1, -0.5, -0.5))

crossprod(contr.A)

[,1] [,2]

[1,] 2 0.0

[2,] 0 1.5
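The cross-product above does two jobs: the zero off-diagonal elements confirm that the two planned contrasts are orthogonal, and the diagonal elements (the sums of squared contrast coefficients) determine the standard error of each contrast. The base R sketch below illustrates this, assuming the three cell means being compared are independent and share the standard error of 3.412303 that emmeans reports for each A by C cell; se.mean and se.contr are illustrative names.

```r
# Planned contrasts among A1, A2 and A3 (one contrast per column)
contr.A <- cbind(c(0, 1, -1), c(1, -0.5, -0.5))

# Zero off-diagonals in the cross-product indicate orthogonal contrasts
cp <- crossprod(contr.A)

# Standard error of each cell mean, copied from the emmeans output
se.mean <- 3.412303

# For independent means with a common SE:
#   SE(contrast) = SE(mean) * sqrt(sum of squared contrast coefficients)
se.contr <- se.mean * sqrt(diag(cp))
round(se.contr, 4)
```

The independence assumption is reasonable here because, within a level of C, the three levels of A are represented by different blocks.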

emmeans(data.splt.lme, spec = "A", by = "C", contr = list(A = contr.A))

$emmeans

C = 1:

A emmean SE df lower.CL upper.CL

1 44.39871 3.412303 35 37.47137 51.32606

2 35.53780 3.412303 33 28.59542 42.48019

3 32.78808 3.412303 33 25.84569 39.73046

C = 2:

A emmean SE df lower.CL upper.CL

1 75.56878 3.412303 35 68.64144 82.49612

2 51.36897 3.412303 33 44.42658 58.31135

3 39.06630 3.412303 33 32.12392 46.00868

C = 3:

A emmean SE df lower.CL upper.CL

1 83.22980 3.412303 35 76.30245 90.15714

2 69.86374 3.412303 33 62.92136 76.80613

3 41.52638 3.412303 33 34.58400 48.46876

Degrees-of-freedom method: containment

Confidence level used: 0.95

$contrasts

C = 1:

contrast estimate SE df t.ratio p.value

A.1 2.749729 4.825726 33 0.570 0.5727

A.2 10.235772 4.179201 33 2.449 0.0198

C = 2:

contrast estimate SE df t.ratio p.value

A.1 12.302665 4.825726 33 2.549 0.0156

A.2 30.351147 4.179201 33 7.262 <.0001

C = 3:

contrast estimate SE df t.ratio p.value

A.1 28.337363 4.825726 33 5.872 <.0001

A.2 27.534735 4.179201 33 6.589 <.0001

contrast(emmeans(data.splt.lme, ~A | C), method = list(A = contr.A))

C = 1:

contrast estimate SE df t.ratio p.value

A.1 2.749729 4.825726 33 0.570 0.5727

A.2 10.235772 4.179201 33 2.449 0.0198

C = 2:

contrast estimate SE df t.ratio p.value

A.1 12.302665 4.825726 33 2.549 0.0156

A.2 30.351147 4.179201 33 7.262 <.0001

C = 3:

contrast estimate SE df t.ratio p.value

A.1 28.337363 4.825726 33 5.872 <.0001

A.2 27.534735 4.179201 33 6.589 <.0001

confint(contrast(emmeans(data.splt.lme, ~A | C), method = list(A = contr.A)))

C = 1:

contrast estimate SE df lower.CL upper.CL

A.1 2.749729 4.825726 33 -7.068284 12.56774

A.2 10.235772 4.179201 33 1.733124 18.73842

C = 2:

contrast estimate SE df lower.CL upper.CL

A.1 12.302665 4.825726 33 2.484652 22.12068

A.2 30.351147 4.179201 33 21.848499 38.85380

C = 3:

contrast estimate SE df lower.CL upper.CL

A.1 28.337363 4.825726 33 18.519350 38.15538

A.2 27.534735 4.179201 33 19.032087 36.03738

Confidence level used: 0.95

## Using glht

summary(glht(data.splt.lme, linfct = lsm("A", by = "C", contr = list(A = contr.A))), test = adjusted("none"))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

A.1 == 0 2.750 4.826 0.570 0.5727

A.2 == 0 10.236 4.179 2.449 0.0198 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- none method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

A.1 == 0 12.303 4.826 2.549 0.0156 *

A.2 == 0 30.351 4.179 7.262 2.48e-08 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- none method)

$`C = 3`

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

A.1 == 0 28.337 4.826 5.872 1.41e-06 ***

A.2 == 0 27.535 4.179 6.589 1.73e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- none method)

confint(glht(data.splt.lme, linfct = lsm("A", by = "C", contr = list(A = contr.A))), calpha = univariate_calpha())

$`C = 1`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.0345

95% confidence level

Linear Hypotheses:

Estimate lwr upr

A.1 == 0 2.7497 -7.0683 12.5677

A.2 == 0 10.2358 1.7331 18.7384

$`C = 2`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.0345

95% confidence level

Linear Hypotheses:

Estimate lwr upr

A.1 == 0 12.3027 2.4847 22.1207

A.2 == 0 30.3511 21.8485 38.8538

$`C = 3`

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 2.0345

95% confidence level

Linear Hypotheses:

Estimate lwr upr

A.1 == 0 28.3374 18.5194 38.1554

A.2 == 0 27.5347 19.0321 36.0374

## OR manually

newdata = with(data.splt, expand.grid(A = levels(A), C = levels(C)))

Xmat = model.matrix(~A * C, newdata)

coefs = fixef(data.splt.lme)

## One block of design-matrix rows per level of C

Xmat.split = split.data.frame(Xmat, f = newdata$C)

## When estimating the confidence intervals, we will base Q on the model

## degrees of freedom (a normal approximation would instead use Q=1.96)

lapply(Xmat.split, function(x) {

    Xmat = t(t(x) %*% contr.A)  # contrast rows for this level of C

    fit = as.vector(coefs %*% t(Xmat))

    se = sqrt(diag(Xmat %*% vcov(data.splt.lme) %*% t(Xmat)))

    Q = qt(0.975, data.splt.lme$fixDF$terms["A"])  # or Q=1.96

    data.frame(fit = fit, lower = fit - Q * se, upper = fit + Q * se)

})

$`1`

fit lower upper

1 2.749729 -7.068284 12.56774

2 10.235772 1.733124 18.73842

$`2`

fit lower upper

1 12.30266 2.484652 22.12068

2 30.35115 21.848499 38.85380

$`3`

fit lower upper

1 28.33736 18.51935 38.15538

2 27.53474 19.03209 36.03738

## We could alternatively use the split contrast matrices directly in
## glht. Unfortunately, we then need to know what each row refers to...
contr.split = lapply(Xmat.split, function(x) {
    t(t(x) %*% contr.A)
})
contr.split = do.call("rbind", contr.split)
summary(glht(data.splt.lme, linfct = contr.split), test = adjusted("none"))

Simultaneous Tests for General Linear Hypotheses

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

1 == 0 2.750 4.826 0.570 0.5688

2 == 0 10.236 4.179 2.449 0.0143 *

3 == 0 12.303 4.826 2.549 0.0108 *

4 == 0 30.351 4.179 7.262 3.80e-13 ***

5 == 0 28.337 4.826 5.872 4.30e-09 ***

6 == 0 27.535 4.179 6.589 4.44e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- none method)

confint(glht(data.splt.lme, linfct = contr.split), calpha = univariate_calpha())

Simultaneous Confidence Intervals

Fit: lme.formula(fixed = y ~ A * C, data = data.splt, random = ~1 |

Block, method = "REML")

Quantile = 1.96

95% confidence level

Linear Hypotheses:

Estimate lwr upr

1 == 0 2.7497 -6.7085 12.2080

2 == 0 10.2358 2.0447 18.4269

3 == 0 12.3027 2.8444 21.7609

4 == 0 30.3511 22.1601 38.5422

5 == 0 28.3374 18.8791 37.7956

6 == 0 27.5347 19.3437 35.7258
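The intervals obtained from the raw contrast matrix are slightly narrower than those obtained via lsm() or the manual calculation. The only difference is the critical value: a t quantile (2.0345) in one case and a normal quantile (1.96) in the other. The following minimal sketch recomputes both intervals for the first contrast at C = 1 from the estimate and standard error printed above. The value df = 33 is an assumption, chosen because it reproduces the reported quantile of 2.0345.

```r
# Estimate and standard error of the first contrast at C = 1,
# copied from the output above
est <- 2.749729
se  <- 4.825726

# Critical values: t-based (df = 33 is assumed, see lead-in) and normal-based
q_t <- qt(0.975, df = 33)
q_z <- qnorm(0.975)

# 95% intervals under each critical value
ci <- rbind(t = est + c(-1, 1) * q_t * se,
            z = est + c(-1, 1) * q_z * se)
colnames(ci) <- c("lower", "upper")
print(round(ci, 4))
```

The row labelled t should agree with the lsm()-based and manual intervals, and the row labelled z with the intervals from glht() applied to contr.split.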

In the absence of an interaction, we could use glht() with mcp(A = "Tukey"). For a model that contains an interaction term, this performs Tukey's test for A at the first (reference) level of C only. If there is no interaction, these comparisons are assumed to be the same at all levels of C.

library(multcomp)
summary(glht(data.splt.lmer, linfct = mcp(A = "Tukey")))

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

2 - 1 == 0 -8.861 4.826 -1.836 0.1579

3 - 1 == 0 -11.611 4.826 -2.406 0.0427 *

3 - 2 == 0 -2.750 4.826 -0.570 0.8362

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lmer, linfct = mcp(A = "Tukey")))

Simultaneous Confidence Intervals

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Quantile = 2.3442

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

2 - 1 == 0 -8.8609 -20.1734 2.4516

3 - 1 == 0 -11.6106 -22.9231 -0.2982

3 - 2 == 0 -2.7497 -14.0622 8.5627
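To see why these comparisons refer to the first level of C, recall that under R's default treatment contrasts the main-effect coefficients of a model with an interaction are the effects of A at the reference level of the other factor. The sketch below illustrates this with simulated toy data and a plain lm() fit. The data and the omission of the Block random effect are simplifying assumptions made purely for illustration; this is not the tutorial's data set.

```r
# Toy balanced two-factor data (hypothetical, for illustration only)
set.seed(1)
toy <- expand.grid(A = factor(1:3), C = factor(1:3), rep = 1:4)
toy$y <- rnorm(nrow(toy), mean = 10 * as.integer(toy$A) * as.integer(toy$C))

# Model with an interaction, fitted with default treatment contrasts
fit <- lm(y ~ A * C, data = toy)

# Observed cell means, A in rows and C in columns
cell <- with(toy, tapply(y, list(A = A, C = C), mean))

# The 'main effect' coefficient A2 equals the A2 - A1 difference at C = 1 only
coef_A2    <- unname(coef(fit)["A2"])
diff_at_C1 <- unname(cell["2", "1"] - cell["1", "1"])
print(c(coef_A2 = coef_A2, diff_at_C1 = diff_at_C1))
```

The two printed values coincide, which is why Tukey contrasts built from the A coefficients alone describe differences among levels of A at C = 1.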

In this case, you will notice a warning that the model contains an interaction term and that the default comparisons above might therefore be inappropriate. One alternative is to perform Tukey's test for A while marginalizing (averaging) over all the levels of C.

As an alternative to the glht() function, we can also use the emmeans() function from the package of the same name. This package computes 'estimated marginal means' and is an adaptation of the least-squares (predicted marginal) means routine popularized by SAS. This routine uses the Kenward-Roger (or optionally, the Satterthwaite) method of calculating degrees of freedom and so will yield slightly different confidence intervals and p-values from glht(). Note that the emmeans package has replaced the lsmeans package.

library(multcomp)
summary(glht(data.splt.lmer, linfct = mcp(A = "Tukey", interaction_average = TRUE)))

Simultaneous Tests for General Linear Hypotheses

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error z value Pr(>|z|)

2 - 1 == 0 -15.476 4.607 -3.359 0.00238 **

3 - 1 == 0 -29.939 4.607 -6.499 < 0.001 ***

3 - 2 == 0 -14.463 4.607 -3.139 0.00468 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lmer, linfct = mcp(A = "Tukey", interaction_average = TRUE)))

Simultaneous Confidence Intervals

Multiple Comparisons of Means: Tukey Contrasts

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Quantile = 2.3434

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

2 - 1 == 0 -15.4756 -26.2713 -4.6798

3 - 1 == 0 -29.9388 -40.7346 -19.1431

3 - 2 == 0 -14.4633 -25.2590 -3.6675

## OR
library(emmeans)
emmeans(data.splt.lmer, pairwise ~ A)

$emmeans
 A   emmean       SE df lower.CL upper.CL
 1 67.73243 3.257595 33 61.10480 74.36006
 2 52.25684 3.257595 33 45.62921 58.88446
 3 37.79359 3.257595 33 31.16596 44.42121

Results are averaged over the levels of: C
Degrees-of-freedom method: kenward-roger
Confidence level used: 0.95

$contrasts
 contrast estimate       SE df t.ratio p.value
 1 - 2    15.47559 4.606934 33   3.359  0.0055
 1 - 3    29.93884 4.606934 33   6.499  <.0001
 2 - 3    14.46325 4.606934 33   3.139  0.0097

Results are averaged over the levels of: C
P value adjustment: tukey method for comparing a family of 3 estimates

confint(emmeans(data.splt.lmer, pairwise ~ A))

$emmeans
 A   emmean       SE df lower.CL upper.CL
 1 67.73243 3.257595 33 61.10480 74.36006
 2 52.25684 3.257595 33 45.62921 58.88446
 3 37.79359 3.257595 33 31.16596 44.42121

Results are averaged over the levels of: C
Degrees-of-freedom method: kenward-roger
Confidence level used: 0.95

$contrasts
 contrast estimate       SE df  lower.CL upper.CL
 1 - 2    15.47559 4.606934 33  4.171122 26.78006
 1 - 3    29.93884 4.606934 33 18.634374 41.24331
 2 - 3    14.46325 4.606934 33  3.158782 25.76772

Results are averaged over the levels of: C
Confidence level used: 0.95
Conf-level adjustment: tukey method for comparing a family of 3 estimates
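The phrase "averaged over the levels of C" can be checked by hand. In this balanced design, each marginal mean for A is the unweighted average of the three cell means for that level of A, one per level of C. The sketch below uses the cell means that emmeans() reports for A within each level of C in this tutorial; the object names are illustrative only.

```r
# Cell means for each A (rows) within each C (columns), taken from the
# emmeans(data.splt.lmer, pairwise ~ A | C) output
cell <- matrix(c(44.39871, 35.53780, 32.78808,   # C = 1
                 75.56878, 51.36897, 39.06630,   # C = 2
                 83.22980, 69.86374, 41.52638),  # C = 3
               nrow = 3,
               dimnames = list(paste0("A", 1:3), paste0("C", 1:3)))

# Marginal means of A: unweighted average across the levels of C
marg <- rowMeans(cell)
print(round(marg, 5))

# Pairwise differences between the marginal means
pairs <- c("1 - 2" = marg[["A1"]] - marg[["A2"]],
           "1 - 3" = marg[["A1"]] - marg[["A3"]],
           "2 - 3" = marg[["A2"]] - marg[["A3"]])
print(round(pairs, 5))
```

These values match the emmean and estimate columns above up to rounding.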

Arguably, it would be better to perform the Tukey's test for A separately for each level of C.

library(emmeans)
emmeans(data.splt.lmer, pairwise ~ A | C)

$emmeans
C = 1:
 A   emmean       SE    df lower.CL upper.CL
 1 44.39871 3.412303 39.54 37.49971 51.29772
 2 35.53780 3.412303 39.54 28.63880 42.43681
 3 32.78808 3.412303 39.54 25.88907 39.68708

C = 2:
 A   emmean       SE    df lower.CL upper.CL
 1 75.56878 3.412303 39.54 68.66977 82.46779
 2 51.36897 3.412303 39.54 44.46996 58.26797
 3 39.06630 3.412303 39.54 32.16729 45.96531

C = 3:
 A   emmean       SE    df lower.CL upper.CL
 1 83.22980 3.412303 39.54 76.33079 90.12880
 2 69.86374 3.412303 39.54 62.96474 76.76275
 3 41.52638 3.412303 39.54 34.62737 48.42539

Degrees-of-freedom method: kenward-roger
Confidence level used: 0.95

$contrasts
C = 1:
 contrast  estimate       SE    df t.ratio p.value
 1 - 2     8.860908 4.825726 39.54   1.836  0.1711
 1 - 3    11.610637 4.825726 39.54   2.406  0.0534
 2 - 3     2.749729 4.825726 39.54   0.570  0.8369

C = 2:
 contrast  estimate       SE    df t.ratio p.value
 1 - 2    24.199815 4.825726 39.54   5.015  <.0001
 1 - 3    36.502479 4.825726 39.54   7.564  <.0001
 2 - 3    12.302665 4.825726 39.54   2.549  0.0384

C = 3:
 contrast  estimate       SE    df t.ratio p.value
 1 - 2    13.366054 4.825726 39.54   2.770  0.0226
 1 - 3    41.703417 4.825726 39.54   8.642  <.0001
 2 - 3    28.337363 4.825726 39.54   5.872  <.0001

P value adjustment: tukey method for comparing a family of 3 estimates

confint(emmeans(data.splt.lmer, pairwise ~ A | C))

$emmeans
C = 1:
 A   emmean       SE    df lower.CL upper.CL
 1 44.39871 3.412303 39.54 37.49971 51.29772
 2 35.53780 3.412303 39.54 28.63880 42.43681
 3 32.78808 3.412303 39.54 25.88907 39.68708

C = 2:
 A   emmean       SE    df lower.CL upper.CL
 1 75.56878 3.412303 39.54 68.66977 82.46779
 2 51.36897 3.412303 39.54 44.46996 58.26797
 3 39.06630 3.412303 39.54 32.16729 45.96531

C = 3:
 A   emmean       SE    df lower.CL upper.CL
 1 83.22980 3.412303 39.54 76.33079 90.12880
 2 69.86374 3.412303 39.54 62.96474 76.76275
 3 41.52638 3.412303 39.54 34.62737 48.42539

Degrees-of-freedom method: kenward-roger
Confidence level used: 0.95

$contrasts
C = 1:
 contrast  estimate       SE    df   lower.CL upper.CL
 1 - 2     8.860908 4.825726 39.54 -2.8897130 20.61153
 1 - 3    11.610637 4.825726 39.54 -0.1399843 23.36126
 2 - 3     2.749729 4.825726 39.54 -9.0008924 14.50035

C = 2:
 contrast  estimate       SE    df   lower.CL upper.CL
 1 - 2    24.199815 4.825726 39.54 12.4491936 35.95044
 1 - 3    36.502479 4.825726 39.54 24.7518582 48.25310
 2 - 3    12.302665 4.825726 39.54  0.5520436 24.05329

C = 3:
 contrast  estimate       SE    df   lower.CL upper.CL
 1 - 2    13.366054 4.825726 39.54  1.6154328 25.11667
 1 - 3    41.703417 4.825726 39.54 29.9527956 53.45404
 2 - 3    28.337363 4.825726 39.54 16.5867418 40.08798

Confidence level used: 0.95
Conf-level adjustment: tukey method for comparing a family of 3 estimates
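The Tukey adjustment reported above can be approximated by hand from the studentized range distribution. The sketch below takes the estimate, standard error and Kenward-Roger degrees of freedom of the "1 - 3" comparison at C = 1 from the output and applies base R's ptukey() and qtukey(). It assumes the adjustment is the usual one for a family of three means (the test statistic scaled by the square root of 2); it is an illustration, not a reproduction of the package internals.

```r
# Values copied from the 'C = 1', '1 - 3' row of the output above
est <- 11.610637
se  <- 4.825726
df  <- 39.54
k   <- 3          # number of means in the family

# Tukey-adjusted p-value: studentized range uses sqrt(2) * |t|
t_ratio <- est / se
p_tukey <- ptukey(sqrt(2) * abs(t_ratio), nmeans = k, df = df,
                  lower.tail = FALSE)

# Tukey-adjusted 95% interval: critical value is qtukey / sqrt(2)
crit <- qtukey(0.95, nmeans = k, df = df) / sqrt(2)
ci   <- est + c(-1, 1) * crit * se

print(round(c(t.ratio = t_ratio, p.value = p_tukey,
              lower.CL = ci[1], upper.CL = ci[2]), 4))
```

The result should be close to the p-value and confidence limits that emmeans() reports for this comparison.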

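## For those who like their ANOVA
## (note: with df1 = 3, this jointly tests whether all three A means within
## each level of C are zero)
test(emmeans(data.splt.lmer, specs = ~A | C), joint = TRUE)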

 C df1   df2 F.ratio p.value
 1   3 39.54 123.363  <.0001
 2   3 39.54 282.713  <.0001
 3   3 39.54 387.404  <.0001

## OR
library(multcomp)
summary(glht(data.splt.lmer, linfct = lsm(tukey ~ A | C)))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 8.861 4.826 1.836 0.1713

1 - 3 == 0 11.611 4.826 2.406 0.0536 .

2 - 3 == 0 2.750 4.826 0.570 0.8369

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 24.200 4.826 5.015 <0.001 ***

1 - 3 == 0 36.502 4.826 7.564 <0.001 ***

2 - 3 == 0 12.303 4.826 2.549 0.0385 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 3`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 13.366 4.826 2.770 0.0227 *

1 - 3 == 0 41.703 4.826 8.642 <0.001 ***

2 - 3 == 0 28.337 4.826 5.872 <0.001 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

## OR
summary(glht(data.splt.lmer, linfct = lsm(pairwise ~ A | C)))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 8.861 4.826 1.836 0.1713

1 - 3 == 0 11.611 4.826 2.406 0.0534 .

2 - 3 == 0 2.750 4.826 0.570 0.8369

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 24.200 4.826 5.015 <1e-04 ***

1 - 3 == 0 36.502 4.826 7.564 <1e-04 ***

2 - 3 == 0 12.303 4.826 2.549 0.0386 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

$`C = 3`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

1 - 2 == 0 13.366 4.826 2.770 0.0228 *

1 - 3 == 0 41.703 4.826 8.642 <0.001 ***

2 - 3 == 0 28.337 4.826 5.872 <0.001 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- single-step method)

confint(glht(data.splt.lmer, linfct = lsm(tukey ~ A | C)))

$`C = 1`

Simultaneous Confidence Intervals

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Quantile = 2.4368

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 8.8609 -2.8986 20.6205

1 - 3 == 0 11.6106 -0.1489 23.3702

2 - 3 == 0 2.7497 -9.0098 14.5093

$`C = 2`

Simultaneous Confidence Intervals

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Quantile = 2.4368

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 24.1998 12.4404 35.9592

1 - 3 == 0 36.5025 24.7431 48.2619

2 - 3 == 0 12.3027 0.5433 24.0621

$`C = 3`

Simultaneous Confidence Intervals

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Quantile = 2.4368

95% family-wise confidence level

Linear Hypotheses:

Estimate lwr upr

1 - 2 == 0 13.3661 1.6066 25.1255

1 - 3 == 0 41.7034 29.9440 53.4628

2 - 3 == 0 28.3374 16.5780 40.0968

Comp1: Group 2 vs Group 3

Comp2: Group 1 vs (Group 2,3)

## Planned contrasts 1.(A2 vs A3) 2.(A1 vs A2,A3)
contr.A = cbind(c(0, 1, -1), c(1, -0.5, -0.5))
crossprod(contr.A)

     [,1] [,2]
[1,]    2  0.0
[2,]    0  1.5
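The crossprod() call is an orthogonality check: the off-diagonal entries of the cross-product matrix are the dot products between different contrasts, so zeros there mean the planned contrasts are orthogonal. The sketch below repeats that check and then applies the contrast coefficients to the C = 1 cell means that emmeans() reports in this tutorial, to show what each contrast estimates. Object names other than contr.A are illustrative only.

```r
# Planned contrasts: column 1 = A2 vs A3, column 2 = A1 vs mean(A2, A3)
contr.A <- cbind(c(0, 1, -1), c(1, -0.5, -0.5))

# Off-diagonal elements of the cross-product are dot products between contrasts
cp <- crossprod(contr.A)
orthogonal <- all(abs(cp[upper.tri(cp)]) < 1e-12)

# Each set of contrast coefficients should sum to zero
sums_to_zero <- all(abs(colSums(contr.A)) < 1e-12)

# Apply the contrasts to the cell means of A at C = 1 (from the emmeans output)
means_C1 <- c(44.39871, 35.53780, 32.78808)
est <- drop(t(contr.A) %*% means_C1)

print(list(orthogonal = orthogonal, sums_to_zero = sums_to_zero,
           estimates = round(est, 5)))
```

The two estimates correspond to the A.1 and A.2 contrasts reported for C = 1.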

emmeans(data.splt.lmer, spec = "A", by = "C", contr = list(A = contr.A))

$emmeans
C = 1:
 A   emmean       SE    df lower.CL upper.CL
 1 44.39871 3.412303 39.54 37.49971 51.29772
 2 35.53780 3.412303 39.54 28.63880 42.43681
 3 32.78808 3.412303 39.54 25.88907 39.68708

C = 2:
 A   emmean       SE    df lower.CL upper.CL
 1 75.56878 3.412303 39.54 68.66977 82.46779
 2 51.36897 3.412303 39.54 44.46996 58.26797
 3 39.06630 3.412303 39.54 32.16729 45.96531

C = 3:
 A   emmean       SE    df lower.CL upper.CL
 1 83.22980 3.412303 39.54 76.33079 90.12880
 2 69.86374 3.412303 39.54 62.96474 76.76275
 3 41.52638 3.412303 39.54 34.62737 48.42539

Degrees-of-freedom method: kenward-roger
Confidence level used: 0.95

$contrasts
C = 1:
 contrast  estimate       SE    df t.ratio p.value
 A.1       2.749729 4.825726 39.54   0.570  0.5720
 A.2      10.235772 4.179201 39.54   2.449  0.0188

C = 2:
 contrast  estimate       SE    df t.ratio p.value
 A.1      12.302665 4.825726 39.54   2.549  0.0148
 A.2      30.351147 4.179201 39.54   7.262  <.0001

C = 3:
 contrast  estimate       SE    df t.ratio p.value
 A.1      28.337363 4.825726 39.54   5.872  <.0001
 A.2      27.534735 4.179201 39.54   6.589  <.0001

contrast(emmeans(data.splt.lmer, ~A | C), method = list(A = contr.A))

C = 1:
 contrast  estimate       SE    df t.ratio p.value
 A.1       2.749729 4.825726 39.54   0.570  0.5720
 A.2      10.235772 4.179201 39.54   2.449  0.0188

C = 2:
 contrast  estimate       SE    df t.ratio p.value
 A.1      12.302665 4.825726 39.54   2.549  0.0148
 A.2      30.351147 4.179201 39.54   7.262  <.0001

C = 3:
 contrast  estimate       SE    df t.ratio p.value
 A.1      28.337363 4.825726 39.54   5.872  <.0001
 A.2      27.534735 4.179201 39.54   6.589  <.0001

confint(contrast(emmeans(data.splt.lmer, ~A | C), method = list(A = contr.A)))

C = 1:
 contrast  estimate       SE    df  lower.CL upper.CL
 A.1       2.749729 4.825726 39.54 -7.006940 12.50640
 A.2      10.235772 4.179201 39.54  1.786249 18.68530

C = 2:
 contrast  estimate       SE    df  lower.CL upper.CL
 A.1      12.302665 4.825726 39.54  2.545996 22.05933
 A.2      30.351147 4.179201 39.54 21.901624 38.80067

C = 3:
 contrast  estimate       SE    df  lower.CL upper.CL
 A.1      28.337363 4.825726 39.54 18.580694 38.09403
 A.2      27.534735 4.179201 39.54 19.085212 35.98426

Confidence level used: 0.95

## Using glht
summary(glht(data.splt.lmer, linfct = lsm("A", by = "C", contr = list(A = contr.A))), test = adjusted("none"))

$`C = 1`

Simultaneous Tests for General Linear Hypotheses

Fit: lme4::lmer(formula = y ~ A * C + (1 | Block), data = data.splt,

REML = TRUE)

Linear Hypotheses:

Estimate Std. Error t value Pr(>|t|)

A.1 == 0 2.750 4.826 0.570 0.5721

A.2 == 0 10.236 4.179 2.449 0.0189 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Adjusted p values reported -- none method)

$`C = 2`

Simultaneous Tests for General Linear Hypotheses