Tutorial 10.6b - Poisson regression and log-linear models (Bayesian)

12 Sep 2016

Overview

Whilst in many instances count data can be approximated reasonably well by a normal distribution (particularly if the counts are all above zero and the mean count is greater than about 20), more typically, when count data are modelled via a normal distribution, certain undesirable characteristics arise that are a consequence of the nature of discrete, non-negative data.

- Expected (predicted) values and confidence bands less than zero are illogical, yet these are entirely possible from a normal distribution

- The distribution of count data is often skewed when the mean is low (in part because the distribution is truncated on the left by zero), and the variance usually increases with increasing mean (variance is typically proportional to the mean in count data). By contrast, the Gaussian (normal) distribution assumes that mean and variance are unrelated, and thus estimates (particularly of standard errors) might well be inaccurate.

Poisson regression is a type of generalized linear model (GLM) in which a non-negative integer (natural number) response is modelled against a linear predictor via a specific link function. The linear predictor is typically a linear combination of effects parameters (e.g. $\beta_0 + \beta_1 x$). The role of the link function is to transform the expected values of the response $y$ (which are on the scale $(0,\infty)$, as is the Poisson distribution from which expectations are drawn) onto the scale of the linear predictor, $(-\infty,\infty)$.

As implied in the name of this group of analyses, a Poisson rather than Gaussian (normal) distribution is used to represent the errors (residuals). Like count data (number of individuals, species etc.), the Poisson distribution encompasses non-negative integers and is bounded by zero at one end. Consequently, the degree of variability is directly related to the expected value (equivalent to the mean of a Gaussian distribution). Put differently, the variance is a function of the mean. Repeated observations from a Poisson distribution located close to zero will yield a much smaller spread of observations than will samples drawn from a Poisson distribution located a greater distance from zero. In the Poisson distribution, the variance has a 1:1 relationship with the mean.

The canonical link function for the Poisson distribution is a log-link function.
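A minimal base-R sketch of the log link and its inverse, using the `poisson()` family object from the **stats** package. The values in `eta` are arbitrary illustrations on the linear-predictor scale:

```r
# The poisson() family object carries its canonical link and the inverse link
fam <- poisson()
fam$link                   # name of the canonical link ("log")

eta <- c(-2, 0, 1.5, 3)    # arbitrary values on the linear-predictor scale (-Inf, Inf)
mu  <- fam$linkinv(eta)    # inverse link, exp(eta): always > 0
mu
fam$linkfun(mu)            # link, log(mu): maps back onto the linear-predictor scale
```

Whatever value the linear predictor takes, the inverse link returns a strictly positive expected count.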

Whilst the expectation that mean = variance ($\mu=\sigma^2$) is broadly compatible with actual count data (variance increases at the same rate as the mean), under certain circumstances this might not be the case. For example, when there are other unmeasured influences on the response variable, the distribution of counts might be somewhat clumped, which can result in higher than expected variability (that is, $\sigma^2\gt\mu$): the variance increases more rapidly than does the mean. This is referred to as overdispersion. The degree to which the variability is greater than the mean (and thus the expected degree of variability) is called dispersion. Effectively, the Poisson distribution has a dispersion parameter (or scaling factor) of 1.
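To make the mean-variance idea concrete, the sketch below (base R; the sample size, mean of 5 and Gamma shape of 1 are arbitrary choices for illustration) compares the variance-to-mean ratio of pure Poisson counts with "clumped" counts whose expected value varies among observations because of an unmeasured influence:

```r
set.seed(1)
n <- 10000
# Pure Poisson counts: the variance should be close to the mean
y.pois <- rpois(n, lambda = 5)
# Clumped counts: each observation's expected count is itself drawn from a
# Gamma distribution with mean 5 (shape = 1, rate = 1/5) before the Poisson draw
lambda.i  <- rgamma(n, shape = 1, rate = 1/5)
y.clumped <- rpois(n, lambda = lambda.i)

# Variance-to-mean ratio (dispersion) of each sample
disp.pois    <- var(y.pois) / mean(y.pois)
disp.clumped <- var(y.clumped) / mean(y.clumped)
c(poisson = disp.pois, clumped = disp.clumped)
```

The first ratio should sit near 1, whereas the clumped counts should give a ratio well above 1 (in theory $1 + 5/1 = 6$ for these settings).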

It turns out that overdispersion is very common for count data and, when ignored, typically leads to underestimated variability and standard errors, and thus deflated p-values. There are a number of ways of overcoming this limitation, the effectiveness of which depends on the causes of overdispersion.

- Quasi-Poisson models - these introduce the dispersion parameter ($\phi$) into the model.

  This approach does not utilize an underlying error distribution to calculate the maximum likelihood (there is no quasi-Poisson distribution). Instead, if the Newton-Raphson iteratively reweighted least squares algorithm is applied using a direct specification of the relationship between mean and variance ($var(y)=\phi\mu$), the estimates of the regression coefficients are identical to the maximum likelihood estimates from the Poisson model. This is analogous to fitting ordinary least squares on symmetrical, yet not normally distributed, data: the parameter estimates are the same, however they won't necessarily be as efficient. The standard errors of the coefficients are then calculated by multiplying the Poisson model coefficient standard errors by $\sqrt{\phi}$.

  Unfortunately, because the quasi-Poisson model is not estimated via maximum likelihood, properties such as AIC and log-likelihood cannot be derived. Consequently, quasi-Poisson and Poisson model fits cannot be compared via either AIC or likelihood ratio tests (nor can they be compared via deviance, as quasi-Poisson and Poisson models have the same residual deviance). That said, a quasi-log-likelihood can be obtained by dividing the log-likelihood from the Poisson model by the dispersion (scale) factor.

- Negative binomial model - technically, the negative binomial distribution is a probability distribution for the number of successes before a specified number of failures.

  However, the negative binomial can also be defined (parameterized) in terms of a mean ($\mu$) and scale factor ($\omega$),

  $$p(y_i)=\frac{\Gamma(y_i+\omega)}{\Gamma(\omega)y_i!}\times\frac{\mu_i^{y_i}\omega^\omega}{(\mu_i+\omega)^{y_i+\omega}}$$

  where the expected values of $y_i$ (the means) are $\mu_i$ and the variance is $var(y_i)=\mu_i+\frac{\mu_i^2}{\omega}$.

  In this way, the negative binomial is a two-stage hierarchical process in which the response is modelled against a Poisson distribution whose expected count is in turn modelled by a Gamma distribution with a mean of $\mu$ and a constant shape parameter (the scale factor $\omega$).

  Strictly, the negative binomial is not an exponential family distribution (unless $\omega$ is fixed as a constant), and thus negative binomial models cannot be fit via the usual GLM iteratively reweighted least squares algorithm. Instead, estimates of the regression parameters along with the scale factor ($\omega$) are obtained via maximum likelihood.

  The negative binomial model is useful for accommodating overdispersion when it is likely caused by clumping (due to the influence of other unmeasured factors) within the response.

- Zero-inflated Poisson model - overdispersion can also be caused by the presence of a greater number of zeros than would otherwise be expected for a Poisson distribution.

  There are potentially two sources of zero counts: genuine zeros and false zeros. Firstly, there may genuinely be no individuals present; these are the zeros expected by a Poisson distribution. Secondly, individuals may have been present yet not detected, or their presence may not even have been possible. These are false zeros and lead to zero-inflated data (data with more zeros than expected).

  For example, the number of joeys accompanying an adult koala could be zero because the koala has no offspring (true zero) or because the koala is male or infertile (both of which would be examples of false zeros). Similarly, zero counts of the number of individuals in a transect are due either to the absence of individuals or the inability of the observer to detect them. Whilst in the former example the latent variable representing false zeros (sex or infertility) can be identified and those individuals removed prior to analysis, this is not the case for the latter example. That is, we cannot easily partition which counts of zero are due to detection issues and which are a true indication of the natural state.

  Consistent with these two sources of zeros, zero-inflated models combine a binary logistic regression model (that models count membership according to a latent variable representing observations that can only be zeros - undetectable individuals or male koalas) with a Poisson regression (that models count membership according to a latent variable representing observations whose values could be 0 or any positive integer - fertile female koalas).
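The mean/scale-factor form of the negative binomial density (the version whose denominator exponent is $y_i+\omega$) can be checked numerically against R's built-in `dnbinom()`, which accepts the same parameterization through its `size` ($=\omega$) and `mu` ($=\mu$) arguments. A minimal sketch; `dnb.mu` is a hypothetical helper name and the values of `mu` and `omega` are arbitrary:

```r
# Negative binomial density in the mean (mu) / scale factor (omega) form,
# computed on the log scale for numerical stability
dnb.mu <- function(y, mu, omega) {
  exp(lgamma(y + omega) - lgamma(omega) - lgamma(y + 1) +
        y * log(mu) + omega * log(omega) - (y + omega) * log(mu + omega))
}

mu <- 3; omega <- 2
y <- 0:30
p.formula <- dnb.mu(y, mu, omega)
p.stats   <- dnbinom(y, size = omega, mu = mu)   # R's mean/size parameterization
all.equal(p.formula, p.stats)

# Mean and variance, summing over (effectively) the whole support
yy <- 0:2000
p  <- dnbinom(yy, size = omega, mu = mu)
c(mean = sum(yy * p), var = sum((yy - mu)^2 * p), var.theory = mu + mu^2 / omega)
```

The simulated variance should match $\mu + \mu^2/\omega$ (here $3 + 9/2 = 7.5$), which is always larger than the Poisson variance $\mu$.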

Summary of important equations

Many ecologists are repulsed or frightened by statistical formulae. In part this is due to the use of Greek letters to represent concepts, constants and functions with the assumption that the readers are familiar with their meaning. Hence, the issue is largely that many ecologists are familiar with certain statistical concepts, yet are not overly familiar with the notation used to represent those concepts.

As a command-driven (scripting) language, R exposes users to a rich variety of statistical opportunities. Whilst many of the common procedures are wrapped into simple-to-use functions (and so all of the calculations etc. underlying a GLM need not be performed by hand by the user), not all procedures have been packaged into functions. For such cases, it is useful to have the formulas handy.

Hence, in this section I am going to summarize and compare equations for common procedures associated with Poisson and Negative Binomial models. Feel free to skip this section until it is useful to you.

| | Poisson | Negative Binomial (p,r) |
|---|---|---|
| Density function | $f(y\mid\lambda)=\frac{\lambda^{y}e^{-\lambda}}{y!}$ | $f(y\mid r,p)=\frac{\Gamma(y+r)}{\Gamma(r)\Gamma(y+1)}p^r(1-p)^y$ |
| Expected value | $E(Y)=\mu=\lambda$ | $E(Y)=\mu=\frac{r(1-p)}{p}$ |
| Variance | $var(Y)=\lambda$ | $var(Y)=\frac{r(1-p)}{p^2}$ |
| log-likelihood | $\mathcal{LL}(\lambda\mid y) = \sum\limits^n_{i=1}y_i log(\lambda_i)-\lambda_i - log\Gamma(y_i+1)$ | $\mathcal{LL}(r,p\mid y) = \sum\limits^n_{i=1}log\Gamma(y_i + r) - log\Gamma(r) - log\Gamma(y_i + 1) + r\,log(p) + y_i log(1-p)$ |
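A quick numerical check of the log-likelihood, mean and variance formulas in the table above, in base R. The counts and the values of $\lambda$, $r$ and $p$ are arbitrary illustrations; R's `dnbinom(y, size = r, prob = p)` uses the same $(p,r)$ form as the table:

```r
set.seed(2)
y      <- rpois(25, lambda = 4)       # arbitrary example counts
lambda <- rep(4, length(y))           # Poisson mean for each observation
r <- 3; p <- 0.4                      # arbitrary negative binomial (p, r) values

# Log-likelihoods written out from the table
ll.pois <- sum(y * log(lambda) - lambda - lgamma(y + 1))
ll.nb   <- sum(lgamma(y + r) - lgamma(r) - lgamma(y + 1) + r * log(p) + y * log(1 - p))

# Compare with R's built-in densities
c(ll.pois, sum(dpois(y, lambda, log = TRUE)))
c(ll.nb,   sum(dnbinom(y, size = r, prob = p, log = TRUE)))

# Mean r(1-p)/p and variance r(1-p)/p^2 of the (p, r) form
yy <- 0:1000
pr <- dnbinom(yy, size = r, prob = p)
m  <- sum(yy * pr)
v  <- sum((yy - m)^2 * pr)
c(mean = m, theory = r * (1 - p) / p)
c(var = v, theory = r * (1 - p) / p^2)
```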

| | Negative Binomial (a,b) |
|---|---|
| Density function | $f(y \mid a,b) = \frac{\Gamma(y+a)}{\Gamma(a)\Gamma(y+1)}\left(\frac{b}{b+1}\right)^{a}\left(\frac{1}{b+1}\right)^{y}$ |
| Expected value | $E(Y)=\mu=\frac{a}{b}$ |
| Variance | $var(Y)=\frac{a(b+1)}{b^2}$ |
| log-likelihood | $\mathcal{LL}(a,b\mid y)=\sum\limits^n_{i=1} log\Gamma(y_i + a) - log\Gamma(y_i+1) - log\Gamma(a) + a(log(b_i) - log(b_i+1)) - y_i\,log(b_i+1)$ |
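The $(a,b)$ form is the Gamma-Poisson mixture: if $\lambda \sim \text{Gamma}(a, b)$ (shape $a$, rate $b$) and $Y\mid\lambda \sim \text{Pois}(\lambda)$, then $Y$ is negative binomial with `size = a` and `prob = b/(b+1)` in R's parameterization. The sketch below checks the density exactly and the moments by simulation; the values of `a` and `b` are arbitrary:

```r
a <- 2.5; b <- 0.8                      # arbitrary shape (a) and rate (b) values
y <- 0:40
# Density from the table, on the log scale (matches the log-likelihood terms)
f.ab <- exp(lgamma(y + a) - lgamma(a) - lgamma(y + 1) +
              a * (log(b) - log(b + 1)) - y * log(b + 1))
# Same distribution in R's (size, prob) form
all.equal(f.ab, dnbinom(y, size = a, prob = b / (b + 1)))

# Gamma-Poisson mixture: lambda ~ Gamma(shape = a, rate = b), Y | lambda ~ Poisson(lambda)
set.seed(3)
lam  <- rgamma(1e5, shape = a, rate = b)
ysim <- rpois(1e5, lam)
c(mean = mean(ysim), theory = a / b)
c(var = var(ysim), theory = a * (b + 1) / b^2)
```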

| | Zero-inflated Poisson |
|---|---|
| Density function | $p(y_i \mid \theta,\lambda) = \left\{ \begin{array}{l l} \theta + (1-\theta)\times \text{Pois}(0\mid\lambda) & \quad \text{if } y_i=0\\ (1-\theta)\times \text{Pois}(y_i\mid\lambda) & \quad \text{if } y_i>0 \end{array} \right.$ |
| Expected value | $E(Y)=\mu=\lambda\times(1-\theta)$ |
| Variance | $var(Y)=\lambda\times(1-\theta)\times(1+\theta\times\lambda)$ |
| log-likelihood | $\mathcal{LL}(\theta,\lambda\mid y) = \sum\limits_{i:\,y_i=0} log\left(\theta + (1-\theta)e^{-\lambda_i}\right) + \sum\limits_{i:\,y_i>0}\left(log(1-\theta) + y_i log(\lambda_i) - \lambda_i - log\Gamma(y_i+1)\right)$ |
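A base-R sketch of the zero-inflated Poisson density, checking that it sums to 1, that its mean and variance match $\lambda(1-\theta)$ and $\lambda(1-\theta)(1+\theta\lambda)$, and that the two-part log-likelihood agrees with summing the log density. `dzip` is a hypothetical helper (not a base R function) and the values of `theta`, `lambda` and the example counts are arbitrary:

```r
# Zero-inflated Poisson density; theta = probability of a false (structural) zero
dzip <- function(y, theta, lambda) {
  ifelse(y == 0,
         theta + (1 - theta) * dpois(0, lambda),
         (1 - theta) * dpois(y, lambda))
}

theta <- 0.3; lambda <- 4               # arbitrary example values
yy <- 0:200
p  <- dzip(yy, theta, lambda)
sum(p)                                  # should be 1
m <- sum(yy * p)
v <- sum((yy - m)^2 * p)
c(mean = m, theory = lambda * (1 - theta))
c(var = v, theory = lambda * (1 - theta) * (1 + theta * lambda))

# Log-likelihood split into zero and non-zero observations
y   <- c(0, 0, 0, 2, 5, 3, 0, 4)
is0 <- y == 0
ll.zip <- sum(is0) * log(theta + (1 - theta) * exp(-lambda)) +
  sum(log(1 - theta) + y[!is0] * log(lambda) - lambda - lgamma(y[!is0] + 1))
all.equal(ll.zip, sum(log(dzip(y, theta, lambda))))
```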

| Procedure | Equation |
|---|---|
| Residuals | $\varepsilon_i = y_i - \hat{y}_i$ |
| Pearson's Residuals | $\frac{y_i - \hat{y}_i}{\sqrt{var(y_i)}}$ |
| Residual Sums of Squares | $RSS = \sum \varepsilon_i^2$ |
| Dispersion statistic | $\phi=\frac{RSS}{df}$ (RSS of the Pearson residuals) |
| $R^2$ | $R^2 = 1-\frac{RSS_{model}}{RSS_{null}}$ |
| McFadden's $R^2$ | $R_{McF}^2 = 1 - \frac{\mathcal{LL}_{model}}{\mathcal{LL}_{null}}$ |
| Deviance | $dev = -2\mathcal{LL}$ |
| pD | $pD = \frac{var(dev)}{2}$ |
| DIC | $DIC = \overline{dev} + pD$ |
| AIC | $AIC = min(dev) + 2k$, where $k$ is the number of estimated parameters |
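Several of these procedures can be illustrated with a frequentist Poisson GLM in base R. This is a sketch on hypothetical, deliberately overdispersed data (simulated only for illustration). Note that `deviance()` on a `glm` reports the residual deviance relative to the saturated model, so the $-2\mathcal{LL}$ deviance of the table is computed from `logLik()` instead. The last lines show the quasi-Poisson relationship described earlier: standard errors equal the Poisson standard errors multiplied by $\sqrt{\phi}$.

```r
set.seed(4)
# Hypothetical overdispersed counts, simulated only for illustration
x <- runif(50, 1, 20)
y <- rnbinom(50, size = 2, mu = exp(0.6 + 0.1 * x))
fit  <- glm(y ~ x, family = poisson)
null <- glm(y ~ 1, family = poisson)

# Pearson residuals (var(y) = lambda for Poisson), their RSS and the dispersion statistic
res.p <- (y - fitted(fit)) / sqrt(fitted(fit))
RSS   <- sum(res.p^2)
phi   <- RSS / df.residual(fit)

# McFadden's R^2, deviance on the -2*LL scale and AIC by hand
R2.McF <- 1 - as.numeric(logLik(fit)) / as.numeric(logLik(null))
dev    <- -2 * as.numeric(logLik(fit))
AIC.byhand <- dev + 2 * length(coef(fit))   # k = number of estimated parameters

# Poisson SEs, SEs rescaled by the dispersion, and quasi-Poisson SEs
se.pois  <- coef(summary(fit))[, "Std. Error"]
se.scale <- coef(summary(fit, dispersion = phi))[, "Std. Error"]
se.quasi <- coef(summary(glm(y ~ x, family = quasipoisson)))[, "Std. Error"]
rbind(se.pois, se.pois * sqrt(phi), se.scale, se.quasi)
c(phi = phi, R2.McF = R2.McF, AIC.byhand = AIC.byhand, AIC = AIC(fit))
```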

Poisson regression

The following equations are provided since, in Bayesian modelling, it is occasionally necessary to directly define the log-likelihood calculations (particularly for zero-inflated models and other mixture models). Feel free to gloss over these equations initially, until such time as your models require them ;)

Density function:

$$ f(y\mid\lambda)=\frac{\lambda^{y}e^{-\lambda}}{y!}, \qquad E(Y)=Var(Y)=\lambda $$

where $\lambda$ is the mean.

Likelihood:

$$ \mathcal{L}(\lambda\mid y) = \prod\limits_{i=1}^n \frac{\lambda_i^{y_i}e^{-\lambda_i}}{y_i!} $$

Log-likelihood:

$$ \begin{array}{rcl} \mathcal{LL}(\lambda\mid y)&=&\sum\limits^n_{i=1}log(\lambda_i^{y_i}e^{-\lambda_i})-log(y_i!)\\ &=&\sum\limits^n_{i=1}log(\lambda_i^{y_i})+log(e^{-\lambda_i})-log(y_i!)\\ &=&\sum\limits^n_{i=1}y_i log(\lambda_i)-\lambda_i - log(y_i!) \end{array} $$
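To see the final log-likelihood line in action, the sketch below writes it out by hand with $\lambda_i = e^{\eta_i}$, maximises it with base R's `optim()` (BFGS, with the analytical gradient $X^T(y-\lambda)$), and compares the result with `glm()`. The data are regenerated in the same way as the simulation in the next section; `poisLL` and `poisGrad` are hypothetical helper names, and maximum likelihood is used here only as a check on the formula, not as a substitute for the Bayesian fit:

```r
# Regenerate data in the same way as the 'Scenario and Data' simulation
set.seed(8)
x <- sort(runif(n = 20, min = 1, max = 20))
y <- rpois(n = 20, lambda = exp(0.6 + 0.1 * x))
X <- cbind(1, x)                          # model matrix: intercept and slope columns

# Poisson log-likelihood by hand: sum(y*log(lambda) - lambda - log(y!)), lambda = exp(eta)
poisLL <- function(beta) {
  eta <- drop(X %*% beta)
  sum(y * eta - exp(eta) - lgamma(y + 1))
}
# Gradient of the log-likelihood: t(X) %*% (y - lambda)
poisGrad <- function(beta) drop(t(X) %*% (y - exp(drop(X %*% beta))))

# optim() minimises, so pass the negative log-likelihood and its gradient
opt <- optim(c(log(mean(y)), 0), fn = function(b) -poisLL(b),
             gr = function(b) -poisGrad(b), method = "BFGS",
             control = list(reltol = 1e-12))
fit <- glm(y ~ x, family = poisson)
rbind(by.hand = opt$par, glm = coef(fit))
c(by.hand = poisLL(opt$par), glm = as.numeric(logLik(fit)))
```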

Scenario and Data

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of log $y$ when $x$ = 0 (the intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto the scale $(0,\infty)$ by using the inverse of the log function, $e^{linear~predictor}$

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as the means when drawing random numbers from a Poisson distribution. This step adds random noise to the expected $y$ values and returns only 0's and positive integers.

```r
set.seed(8)
# The number of samples
n.x <- 20
# Create x values that are uniformly distributed over the range 1 to 20
x <- sort(runif(n = n.x, min = 1, max = 20))
mm <- model.matrix(~x)
intercept <- 0.6
slope <- 0.1
# The linear predictor
linpred <- mm %*% c(intercept, slope)
# Predicted (expected) y values on the response scale
lambda <- exp(linpred)
# Add some noise by drawing Poisson counts
y <- rpois(n = n.x, lambda = lambda)
dat <- data.frame(y, x)
```

With these sorts of data, we are primarily interested in investigating whether there is a relationship between the non-negative integer response variable and the linear predictor (linear combination of one or more continuous or categorical predictors).

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (for a non-negative integer response, a Poisson is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- the relationship between the predictors (right hand side of the regression formula) and the link-transformed expected response should be linear. A scatterplot with a smoother can be useful for identifying possible non-linearity.

- The dispersion factor is close to 1

There are at least five main potential models we could consider fitting to these data:

- Ordinary least squares regression (general linear model) - assumes normality of residuals

- Poisson regression - assumes mean=variance (dispersion=1)

- Quasi-Poisson regression - a general solution to overdispersion. Assumes variance is a function of the mean; dispersion is estimated, however likelihood-based statistics are unavailable

- Negative binomial regression - a specific solution to overdispersion caused by clumping (due to an unmeasured latent variable). Scaling factor ($\omega$) is estimated along with the regression parameters.

- Zero-inflation model - a specific solution to overdispersion caused by excessive zeros (due to an unmeasured latent variable). Mixture of binomial and Poisson models.

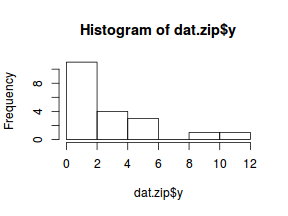

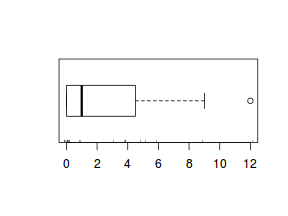

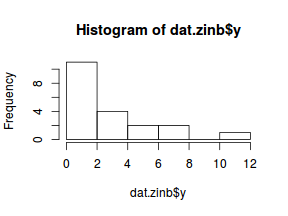

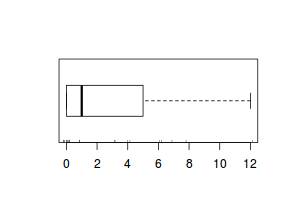

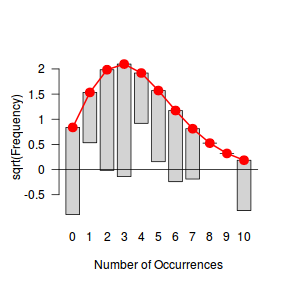

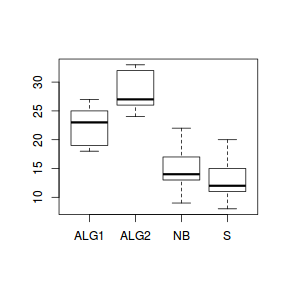

Confirm non-normality and explore clumping

When counts are all very large (not close to 0) and their ranges do not span orders of magnitude, they take on very Gaussian properties (symmetrical distribution and variance independent of the mean). Given that models based on the Gaussian distribution are better optimized and more widely recognized than Generalized Linear Models, it can be prudent to adopt Gaussian models for such data. Hence it is a good idea to first explore whether a Poisson model is likely to be more appropriate than a standard Gaussian model.

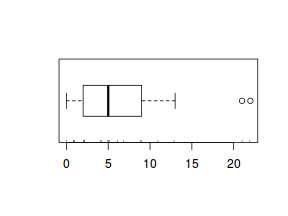

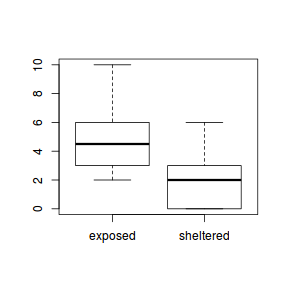

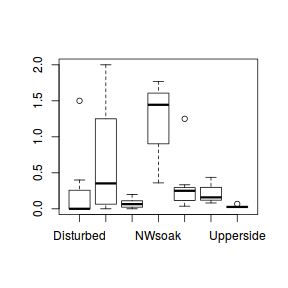

The potential for overdispersion can be explored by adding a rug to a boxplot. The rug is simply a set of tick marks on the inside of an axis at the positions corresponding to the observations. As multiple identical values result in tick marks drawn over one another, it is typically a good idea to apply a slight amount of jitter (random displacement) to the values used by the rug.

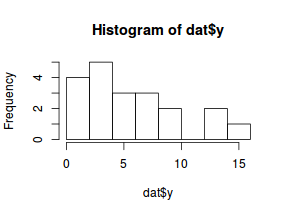

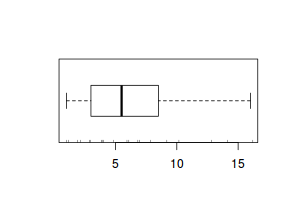

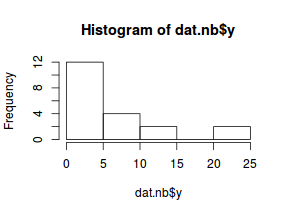

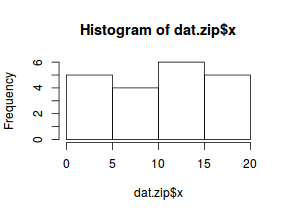

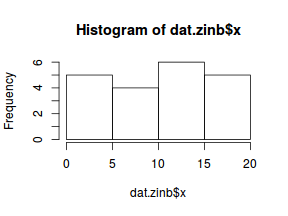

```r
hist(dat$y)
boxplot(dat$y, horizontal = TRUE)
rug(jitter(dat$y), side = 1)
```

There are definite signs of non-normality that would warrant Poisson models. The rug applied to the boxplot does not indicate a serious degree of clumping, and there appear to be few zeros. Thus overdispersion is unlikely to be an issue.

Confirm linearity

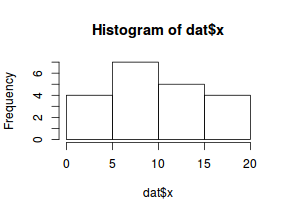

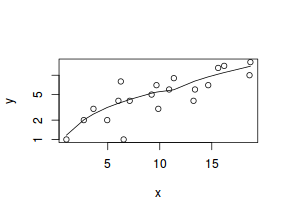

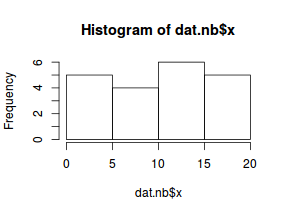

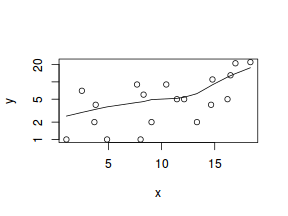

Let's now explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$).

```r
hist(dat$x)
```

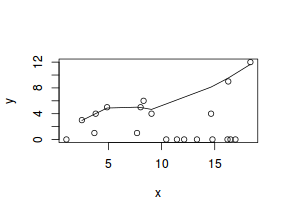

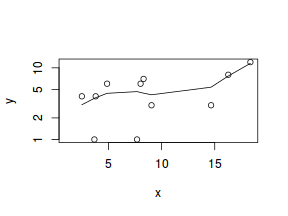

```r
# now for the scatterplot (log-scaled y axis) with a lowess smoother
plot(y ~ x, dat, log = "y")
with(dat, lines(lowess(y ~ x)))
```

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a straight line and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables

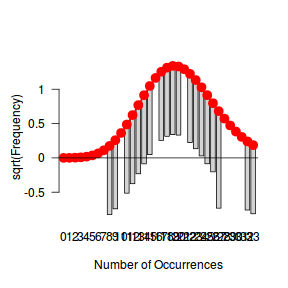

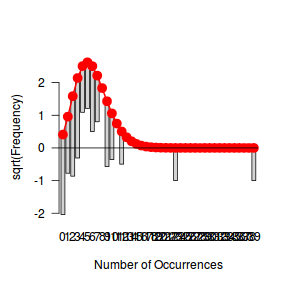

Explore zero inflation

Although we have already established that there are few zeros in the data (and thus overdispersion is unlikely to be an issue), we can also explore this by comparing the number of zeros in the data to the number of zeros that would be expected from a Poisson distribution with a mean equal to the mean count of the data.

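```r
# proportion of 0's in the data
dat.tab <- table(dat$y == 0)
dat.tab/sum(dat.tab)
```

```
FALSE 
    1 
```

```r
# proportion of 0's expected from a Poisson distribution
mu <- mean(dat$y)
cnts <- rpois(1000, mu)
dat.tab <- table(cnts == 0)
dat.tab/sum(dat.tab)
```

```
FALSE  TRUE 
0.997 0.003 
```

Conclusions: there are no zeros in the observed data, whereas a Poisson distribution with the same mean would be expected to yield a small proportion of zeros (approximately 0.003). Hence there is no evidence of zero inflation.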
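Rather than simulating 1000 Poisson draws, the expected proportion of zeros can also be computed exactly with `dpois(0, ...)`. The sketch below regenerates the data in the same way as the simulation above and shows two versions: a single Poisson with the overall mean (as in the code above), and the average of $P(Y=0)$ over the fitted means $\lambda_i$ of a frequentist Poisson GLM (an assumption swapped in here for illustration; it accounts for the mean changing with $x$):

```r
# Regenerate data in the same way as the 'Scenario and Data' simulation
set.seed(8)
x <- sort(runif(20, min = 1, max = 20))
y <- rpois(20, lambda = exp(0.6 + 0.1 * x))

obs.zero <- mean(y == 0)                         # observed proportion of zeros
# Exact P(Y = 0) for a single Poisson with the overall mean
exp.zero.mean <- dpois(0, lambda = mean(y))
# Average P(Y = 0) over the fitted means of a Poisson GLM
fit <- glm(y ~ x, family = poisson)
exp.zero.fit <- mean(dpois(0, lambda = fitted(fit)))
c(observed = obs.zero, single.mean = exp.zero.mean, fitted.means = exp.zero.fit)
```

Because $e^{-\lambda}$ is convex and the fitted means of a Poisson GLM with an intercept average to the observed mean, the second expectation can never be smaller than the first, so it is the more generous yardstick for judging zero inflation.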

Model fitting or statistical analysis

JAGS

$$ \begin{align} Y_i&\sim{}P(\lambda_i) & (\text{response distribution})\\ log(\lambda_i)&=\eta_i & (\text{link function})\\ \eta_i&=\beta_0+\beta_1 X_i & (\text{linear predictor})\\ \beta_0, \beta_1&\sim{}\mathcal{N}(0,1000^2) & (\text{diffuse Bayesian prior})\\ \end{align} $$

```r
dat.list <- with(dat, list(Y = y, X = x, N = nrow(dat)))
modelString = "
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    log(lambda[i]) <- beta0 + beta1*X[i]
  }
  # dnorm(mean, precision): precision 1.0E-06 = variance 1000^2
  beta0 ~ dnorm(0, 1.0E-06)
  beta1 ~ dnorm(0, 1.0E-06)
}
"
writeLines(modelString, con = '../downloads/BUGSscripts/tut11.5bS1.4.bug')
library(R2jags)
system.time(
  dat.P.jags <- jags(model.file = '../downloads/BUGSscripts/tut11.5bS1.4.bug',
                     data = dat.list, inits = NULL,
                     parameters.to.save = c('beta0', 'beta1'),
                     n.chains = 3, n.iter = 20000, n.thin = 10, n.burnin = 10000)
)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 105

Initializing model

   user  system elapsed 
  1.852   0.004   1.861 
```

```r
Xmat <- model.matrix(~x, dat)
nX <- ncol(Xmat)
dat.list1 <- with(dat, list(Y = y, X = Xmat, N = nrow(dat), nX = nX))
modelString = "
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- eta[i]
  }
  for (i in 1:nX) {
    beta[i] ~ dnorm(0, 1.0E-06)
  }
}
"
writeLines(modelString, con = '../downloads/BUGSscripts/tut11.5bS1.41.bug')
library(R2jags)
system.time(
  dat.P.jags1 <- jags(model.file = '../downloads/BUGSscripts/tut11.5bS1.41.bug',
                      data = dat.list1, inits = NULL,
                      parameters.to.save = c('beta'),
                      n.chains = 3, n.iter = 20000, n.thin = 10, n.burnin = 10000)
)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 127

Initializing model

   user  system elapsed 
  1.836   0.004   1.844 
```

```r
print(dat.P.jags1)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS1.41.bug", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10
 n.sims = 3000 iterations saved
         mu.vect sd.vect   2.5%    25%    50%    75%  97.5%  Rhat n.eff
beta[1]    0.546   0.256  0.019  0.384  0.552  0.719  1.018 1.002  1700
beta[2]    0.112   0.019  0.077  0.099  0.112  0.124  0.149 1.002  1800
deviance  88.428   4.999 86.376 86.891 87.680 89.036 93.531 1.001  3000

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 12.5 and DIC = 100.9
DIC is an estimate of expected predictive error (lower deviance is better).
```

Or, arguably better still, use a multivariate normal prior. If we have $k$ regression parameters ($\beta_k$), then the multivariate normal prior is defined as: $$ \boldsymbol{\beta}\sim{}\mathcal{N_k}(\boldsymbol{\mu}, \mathbf{\Sigma}) $$ where $$\boldsymbol{\mu}=[E[\beta_1],E[\beta_2],...,E[\beta_k]] = \left(\begin{array}{c}0\\\vdots\\0\end{array}\right)$$ $$ \mathbf{\Sigma}=[Cov[\beta_i, \beta_j]] = \left(\begin{array}{ccc}1000^2&0&0\\0&\ddots&0\\0&0&1000^2\end{array} \right) $$ hence, along with the response and predictor matrix, we need to supply $\boldsymbol{\mu}$ (a vector of zeros) and $\boldsymbol{\Sigma}$ (a covariance matrix with $1000^2$ in the diagonals). Note that JAGS's `dmnorm` is parameterized by the precision matrix (the inverse of $\boldsymbol{\Sigma}$), which is why the diagonal values passed as `Sigma` in the JAGS code are $1/1000^2 = 1.0E-06$.
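The covariance-to-precision conversion can be checked in base R. A minimal sketch for the two-parameter (intercept and slope) case:

```r
k     <- 2                          # number of regression parameters (intercept, slope)
mu    <- rep(0, k)                  # prior means
Sigma <- diag(1000^2, k)            # prior covariance: variance 1000^2 on the diagonal
Omega <- solve(Sigma)               # precision matrix = inverse of the covariance
Omega                               # 1e-06 on the diagonal, the values handed to dmnorm
all.equal(Omega, diag(1.0E-06, k))

# The same convention applies to the univariate JAGS dnorm(mean, precision)
tau <- 1.0E-06
c(sd = 1 / sqrt(tau), variance = 1 / tau)   # sd of 1000, variance of 1e6
```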

```r
Xmat <- model.matrix(~x, dat)
nX <- ncol(Xmat)
# Sigma is supplied as a precision matrix (dmnorm's parameterization): 1/1000^2 on the diagonal
dat.list2 <- with(dat, list(Y = y, X = Xmat, N = nrow(dat),
                            mu = rep(0, nX), Sigma = diag(1.0E-06, nX)))
modelString = "
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- eta[i]
  }
  beta ~ dmnorm(mu[], Sigma[,])
}
"
writeLines(modelString, con = '../downloads/BUGSscripts/tut11.5bS1.42.bug')
library(R2jags)
system.time(
  dat.P.jags2 <- jags(model.file = '../downloads/BUGSscripts/tut11.5bS1.42.bug',
                      data = dat.list2, inits = NULL,
                      parameters.to.save = c('beta'),
                      n.chains = 3, n.iter = 20000, n.thin = 10, n.burnin = 10000)
)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 130

Initializing model

   user  system elapsed 
  0.460   0.000   0.463 
```

```r
print(dat.P.jags2)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS1.42.bug", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10
 n.sims = 3000 iterations saved
         mu.vect sd.vect   2.5%    25%    50%    75%  97.5%  Rhat n.eff
beta[1]    0.561   0.241  0.084  0.405  0.571  0.724  1.034 1.002  1500
beta[2]    0.111   0.018  0.074  0.099  0.111  0.123  0.145 1.002  1300
deviance  88.184   1.850 86.372 86.854 87.596 88.898 92.951 1.007   900

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 1.7 and DIC = 89.9
DIC is an estimate of expected predictive error (lower deviance is better).
```
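The pD and DIC values reported by R2jags follow directly from the posterior deviance: $pD = var(dev)/2$ and $DIC = \overline{dev} + pD$. The sketch below reproduces both reported results from the mean and sd of the deviance printed in the two `print()` outputs; `dic.from.summary` and `dic.from.draws` are hypothetical helper names:

```r
# pD and DIC from the posterior mean and sd of the deviance (rule: pD = var(deviance)/2)
dic.from.summary <- function(dev.mean, dev.sd) {
  pD <- dev.sd^2 / 2
  c(pD = pD, DIC = dev.mean + pD)
}
fit1 <- dic.from.summary(88.428, 4.999)   # independent normal priors (dat.P.jags1)
fit2 <- dic.from.summary(88.184, 1.850)   # multivariate normal prior (dat.P.jags2)
round(rbind(fit1, fit2), 1)

# Equivalent calculation from a vector of posterior deviance draws
dic.from.draws <- function(dev) {
  pD <- var(dev) / 2
  c(pD = pD, DIC = mean(dev) + pD)
}
```

Rounded to one decimal place, this gives pD = 12.5, DIC = 100.9 and pD = 1.7, DIC = 89.9, matching the two outputs.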

BRMS

```r
library(brms)
dat.brm <- brm(y ~ x, data = dat, family = 'poisson',
               prior = c(set_prior("normal(0,100)", class = "b")),
               chains = 3, iter = 2000, warmup = 1000, thin = 2)
```

```
SAMPLING FOR MODEL 'poisson(log) brms-model' NOW (CHAIN 1).

Chain 1, Iteration: 1 / 2000 [ 0%] (Warmup)
Chain 1, Iteration: 200 / 2000 [ 10%] (Warmup)
Chain 1, Iteration: 400 / 2000 [ 20%] (Warmup)
Chain 1, Iteration: 600 / 2000 [ 30%] (Warmup)
Chain 1, Iteration: 800 / 2000 [ 40%] (Warmup)
Chain 1, Iteration: 1000 / 2000 [ 50%] (Warmup)
Chain 1, Iteration: 1001 / 2000 [ 50%] (Sampling)
Chain 1, Iteration: 1200 / 2000 [ 60%] (Sampling)
Chain 1, Iteration: 1400 / 2000 [ 70%] (Sampling)
Chain 1, Iteration: 1600 / 2000 [ 80%] (Sampling)
Chain 1, Iteration: 1800 / 2000 [ 90%] (Sampling)
Chain 1, Iteration: 2000 / 2000 [100%] (Sampling)
#  Elapsed Time: 0.03963 seconds (Warm-up)
#                0.03519 seconds (Sampling)
#                0.07482 seconds (Total)
#

SAMPLING FOR MODEL 'poisson(log) brms-model' NOW (CHAIN 2).

Chain 2, Iteration: 1 / 2000 [ 0%] (Warmup)
Chain 2, Iteration: 200 / 2000 [ 10%] (Warmup)
Chain 2, Iteration: 400 / 2000 [ 20%] (Warmup)
Chain 2, Iteration: 600 / 2000 [ 30%] (Warmup)
Chain 2, Iteration: 800 / 2000 [ 40%] (Warmup)
Chain 2, Iteration: 1000 / 2000 [ 50%] (Warmup)
Chain 2, Iteration: 1001 / 2000 [ 50%] (Sampling)
Chain 2, Iteration: 1200 / 2000 [ 60%] (Sampling)
Chain 2, Iteration: 1400 / 2000 [ 70%] (Sampling)
Chain 2, Iteration: 1600 / 2000 [ 80%] (Sampling)
Chain 2, Iteration: 1800 / 2000 [ 90%] (Sampling)
Chain 2, Iteration: 2000 / 2000 [100%] (Sampling)
#  Elapsed Time: 0.038022 seconds (Warm-up)
#                0.035344 seconds (Sampling)
#                0.073366 seconds (Total)
#

SAMPLING FOR MODEL 'poisson(log) brms-model' NOW (CHAIN 3).

Chain 3, Iteration: 1 / 2000 [ 0%] (Warmup)
Chain 3, Iteration: 200 / 2000 [ 10%] (Warmup)
Chain 3, Iteration: 400 / 2000 [ 20%] (Warmup)
Chain 3, Iteration: 600 / 2000 [ 30%] (Warmup)
Chain 3, Iteration: 800 / 2000 [ 40%] (Warmup)
Chain 3, Iteration: 1000 / 2000 [ 50%] (Warmup)
Chain 3, Iteration: 1001 / 2000 [ 50%] (Sampling)
Chain 3, Iteration: 1200 / 2000 [ 60%] (Sampling)
Chain 3, Iteration: 1400 / 2000 [ 70%] (Sampling)
Chain 3, Iteration: 1600 / 2000 [ 80%] (Sampling)
Chain 3, Iteration: 1800 / 2000 [ 90%] (Sampling)
Chain 3, Iteration: 2000 / 2000 [100%] (Sampling)
#  Elapsed Time: 0.038597 seconds (Warm-up)
#                0.037356 seconds (Sampling)
#                0.075953 seconds (Total)
#
```

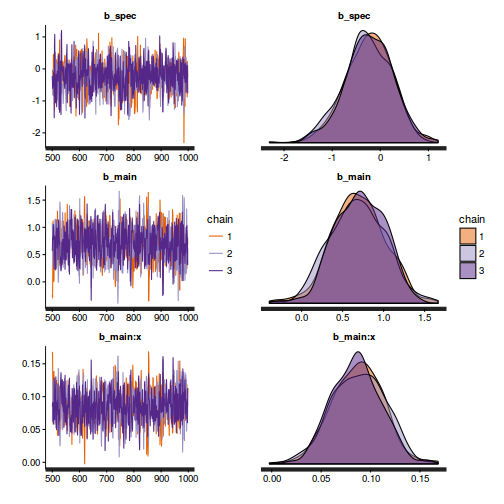

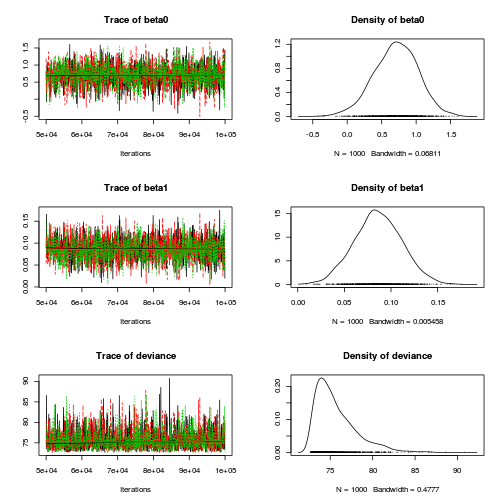

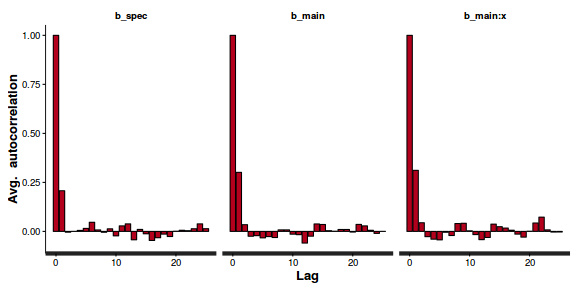

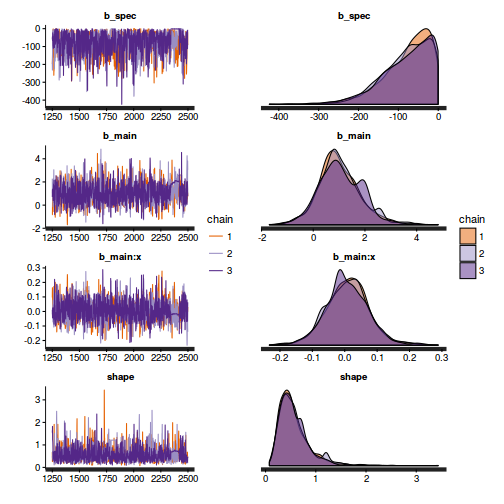

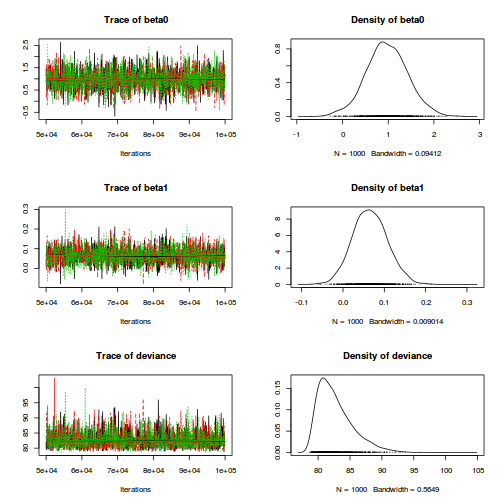

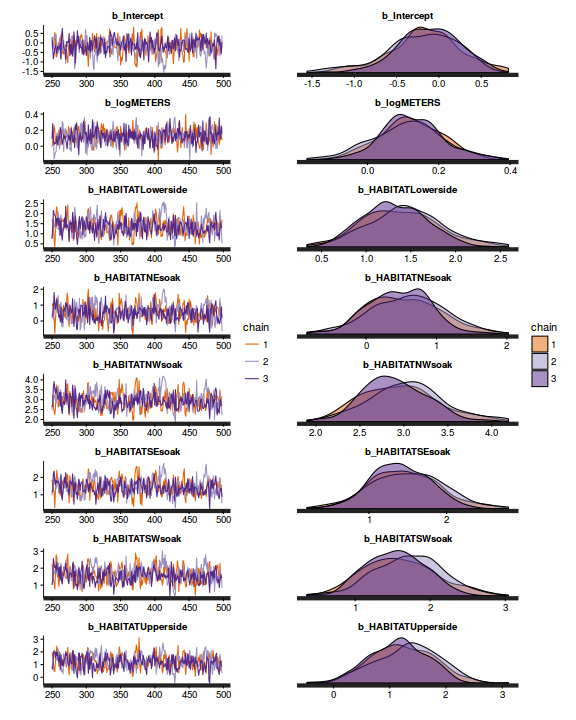

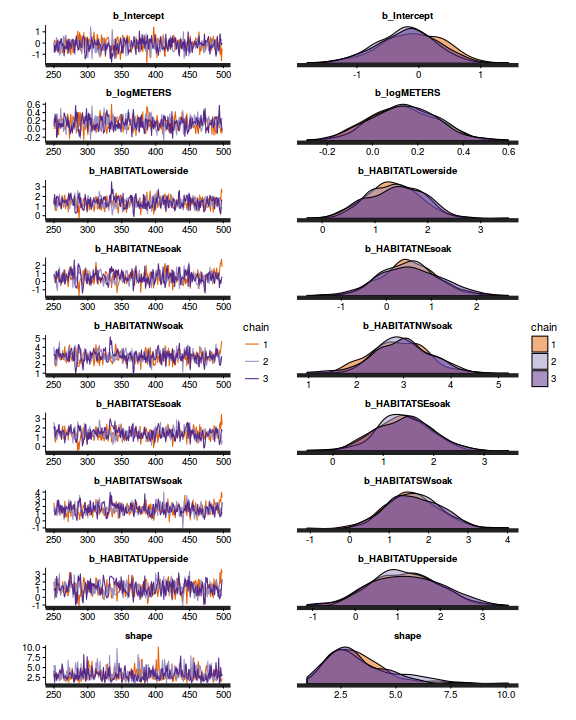

Chain mixing and Model validation

Prior to exploring the model parameters, it is prudent to confirm that the model did indeed satisfy the assumptions and was an appropriate fit to the data, as well as that the MCMC sampling chains were adequately mixed and the retained samples independent.

The procedure is demonstrated for the JAGS (`dat.P.jags`) and brms (`dat.brm`) fits of the Poisson model.

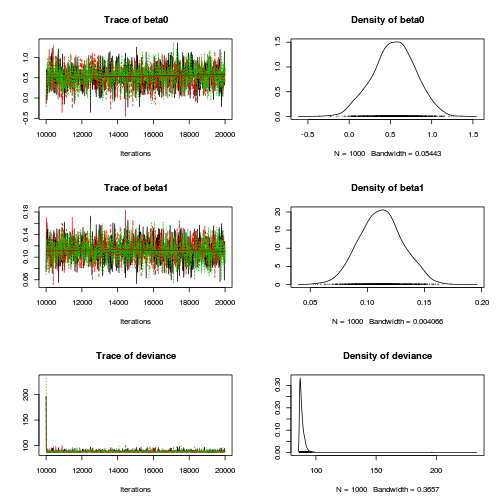

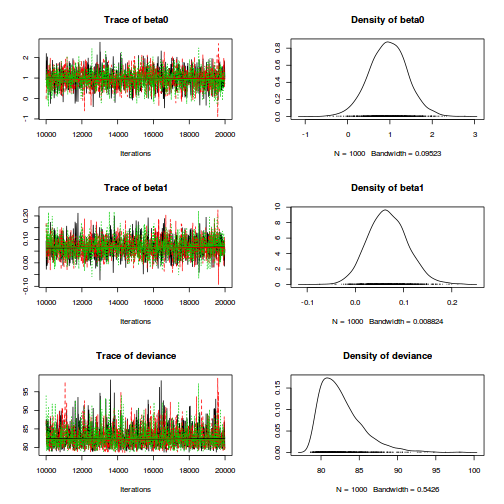

- We will start by exploring the mixing of the MCMC chains via traceplots.

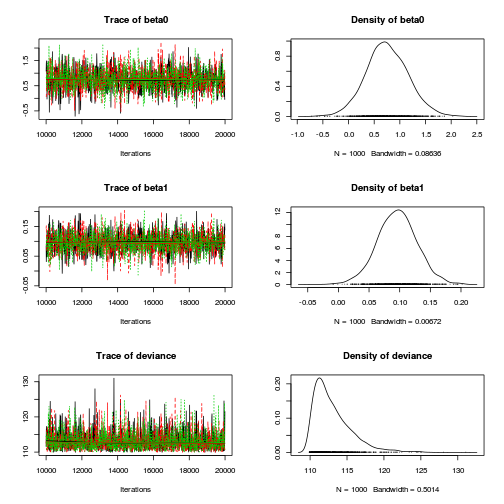

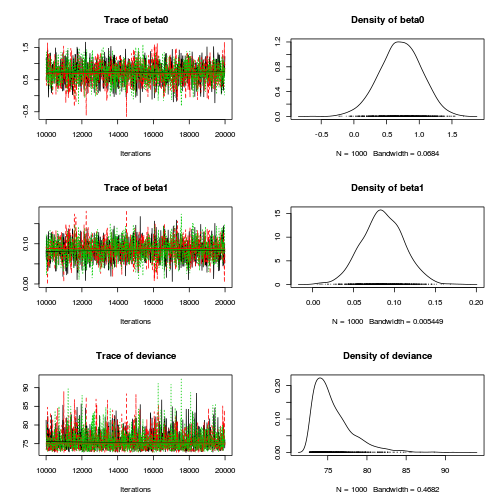

```r
plot(as.mcmc(dat.P.jags))
```

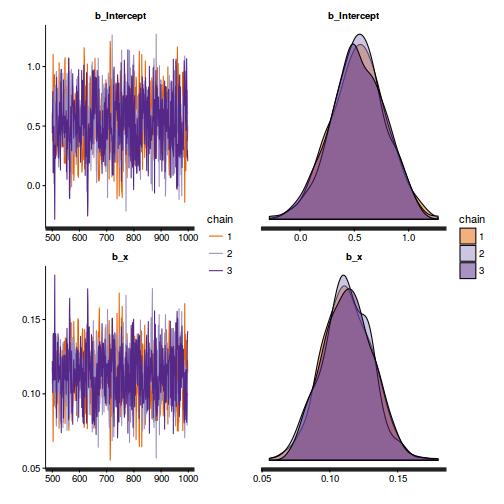

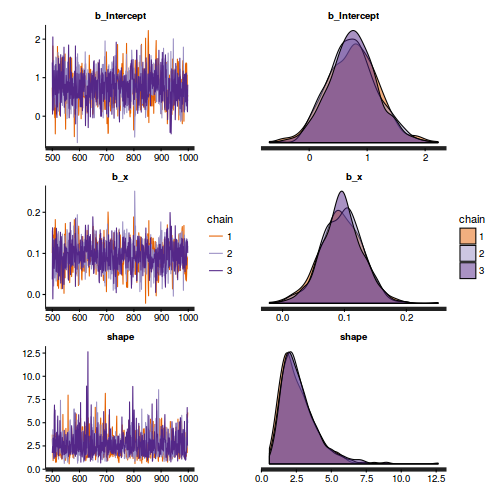

```r
library(rstan)      # stan_trace() and stan_dens() come from rstan
library(gridExtra)
grid.arrange(stan_trace(dat.brm$fit, ncol = 1),
             stan_dens(dat.brm$fit, separate_chains = TRUE, ncol = 1),
             ncol = 2)
```

The chains appear well mixed and stable.

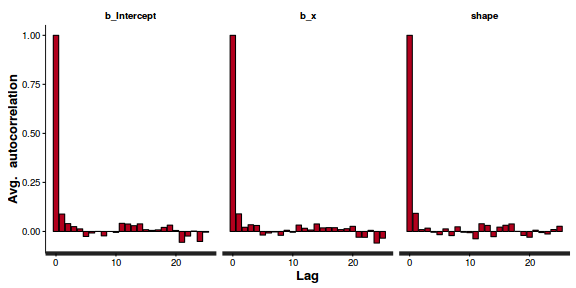

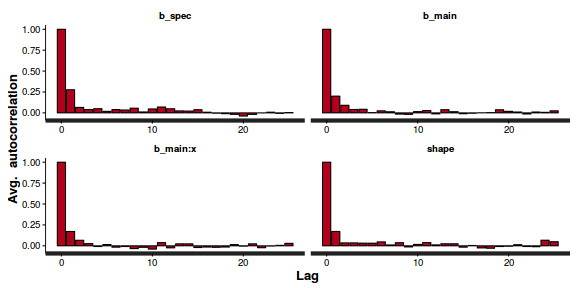

- Next we will explore correlation amongst MCMC samples.

```r
autocorr.diag(as.mcmc(dat.P.jags))
```

```
              beta0    beta1  deviance
Lag 0     1.0000000  1.00000  1.000000
Lag 10    0.1553164  0.11400  0.009912
Lag 50    0.0228548  0.01439  0.004464
Lag 100  -0.0008548 -0.01320  0.001207
Lag 500  -0.0339699 -0.02674 -0.007545
```

The level of auto-correlation at the nominated lag of 10 is higher than we would generally like. It is worth increasing the thinning rate from 10 to 50. Obviously, to support this higher thinning rate, we would also increase the number of iterations.
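Why thinning helps can be illustrated without JAGS. The sketch below uses a hypothetical autocorrelated "chain" (an AR(1) series with lag-1 correlation 0.9, generated with base R's `arima.sim()`, standing in for MCMC output) and measures the lag-1 autocorrelation of the retained samples after keeping every 10th and every 50th value:

```r
set.seed(5)
# Hypothetical autocorrelated chain: AR(1) series with lag-1 correlation 0.9
chain <- as.numeric(arima.sim(model = list(ar = 0.9), n = 100000))

# Lag-1 autocorrelation of the full chain and of thinned versions
ac.full   <- acf(chain, lag.max = 1, plot = FALSE)$acf[2]
ac.thin10 <- acf(chain[seq(1, length(chain), by = 10)], lag.max = 1, plot = FALSE)$acf[2]
ac.thin50 <- acf(chain[seq(1, length(chain), by = 50)], lag.max = 1, plot = FALSE)$acf[2]
round(c(full = ac.full, thin10 = ac.thin10, thin50 = ac.thin50), 3)
```

For an AR(1) series the correlation between samples $m$ steps apart is $0.9^m$, so thinning by 10 and 50 should leave roughly 0.35 and 0.005 respectively. The price is fewer retained samples, which is why the number of iterations is increased alongside the thinning rate.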

```r
library(R2jags)
dat.P.jags <- jags(data = dat.list, model.file = '../downloads/BUGSscripts/tut11.5bS1.4.bug',
                   parameters.to.save = c('beta0', 'beta1'),
                   n.chains = 3, n.iter = 100000, n.burnin = 20000, n.thin = 50)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 105

Initializing model
```

```r
print(dat.P.jags)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS1.4.bug", fit using jags,
 3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 50
 n.sims = 4800 iterations saved
         mu.vect sd.vect   2.5%    25%    50%    75%  97.5%  Rhat n.eff
beta0      0.545   0.257  0.040  0.375  0.545  0.718  1.039 1.001  4800
beta1      0.112   0.019  0.075  0.100  0.112  0.124  0.148 1.001  4800
deviance  88.379   3.712 86.368 86.871 87.664 89.058 93.778 1.002  4800

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 6.9 and DIC = 95.3
DIC is an estimate of expected predictive error (lower deviance is better).
```

```r
plot(as.mcmc(dat.P.jags))
autocorr.diag(as.mcmc(dat.P.jags))
```

```
               beta0    beta1 deviance
Lag 0      1.0000000 1.000000 1.000000
Lag 50     0.0100259 0.009642 0.003845
Lag 250    0.0133849 0.010277 0.001847
Lag 500    0.0056560 0.003987 0.001649
Lag 2500   0.0006608 0.003896 0.007031
```

Conclusions: the samples are now less auto-correlated and the chains are also arguably mixed a little better.

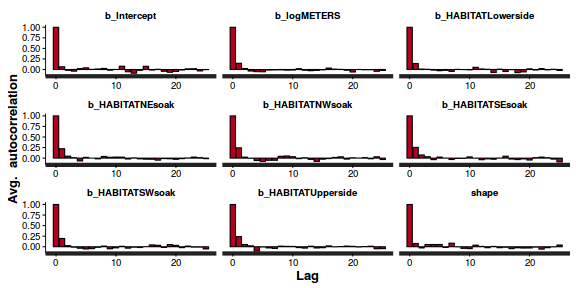

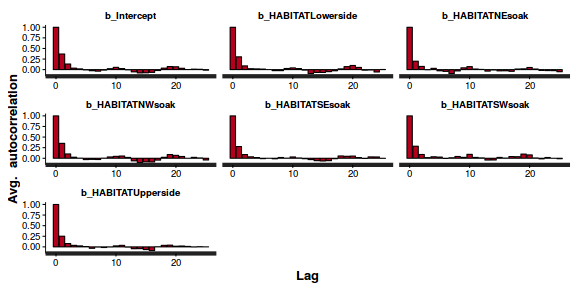

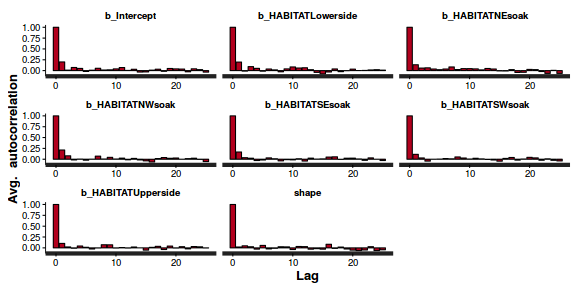

```r
stan_ac(dat.brm$fit)
```

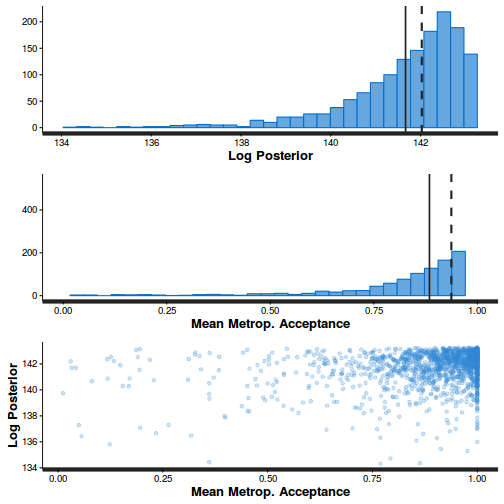

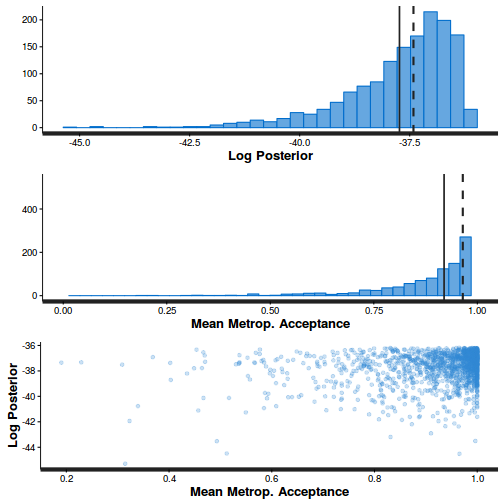

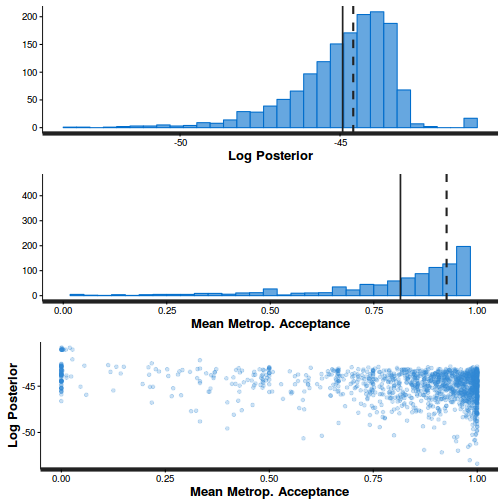

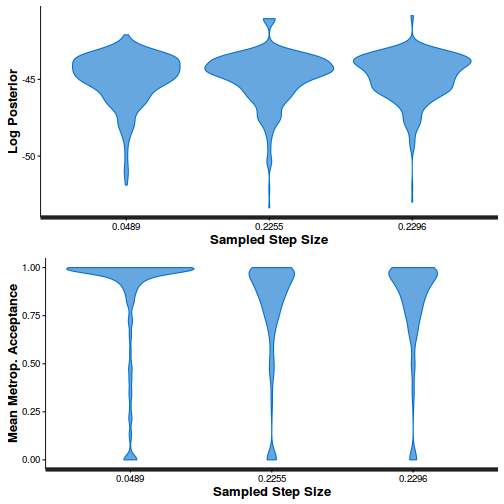

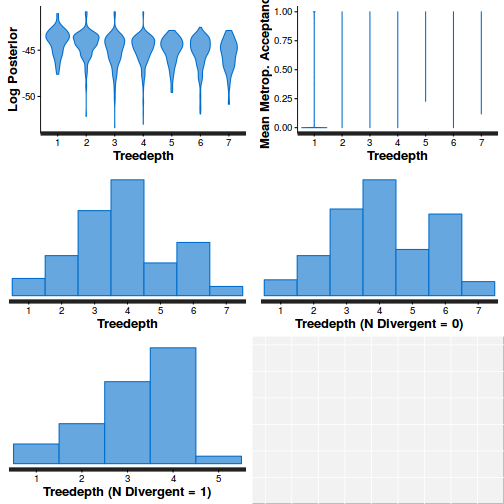

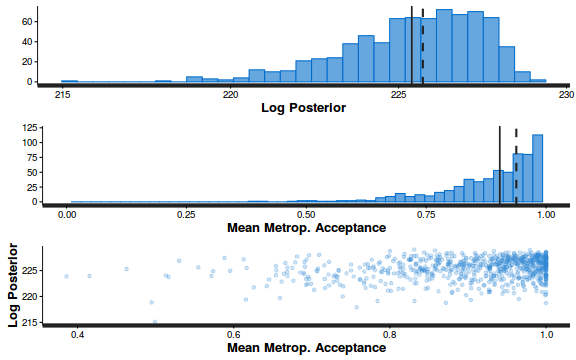

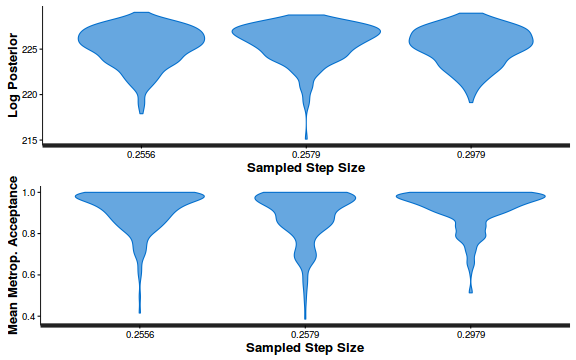

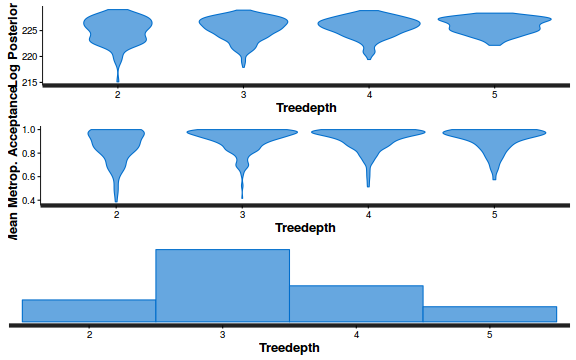

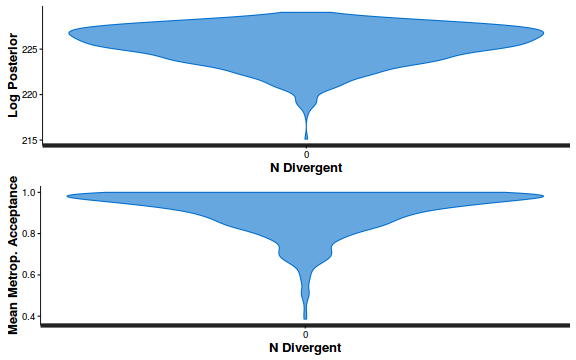

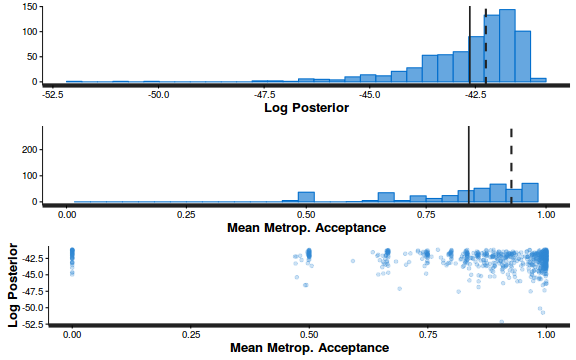

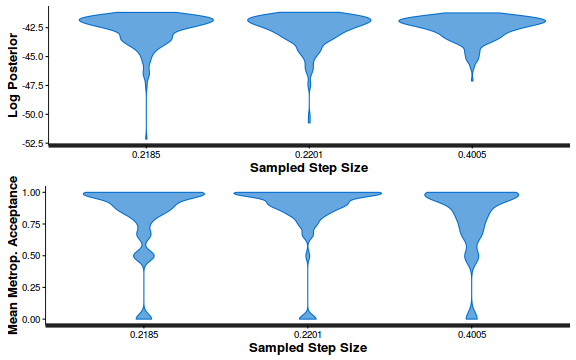

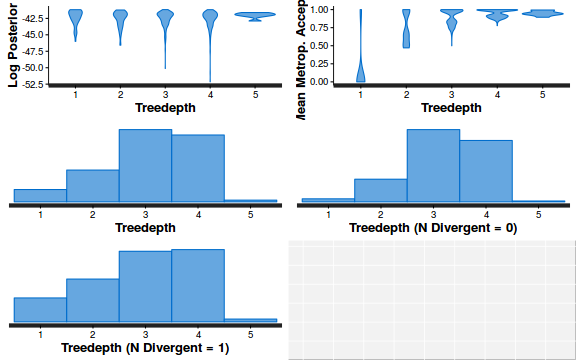

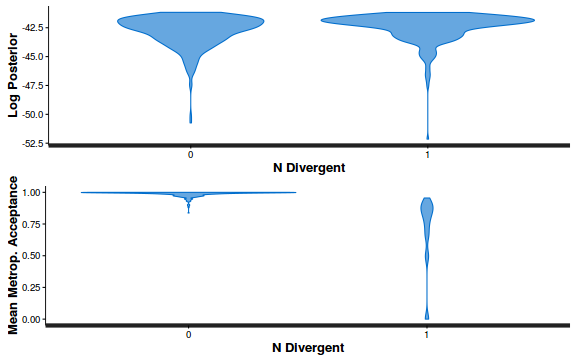

- Explore the step size characteristics (STAN only)

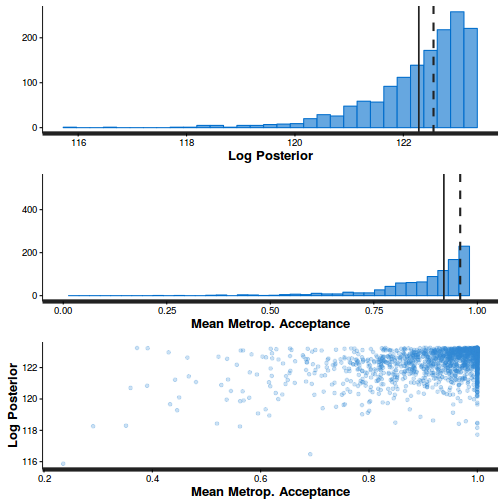

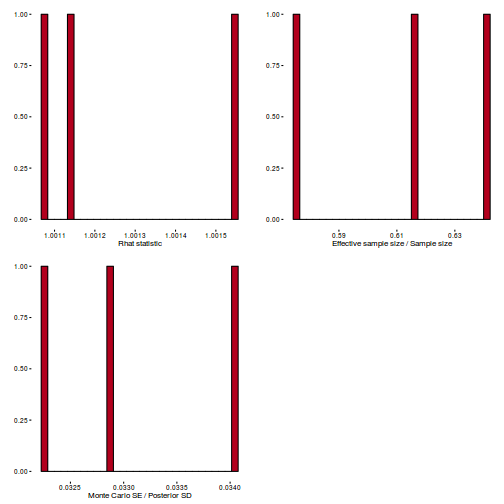

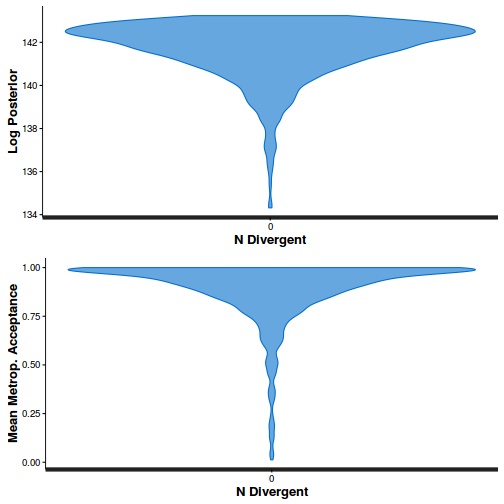

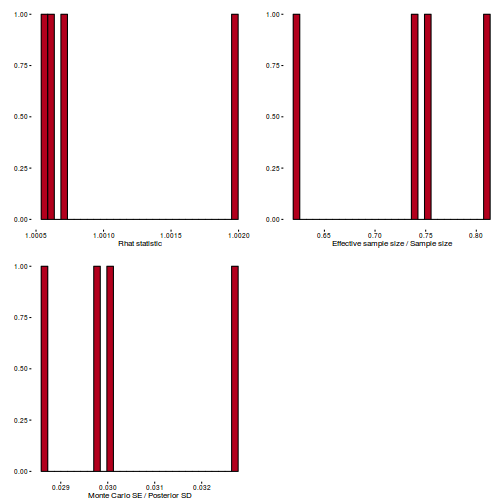

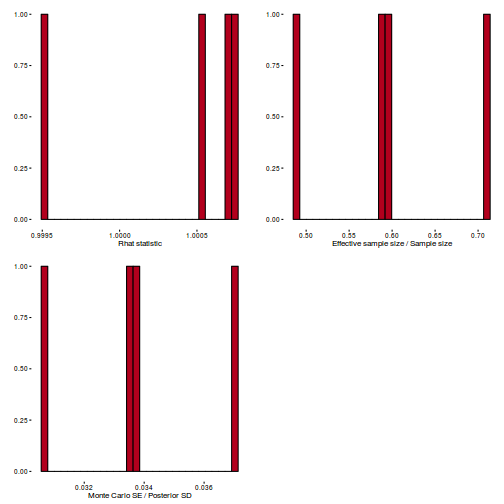

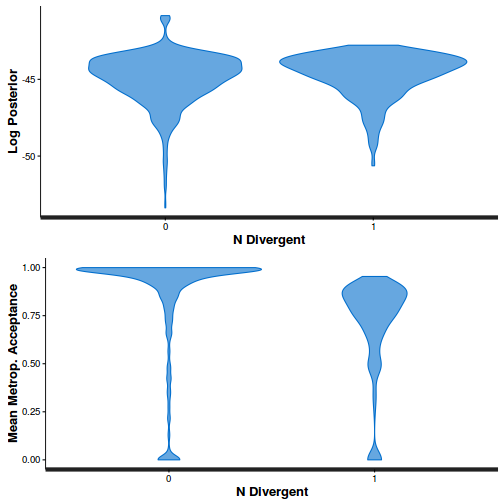

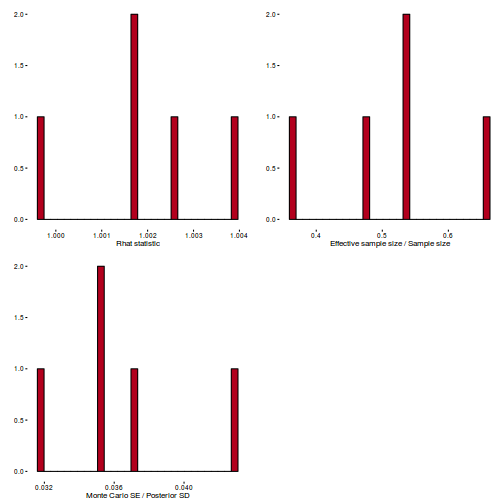

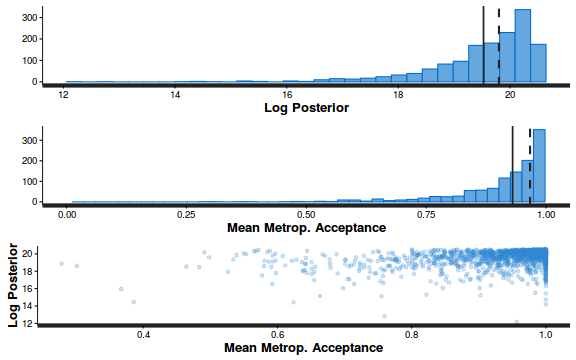

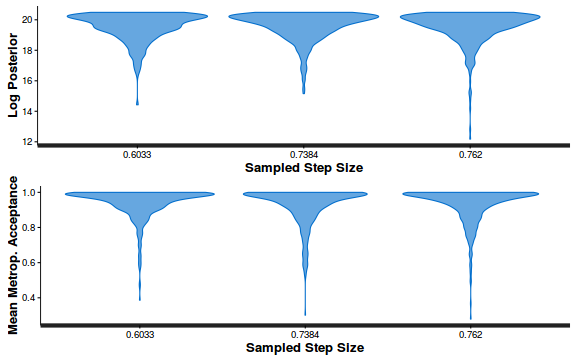

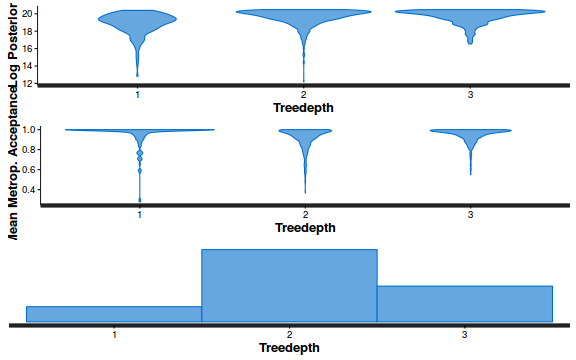

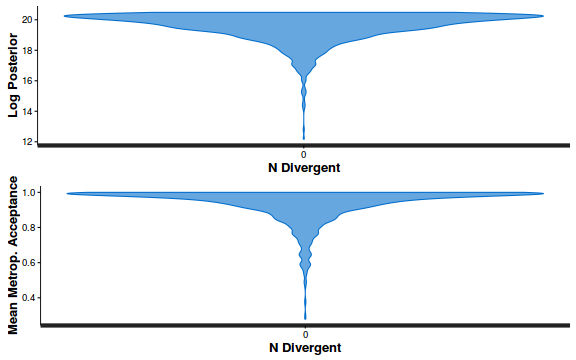

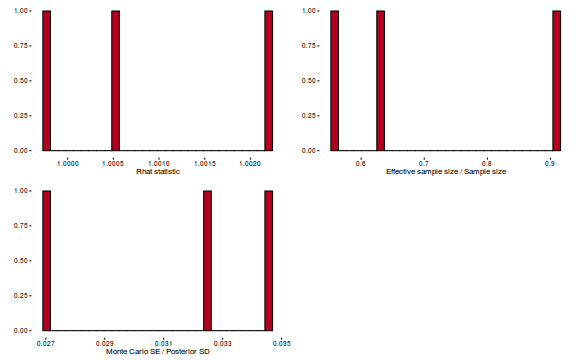

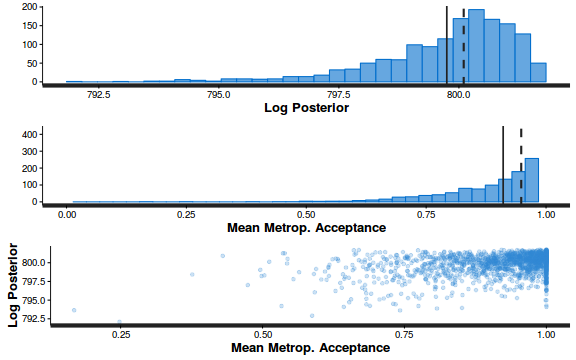

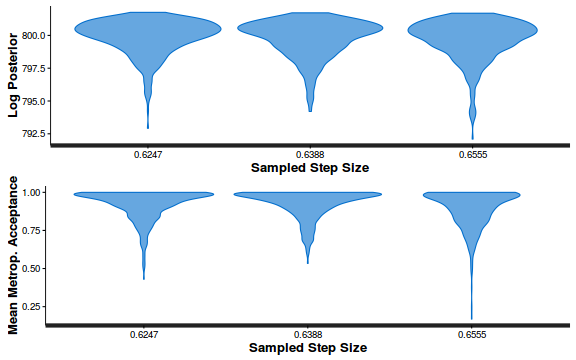

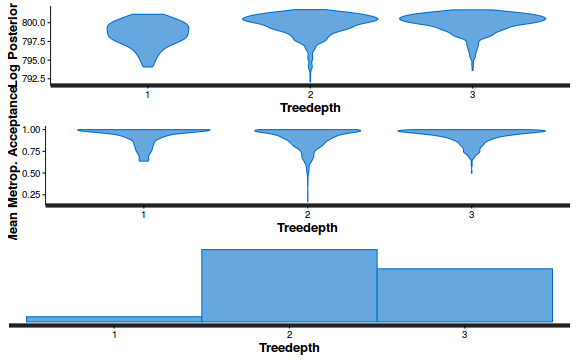

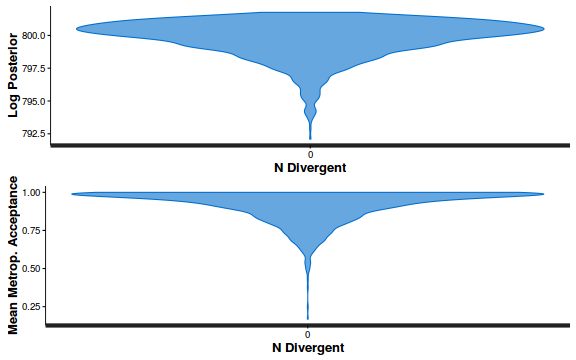

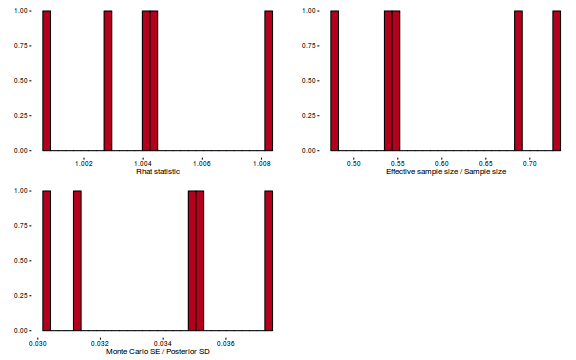

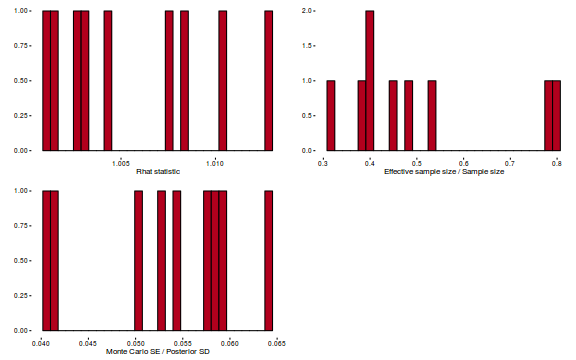

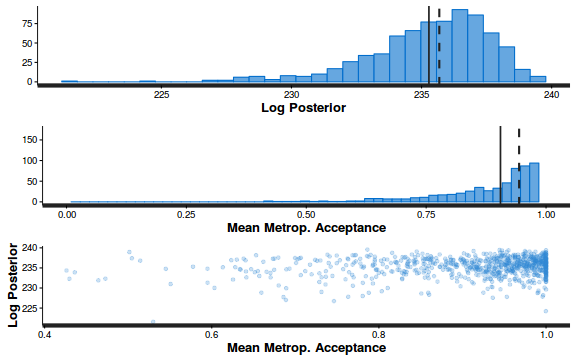

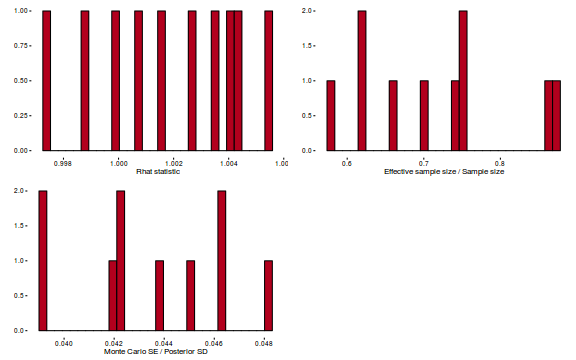

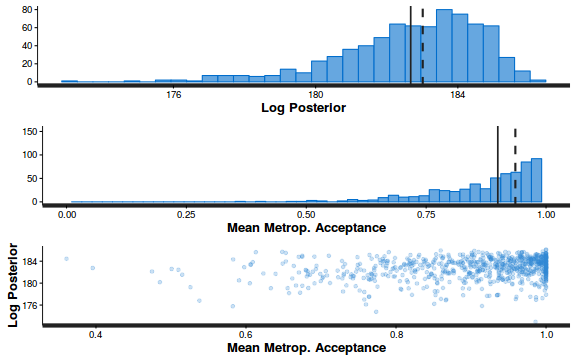

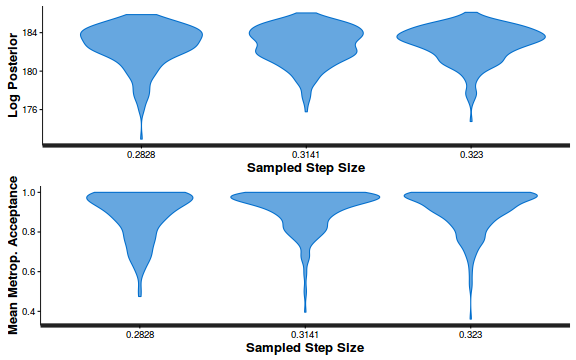

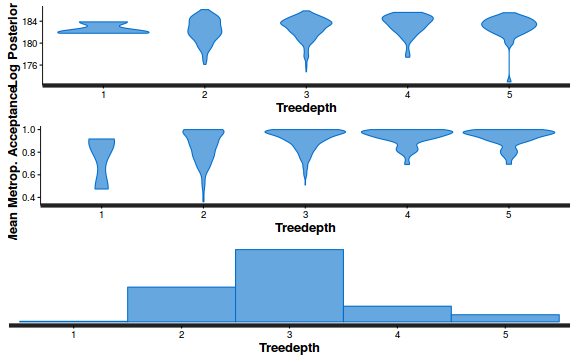

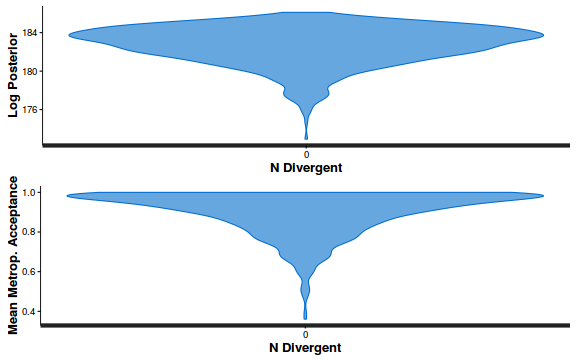

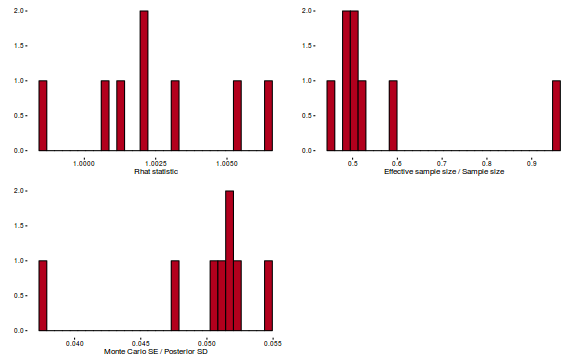

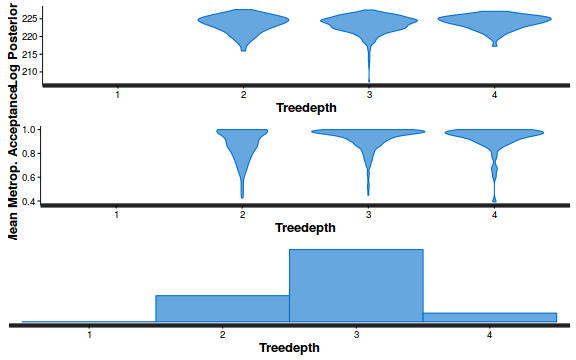

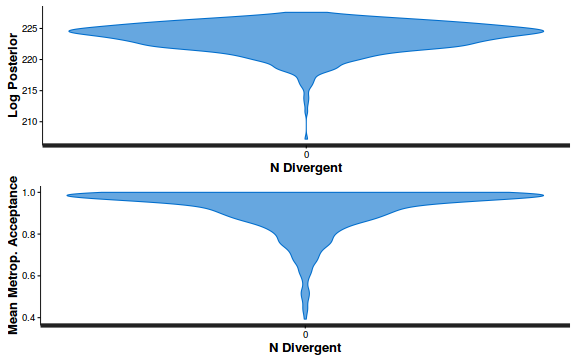

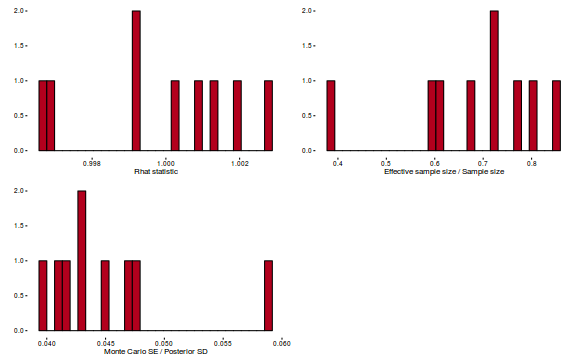

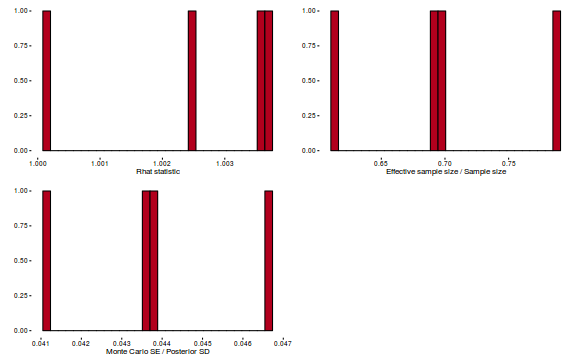

Conclusions: the acceptance ratio is very good, the log posterior is relatively robust to step size and tree depth, Rhat values are all below 1.05, the effective sample size ratio is always above 0.5 and the ratio of MCMC error to posterior sd is very small.

```r
summary(do.call(rbind, args = get_sampler_params(dat.brm$fit, inc_warmup = FALSE)), digits = 2)
```

```
 accept_stat__   stepsize__     treedepth__   n_leapfrog__  n_divergent__
 Min.   :0.24   Min.   :0.64   Min.   :1.0   Min.   :1.0   Min.   :0    
 1st Qu.:0.88   1st Qu.:0.64   1st Qu.:2.0   1st Qu.:3.0   1st Qu.:0    
 Median :0.96   Median :0.72   Median :2.0   Median :3.0   Median :0    
 Mean   :0.92   Mean   :0.70   Mean   :2.1   Mean   :3.7   Mean   :0    
 3rd Qu.:1.00   3rd Qu.:0.73   3rd Qu.:3.0   3rd Qu.:5.0   3rd Qu.:0    
 Max.   :1.00   Max.   :0.73   Max.   :3.0   Max.   :7.0   Max.   :0    
```

```r
stan_diag(dat.brm$fit)
stan_diag(dat.brm$fit, information = "stepsize")
stan_diag(dat.brm$fit, information = "treedepth")
stan_diag(dat.brm$fit, information = "divergence")

library(ggplot2)    # theme_classic() comes from ggplot2
library(gridExtra)
grid.arrange(stan_rhat(dat.brm$fit) + theme_classic(8),
             stan_ess(dat.brm$fit) + theme_classic(8),
             stan_mcse(dat.brm$fit) + theme_classic(8), ncol = 2)
```

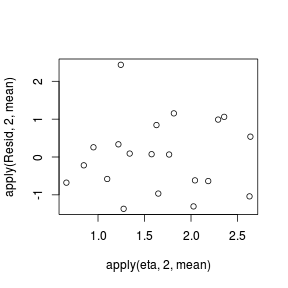

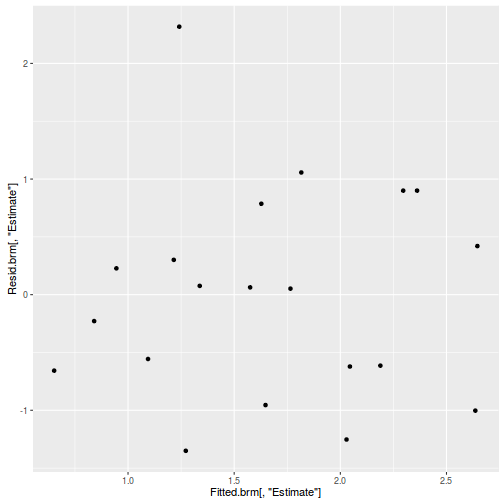

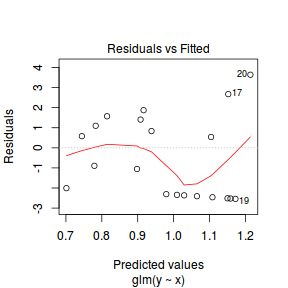

- One very important model validation procedure is to examine a plot of residuals against predicted or fitted values (the residual plot).

Ideally, residual plots should show a random scatter of points without outliers.

That is, there should be no patterns in the residuals. Patterns suggest inappropriate linear predictor (or scale) and/or inappropriate

residual distribution/link function.

The residuals used in such plots should be standardized (particularly if the model incorporated any variance-covariance structures - such as an autoregressive correlation structure)

Pearsons's residuals standardize residuals by division with the square-root of the variance.

We can generate Pearson's residuals within the JAGS model.

Alternatively, we could use the parameters to generate the residuals outside of JAGS.

Pearson's residuals are calculated according to:

$$\varepsilon_i = \frac{y_i - \mu_i}{\sqrt{var(y_i)}}$$

where $\mu_i$ is the expected value of $y_i$ ($=\lambda_i$ for Poisson) and $var(y_i)$ is its variance (also $=\lambda_i$ for Poisson).
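Here is a minimal base-R sketch that makes the formula concrete. It assumes a small made-up vector of counts `y` and a hypothetical matrix of posterior draws of $\lambda$ (rows are draws, columns are observations) in place of a fitted model. It checks that the explicit formula agrees with the `sweep()` idiom used in the tutorial code.

```r
set.seed(1)
# Hypothetical observed counts (5 observations)
y <- c(2, 5, 3, 8, 12)
# Hypothetical posterior draws of lambda: rows = 4 draws, columns = 5 observations
lambda <- matrix(rep(c(2.5, 4, 4.5, 7, 11), each = 4) * runif(20, 0.9, 1.1),
                 nrow = 4)
# Poisson: the expected value and the variance are both lambda
expY <- varY <- lambda
# Explicit form (y_i - mu_i) / sqrt(var(y_i)), with y repeated for every draw
Y <- matrix(y, nrow = nrow(lambda), ncol = length(y), byrow = TRUE)
pearson <- (Y - expY) / sqrt(varY)
# The same result via sweep(), as in the tutorial code
pearson.sweep <- -1 * sweep(expY, 2, y, "-") / sqrt(varY)
# Posterior mean Pearson residual for each observation
round(colMeans(pearson), 2)
```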

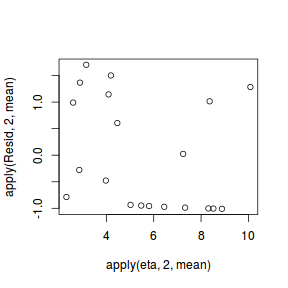

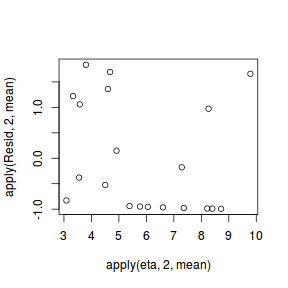

#extract the samples for the two model parameters
coefs <- dat.P.jags$BUGSoutput$sims.matrix[,1:2]
Xmat <- model.matrix(~x, data=dat)
#expected values on a log scale
eta <- coefs %*% t(Xmat)
#expected value on response scale
lambda <- exp(eta)
#Expected value and variance are both equal to lambda
expY <- varY <- lambda
#subtract the observed y from each column (observation), negate, then divide by the standard deviation
Resid <- -1*sweep(expY,2,dat$y,'-')/sqrt(varY)
#plot residuals vs expected values
plot(apply(Resid,2,mean)~apply(eta,2,mean))

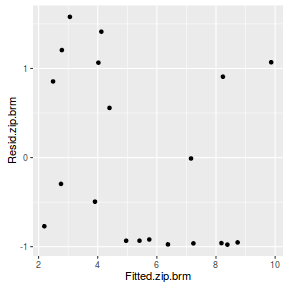

#Calculate residuals
Resid.brm <- residuals(dat.brm, type='pearson')
Fitted.brm <- fitted(dat.brm, scale='linear')
ggplot(data=NULL, aes(y=Resid.brm[,'Estimate'], x=Fitted.brm[,'Estimate'])) + geom_point()

There is one residual that is substantially larger in magnitude than all the others. However, there are no other patterns in the residuals.

- Now we will compare the sum of squared residuals to the sum of squared residuals that would be expected from a Poisson distribution matching that estimated by the model. Essentially, this estimates how well the Poisson distribution, the log-link function and the linear model approximate the observed data.

SSres <- apply(Resid^2,1,sum)
set.seed(2)
#generate a matrix of draws from a Poisson distribution
#the matrix has the same dimensions as lambda and uses lambda as the Poisson rates
YNew <- matrix(rpois(length(lambda),lambda=lambda),nrow=nrow(lambda))
Resid1 <- (lambda - YNew)/sqrt(lambda)
SSres.sim <- apply(Resid1^2,1,sum)
mean(SSres.sim>SSres)

[1] 0.484375
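The logic of this posterior predictive comparison can be sketched in base R on simulated data. The posterior draws of $\lambda$ below are hypothetical: they are the true rates jittered slightly on the log scale. The helper `bayes.p()` is illustrative, not part of any package. Data generated from the assumed Poisson model should give a p-value well away from 0 or 1. Overdispersed (negative binomial) data with the same means should push it towards 0.

```r
set.seed(42)
n.obs <- 50
n.draws <- 1000
x <- seq(1, 20, length.out = n.obs)
true.lambda <- exp(0.6 + 0.1 * x)
# Hypothetical posterior draws (rows) of lambda: true rates jittered on the log scale
lambda <- t(replicate(n.draws, true.lambda * exp(rnorm(1, 0, 0.05))))

# Bayesian p-value based on the sum of squared Pearson residuals
bayes.p <- function(y, lambda) {
  Y <- matrix(y, nrow(lambda), ncol(lambda), byrow = TRUE)
  SSres <- rowSums((Y - lambda)^2 / lambda)            # observed discrepancy per draw
  YNew <- matrix(rpois(length(lambda), lambda), nrow = nrow(lambda))
  SSres.sim <- rowSums((YNew - lambda)^2 / lambda)     # replicated discrepancy per draw
  mean(SSres.sim > SSres)
}

y.pois <- rpois(n.obs, true.lambda)                    # consistent with the model
y.nb <- rnbinom(n.obs, mu = true.lambda, size = 1)     # overdispersed
p.pois <- bayes.p(y.pois, lambda)
p.nb <- bayes.p(y.nb, lambda)
c(poisson = p.pois, negbin = p.nb)
```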

dat.list1 <- with(dat,list(Y=y, X=x,N=nrow(dat)))
modelString="
model {
  for (i in 1:N) {
    #likelihood function
    Y[i] ~ dpois(lambda[i])
    eta[i] <- beta0+beta1*X[i]   #linear predictor
    log(lambda[i]) <- eta[i]     #link function
    #E(Y) and var(Y)
    expY[i] <- lambda[i]
    varY[i] <- lambda[i]
    # Calculate RSS
    Resid[i] <- (Y[i] - expY[i])/sqrt(varY[i])
    RSS[i] <- pow(Resid[i],2)
    #Simulate data from a Poisson distribution
    Y1[i] ~ dpois(lambda[i])
    #Calculate RSS for simulated data
    Resid1[i] <- (Y1[i] - expY[i])/sqrt(varY[i])
    RSS1[i] <- pow(Resid1[i],2)
  }
  #Priors
  beta0 ~ dnorm(0,1.0E-06)
  beta1 ~ dnorm(0,1.0E-06)
  #Bayesian P-value
  Pvalue <- mean(sum(RSS1)>sum(RSS))
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS6.41.bug')
library(R2jags)
system.time(
dat.P.jags1 <- jags(model='../downloads/BUGSscripts/tut11.5bS6.41.bug', data=dat.list1, inits=NULL,
  param=c('beta0','beta1','Pvalue'),
  n.chain=3, n.iter=100000, n.thin=50, n.burnin=20000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 272 Initializing model

user system elapsed 16.189 0.004 16.209

print(dat.P.jags1)

Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS6.41.bug", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 50

n.sims = 4800 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

Pvalue 0.481 0.500 0.000 0.000 0.000 1.000 1.00 1.001 4800

beta0 0.544 0.261 0.017 0.374 0.550 0.720 1.04 1.002 1200

beta1 0.112 0.019 0.075 0.099 0.112 0.125 0.15 1.002 1600

deviance 88.466 3.891 86.372 86.931 87.758 89.180 93.91 1.001 4800

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 7.6 and DIC = 96.0

DIC is an estimate of expected predictive error (lower deviance is better).

Resid.brm <- residuals(dat.brm, type='pearson', summary=FALSE)
SSres.brm <- apply(Resid.brm^2,1,sum)
lambda.brm = fitted(dat.brm, scale='response', summary=FALSE)
YNew.brm <- matrix(rpois(length(lambda.brm), lambda=lambda.brm),nrow=nrow(lambda.brm))
Resid1.brm <- (lambda.brm - YNew.brm)/sqrt(lambda.brm)
SSres.sim.brm <- apply(Resid1.brm^2,1,sum)
mean(SSres.sim.brm>SSres.brm)

[1] 0.6026667

Conclusions: the Bayesian p-values (approximately 0.48 for the JAGS fits and 0.60 for the brms fit) are close to 0.5, suggesting that the model fits the data well.

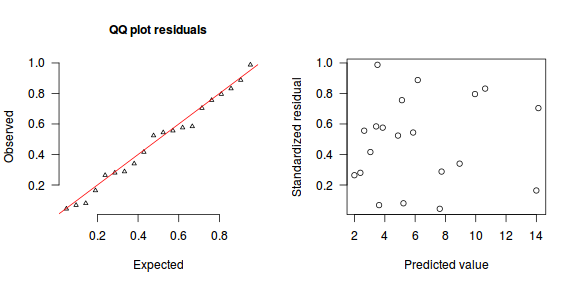

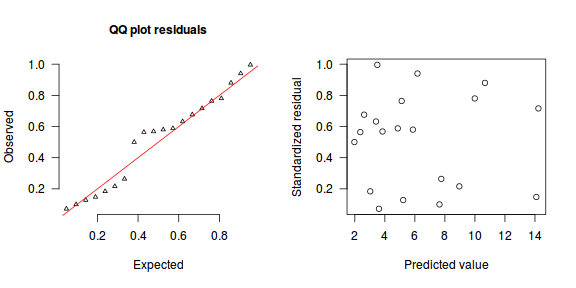

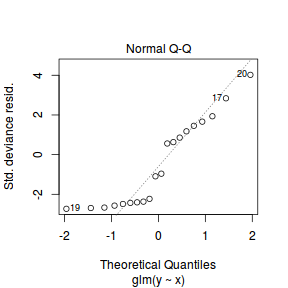

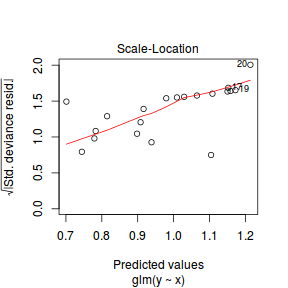

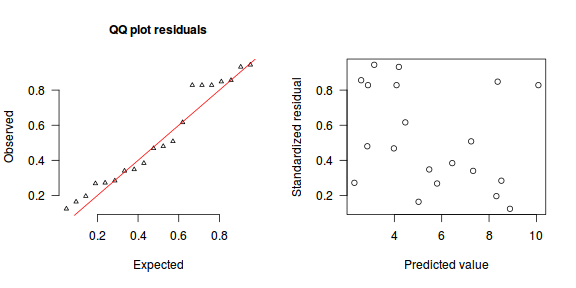

- Unfortunately, unlike with linear models (Gaussian family), the expected distribution of data (residuals) varies over the range of fitted values in numerous (often competing) ways. This makes diagnosing misspecified generalized linear models (and attributing causes thereof) from standard residual plots very difficult. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (misspecified model, overdispersion, zero-inflation).

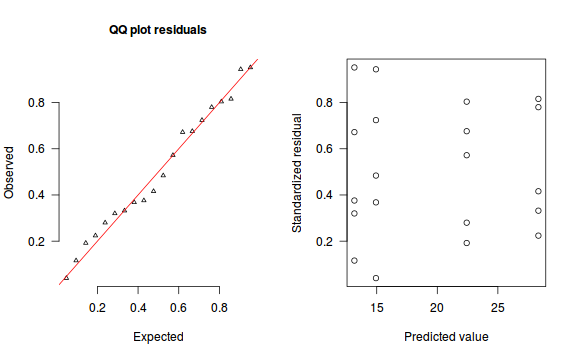

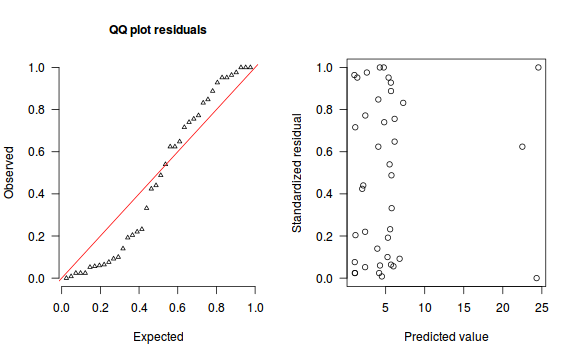

An alternative approach is to use data simulated from the model posteriors to calculate an empirical cumulative distribution function. Residuals are then generated as the values of this function that correspond to the observed data.
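Here is a minimal base-R sketch of the idea, similar in spirit to the simulation-based quantile residuals of the DHARMa package (which is not used here). For each observation we simulate many counts from the fitted rate and add uniform jitter to break ties. We then read off where the jittered observed value falls in the empirical CDF. The "fitted" rates are assumed to be the true simulating rates, and `sim.resid()` is a hypothetical helper. Under an adequate model the residuals should be roughly uniform on (0, 1). Overdispersed data should pile up near 0 and 1.

```r
set.seed(123)
n.obs <- 200
n.sim <- 250
x <- runif(n.obs, 1, 20)
lambda <- exp(0.6 + 0.1 * x)   # assumed fitted rates (here, the true rates)

# Hypothetical helper: simulation-based (ECDF) residual for each observation
sim.resid <- function(y, lambda, n.sim) {
  sapply(seq_along(y), function(i) {
    sims <- rpois(n.sim, lambda[i]) + runif(n.sim, -0.5, 0.5)  # jittered simulated counts
    Fhat <- ecdf(sims)                                         # empirical CDF
    Fhat(y[i] + runif(1, -0.5, 0.5))                           # position of jittered observation
  })
}

# Data from the assumed Poisson model vs. overdispersed (negative binomial) data
resid.pois <- sim.resid(rpois(n.obs, lambda), lambda, n.sim)
resid.nb <- sim.resid(rnbinom(n.obs, mu = lambda, size = 1), lambda, n.sim)

# Proportion of residuals in the extreme tails (about 0.1 expected for an adequate model)
extreme <- function(r) mean(r < 0.05 | r > 0.95)
round(c(poisson = extreme(resid.pois), negbin = extreme(resid.nb)), 2)
```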

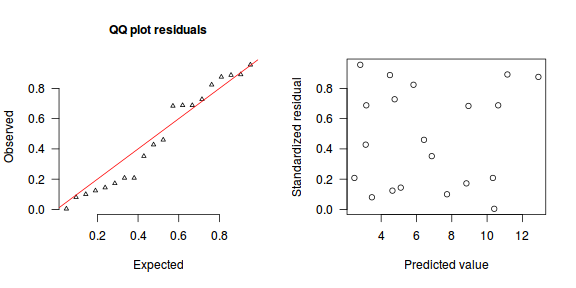

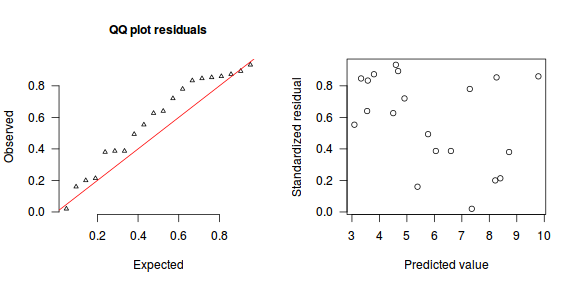

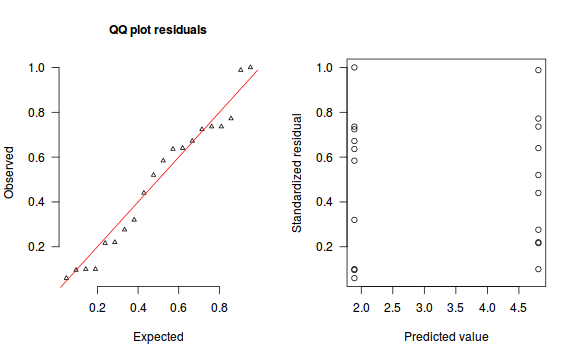

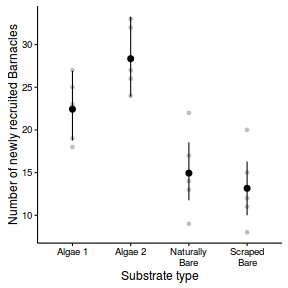

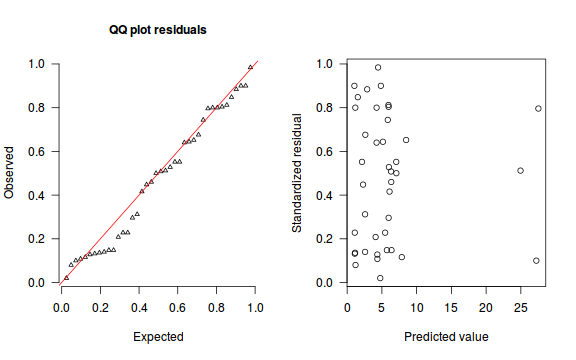

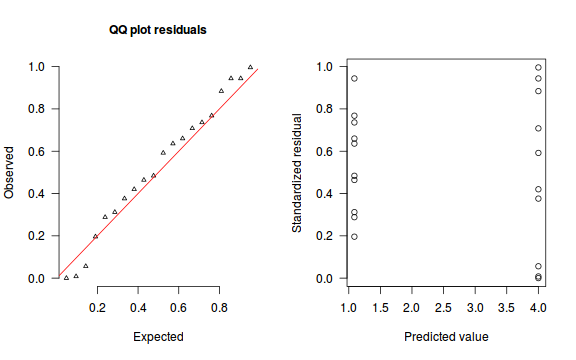

The trend (black symbols) in the QQ plot does not appear to be overly non-linear (it matches the ideal red line well), suggesting that the model is not overdispersed. The spread of standardized (simulated) residuals in the residual plot does not appear overly non-uniform; that is, there is no trend in the residuals. Furthermore, there is no concentration of points close to 1 or 0 (which would imply overdispersion).

#extract the samples for the two model parameters
coefs <- dat.P.jags$BUGSoutput$sims.matrix[,1:2]
Xmat <- model.matrix(~x, data=dat)
#expected values on a log scale
eta <- coefs %*% t(Xmat)
#expected value on response scale
lambda <- exp(eta)
simRes <- function(lambda, data, n=250, plot=TRUE, family='poisson') {
  require(gap)
  N = nrow(data)
  sim = switch(family,
    'poisson' = matrix(rpois(n*N,apply(lambda,2,mean)),ncol=N, byrow=TRUE)
  )
  a = apply(sim + runif(n,-0.5,0.5),2,ecdf)
  resid <- NULL
  for (i in 1:nrow(data)) resid <- c(resid,a[[i]](data$y[i] + runif(1 ,-0.5,0.5)))
  if (plot) {
    par(mfrow=c(1,2))
    gap::qqunif(resid,pch = 2, bty = "n", logscale = F, col = "black", cex = 0.6,
      main = "QQ plot residuals", cex.main = 1, las=1)
    plot(resid~apply(lambda,2,mean), xlab='Predicted value', ylab='Standardized residual', las=1)
  }
  resid
}
simRes(lambda,dat, family='poisson')

[1] 0.264 0.280 0.556 0.416 0.584 0.988 0.068 0.576 0.524 0.756 0.080 0.544 0.888 0.044 0.288 0.340 0.796 0.832 0.164 0.704

lambda.brm = fitted(dat.brm, scale='response', summary=FALSE)
simRes <- function(lambda, data, n=250, plot=TRUE, family='poisson') {
  require(gap)
  N = nrow(data)
  sim = switch(family,
    'poisson' = matrix(rpois(n*N,apply(lambda,2,mean)),ncol=N, byrow=TRUE)
  )
  a = apply(sim + runif(n,-0.5,0.5),2,ecdf)
  resid <- NULL
  for (i in 1:nrow(data)) resid <- c(resid,a[[i]](data$y[i] + runif(1 ,-0.5,0.5)))
  if (plot) {
    par(mfrow=c(1,2))
    gap::qqunif(resid,pch = 2, bty = "n", logscale = F, col = "black", cex = 0.6,
      main = "QQ plot residuals", cex.main = 1, las=1)
    plot(resid~apply(lambda,2,mean), xlab='Predicted value', ylab='Standardized residual', las=1)
  }
  resid
}
simRes(lambda.brm, dat, family='poisson')

[1] 0.500 0.564 0.676 0.184 0.632 0.996 0.072 0.568 0.588 0.764 0.128 0.580 0.940 0.100 0.264 0.216 0.780 0.880 0.148 0.716

- Recall that the Poisson regression model assumes that variance = mean ($var=\mu\phi$ where $\phi=1$) and thus dispersion ($\phi=\frac{var}{\mu}=1$).

However, we can also approximate the dispersion factor by using the sum of squared Pearson residuals as a measure of variance and the model residual degrees of freedom as its expected value. If the model is correct, the sum of squared Pearson residuals approximately follows a $\chi^2$ distribution, whose expected value equals its degrees of freedom.

$$\phi=\frac{RSS}{df}$$ where $df=n-k$ and $k$ is the number of estimated model coefficients.
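For a quick frequentist check of the same statistic, here is a sketch on simulated data using base R's `glm()` rather than the Bayesian fits. With Poisson data $\phi$ should be near 1. Negative binomial (clumped) data with the same means should give $\phi$ well above 1. The helper `dispersion()` is hypothetical.

```r
set.seed(7)
n <- 500
x <- runif(n, 1, 20)
mu <- exp(0.6 + 0.1 * x)

# Hypothetical helper: phi = sum of squared Pearson residuals / residual df (n - k)
dispersion <- function(y, x) {
  fit <- glm(y ~ x, family = poisson)
  RSS <- sum(residuals(fit, type = "pearson")^2)
  RSS / df.residual(fit)   # here k = 2 (intercept and slope)
}

phi.pois <- dispersion(rpois(n, mu), x)
phi.nb <- dispersion(rnbinom(n, mu = mu, size = 1), x)
round(c(poisson = phi.pois, negbin = phi.nb), 2)
```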

Resid <- -1*sweep(lambda,2,dat$y,'-')/sqrt(lambda)
RSS <- apply(Resid^2,1,sum)
(df <- nrow(dat)-ncol(coefs))

[1] 18

Disp <- RSS/df
data.frame(Median=median(Disp), Mean=mean(Disp), HPDinterval(as.mcmc(Disp)), HPDinterval(as.mcmc(Disp),p=0.5))

Median Mean lower upper lower.1 upper.1 var1 1.044197 1.109181 0.9299409 1.452832 0.9299853 1.044238

dat.list <- with(dat,list(Y=y, X=x,N=nrow(dat)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    eta[i] <- beta0 + beta1*X[i]
    log(lambda[i]) <- eta[i]
    expY[i] <- lambda[i]
    varY[i] <- lambda[i]
    Resid[i] <- (Y[i] - expY[i])/sqrt(varY[i])
  }
  beta0 ~ dnorm(0,1.0E-06)
  beta1 ~ dnorm(0,1.0E-06)
  RSS <- sum(pow(Resid,2))
  df <- N-2
  phi <- RSS/df
}
"
writeLines(modelString,con='tut11.5bS1.40.bug')
library(R2jags)
system.time(
dat.P.jags <- jags(model='tut11.5bS1.40.bug', data=dat.list, inits=NULL,
  param=c('beta0','beta1','phi'),
  n.chain=3, n.iter=100000, n.thin=50, n.burnin=20000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 171 Initializing model

user system elapsed 14.865 0.004 14.886

print(dat.P.jags)

Inference for Bugs model at "tut11.5bS1.40.bug", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 50

n.sims = 4800 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta0 0.542 0.258 0.013 0.37 0.544 0.716 1.042 1.001 4800

beta1 0.112 0.019 0.075 0.10 0.112 0.125 0.149 1.001 4800

phi 1.112 0.400 0.934 0.98 1.052 1.173 1.570 1.001 4800

deviance 88.433 3.859 86.373 86.89 87.727 89.159 93.839 1.001 4800

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 7.4 and DIC = 95.9

DIC is an estimate of expected predictive error (lower deviance is better).

Resid.brm <- residuals(dat.brm, type='pearson', summary=FALSE)
SSres.brm <- apply(Resid.brm^2,1,sum)
(df <- nrow(dat) - nrow(coef(dat.brm)))

[1] 18

Disp <- SSres.brm/df
data.frame(Median=median(Disp), Mean=mean(Disp), HPDinterval(as.mcmc(Disp)), HPDinterval(as.mcmc(Disp)))

Median Mean lower upper lower.1 upper.1 var1 0.9666143 0.9944079 0.8964392 1.168199 0.8964392 1.168199

The dispersion statistic $\phi$ is close to 1 and thus there is no evidence that the data were overdispersed. The Poisson distribution was therefore appropriate.

Exploring the model parameters, test hypotheses

If there was any evidence that the assumptions had been violated or the model was not an appropriate fit, then we would need to reconsider the model and start the process again. In this case, there is no evidence that the test will be unreliable so we can proceed to explore the test statistics.

print(dat.P.jags)

Inference for Bugs model at "tut11.5bS1.40.bug", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 50

n.sims = 4800 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta0 0.542 0.258 0.013 0.37 0.544 0.716 1.042 1.001 4800

beta1 0.112 0.019 0.075 0.10 0.112 0.125 0.149 1.001 4800

phi 1.112 0.400 0.934 0.98 1.052 1.173 1.570 1.001 4800

deviance 88.433 3.859 86.373 86.89 87.727 89.159 93.839 1.001 4800

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 7.4 and DIC = 95.9

DIC is an estimate of expected predictive error (lower deviance is better).

library(plyr)
adply(dat.P.jags$BUGSoutput$sims.matrix[,1:2], 2, function(x) {
  data.frame(Median=median(x), Mean=mean(x), HPDinterval(as.mcmc(x)), HPDinterval(as.mcmc(x),p=0.5))
})

X1 Median Mean lower upper lower.1 upper.1 1 beta0 0.5445 0.5423 0.02752 1.0480 0.3617 0.7056 2 beta1 0.1123 0.1122 0.07599 0.1491 0.1001 0.1251

Actually, many find it more palatable to express the estimates in the original scale of the observations rather than on a log scale.

library(plyr)
adply(exp(dat.P.jags$BUGSoutput$sims.matrix[,1:2]), 2, function(x) {
  data.frame(Median=median(x), Mean=mean(x), HPDinterval(as.mcmc(x)), HPDinterval(as.mcmc(x),p=0.5))
})

X1 Median Mean lower upper lower.1 upper.1 1 beta0 1.724 1.778 0.9645 2.709 1.342 1.915 2 beta1 1.119 1.119 1.0790 1.161 1.105 1.133

Conclusions:

We would reject the null hypothesis of no effect of $x$ on $y$ (the 95% HPD interval for the slope does not include zero).

An increase in $x$ is associated with a significant linear increase (positive slope) in the log abundance of $y$.

Every 1 unit increase in $x$ results in a 0.1123415 unit increase in log $y$.

We usually express this in terms of abundance rather than log abundance. So every 1 unit increase in $x$ results in a ($e^{0.1123415} = 1.1188949$) 1.1188949-fold (approximately 11.9%) increase in the abundance of $y$.
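The back-transformed slope acts multiplicatively rather than additively. A short check makes this concrete, treating the posterior medians from the table above as if they were fixed values. The ratio of expected abundances one unit of $x$ apart equals $e^{\beta_1}$ wherever on the $x$ axis it is evaluated.

```r
b0 <- 0.5445        # posterior median intercept (log scale)
b1 <- 0.1123415     # posterior median slope (log scale)
expected <- function(x) exp(b0 + b1 * x)
# Ratio of expected abundance for a 1-unit increase in x, at three different x values
ratios <- expected(c(2, 11, 19)) / expected(c(1, 10, 18))
ratios
exp(b1)
```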

summary(dat.brm)

Family: poisson (log)

Formula: y ~ x

Data: dat (Number of observations: 20)

Samples: 3 chains, each with iter = 2000; warmup = 1000; thin = 2;

total post-warmup samples = 1500

WAIC: Not computed

Fixed Effects:

Estimate Est.Error l-95% CI u-95% CI Eff.Sample Rhat

Intercept 0.54 0.25 0.04 1.01 864 1

x 0.11 0.02 0.08 0.15 962 1

Samples were drawn using sampling(NUTS). For each parameter, Eff.Sample

is a crude measure of effective sample size, and Rhat is the potential

scale reduction factor on split chains (at convergence, Rhat = 1).

exp(coef(dat.brm))

mean Intercept 1.710068 x 1.119290

coefs.brm <- as.matrix(as.data.frame(rstan:::extract(dat.brm$fit)))
coefs.brm <- coefs.brm[,grep('b', colnames(coefs.brm))]
plyr:::adply(exp(coefs.brm), 2, function(x) {
  data.frame(Mean=mean(x), median=median(x), HPDinterval(as.mcmc(x)))
})

X1 Mean median lower upper 1 b_Intercept 1.763680 1.710650 0.950453 2.630181 2 b_x 1.119476 1.118679 1.082259 1.161513

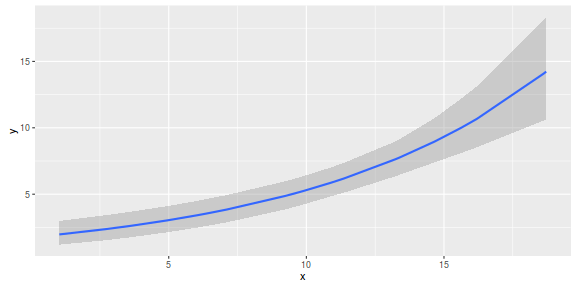

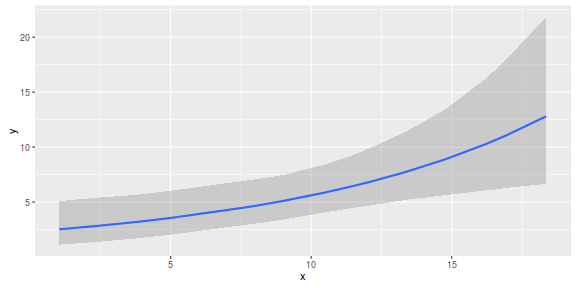

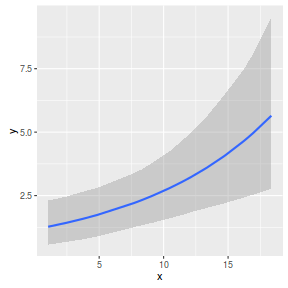

marginal_effects(dat.brm)

Further explorations of the trends

A measure of the strength of the relationship can be obtained according to: $$R^2 = 1 - \frac{RSS_{model}}{RSS_{null}}$$
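Here is a sketch of the calculation on simulated data. For illustration it assumes a single set of fitted values from base R's `glm()` rather than a posterior; the tutorial code instead averages the model RSS over posterior draws. It also confirms that the `crossprod()` form of the null RSS matches the explicit sum.

```r
set.seed(11)
x <- runif(20, 1, 20)
y <- rpois(20, exp(0.6 + 0.1 * x))
fit <- glm(y ~ x, family = poisson)
lambda.hat <- fitted(fit)                  # expected values on the response scale
RSS.model <- sum((y - lambda.hat)^2)       # raw residual sum of squares
RSS.null <- sum((y - mean(y))^2)           # residual SS of an intercept-only model
RSS.null2 <- c(crossprod(y - mean(y)))     # equivalent matrix form
R2 <- 1 - RSS.model / RSS.null
R2
```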

Xmat <- model.matrix(~x, data=dat)
#expected values on a log scale
eta <- coefs %*% t(Xmat)
#expected value on response scale
lambda <- exp(eta)
#calculate the raw SS residuals
SSres <- apply((-1*(sweep(lambda,2,dat$y,'-')))^2,1,sum)
SSres.null <- sum((dat$y - mean(dat$y))^2)
#OR
SSres.null <- crossprod(dat$y - mean(dat$y))
#calculate the model r2
1-mean(SSres)/SSres.null

[,1] [1,] 0.6572

Conclusions: 65.72% of the variation in $y$ abundance can be explained by its relationship with $x$.

dat.list <- with(dat,list(Y=y, X=x,N=nrow(dat)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    eta[i] <- beta0 + beta1*X[i]
    log(lambda[i]) <- eta[i]
    res[i] <- Y[i] - lambda[i]
    resnull[i] <- Y[i] - meanY
  }
  meanY <- mean(Y)
  beta0 ~ dnorm(0,1.0E-06)
  beta1 ~ dnorm(0,1.0E-06)
  RSS <- sum(res^2)
  RSSnull <- sum(resnull^2)
  r2 <- 1-RSS/RSSnull
}
"
writeLines(modelString,con='tut11.5bS1.40.bug')
library(R2jags)
system.time(
dat.P.jags <- jags(model='tut11.5bS1.40.bug', data=dat.list, inits=NULL,
  param=c('beta0','beta1','r2'),
  n.chain=3, n.iter=100000, n.thin=50, n.burnin=20000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 150 Initializing model

user system elapsed 14.909 0.008 14.933

print(dat.P.jags)

Inference for Bugs model at "tut11.5bS1.40.bug", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 50

n.sims = 4800 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta0 0.550 0.253 0.052 0.384 0.554 0.723 1.028 1.001 4800

beta1 0.112 0.019 0.076 0.099 0.112 0.124 0.149 1.001 4800

r2 0.655 0.066 0.509 0.640 0.673 0.691 0.701 1.001 4800

deviance 88.418 4.483 86.372 86.885 87.718 89.117 93.725 1.003 4800

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 10.1 and DIC = 98.5

DIC is an estimate of expected predictive error (lower deviance is better).

## calculate the expected values on the response scale
lambda.brm = fitted(dat.brm, scale='response', summary=FALSE)
## calculate the raw SSresid
SSres.brm <- apply((-1*(sweep(lambda.brm,2,dat$y,'-')))^2,1,sum)
SSres.null <- sum((dat$y - mean(dat$y))^2)
#OR
SSres.null <- crossprod(dat$y - mean(dat$y))
#calculate the model r2
1-mean(SSres.brm)/SSres.null

[,1] [1,] 0.6570747

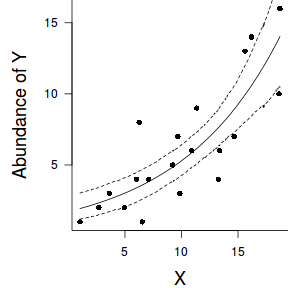

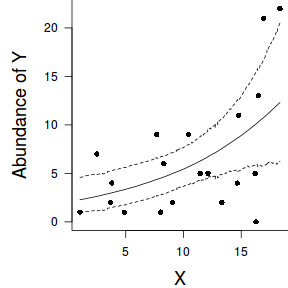

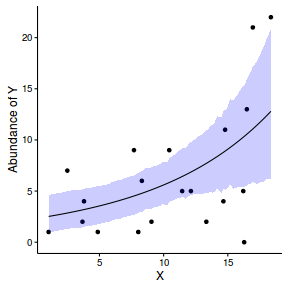

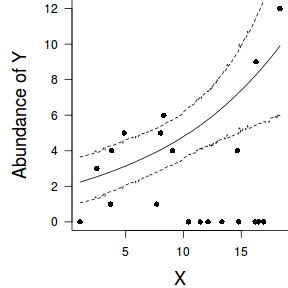

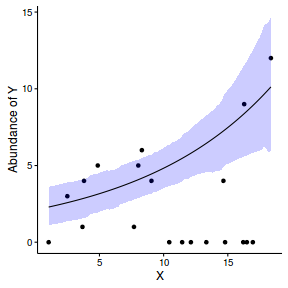

Finally, we will create a summary plot.

par(mar = c(4, 5, 0, 0))
plot(y ~ x, data = dat, type = "n", ann = F, axes = F)
points(y ~ x, data = dat, pch = 16)
xs <- seq(min(dat$x,na.rm=TRUE),max(dat$x,na.rm=TRUE), l = 1000)
Xmat <- model.matrix(~xs)
eta <- coefs %*% t(Xmat)
ys <- exp(eta)
library(plyr)
library(coda)
data.tab <- adply(ys,2,function(x) {
  data.frame(Median=median(x), HPDinterval(as.mcmc(x)))
})
data.tab <- cbind(x=xs,data.tab)
points(Median ~ x, data=data.tab,col = "black", type = "l")
lines(lower ~ x, data=data.tab,col = "black", type = "l", lty = 2)
lines(upper ~ x, data=data.tab,col = "black", type = "l", lty = 2)
axis(1)
mtext("X", 1, cex = 1.5, line = 3)
axis(2, las = 2)
mtext("Abundance of Y", 2, cex = 1.5, line = 3)
box(bty = "l")

newdata = data.frame(x=seq(min(dat$x), max(dat$x), len=100))
Xmat = model.matrix(~x, data=newdata)
coefs <- as.matrix(as.data.frame(rstan:::extract(dat.brm$fit)))
coefs <- coefs[,grep('b', colnames(coefs))]
fit = exp(coefs %*% t(Xmat))
newdata = cbind(newdata, plyr:::adply(fit, 2, function(x) {
  data.frame(Mean=mean(x), Median=median(x), HPDinterval(as.mcmc(x)))
}))
ggplot(newdata, aes(y=Mean, x=x)) + geom_point(data=dat, aes(y=y)) +
  geom_ribbon(aes(ymin=lower, ymax=upper), fill='blue',alpha=0.2) +
  geom_line() +
  scale_x_continuous('X') +
  scale_y_continuous('Abundance of Y') +
  theme_classic() +
  theme(axis.line.x=element_line(),axis.line.y=element_line())

Defining full log-likelihood function

Now let's try it by specifying the log-likelihood directly and using the zero trick. When applying this trick, we need to calculate the deviance manually. The inbuilt deviance will be based on the log-likelihood of observing the zeros (as part of the zero trick) rather than the deviance of the intended model.

One disadvantage of the zero trick is that the deviance, and thus the DIC and AIC, provided by R2jags will be incorrect. Hence, they too need to be defined manually within JAGS. I suspect that the AIC calculation I have used is incorrect...
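We can check numerically why the zero trick works, and why its inbuilt deviance is so large. Observing a zero from a Poisson with rate $C - \ell_i$ has probability $e^{-(C-\ell_i)} = e^{\ell_i}e^{-C}$, which is proportional to the intended likelihood $e^{\ell_i}$. On the deviance scale, each observation therefore contributes $-2\ell_i + 2C$. The reported deviance is inflated by $2NC$: with $N=20$ and $C=10000$ that is 400000, consistent with the deviance of roughly $4.001\times10^{5}$ in the `print(dat.P.jags3)` output. The parameter values below are assumptions for illustration only.

```r
set.seed(3)
N <- 20
C <- 10000
x <- runif(N, 1, 20)
lambda <- exp(0.55 + 0.11 * x)   # assumed parameter values, for illustration
y <- rpois(N, lambda)

# Intended Poisson log-likelihood, written out as in the JAGS model
ll <- y * log(lambda) - lambda - lgamma(y + 1)
# Zero trick: each zero is "observed" from a Poisson with rate C - ll
zeros.lambda <- -ll + C
ll.zero <- dpois(rep(0, N), zeros.lambda, log = TRUE)

dev.true <- -2 * sum(ll)
dev.zero <- -2 * sum(ll.zero)
c(true = dev.true, zero.trick = dev.zero, difference = dev.zero - dev.true)
```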

Xmat <- model.matrix(~x, dat)
nX <- ncol(Xmat)
dat.list2 <- with(dat,list(Y=y, X=Xmat,N=nrow(dat), mu=rep(0,nX), Sigma=diag(1.0E-06,nX),
  zeros=rep(0,nrow(dat)), C=10000))
modelString="
model {
  for (i in 1:N) {
    zeros[i] ~ dpois(zeros.lambda[i])
    zeros.lambda[i] <- -ll[i] + C
    ll[i] <- Y[i]*log(lambda[i]) - lambda[i] - loggam(Y[i]+1)
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- eta[i]
    llm[i] <- Y[i]*log(meanlambda) - meanlambda - loggam(Y[i]+1)
  }
  meanlambda <- mean(lambda)
  beta ~ dmnorm(mu[],Sigma[,])
  dev <- sum(-2*ll)
  pD <- mean(dev)-sum(-2*llm)
  AIC <- min(dev+(2*pD))
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS1.42.bug')
library(R2jags)
system.time(
dat.P.jags3 <- jags(model='../downloads/BUGSscripts/tut11.5bS1.42.bug', data=dat.list2, inits=NULL,
  param=c('beta','dev','AIC'),
  n.chain=3, n.iter=50000, n.thin=50, n.burnin=10000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 353 Initializing model

user system elapsed 1.936 0.004 1.942

print(dat.P.jags3)

Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS1.42.bug", fit using jags,

3 chains, each with 50000 iterations (first 10000 discarded), n.thin = 50

n.sims = 2400 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

AIC 1.328e+01 4.076 9.634e+00 1.050e+01 1.200e+01 1.458e+01 2.438e+01 1.001 2400

beta[1] 5.510e-01 0.245 6.200e-02 3.860e-01 5.540e-01 7.160e-01 1.008e+00 1.000 2400

beta[2] 1.120e-01 0.018 7.800e-02 9.900e-02 1.120e-01 1.240e-01 1.470e-01 1.001 2400

dev 8.821e+01 1.868 8.637e+01 8.686e+01 8.764e+01 8.897e+01 9.294e+01 1.002 2400

deviance 4.001e+05 1.868 4.001e+05 4.001e+05 4.001e+05 4.001e+05 4.001e+05 1.000 1

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 1.7 and DIC = 400090.0

DIC is an estimate of expected predictive error (lower deviance is better).

Negative binomial

The following equations are provided because, in Bayesian modelling, it is occasionally necessary to define the log-likelihood calculations directly (particularly for zero-inflated models and other mixture models). Feel free to gloss over these equations initially, until your models require them ;)

| prob, size | alpha, beta (gamma-Poisson) |
|---|---|
| $$ f(y \mid r,p)=\frac{\Gamma(y+r)}{\Gamma(r)\Gamma(y+1)}p^r(1-p)^y $$ where $p$ is the per-trial probability of success and $y$ is the number of failures before the $r$-th success ($r$ is the size). If we set $p=\frac{r}{r+\mu}$, then we can define the function in terms of the mean $\mu$: $$ f(y \mid r,\mu)=\frac{\Gamma(y+r)}{\Gamma(r)\Gamma(y+1)}\left(\frac{r}{\mu+r}\right)^r\left(1-\frac{r}{\mu+r}\right)^y $$ $$\mu = \frac{r(1-p)}{p}, \quad E(Y)=\mu, \quad Var(Y)=\mu+\frac{\mu^2}{r} $$ | The Poisson rate $\lambda$ is given a gamma distribution $$ f(\lambda \mid \alpha, \beta) = \frac{\beta^{\alpha}\lambda^{\alpha-1}e^{-\beta \lambda}}{\Gamma(\alpha)} $$ and integrating $\lambda$ out gives the negative binomial log-likelihood (with $E(Y_i)=\alpha/\beta_i$) $$ \begin{align} l(\alpha,\beta;y)=&\sum\limits^n_{i=1} \log\Gamma(y_i + \alpha) - \log\Gamma(y_i+1) - \log\Gamma(\alpha) + \\ & \alpha(\log(\beta_i) - \log(\beta_i+1)) - y_i\log(\beta_i+1) \end{align} $$ |
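We can check the two parameterisations in the table against base R's `dnbinom()`, which accepts `size` together with either `prob` or `mu`. The sketch below uses arbitrary illustrative values of $r$ and $\mu$, and sets $\alpha = r$ and $\beta = r/\mu$. It also confirms the stated mean and variance from the probability mass function. The support is truncated at 200, where the remaining tail mass is negligible for these values.

```r
y <- 0:200        # support truncated far into the negligible tail
r <- 1.5          # size (illustrative value)
mu <- 4           # mean (illustrative value)

# prob/size form with p = r/(r + mu), evaluated on the log scale to avoid overflow
p <- r / (r + mu)
f.manual <- exp(lgamma(y + r) - lgamma(r) - lgamma(y + 1) + r * log(p) + y * log(1 - p))
f.mu <- dnbinom(y, size = r, mu = mu)   # base R, mean parameterisation

# Moments implied by the probability mass function
mean.y <- sum(y * f.mu)
var.y <- sum((y - mean.y)^2 * f.mu)
c(mean = mean.y, var = var.y, theory.var = mu + mu^2 / r)

# alpha/beta (gamma-Poisson) form: alpha = r and beta = r/mu give the same distribution
alpha <- r
beta <- r / mu
ll.gp <- lgamma(y + alpha) - lgamma(y + 1) - lgamma(alpha) +
  alpha * (log(beta) - log(beta + 1)) - y * log(beta + 1)
```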

Scenario and Data

Let's say we wanted to model the abundance of an item ($y$) against a continuous predictor ($x$). This section is mainly about generating artificial data (not specifically about what to do with the data), so understanding the actual details is optional and the section can be safely skipped.

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the rate of change in log $y$ per unit of $x$ (slope) = 0.1.

- the value of log $y$ when $x$ equals 0 (the intercept) = 0.6

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor (created by calculating the matrix product of the model matrix and the regression parameters). These expected values are then transformed onto a scale mapped by (0,$\infty$) by using the inverse log (exponential) function $e^{linear~predictor}$

- finally, we generate $y$ values by using the expected $y$ values ($\lambda$) as the means when drawing random numbers from a negative binomial distribution (with size = 1). This step adds random noise to the expected $y$ values and returns only 0's and positive integers.

set.seed(37) #16 #35
#The number of samples
n.x <- 20
#Create x values that are uniformly distributed throughout the range of 1 to 20
x <- sort(runif(n = n.x, min = 1, max =20))
mm <- model.matrix(~x)
intercept <- 0.6
slope=0.1
#The linear predictor
linpred <- mm %*% c(intercept,slope)
#Predicted y values
lambda <- exp(linpred)
#Add some noise and make negative binomial
y <- rnbinom(n=n.x, mu=lambda, size=1)
dat.nb <- data.frame(y,x)

Exploratory data analysis and initial assumption checking

- All of the observations are independent - this must be addressed at the design and collection stages

- The response variable (and thus the residuals) should be matched by an appropriate distribution (in the case of a non-negative integer response, a Poisson is appropriate).

- All observations are equally influential in determining the trends - or at least no observations are overly influential. This is most effectively diagnosed via residuals and other influence indices and is very difficult to diagnose prior to analysis

- the relationship between the linear predictor (right hand side of the regression formula) and the link function should be linear. A scatterplot with smoother can be useful for identifying possible non-linearity.

- Dispersion is either 1 or overdispersion is otherwise accounted for in the model

- The number of zeros is either not excessive or else they are specifically addressed by the model

When counts are all very large (not close to 0) and their ranges do not span orders of magnitude, they take on very Gaussian properties (symmetrical distribution and variance independent of the mean). Given that models based on the Gaussian distribution are more optimized and recognized than Generalized Linear Models, it can be prudent to adopt Gaussian models for such data. Hence it is a good idea to first explore whether a Poisson or Negative Binomial model is likely to be more appropriate than a standard Gaussian model.

Recall from Poisson regression that there are five main potential models we could consider fitting to these data:

- Ordinary least squares regression (general linear model) - assumes normality of residuals

- Poisson regression - assumes mean=variance (dispersion=1)

- Quasi-poisson regression - a general solution to overdispersion. Assumes variance is a function of mean, dispersion estimated, however likelihood based statistics unavailable

- Negative binomial regression - a specific solution to overdispersion caused by clumping (due to an unmeasured latent variable). Scaling factor ($\omega$) is estimated along with the regression parameters.

- Zero-inflation model - a specific solution to overdispersion caused by excessive zeros (due to an unmeasured latent variable). Mixture of binomial and Poisson models.

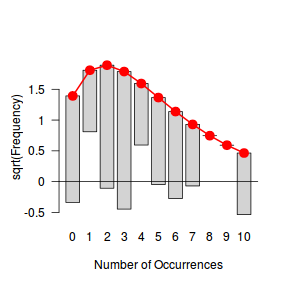

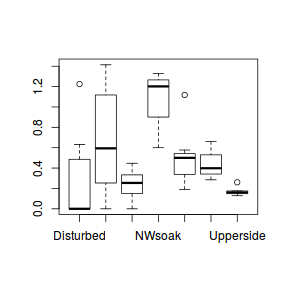

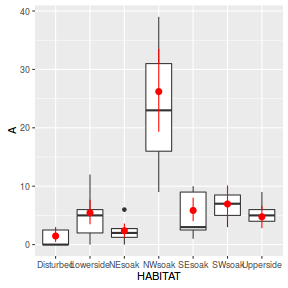

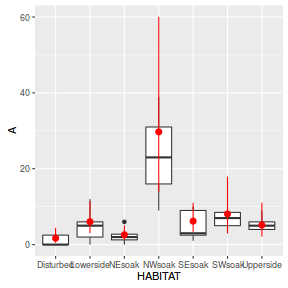

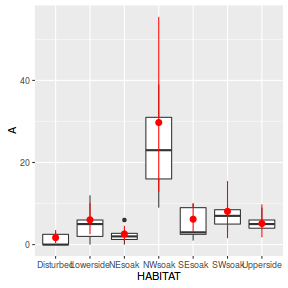

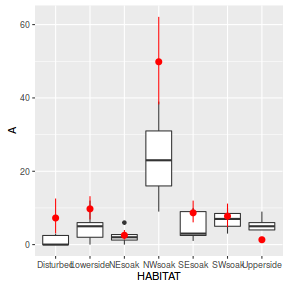

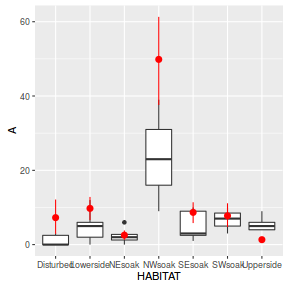

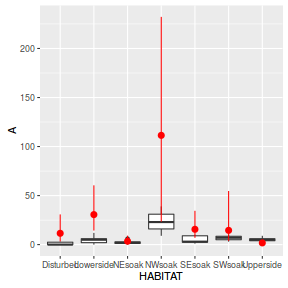

Confirm non-normality and explore clumping

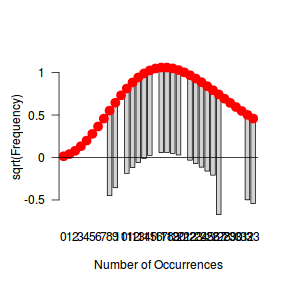

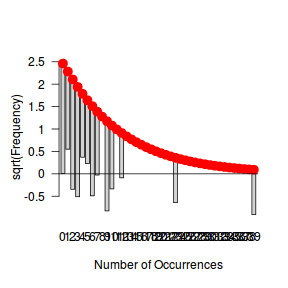

Check the distribution of the $y$ abundances

hist(dat.nb$y)

boxplot(dat.nb$y, horizontal=TRUE)
rug(jitter(dat.nb$y))

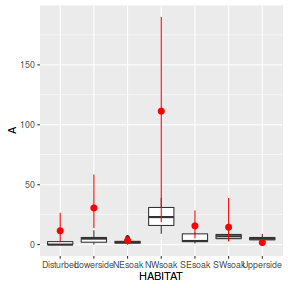

Confirm linearity

Let's now explore linearity by creating a histogram of the predictor variable ($x$) and a scatterplot of the relationship between the response ($y$) and the predictor ($x$).

hist(dat.nb$x)

#now for the scatterplot
plot(y~x, dat.nb, log="y")
with(dat.nb, lines(lowess(y~x)))

Conclusions: the predictor ($x$) does not display any skewness or other issues that might lead to non-linearity. The lowess smoother on the scatterplot does not display major deviations from a straight line and thus linearity is satisfied. Violations of linearity could be addressed by either:

- defining a non-linear linear predictor (such as a polynomial, spline or other non-linear function)

- transforming the scale of the predictor variables

Explore zero inflation

Although we have already established that there are few zeros in the data (and thus zero inflation is unlikely to be an issue), we can also explore this by comparing the number of zeros in the data to the number of zeros that would be expected from a Poisson distribution with a mean equal to the mean count of the data.

#proportion of 0's in the data
dat.nb.tab <- table(dat.nb$y==0)
dat.nb.tab/sum(dat.nb.tab)

FALSE TRUE 0.95 0.05

#proportion of 0's expected from a Poisson distribution
mu <- mean(dat.nb$y)
cnts <- rpois(1000, mu)
dat.nb.tabE <- table(cnts == 0)
dat.nb.tabE/sum(dat.nb.tabE)

FALSE

1
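The simulation above can also be replaced by an exact calculation. A Poisson with mean $\mu$ produces a zero with probability $e^{-\mu}$. A negative binomial with the same mean and size $r$ gives $\left(\frac{r}{r+\mu}\right)^r$, that is $1/(1+\mu)$ when $r=1$ (as used to generate these data). The value of `mu` below is a hypothetical stand-in for `mean(dat.nb$y)`. Like the simulation, this ignores the variation of $\mu$ with $x$.

```r
mu <- 7.5                                  # hypothetical stand-in for mean(dat.nb$y)
p0.pois <- dpois(0, lambda = mu)           # exp(-mu)
p0.nb <- dnbinom(0, size = 1, mu = mu)     # (r/(r + mu))^r with r = 1, i.e. 1/(1 + mu)
round(c(poisson = p0.pois, negbin.size1 = p0.nb), 4)
```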

Model fitting or statistical analysis

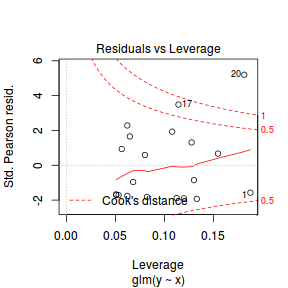

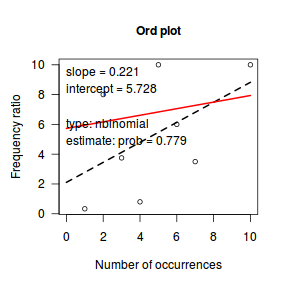

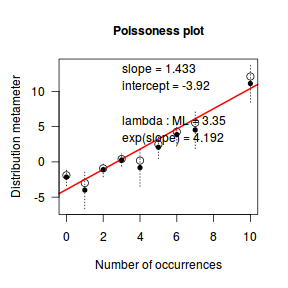

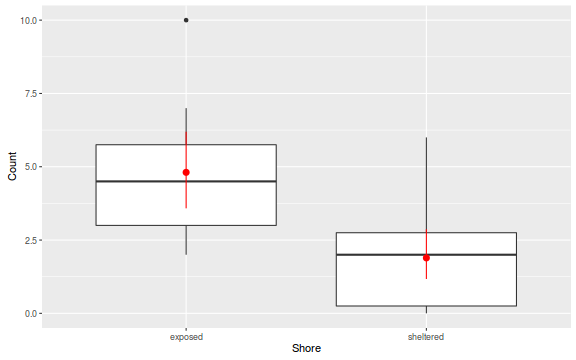

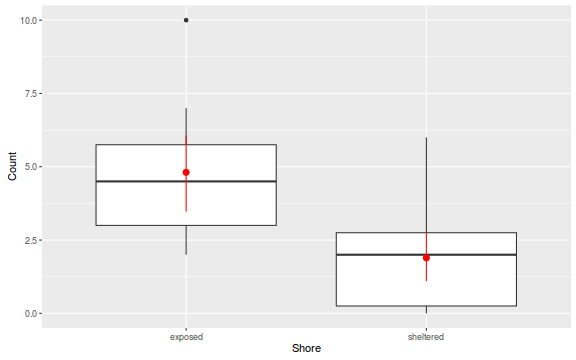

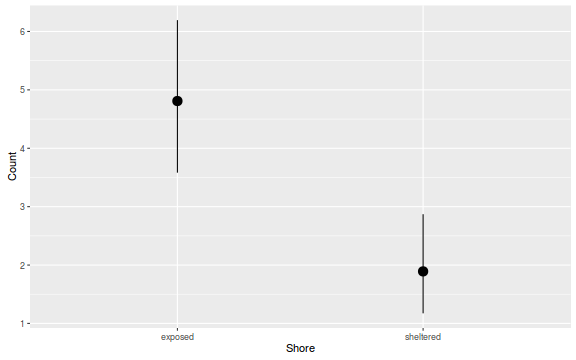

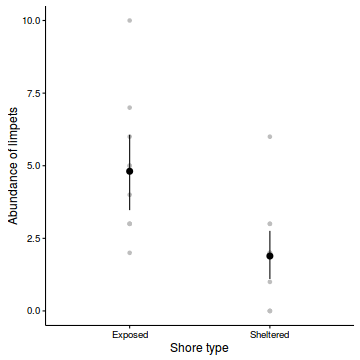

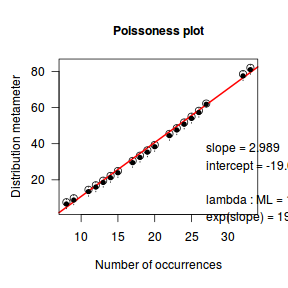

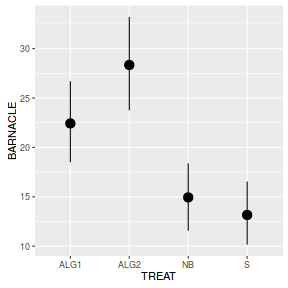

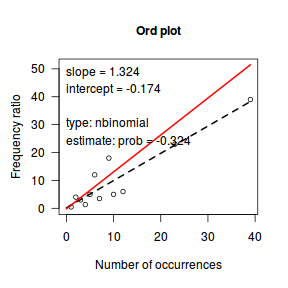

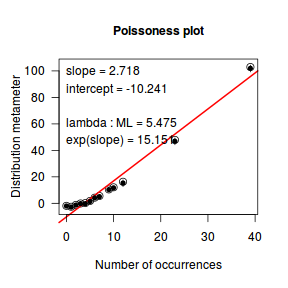

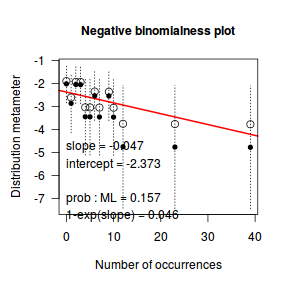

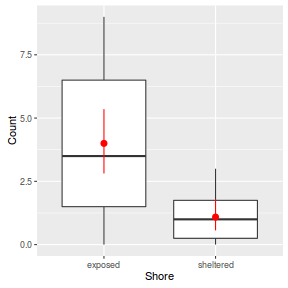

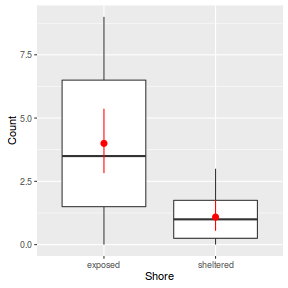

The boxplot of $y$ with the axis rug suggested that there might be some clumping (possibly due to some other unmeasured influence). It is therefore likely that the data are overdispersed.

dat.nb.list <- with(dat.nb,list(Y=y, X=x,N=nrow(dat.nb)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dpois(lambda[i])
    eta[i] <- beta0 + beta1*X[i]
    log(lambda[i]) <- eta[i]
  }
  beta0 ~ dnorm(0,1.0E-06)
  beta1 ~ dnorm(0,1.0E-06)
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS3.4.bug')
library(R2jags)
system.time(
dat.nb.P.jags <- jags(model='../downloads/BUGSscripts/tut11.5bS3.4.bug', data=dat.nb.list, inits=NULL,
  param=c('beta0','beta1'),
  n.chain=3, n.iter=20000, n.thin=10, n.burnin=10000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 105 Initializing model

user system elapsed 1.788 0.000 1.791

print(dat.nb.P.jags)

Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS3.4.bug", fit using jags,

3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10

n.sims = 3000 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta0 0.555 0.278 0.002 0.365 0.568 0.736 1.08 1.003 1000

beta1 0.111 0.020 0.072 0.098 0.111 0.125 0.15 1.002 1400

deviance 135.489 4.131 133.269 133.849 134.697 136.261 141.07 1.010 2700

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 8.5 and DIC = 144.0

DIC is an estimate of expected predictive error (lower deviance is better).

#extract the samples for the two model parameters
coefs <- dat.nb.P.jags$BUGSoutput$sims.matrix[,1:2]
Xmat <- model.matrix(~x, data=dat.nb)
#expected values on a log scale
eta <- coefs %*% t(Xmat)
#expected value on response scale
lambda <- exp(eta)
Resid <- -1*sweep(lambda,2,dat.nb$y, '-')/sqrt(lambda)
RSS <- apply(Resid^2, 1, sum)
Disp <- RSS/(nrow(dat.nb)-ncol(coefs))
data.frame(Median=median(Disp), Mean=mean(Disp), HPDinterval(as.mcmc(Disp)), HPDinterval(as.mcmc(Disp),p=0.5))

Median Mean lower upper lower.1 upper.1 var1 3.147 3.243 2.63 4.002 2.786 3.252

The dispersion parameter was 3.2425024, indicating over three times more variability than would be expected for a Poisson distribution. The data are thus overdispersed. Given that this is unlikely to be due to zero inflation and the rug plot did suggest some level of clumping, negative binomial regression would seem reasonable.

Model fitting or statistical analysis

JAGS

$$ \begin{align} Y_i&\sim{}NB(p_i,size) & (\text{response distribution})\\ p_i&=size/(size+\lambda_i)\\ log(\lambda_i)&=\eta_i & (\text{link function})\\ \eta_i&=\beta_0+\beta_1 X_i & (\text{linear predictor})\\ \beta_0, \beta_1&\sim{}\mathcal{N}(0,1000^2) & (\text{diffuse Bayesian prior})\\ size &\sim{}\mathcal{U}(0.001,1000)\\ \end{align} $$

dat.nb.list <- with(dat.nb,list(Y=y, X=x,N=nrow(dat.nb)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dnegbin(p[i],size)
    p[i] <- size/(size+lambda[i])
    log(lambda[i]) <- beta0 + beta1*X[i]
  }
  beta0 ~ dnorm(0,1.0E-06)
  beta1 ~ dnorm(0,1.0E-06)
  size ~ dunif(0.001,1000)
  theta <- pow(1/mean(p),2)
  scaleparam <- mean((1-p)/p)
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS4.1.bug')
library(R2jags)
system.time(
dat.NB.jags <- jags(model='../downloads/BUGSscripts/tut11.5bS4.1.bug', data=dat.nb.list, inits=NULL,
  param=c('beta0','beta1', 'size','theta','scaleparam'),
  n.chain=3, n.iter=20000, n.thin=10, n.burnin=10000)
)

Compiling model graph Resolving undeclared variables Allocating nodes Graph Size: 157 Initializing model

user system elapsed 17.430 0.008 17.455

Xmat <- model.matrix(~x, dat.nb)
nX <- ncol(Xmat)
dat.nb.list1 <- with(dat.nb,list(Y=y, X=Xmat,N=nrow(dat.nb), nX=nX))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dnegbin(p[i],size)
    p[i] <- size/(size+lambda[i])
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- max(-20,min(20,eta[i]))
  }
  for (i in 1:nX) {
    beta[i] ~ dnorm(0,1.0E-06)
  }
  size ~ dunif(0.001,10000)
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS4.11.bug')
library(R2jags)
system.time(
dat.NB.jags1 <- jags(model='../downloads/BUGSscripts/tut11.5bS4.11.bug', data=dat.nb.list1, inits=NULL,
  param=c('beta'),
  n.chain=3, n.iter=20000, n.thin=10, n.burnin=10000)
)

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 212

Initializing model

   user  system elapsed 
  17.01    0.00   17.02 
```

```r
print(dat.NB.jags1)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS4.11.bug", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10
 n.sims = 3000 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
beta[1]    0.733   0.415  -0.069   0.465   0.725   1.005   1.564 1.001  3000
beta[2]    0.097   0.033   0.032   0.075   0.097   0.118   0.163 1.001  3000
deviance 113.046   2.682 110.080 111.088 112.321 114.261 120.171 1.002  2000

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 3.6 and DIC = 116.6
DIC is an estimate of expected predictive error (lower deviance is better).
```
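The DIC line can be reproduced by hand from the summary table: under the rule printed above, $p_D = \mathrm{var}(D)/2$ and $\mathrm{DIC} = \bar{D} + p_D$, where $D$ is the deviance. The sketch below simply plugs in the rounded deviance mean (113.046) and sd (2.682) reported for `dat.NB.jags1`; because these inputs are rounded, agreement is only expected to the first decimal place.

```r
# Rounded deviance summary copied from print(dat.NB.jags1)
dev.mean <- 113.046
dev.sd   <- 2.682

pD  <- dev.sd^2 / 2      # pD = var(deviance) / 2
DIC <- dev.mean + pD     # DIC = mean deviance + pD
round(c(pD = pD, DIC = DIC), 1)   # pD 3.6, DIC 116.6
```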

Or, arguably better still, use a multivariate normal prior. If we have $k$ regression parameters ($\beta_k$), then the multivariate normal prior is defined as:

$$ \boldsymbol{\beta}\sim{}\mathcal{N}_k(\boldsymbol{\mu}, \mathbf{\Sigma}) $$

where

$$ \boldsymbol{\mu}=[E[\beta_1],E[\beta_2],...,E[\beta_k]] = \left(\begin{array}{c}0\\\vdots\\0\end{array}\right) $$

$$ \mathbf{\Sigma}=[Cov[\beta_i, \beta_j]] = \left(\begin{array}{ccc}1000^2&0&0\\0&\ddots&0\\0&0&1000^2\end{array} \right) $$

hence, along with the response and predictor matrix, we need to supply $\boldsymbol{\mu}$ (a vector of zeros) and $\boldsymbol{\Sigma}$ (a covariance matrix with $1000^2$ in the diagonals). Note that JAGS's dmnorm() is parameterized by the precision matrix $\mathbf{\Sigma}^{-1}$ rather than the covariance matrix, so the object named Sigma in the data list below actually holds $\mathbf{\Sigma}^{-1}$, a diagonal matrix with $1/1000^2 = 10^{-6}$ in the diagonals.
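Because JAGS's `dmnorm(mu, T)` expects a precision matrix $T=\mathbf{\Sigma}^{-1}$, the covariance matrix described above has to be inverted before it is passed in. A quick base-R check, assuming two regression parameters (intercept and slope) as in this example:

```r
nX <- 2                            # intercept and slope
Sigma <- diag(1000^2, nX)          # prior covariance: variance 1000^2 on the diagonal
Tau   <- solve(Sigma)              # precision matrix expected by JAGS's dmnorm()
all.equal(Tau, diag(1.0E-06, nX))  # matches the matrix supplied as 'Sigma' in the data list
```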

```r
Xmat <- model.matrix(~x, dat.nb)
nX <- ncol(Xmat)
# 'Sigma' is passed to dmnorm() as a precision matrix (inverse of the 1000^2 covariance)
dat.nb.list2 <- with(dat.nb, list(Y=y, X=Xmat, N=nrow(dat.nb),
                                  mu=rep(0,nX), Sigma=diag(1.0E-06,nX)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dnegbin(p[i],size)
    p[i] <- size/(size+lambda[i])
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- eta[i]
  }
  beta ~ dmnorm(mu[],Sigma[,])
  size ~ dunif(0.001,10000)
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS4.12.bug')

library(R2jags)
system.time(
  dat.NB.jags2 <- jags(model='../downloads/BUGSscripts/tut11.5bS4.12.bug',
                       data=dat.nb.list2, inits=NULL, param=c('beta', 'size'),
                       n.chain=3, n.iter=20000, n.thin=10, n.burnin=10000)
)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 173

Initializing model

   user  system elapsed 
  6.353   0.000   6.361 
```

```r
print(dat.NB.jags2)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS4.12.bug", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10
 n.sims = 3000 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
beta[1]    0.737   0.421  -0.097   0.460   0.736   1.013   1.555 1.002  3000
beta[2]    0.097   0.033   0.030   0.076   0.097   0.118   0.164 1.001  3000
size       3.102   1.931   1.048   1.885   2.615   3.749   7.885 1.001  3000
deviance 113.067   2.628 110.066 111.150 112.407 114.255 120.059 1.002  1600

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 3.5 and DIC = 116.5
DIC is an estimate of expected predictive error (lower deviance is better).
```

Alternatively, the model can be fitted in Stan via the brms package:

```r
library(brms)
dat.nb.brm <- brm(y~x, data=dat.nb, family='negbinomial',
                  prior=c(set_prior("normal(0,100)", class="b"),
                          set_prior("student_t(3,0,5)", class="shape")),
                  chains=3, iter=2000, warmup=1000, thin=2)
```

```
SAMPLING FOR MODEL 'negbinomial(log) brms-model' NOW (CHAIN 1).

Chain 1, Iteration:    1 / 2000 [  0%]  (Warmup)
Chain 1, Iteration:  200 / 2000 [ 10%]  (Warmup)
Chain 1, Iteration:  400 / 2000 [ 20%]  (Warmup)
Chain 1, Iteration:  600 / 2000 [ 30%]  (Warmup)
Chain 1, Iteration:  800 / 2000 [ 40%]  (Warmup)
Chain 1, Iteration: 1000 / 2000 [ 50%]  (Warmup)
Chain 1, Iteration: 1001 / 2000 [ 50%]  (Sampling)
Chain 1, Iteration: 1200 / 2000 [ 60%]  (Sampling)
Chain 1, Iteration: 1400 / 2000 [ 70%]  (Sampling)
Chain 1, Iteration: 1600 / 2000 [ 80%]  (Sampling)
Chain 1, Iteration: 1800 / 2000 [ 90%]  (Sampling)
Chain 1, Iteration: 2000 / 2000 [100%]  (Sampling)

 Elapsed Time: 0.129428 seconds (Warm-up)
               0.100482 seconds (Sampling)
               0.22991 seconds (Total)

SAMPLING FOR MODEL 'negbinomial(log) brms-model' NOW (CHAIN 2).

Chain 2, Iteration:    1 / 2000 [  0%]  (Warmup)
Chain 2, Iteration:  200 / 2000 [ 10%]  (Warmup)
Chain 2, Iteration:  400 / 2000 [ 20%]  (Warmup)
Chain 2, Iteration:  600 / 2000 [ 30%]  (Warmup)
Chain 2, Iteration:  800 / 2000 [ 40%]  (Warmup)
Chain 2, Iteration: 1000 / 2000 [ 50%]  (Warmup)
Chain 2, Iteration: 1001 / 2000 [ 50%]  (Sampling)
Chain 2, Iteration: 1200 / 2000 [ 60%]  (Sampling)
Chain 2, Iteration: 1400 / 2000 [ 70%]  (Sampling)
Chain 2, Iteration: 1600 / 2000 [ 80%]  (Sampling)
Chain 2, Iteration: 1800 / 2000 [ 90%]  (Sampling)
Chain 2, Iteration: 2000 / 2000 [100%]  (Sampling)

 Elapsed Time: 0.11 seconds (Warm-up)
               0.091671 seconds (Sampling)
               0.201671 seconds (Total)

SAMPLING FOR MODEL 'negbinomial(log) brms-model' NOW (CHAIN 3).

Chain 3, Iteration:    1 / 2000 [  0%]  (Warmup)
Chain 3, Iteration:  200 / 2000 [ 10%]  (Warmup)
Chain 3, Iteration:  400 / 2000 [ 20%]  (Warmup)
Chain 3, Iteration:  600 / 2000 [ 30%]  (Warmup)
Chain 3, Iteration:  800 / 2000 [ 40%]  (Warmup)
Chain 3, Iteration: 1000 / 2000 [ 50%]  (Warmup)
Chain 3, Iteration: 1001 / 2000 [ 50%]  (Sampling)
Chain 3, Iteration: 1200 / 2000 [ 60%]  (Sampling)
Chain 3, Iteration: 1400 / 2000 [ 70%]  (Sampling)
Chain 3, Iteration: 1600 / 2000 [ 80%]  (Sampling)
Chain 3, Iteration: 1800 / 2000 [ 90%]  (Sampling)
Chain 3, Iteration: 2000 / 2000 [100%]  (Sampling)

 Elapsed Time: 0.108511 seconds (Warm-up)
               0.074716 seconds (Sampling)
               0.183227 seconds (Total)
```

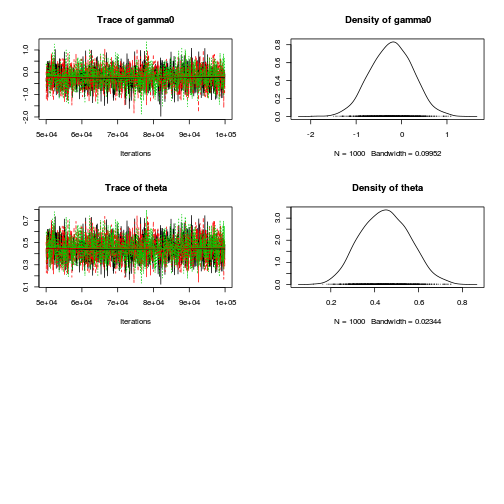

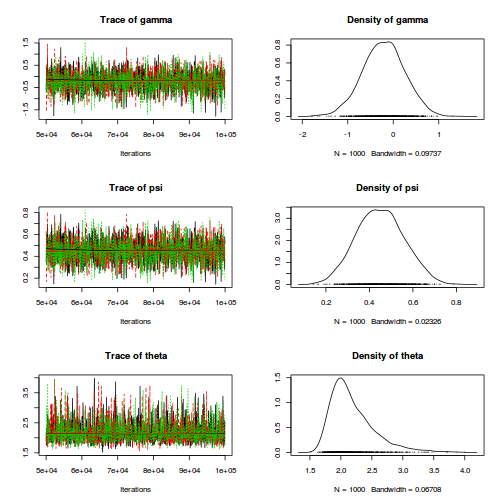

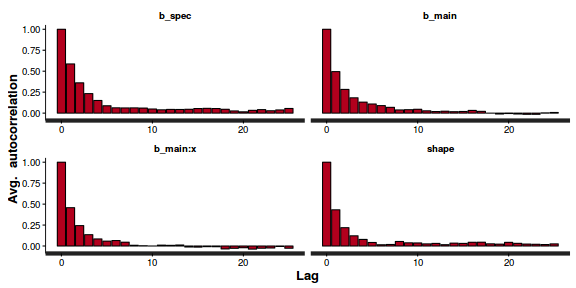

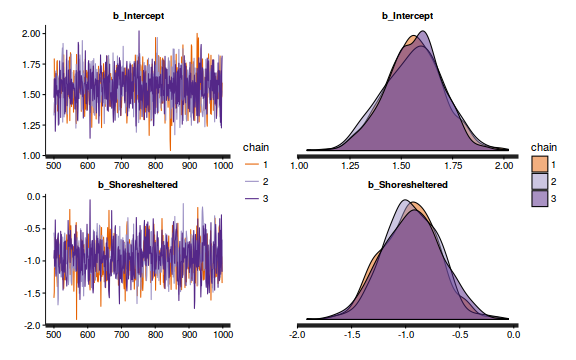

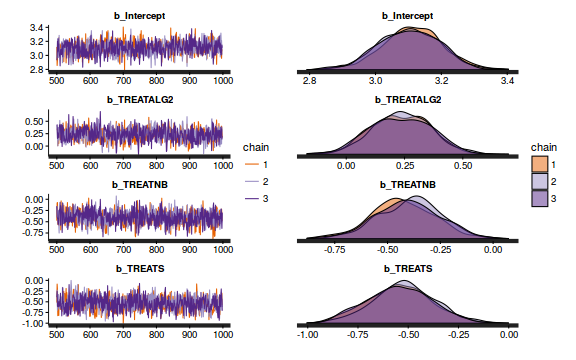

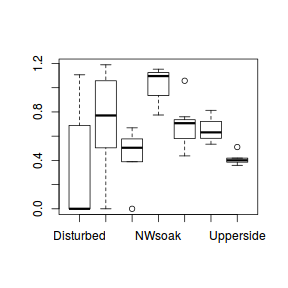

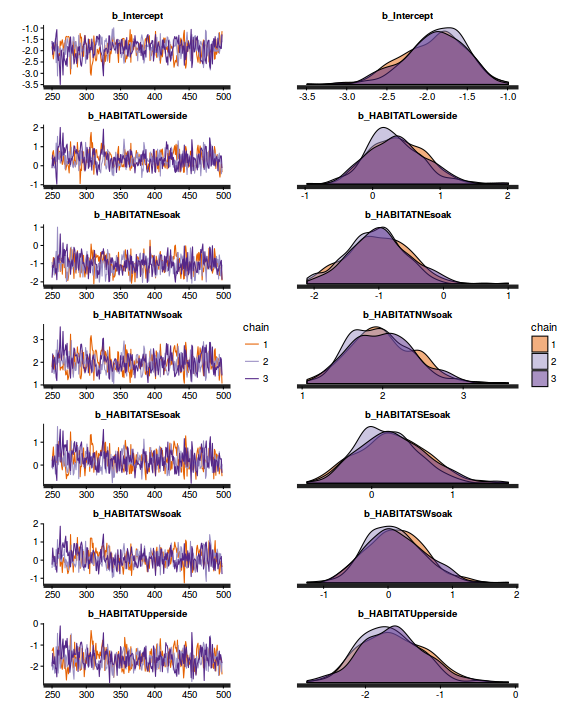

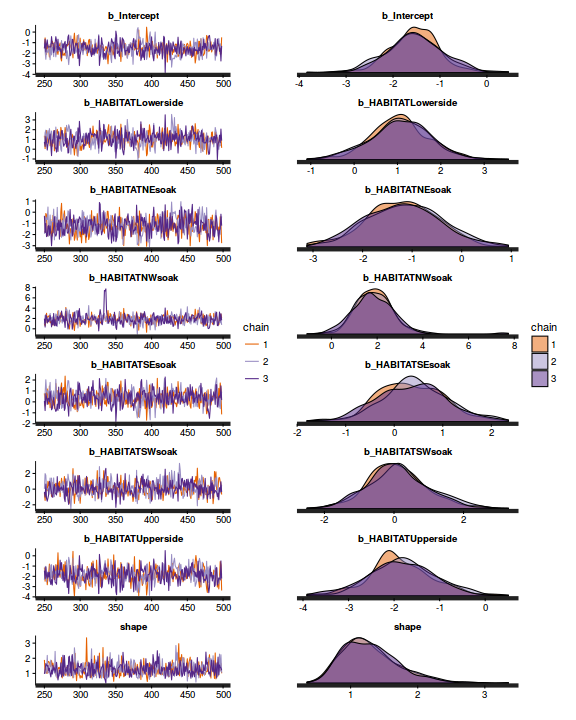

Chain mixing and model validation

Before exploring the model parameters, it is prudent to confirm that the model satisfies its assumptions and is an appropriate fit to the data, that the MCMC chains are adequately mixed, and that the retained samples are independent.

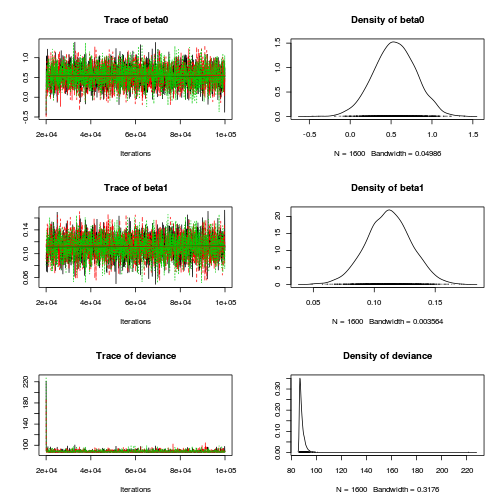

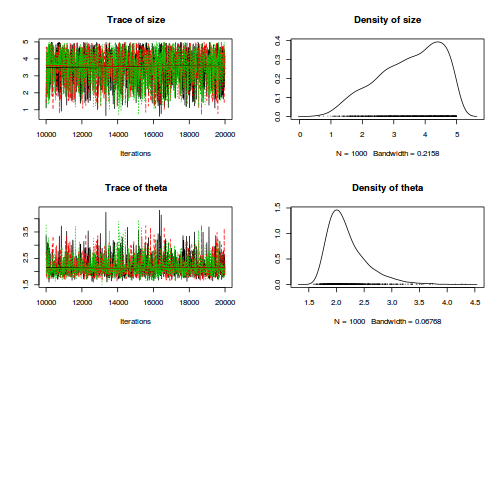

- We will start by exploring the mixing of the MCMC chains via traceplots.

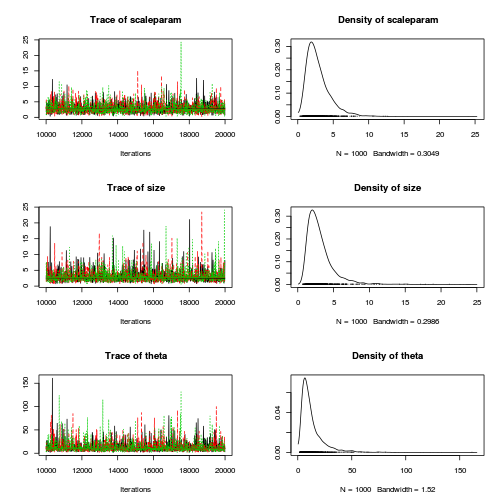

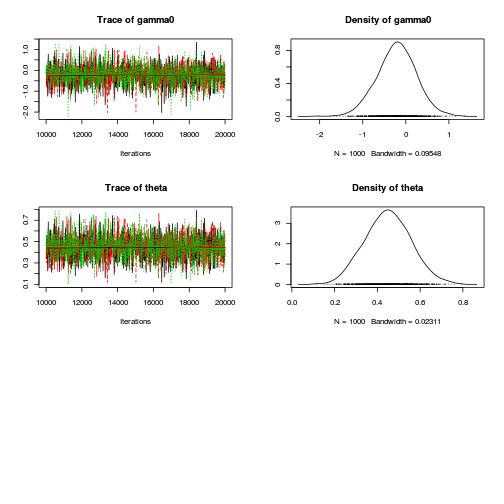

```r
plot(as.mcmc(dat.NB.jags))
```

```r
library(rstan)
library(gridExtra)
grid.arrange(stan_trace(dat.nb.brm$fit, ncol=1),
             stan_dens(dat.nb.brm$fit, separate_chains=TRUE, ncol=1),
             ncol=2)
```

The chains appear well mixed and stable.

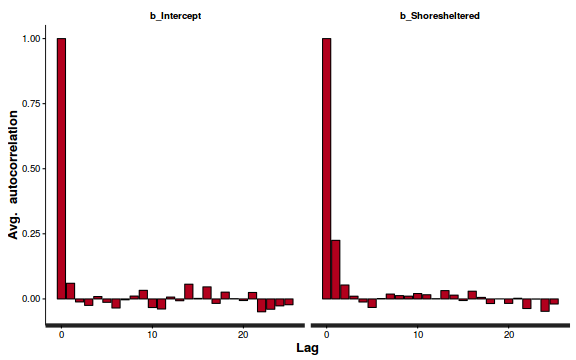

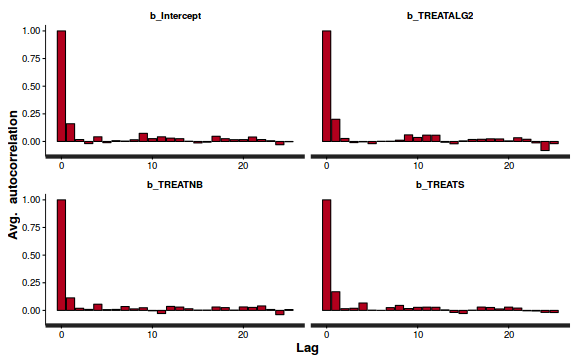

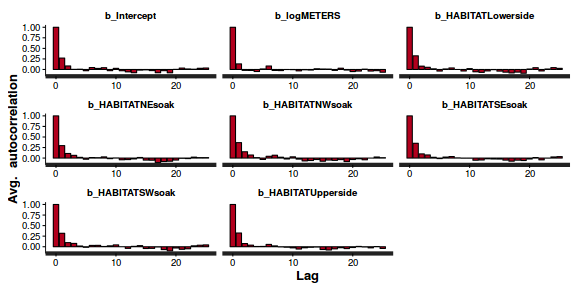

- Next we will explore correlation amongst MCMC samples.

```r
autocorr.diag(as.mcmc(dat.NB.jags))
```

```
           beta0    beta1   deviance scaleparam     size     theta
Lag 0    1.00000  1.00000  1.0000000  1.0000000  1.00000  1.000000
Lag 10   0.30859  0.30371  0.0455964  0.0180221  0.08759  0.028477
Lag 50  -0.05370 -0.05565  0.0011639 -0.0206865 -0.01812 -0.022056
Lag 100 -0.01028 -0.01914 -0.0003804 -0.0075196  0.01651 -0.004871
Lag 500  0.02130  0.01817 -0.0441559  0.0001324  0.01337  0.022265
```

The level of auto-correlation at the nominated lag of 10 (around 0.31 for beta0 and beta1) is higher than we would generally like. It is worth increasing the thinning interval from 10 to 50. To support this higher thinning rate, we also need to increase the number of iterations. For negative binomial models, it is typically worth having a large burn-in (approximately half of the iterations).
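To see why a larger thinning interval helps, consider a hypothetical chain whose draws follow a first-order autoregressive (AR(1)) process. This is an assumption made purely for illustration; real MCMC chains need not be AR(1). Keeping only every 10th or 50th draw shrinks the lag-1 autocorrelation of the retained samples from roughly $\phi$ to roughly $\phi^{10}$ or $\phi^{50}$:

```r
set.seed(1)
phi <- 0.9
# Simulated stand-in for a sticky MCMC chain: AR(1) with coefficient phi
chain <- as.numeric(arima.sim(model = list(ar = phi), n = 200000))

# Lag-1 autocorrelation of the samples retained under a given thinning interval
lag1 <- function(x, thin) {
  kept <- x[seq(1, length(x), by = thin)]
  acf(kept, lag.max = 1, plot = FALSE)$acf[2]   # element 1 is lag 0, element 2 is lag 1
}

ac <- c(thin1 = lag1(chain, 1), thin10 = lag1(chain, 10), thin50 = lag1(chain, 50))
round(ac, 3)
# Theoretical values for comparison: phi, phi^10, phi^50
round(c(phi, phi^10, phi^50), 3)
```

The cost of thinning is that more iterations must be run to retain the same number of samples, which is why the refit below also increases `n.iter`.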

```r
library(R2jags)
dat.NB.jags <- jags(data=dat.nb.list, model.file='../downloads/BUGSscripts/tut11.5bS4.1.bug',
                    param=c('beta0','beta1','size'),
                    n.chains=3, n.iter=50000, n.burnin=25000, n.thin=50)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 157

Initializing model
```

```r
print(dat.NB.jags)
```

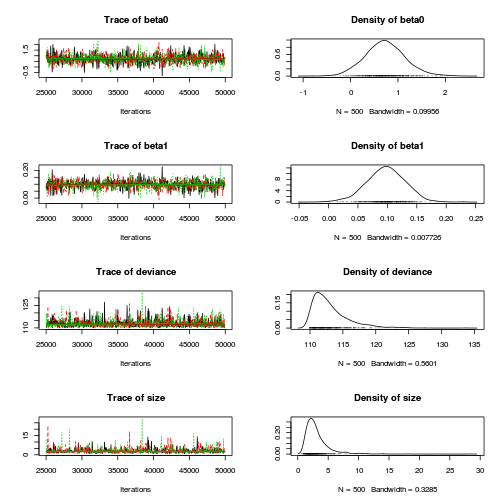

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS4.1.bug", fit using jags,
 3 chains, each with 50000 iterations (first 25000 discarded), n.thin = 50
 n.sims = 1500 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
beta0      0.730   0.421  -0.074   0.452   0.725   0.996   1.602 1.000  1500
beta1      0.097   0.033   0.030   0.077   0.098   0.119   0.157 1.001  1500
size       3.167   2.185   1.086   1.911   2.641   3.704   8.551 1.002  1100
deviance 113.089   2.760 110.053 111.122 112.340 114.179 120.468 1.004   780

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 3.8 and DIC = 116.9
DIC is an estimate of expected predictive error (lower deviance is better).
```

```r
plot(as.mcmc(dat.NB.jags))
```

```r
autocorr.diag(as.mcmc(dat.NB.jags))
```

```
              beta0     beta1  deviance     size
Lag 0      1.000000  1.000000  1.000000  1.00000
Lag 50     0.002132  0.007780  0.002472  0.00480
Lag 250   -0.014601 -0.013641 -0.012261 -0.06051
Lag 500    0.019129  0.015262 -0.023785  0.01291
Lag 2500   0.039030  0.008195 -0.013073 -0.01248
```

Conclusions: the samples are now far less auto-correlated (all lag-50 autocorrelations are below 0.01). Ideally, we should probably collect even more samples: whilst the traceplots are reasonably noisy, they show more of a signal or pattern than they ideally should.

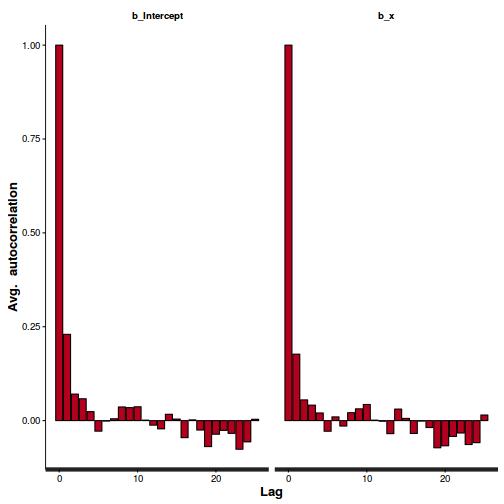

```r
stan_ac(dat.nb.brm$fit)
```

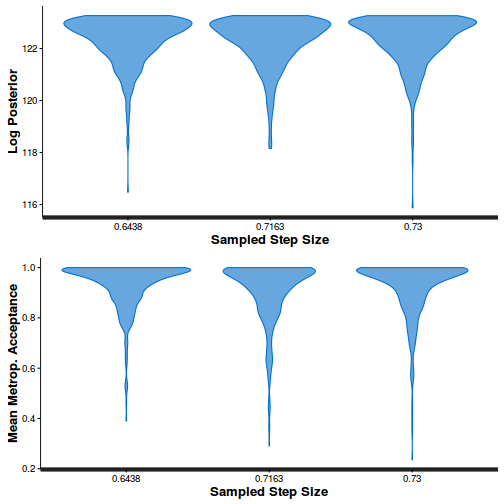

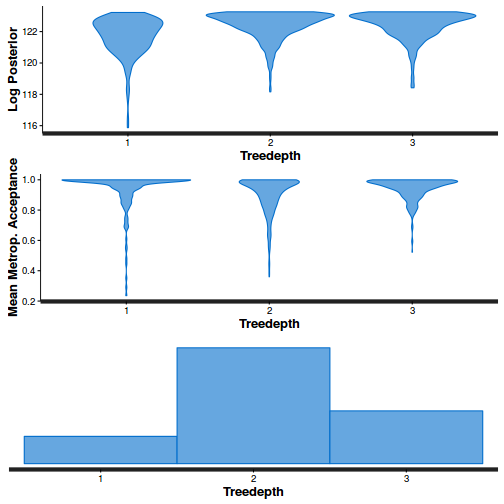

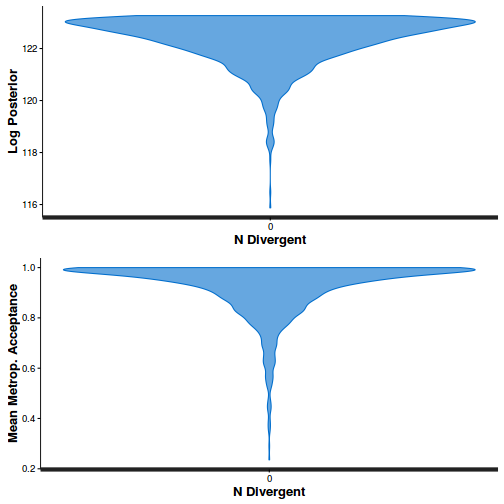

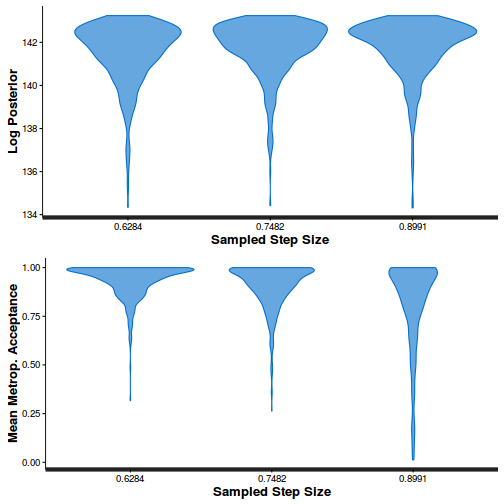

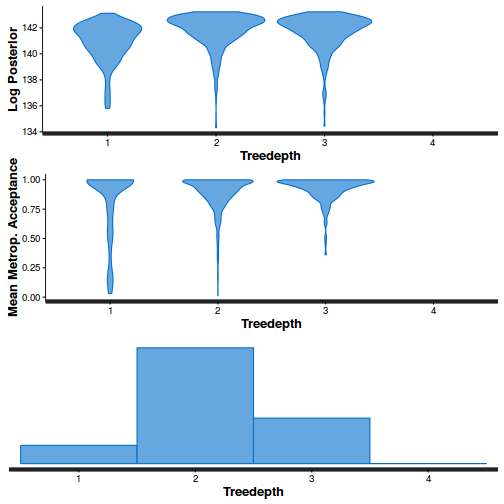

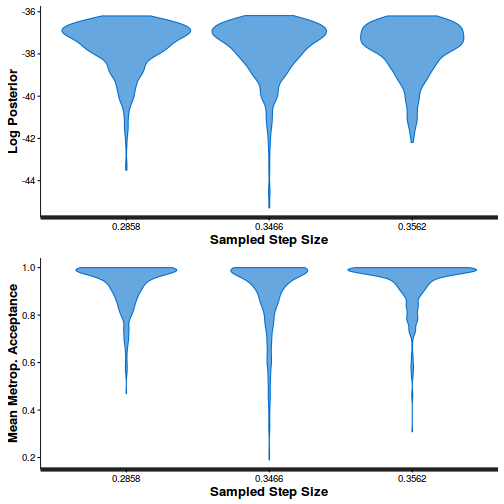

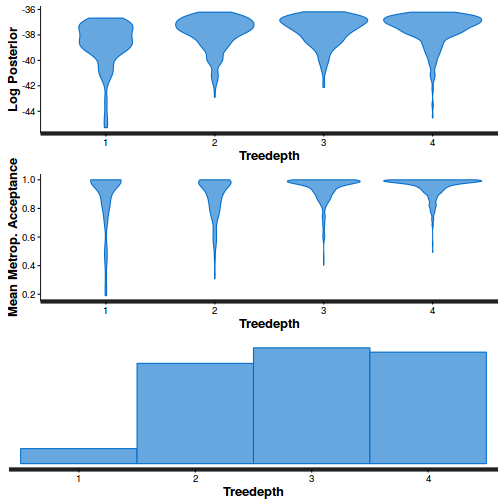

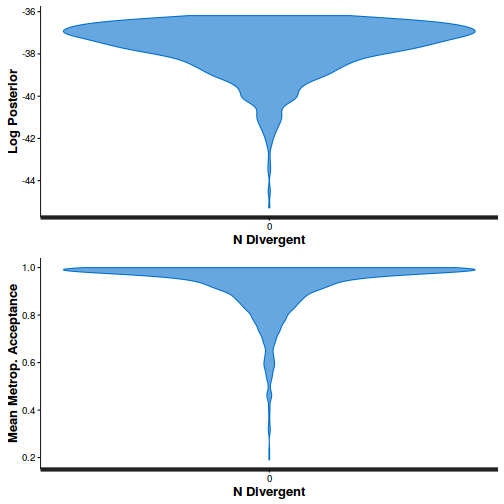

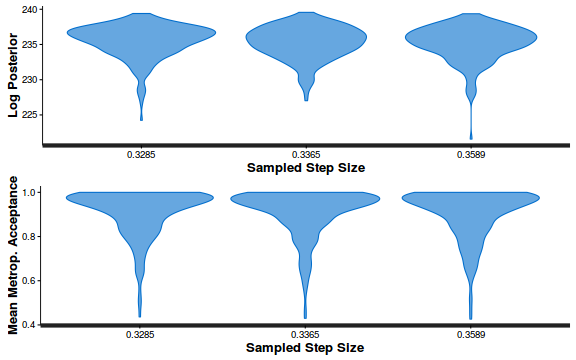

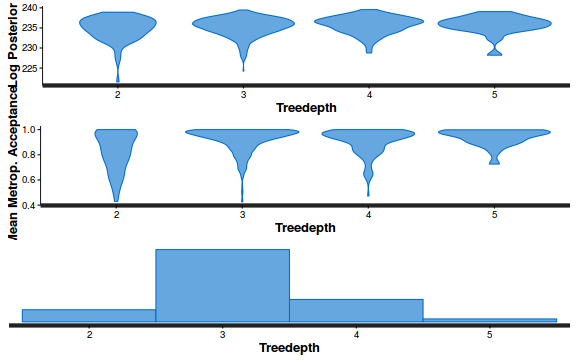

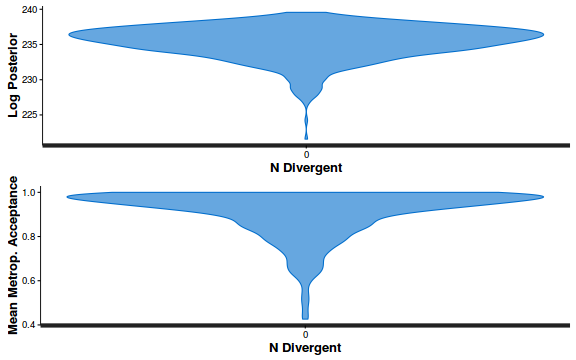

- Explore the step size characteristics (STAN only)

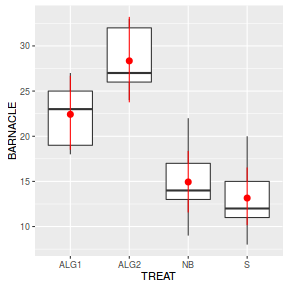

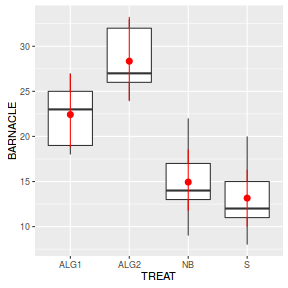

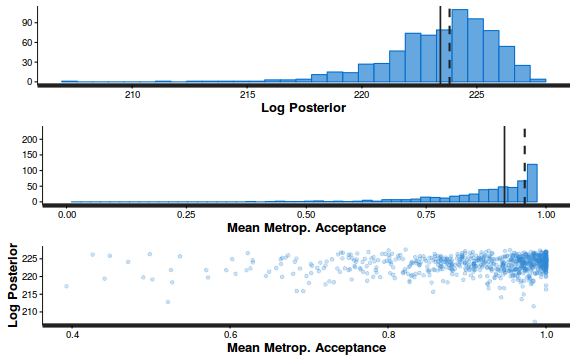

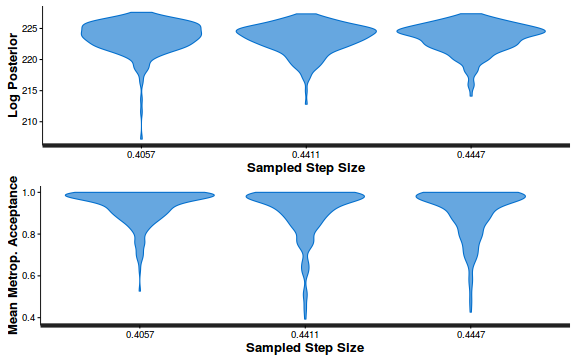

Conclusions: the acceptance ratio is very good, the log posterior is relatively robust to step size and tree depth, the Rhat values are all below 1.05, the ratio of effective sample size to total number of samples is always above 0.5, and the ratio of MCMC error to posterior sd is very small.
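The effective sample size summarizes how many independent draws a correlated chain is worth. A simple estimator is $n_{\mathrm{eff}} = n / (1 + 2\sum_{k\ge1}\rho_k)$, with the sum truncated once the autocorrelations $\rho_k$ first turn negative. The sketch below applies that simple truncation rule (a simplification; rstan and coda use more elaborate estimators) to a simulated AR(1) chain, for which the exact value $n(1-\phi)/(1+\phi)$ is known. It also shows that the MCMC error, as a fraction of the posterior sd, is approximately $1/\sqrt{n_{\mathrm{eff}}}$.

```r
set.seed(2)
phi <- 0.5
n   <- 100000
# Hypothetical correlated chain (AR(1)), used only for illustration
chain <- as.numeric(arima.sim(model = list(ar = phi), n = n))

# Simple ESS estimator: truncate the autocorrelation sum at the first negative lag
ess_simple <- function(x, max.lag = 200) {
  rho <- acf(x, lag.max = max.lag, plot = FALSE)$acf[-1]   # drop lag 0
  first.neg <- which(rho < 0)[1]
  if (!is.na(first.neg)) rho <- rho[seq_len(first.neg - 1)]
  length(x) / (1 + 2 * sum(rho))
}

n.eff <- ess_simple(chain)
n.eff                        # estimated effective sample size
n * (1 - phi) / (1 + phi)    # theoretical value for an AR(1) chain
n.eff / n                    # ratio of effective to total samples
1 / sqrt(n.eff)              # approximate MCMC error as a fraction of the posterior sd
```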

```r
summary(do.call(rbind, args = get_sampler_params(dat.nb.brm$fit, inc_warmup = FALSE)), digits = 2)
```

```
 accept_stat__    stepsize__    treedepth__   n_leapfrog__   n_divergent__
 Min.   :0.012   Min.   :0.63   Min.   :1.0   Min.   : 1.0   Min.   :0    
 1st Qu.:0.850   1st Qu.:0.63   1st Qu.:2.0   1st Qu.: 3.0   1st Qu.:0    
 Median :0.937   Median :0.75   Median :2.0   Median : 3.0   Median :0    
 Mean   :0.884   Mean   :0.76   Mean   :2.2   Mean   : 3.8   Mean   :0    
 3rd Qu.:0.990   3rd Qu.:0.90   3rd Qu.:3.0   3rd Qu.: 5.0   3rd Qu.:0    
 Max.   :1.000   Max.   :0.90   Max.   :4.0   Max.   :15.0   Max.   :0    
```

```r
stan_diag(dat.nb.brm$fit)
stan_diag(dat.nb.brm$fit, information = "stepsize")
stan_diag(dat.nb.brm$fit, information = "treedepth")
stan_diag(dat.nb.brm$fit, information = "divergence")
```

```r
library(ggplot2)
library(gridExtra)
grid.arrange(stan_rhat(dat.nb.brm$fit) + theme_classic(8),
             stan_ess(dat.nb.brm$fit) + theme_classic(8),
             stan_mcse(dat.nb.brm$fit) + theme_classic(8),
             ncol = 2)
```

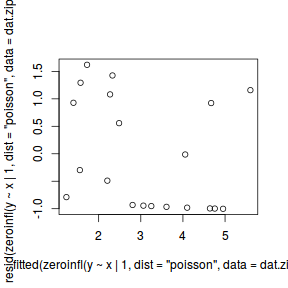

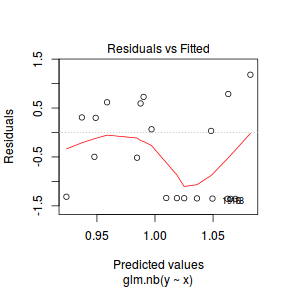

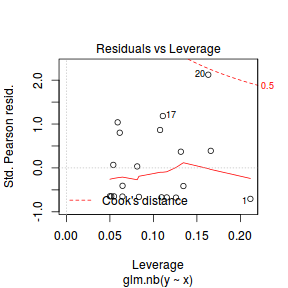

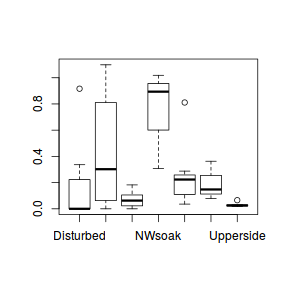

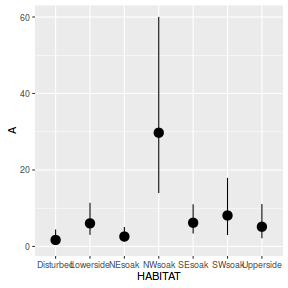

- We now explore the goodness of fit of the models via the residuals and deviance.

We could calculate the Pearson's residuals within the JAGS model.

Alternatively, we could use the parameters to generate the residuals outside of JAGS.

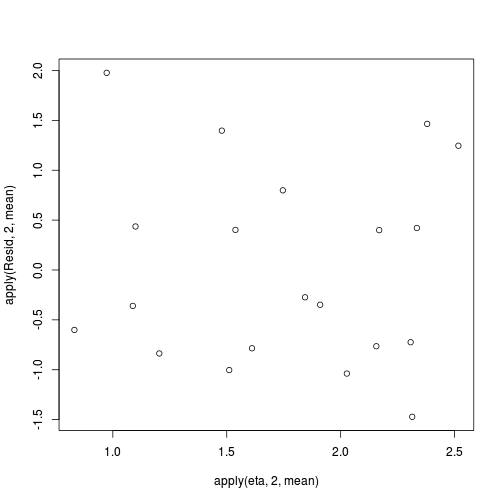

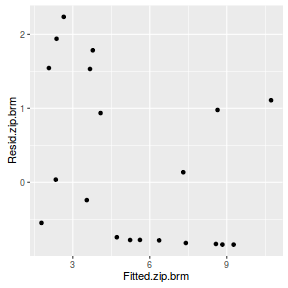

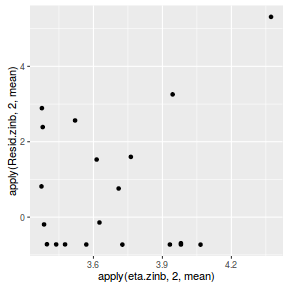

```r
# extract the samples for the two model parameters (columns beta0 and beta1)
coefs <- dat.NB.jags$BUGSoutput$sims.matrix[,1:2]
size <- dat.NB.jags$BUGSoutput$sims.matrix[,'size']
Xmat <- model.matrix(~x, data=dat.nb)
# expected values on a log scale
eta <- coefs %*% t(Xmat)
# expected values on the response scale
lambda <- exp(eta)
# negative binomial variance for each posterior draw (row) and observation (column)
varY <- lambda + (lambda^2)/size
# Pearson residuals: (observed - expected) divided by sqrt(variance)
Resid <- -1*sweep(lambda,2,dat.nb$y,'-')/sqrt(varY)
# plot mean residuals against mean expected values (log scale)
plot(apply(Resid,2,mean)~apply(eta,2,mean))
```
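The residual calculation above standardizes by $\mathrm{var}(Y)=\lambda + \lambda^2/size$, the negative binomial variance under the mean/size parameterization. A quick base-R simulation (with arbitrary illustrative values for `lambda` and `size`) supports that formula, shows how far it departs from the Poisson assumption $\mathrm{var}(Y)=\lambda$, and confirms that Pearson residuals built from it have mean near 0 and variance near 1:

```r
set.seed(3)
lambda <- 6     # illustrative mean
size   <- 2.5   # illustrative dispersion parameter

y <- rnbinom(1e6, mu = lambda, size = size)

c(simulated.mean = mean(y), simulated.var = var(y))
c(nb.var = lambda + lambda^2 / size, poisson.var = lambda)   # 20.4 versus 6

# Pearson residuals for the simulated counts, using the negative binomial variance
pearson <- (y - lambda) / sqrt(lambda + lambda^2 / size)
c(mean = mean(pearson), var = var(pearson))   # close to 0 and 1 respectively
```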

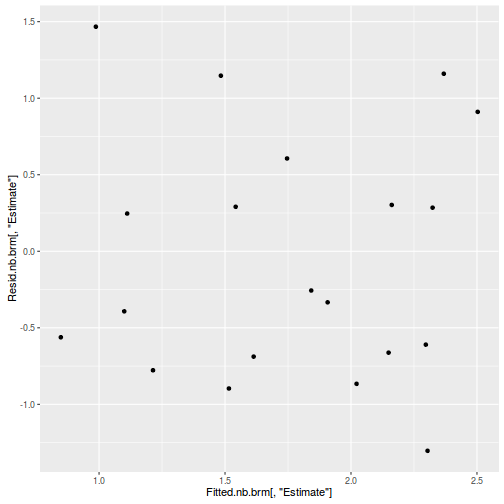

```r
# Calculate residuals
Resid.nb.brm <- residuals(dat.nb.brm, type='pearson')
Fitted.nb.brm <- fitted(dat.nb.brm, scale='linear')
ggplot(data=NULL, aes(y=Resid.nb.brm[,'Estimate'], x=Fitted.nb.brm[,'Estimate'])) + geom_point()
```

There are no real patterns in the residuals.

- Now we will compare the sum of squared residuals to the sum of squared residuals that would be expected from a negative binomial distribution matching that estimated by the model. Essentially, this estimates how well the negative binomial distribution, the log-link function and the linear model approximate the observed data.

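```r
# sum of squared Pearson residuals for the observed data (one value per posterior draw)
SSres <- apply(Resid^2,1,sum)
set.seed(2)
# generate a matrix of draws from a negative binomial distribution with the same
# dimensions as lambda, using each posterior draw's expected values and size
YNew <- matrix(rnbinom(length(lambda),mu=lambda, size=size),nrow=nrow(lambda))
Resid1 <- (lambda - YNew)/sqrt(varY)
SSres.sim <- apply(Resid1^2,1,sum)
# Bayesian p-value: proportion of draws in which the simulated SS exceeds the observed SS
mean(SSres.sim>SSres)
```

```
[1] 0.4127
```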
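The logic of this posterior predictive check can be illustrated with a self-contained toy example. The data are hypothetical and, unlike the tutorial's version (which pairs each posterior draw with its own replicate data set), a single fixed expected value stands in for the posterior. Counts are simulated from an over-dispersed negative binomial, and the discrepancy (sum of squared Pearson residuals) of the observed counts is compared with that of replicate data sets drawn from (a) a negative binomial model and (b) a Poisson model. Under the badly mis-specified Poisson model the observed discrepancy is almost never exceeded, so its p-value collapses towards 0.

```r
set.seed(4)
N    <- 50
mu   <- 5
size <- 0.5
y.obs <- rnbinom(N, mu = mu, size = size)   # hypothetical over-dispersed counts

# Proportion of replicate data sets whose sum of squared Pearson residuals
# exceeds that of the observed data
ppc_pvalue <- function(y, mu, var.fun, rng, n.rep = 2000) {
  ss.obs <- sum((y - mu)^2 / var.fun(mu))
  ss.sim <- replicate(n.rep, {
    y.new <- rng(length(y), mu)
    sum((y.new - mu)^2 / var.fun(mu))
  })
  mean(ss.sim > ss.obs)
}

p.nb <- ppc_pvalue(y.obs, mu,
                   var.fun = function(m) m + m^2 / size,
                   rng = function(n, m) rnbinom(n, mu = m, size = size))
p.pois <- ppc_pvalue(y.obs, mu,
                     var.fun = function(m) m,
                     rng = function(n, m) rpois(n, m))
c(negbin = p.nb, poisson = p.pois)
```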

Alternatively, the residuals and the Bayesian p-value can be calculated within the JAGS model itself:

```r
Xmat <- model.matrix(~x, dat.nb)
nX <- ncol(Xmat)
dat.nb.list2 <- with(dat.nb, list(Y=y, X=Xmat, N=nrow(dat.nb),
                                  mu=rep(0,nX), Sigma=diag(1.0E-06,nX)))
modelString="
model {
  for (i in 1:N) {
    Y[i] ~ dnegbin(p[i],size)
    p[i] <- size/(size+lambda[i])
    eta[i] <- inprod(beta[], X[i,])
    log(lambda[i]) <- eta[i]
    # replicate data drawn from the fitted negative binomial
    Y1[i] ~ dnegbin(p[i],size)
    varY[i] <- lambda[i] + pow(lambda[i],2)/size
    Resid[i] <- (Y[i] - lambda[i])/sqrt(varY[i])
    Resid1[i] <- (Y1[i] - lambda[i])/sqrt(varY[i])
    RSS[i] <- pow(Resid[i],2)
    RSS1[i] <- pow(Resid1[i],2)
  }
  beta ~ dmnorm(mu[],Sigma[,])
  size ~ dunif(0.001,10000)
  Pvalue <- mean(sum(RSS1)>sum(RSS))
}
"
writeLines(modelString,con='../downloads/BUGSscripts/tut11.5bS4.61.bug')

library(R2jags)
system.time(
  dat.NB.jags3 <- jags(model='../downloads/BUGSscripts/tut11.5bS4.61.bug',
                       data=dat.nb.list2, inits=NULL, param=c('beta','Pvalue'),
                       n.chain=3, n.iter=20000, n.thin=10, n.burnin=10000)
)
```

```
Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 400

Initializing model

   user  system elapsed 
  6.717   0.000   6.726 
```

```r
print(dat.NB.jags3)
```

```
Inference for Bugs model at "../downloads/BUGSscripts/tut11.5bS4.61.bug", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 10
 n.sims = 3000 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
Pvalue     0.421   0.494   0.000   0.000   0.000   1.000   1.000 1.002  1000
beta[1]    0.763   0.422  -0.035   0.482   0.746   1.044   1.619 1.002  1400
beta[2]    0.095   0.033   0.030   0.072   0.095   0.117   0.160 1.002  1500
deviance 113.064   2.596 110.071 111.143 112.361 114.276 119.730 1.001  3000

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 3.4 and DIC = 116.4
DIC is an estimate of expected predictive error (lower deviance is better).
```

The equivalent calculation for the brms fit:

```r
Resid.nb.brm <- residuals(dat.nb.brm, type='pearson', summary=FALSE)
SSres.nb.brm <- apply(Resid.nb.brm^2,1,sum)
lambda.nb.brm = fitted(dat.nb.brm, scale='response', summary=FALSE)
# note: replicate counts here are drawn from a Poisson (not negative binomial)
# distribution and standardized by the Poisson variance (lambda)
YNew.nb.brm <- matrix(rpois(length(lambda.nb.brm), lambda=lambda.nb.brm),nrow=nrow(lambda.nb.brm))
Resid1.nb.brm <- (lambda.nb.brm - YNew.nb.brm)/sqrt(lambda.nb.brm)
SSres.sim.nb.brm <- apply(Resid1.nb.brm^2,1,sum)
mean(SSres.sim.nb.brm>SSres.nb.brm)
```

```
[1] 0.8286667
```

Conclusions: the Bayesian p-values from the JAGS-based calculations (0.41 and 0.42) are not far from 0.5, suggesting that the model fits the data well.

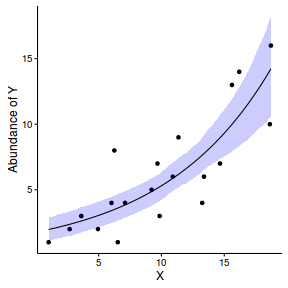

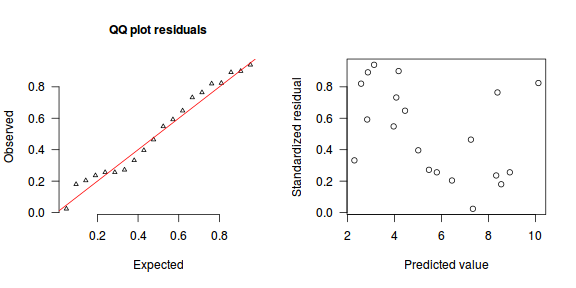

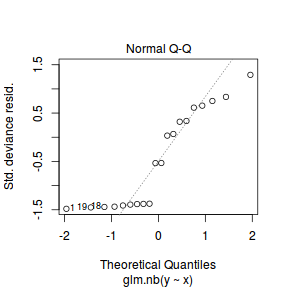

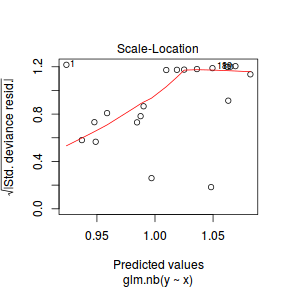

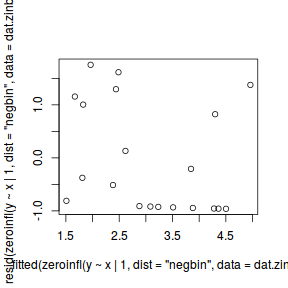

- Unfortunately, unlike with linear models (Gaussian family), the expected distribution of data (residuals) varies over the range of fitted values in numerous (often competing) ways, which makes diagnosing (and attributing causes to) mis-specified generalized linear models from standard residual plots very difficult. The use of standardized (Pearson) residuals or deviance residuals can partly address this issue, yet they still do not offer completely consistent diagnoses across all issues (mis-specified model, over-dispersion, zero-inflation).

An alternative approach is to use data simulated from the model posteriors to calculate an empirical cumulative distribution function, from which residuals are generated as the values corresponding to the observed data along that function.
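A minimal base-R sketch of this idea follows. It assumes a hypothetical fitted model summarized by one expected value per observation (here simply the true means used to simulate the data). For each observation, counts are simulated from the model and jittered by $\mathcal{U}(-0.5,0.5)$ to break ties, and the jittered observed count is located on the empirical CDF of those simulations. If the model is adequate, the resulting residuals should look approximately uniform on [0, 1]; a model whose simulations are less dispersed than the data tends to push residuals towards 0 and 1.

```r
set.seed(5)
N <- 200
x <- seq(0, 10, length.out = N)
lambda <- exp(0.7 + 0.1 * x)               # hypothetical fitted means
y <- rnbinom(N, mu = lambda, size = 3)     # hypothetical observed counts

sim_resid <- function(y, lambda, size, n.sim = 250) {
  sapply(seq_along(y), function(i) {
    # simulate from the fitted negative binomial, jittered to break ties
    sims <- rnbinom(n.sim, mu = lambda[i], size = size) + runif(n.sim, -0.5, 0.5)
    # position of the jittered observation on the empirical CDF of the simulations
    ecdf(sims)(y[i] + runif(1, -0.5, 0.5))
  })
}

res.nb   <- sim_resid(y, lambda, size = 3)     # correctly specified model
res.pois <- sim_resid(y, lambda, size = 1e6)   # near-Poisson model (very large size)

summary(res.nb)
# Proportion of residuals in the extreme tails (below 0.05 or above 0.95)
c(nb = mean(res.nb < 0.05 | res.nb > 0.95),
  poisson = mean(res.pois < 0.05 | res.pois > 0.95))
```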

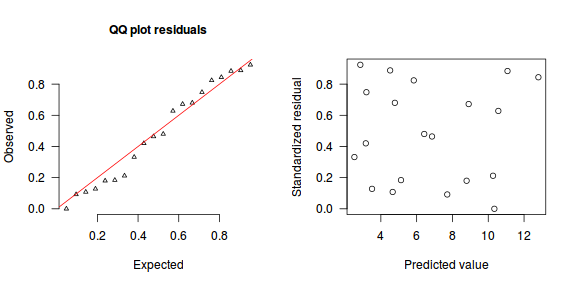

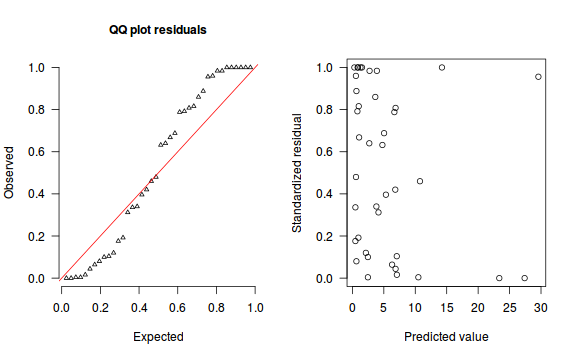

The trend (black symbols) in the qq-plot does not appear to be overly non-linear (it matches the ideal red line well), suggesting that the model is not overdispersed. The spread of standardized (simulated) residuals in the residual plot does not appear overly non-uniform; that is, there is no trend in the residuals. Furthermore, there is no concentration of points close to 1 or 0 (which would imply overdispersion).

```r
# extract the samples for the two model parameters (columns beta0 and beta1)
coefs <- dat.NB.jags$BUGSoutput$sims.matrix[,1:2]
size <- dat.NB.jags$BUGSoutput$sims.matrix[,'size']
Xmat <- model.matrix(~x, data=dat.nb)
# expected values on a log scale
eta <- coefs %*% t(Xmat)
# expected values on the response scale
lambda <- exp(eta)

simRes <- function(lambda, data, n=250, plot=T, family='negbin', size=NULL) {
  require(gap)
  N = nrow(data)
  # simulate n data sets from the posterior mean expected value of each observation
  sim = switch(family,
    'poisson' = matrix(rpois(n*N,apply(lambda,2,mean)),ncol=N, byrow=TRUE),
    'negbin' = matrix(MASS:::rnegbin(n*N,apply(lambda,2,mean),size),ncol=N, byrow=TRUE)
  )
  # empirical CDF of the jittered simulated values for each observation
  a = apply(sim + runif(n,-0.5,0.5),2,ecdf)
  resid <- NULL
  # locate each jittered observation on its empirical CDF
  for (i in 1:nrow(data)) resid <- c(resid,a[[i]](data$y[i] + runif(1 ,-0.5,0.5)))
  if (plot==T) {
    par(mfrow=c(1,2))
    gap::qqunif(resid,pch = 2, bty = "n", logscale = F, col = "black", cex = 0.6,
                main = "QQ plot residuals", cex.main = 1, las=1)
    plot(resid~apply(lambda,2,mean), xlab='Predicted value', ylab='Standardized residual', las=1)
  }
  resid
}
simRes(lambda,dat.nb, family='negbin', size=mean(size))
```

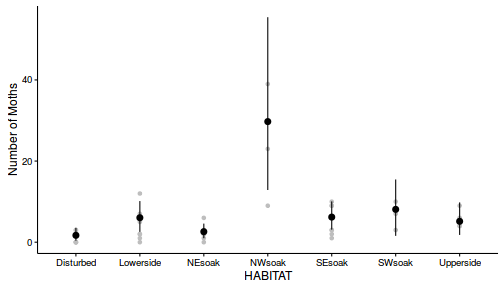

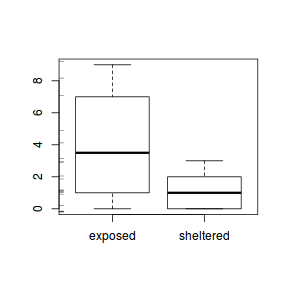

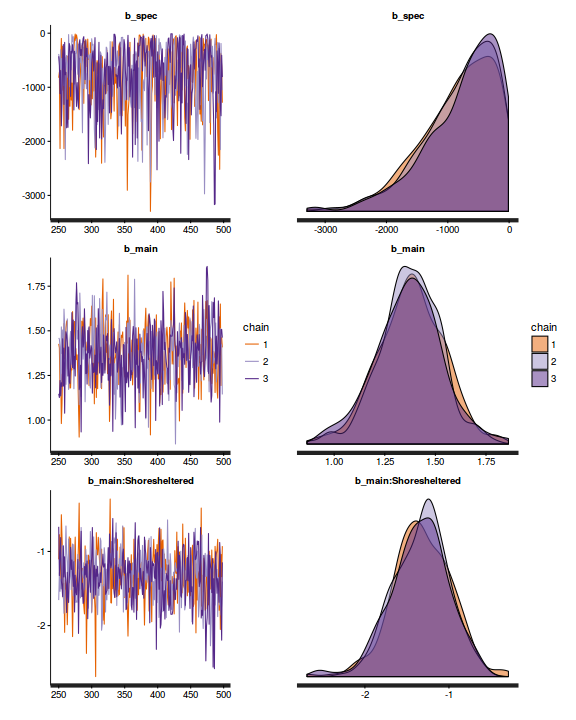

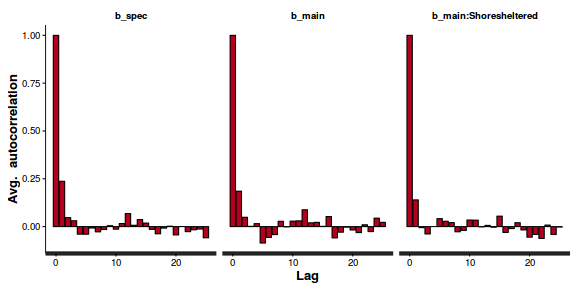

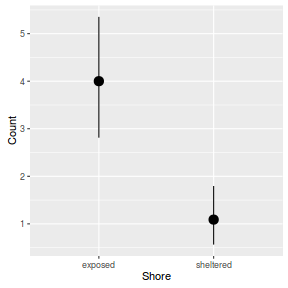

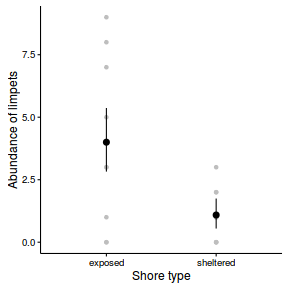

```
[1] 0.208 0.956 0.428 0.688 0.080 0.888 0.124 0.728 0.144 0.824 0.460 0.352 0.100 0.172 0.684 0.208 0.004 0.688 0.892 0.876
```