Tutorial 12.10 - Integrated Nested Laplace Approximation (INLA) for (generalized) linear models

25 Feb 2016

Overview

Tutorial 12.9 introduced the basic INLA framework as well as a fully fleshed-out example using a fabricated data set. The current tutorial will focus on (generalized) linear models, using both fabricated data and real worked examples.

In this tutorial we have an opportunity to explore INLA for multilevel (hierarchical) models. Although performing very simple linear regression with INLA might be seen as overkill (as it does not really utilize the main features for which INLA is designed), from a pedagogical perspective, starting with simpler models for which robust and well-understood solutions exist allows us to gradually build up an understanding of how INLA works. Model complexity can then be introduced gradually, allowing us to explore techniques progressively on more solid foundations. All data sets will be fabricated from set parameters so that we always have a 'truth' against which to compare outcomes, and, as a point of comparison, each data set will be followed by Frequentist and Bayesian MCMC outcomes.

Simple regression

library(R2jags)
library(INLA)
library(ggplot2)

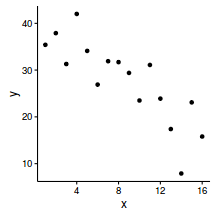

Let's say we had set up an experiment in which we applied a continuous treatment ($x$) ranging in magnitude from 1 to 16 to a total of 16 sampling units ($n=16$) and then measured a response ($y$) from each unit. As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped.

- the sample size = 16

- the continuous $x$ variable ranging from 1 to 16

- when the value of $x$ is 0, $y$ is expected to be 40 ($\beta_0=40$)

- a 1 unit increase in $x$ is associated with a 1.5 unit decline in $y$ ($\beta_1=-1.5$)

- the residuals are drawn from a normal distribution with a mean of 0 and a standard deviation of 5 ($\sigma^2=25$)

set.seed(1)
n <- 16
a <- 40        #intercept
b <- -1.5      #slope
sigma2 <- 25   #residual variance (sd=5)
x <- 1:n       #values of the x covariate
eps <- rnorm(n, mean = 0, sd = sqrt(sigma2))  #residuals
y <- a + b * x + eps                          #response variable
#OR y <- (model.matrix(~x) %*% c(a,b))+eps
data <- data.frame(y=round(y,1), x)  #dataset
head(data)  #print out the first six rows of the data set

     y x
1 35.4 1
2 37.9 2
3 31.3 3
4 42.0 4
5 34.1 5
6 26.9 6

ggplot(data, aes(y=y, x=x)) + geom_point() + theme_classic()

$$ y_i = \alpha + \beta x_i + \varepsilon_i \hspace{4em}\varepsilon_i\sim{}\mathcal{N}(0, \sigma^2) $$

summary(lm(y~x, data=data))

Call:

lm(formula = y ~ x, data = data)

Residuals:

Min 1Q Median 3Q Max

-11.3694 -3.5053 0.6239 2.6522 7.3909

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 40.7450 2.6718 15.250 4.09e-10 ***

x -1.5340 0.2763 -5.552 7.13e-05 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 5.095 on 14 degrees of freedom

Multiple R-squared: 0.6876, Adjusted R-squared: 0.6653

F-statistic: 30.82 on 1 and 14 DF, p-value: 7.134e-05

confint(lm(y~x, data=data))

                2.5 %     97.5 %
(Intercept) 35.014624 46.4753756
x           -2.126592 -0.9413493

modelString="
model {
   #Likelihood
   for (i in 1:n) {
      y[i]~dnorm(mu[i],tau)
      mu[i] <- alpha+beta*x[i]
   }

   #Priors
   alpha ~ dnorm(0,1.0E-6)
   beta ~ dnorm(0,1.0E-6)
   tau <- 1 / (sigma * sigma)
   sigma~dgamma(0.1,0.001)
   #tau <- exp(log.tau)
   #log.tau ~ dgamma(1,0.01)
}
"
data.list <- with(data, list(y=y, x=x, n=nrow(data)))
data.jags <- jags(data=data.list, inits=NULL,
   parameters.to.save=c('alpha','beta', 'sigma'),
   model.file=textConnection(modelString),
   n.chains=3, n.iter=100000, n.burnin=20000, n.thin=100
)

Compiling model graph
   Resolving undeclared variables
   Allocating nodes
   Graph Size: 75

Initializing model

print(data.jags)

Inference for Bugs model at "5", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 100

n.sims = 2400 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

alpha 40.813 2.837 35.375 38.950 40.766 42.656 46.533 1.001 2400

beta -1.540 0.291 -2.119 -1.729 -1.536 -1.344 -0.973 1.001 2400

sigma 5.383 1.089 3.739 4.600 5.195 6.001 7.939 1.000 2400

deviance 98.660 2.585 95.609 96.706 98.040 99.958 105.200 1.001 2300

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 3.3 and DIC = 102.0

DIC is an estimate of expected predictive error (lower deviance is better).

data.inla <- inla(y~x, data=data, control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE))

The inla() function returns an object of class inla, for which two methods are available (summary and plot). For a fitted inla model, the summary method returns the fixed and random effects as well as a number of information criteria and limited fit diagnostics.

summary(data.inla)

Call:

c("inla(formula = y ~ x, data = data, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE))")

Time used:

Pre-processing Running inla Post-processing Total

0.1013 0.0632 0.0360 0.2006

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 40.7441 2.5688 35.6573 40.7440 45.8219 40.7442 0

x -1.5339 0.2657 -2.0599 -1.5339 -1.0087 -1.5339 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.0438 0.0155 0.0199 0.0418 0.0798 0.0378

Expected number of effective parameters(std dev): 2.00(0.00)

Number of equivalent replicates : 8.00

Deviance Information Criterion (DIC) ...: 100.64

Effective number of parameters .........: 2.634

Watanabe-Akaike information criterion (WAIC) ...: 101.40

Effective number of parameters .................: 2.942

Marginal log-Likelihood: -64.55

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

Let's focus on the different components of this output. The 'Fixed' effects represent a set of summaries from the posterior distribution. These results are very similar to those yielded from the Frequentist and Bayesian MCMC approaches.

               mean     sd 0.025quant 0.5quant 0.975quant    mode kld
(Intercept) 40.7441 2.5688    35.6573  40.7440    45.8219 40.7442   0
x           -1.5339 0.2657    -2.0599  -1.5339    -1.0087 -1.5339   0

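The 'Model hyperparameters' summarize the posterior of the precision ($\tau = 1/\sigma^2$) of the Gaussian observations (residuals).

                                          mean     sd 0.025quant 0.5quant 0.975quant   mode
Precision for the Gaussian observations 0.0438 0.0155     0.0199   0.0418     0.0798 0.0378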

Expected number of effective parameters(std dev): 2.00(0.00)
Number of equivalent replicates : 8.00

The number of equivalent replicates is the number of replicates (sample size) per effective parameter. In this case, there are 16 observations, so there are $16/2=8$ equivalent replicates. Low values (relative to the overall sample size) are indicative of a poor fit.

Out-of-sample or leave-one-out predictive performance

Arguably, the best way to assess the fit of a model is via an estimate of the model's predictive accuracy, the gold standard of which is out-of-sample estimation via cross-validation (in which each observed response is compared to a prediction derived from a model trained without the focal response value). However, as this approach requires repeated model fits, it is not overly practical for large complex models, MCMC simulations or very sparse data. Traditional alternatives to cross-validation include Akaike's Information Criterion (AIC) and, for Bayesian analyses, the Deviance Information Criterion (DIC).
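To make the cost of cross-validation concrete, the following base R sketch (an illustration, not part of the INLA workflow) re-creates the simulated data with the same recipe as above and computes leave-one-out prediction errors for the simple regression by refitting lm() once per observation. For ordinary least squares there happens to be an exact single-fit shortcut, $e_i/(1-h_{ii})$ (residual divided by one minus its leverage); that shortcut is specific to linear least squares and does not carry over to MCMC or INLA fits in general.

```r
# Sketch: leave-one-out cross-validation for the simple regression (base R only)
set.seed(1)
n <- 16
x <- 1:n
y <- round(40 - 1.5 * x + rnorm(n, mean = 0, sd = 5), 1)  # same recipe as above
dat <- data.frame(y, x)

# Brute force: n separate fits, each predicting the observation it left out
loo_refit <- sapply(seq_len(n), function(i) {
  fit_i <- lm(y ~ x, data = dat[-i, ])
  dat$y[i] - unname(predict(fit_i, newdata = dat[i, ]))
})

# Least-squares shortcut from a single fit: e_i / (1 - h_ii)
fit <- lm(y ~ x, data = dat)
loo_shortcut <- unname(residuals(fit) / (1 - hatvalues(fit)))

all.equal(loo_refit, loo_shortcut)   # TRUE
mean(loo_refit^2)                    # leave-one-out mean squared prediction error
```

The brute-force version needs $n$ model fits, which is what makes cross-validation impractical for expensive models; information criteria such as DIC and WAIC avoid the refits.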

Deviance Information Criterion (DIC) ...: 100.64
Effective number of parameters .........: 2.634

Watanabe-Akaike information criterion (WAIC) ...: 101.40
Effective number of parameters .................: 2.942

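The effective number of parameters (pD) is the posterior mean deviance minus the deviance measured at the posterior mean of the parameters, and the DIC is the deviance at the posterior mean plus $2p_D$ (equivalently, the posterior mean deviance plus $p_D$). Both the DIC and pD are similar to those reported by JAGS (which, as its output notes, estimates pD as var(deviance)/2).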

The Watanabe-Akaike Information Criterion, or Widely Applicable Information Criterion (WAIC), takes a more fully Bayesian approach than AIC (and DIC) to estimating the out-of-sample expectation, as it is based on the log pointwise posterior predictive density.
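The DIC and WAIC definitions can be made concrete with a base R sketch that computes both from posterior draws. As an assumption for illustration, the draws do not come from JAGS or INLA; they are drawn from the exact posterior of the Gaussian linear model under the standard reference prior $p(\alpha, \beta, \sigma^2) \propto 1/\sigma^2$, so the numbers should be close to, but not identical with, those reported above.

```r
# Sketch: DIC and WAIC from posterior draws of the Gaussian linear model.
# Assumption: exact posterior under the reference prior p(alpha, beta, sigma^2) ~ 1/sigma^2.
set.seed(1)
n <- 16
x <- 1:n
y <- round(40 - 1.5 * x + rnorm(n, mean = 0, sd = 5), 1)  # same recipe as above

fit <- lm(y ~ x)
X <- model.matrix(fit)
p <- ncol(X)
s2 <- sum(residuals(fit)^2) / (n - p)
XtX_inv <- solve(crossprod(X))

S <- 4000                                          # number of posterior draws
sigma2 <- (n - p) * s2 / rchisq(S, df = n - p)     # sigma^2 | y (scaled inverse chi-square)
beta <- t(sapply(sigma2, function(v)               # (alpha, beta) | sigma^2, y
  coef(fit) + drop(t(chol(v * XtX_inv)) %*% rnorm(p))))
sigma <- sqrt(sigma2)

# S x n matrix of pointwise log-likelihoods log p(y_i | theta_s)
mu <- beta %*% t(X)
loglik <- matrix(dnorm(matrix(y, S, n, byrow = TRUE), mu, sigma, log = TRUE),
                 nrow = S, ncol = n)

# DIC: pD = posterior mean deviance - deviance at the posterior means
dev <- -2 * rowSums(loglik)
D_bar <- mean(dev)
D_hat <- -2 * sum(dnorm(y, drop(X %*% colMeans(beta)), mean(sigma), log = TRUE))
pD <- D_bar - D_hat
DIC <- D_hat + 2 * pD
pV <- var(dev) / 2          # the var(deviance)/2 version reported by R2jags

# WAIC: log pointwise predictive density minus a variance-based penalty
lppd <- sum(log(colMeans(exp(loglik))))
p_waic <- sum(apply(loglik, 2, var))
WAIC <- -2 * (lppd - p_waic)

round(c(pD = pD, DIC = DIC, pV = pV, p_waic = p_waic, WAIC = WAIC), 2)
```

The deviance at the posterior mean depends on the parameterization (here the posterior mean of $\sigma$ rather than of $\tau$ is plugged in), which is one reason different software can report slightly different pD values for the same model.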

Two additional out-of-sample estimates (measures of fit) supported by INLA include:

- Conditional Predictive Ordinate (CPO) is the density of the observed value of $y_i$ within the out-of-sample ($y_{-i}$) posterior predictive distribution, that is, $\text{CPO}_i = p(y_i \mid y_{-i})$. A small CPO value associated with an observation suggests that this observation is unlikely (or surprising) in light of the model, priors and other data in the model. In addition, the sum of the CPO values (or alternatively, the negative of the mean natural logarithm of the CPO values) is a measure of fit.

In this case, most CPO values are of a similar magnitude. The exception is observation 14 (CPO of approximately 0.0018), which is roughly an order of magnitude smaller than the others and corresponds to the largest (negative) residual; with respect to the model, it is the one observed value that would be considered 'surprising'. Various assumptions are made behind INLA's computations. If any of these assumptions fail for an observation, it is flagged with a non-zero value in (in this case) cpo$failure. When this is the case, it is prudent to recalculate the CPO values after removing the observations associated with failure flags not equal to zero and re-fitting the model. This can be performed using the inla.cpo() function.

data.inla$cpo$cpo

[1] 0.047749222 0.074109301 0.041001671 0.018540996 0.076175384 0.046941565 0.073009041 0.062423313 [9] 0.069163109 0.072927701 0.021584385 0.073660042 0.057706132 0.001797082 0.033613593 0.071977093

sum(data.inla$cpo$cpo)

[1] 0.8423796

-mean(log(data.inla$cpo$cpo))

[1] 3.173897

In this case, there are no non-zero failure flags:

data.inla$cpo$failure

[1] 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

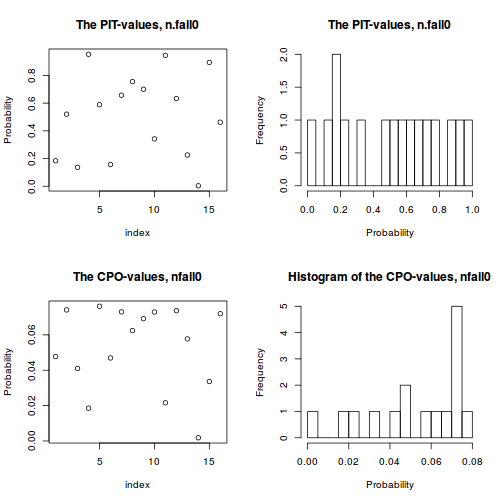

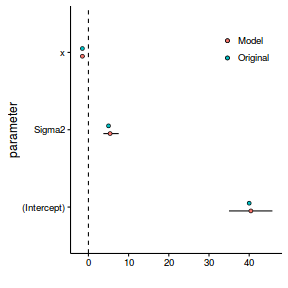

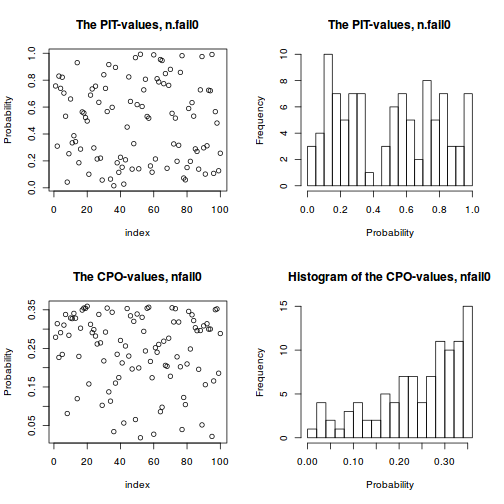

- The Probability Integral Transform (PIT) is the leave-one-out predictive probability of a new value being less than or equal to the observed one, $\text{PIT}_i = P(y_i^{new} \le y_i \mid y_{-i})$. Because it always lies between 0 and 1, it is easier than CPO to judge whether any value is extreme. If the model fits well, the PIT values should be approximately uniformly distributed, so a histogram of PIT values that does not look approximately uniform (flat) indicates a lack of model fit.

In this case the PIT values do not appear to deviate from a uniform distribution and thus do not indicate a lack of fit.

plot(data.inla, plot.fixed.effects=FALSE, plot.lincomb=FALSE, plot.random.effects=FALSE, plot.hyperparameters=FALSE, plot.predictor=FALSE, plot.q=FALSE, plot.cpo=TRUE, single=FALSE)
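The CPO and PIT definitions can be illustrated without INLA. The base R sketch below is a plug-in approximation, not what INLA computes: it assumes that each leave-one-out predictive distribution is normal, centred on the lm() prediction from a fit without observation $i$, with standard deviation $\sqrt{\text{se.fit}^2 + \hat\sigma^2}$. INLA integrates over the posterior instead, so the values will differ somewhat, but the same observation should stand out. The "one tenth of the median" threshold is a hypothetical rule of thumb for "an order of magnitude smaller".

```r
# Sketch: plug-in leave-one-out CPO and PIT for the simple regression (base R only).
# Assumption: normal leave-one-out predictive with sd = sqrt(se.fit^2 + sigma^2).
set.seed(1)
n <- 16
x <- 1:n
y <- round(40 - 1.5 * x + rnorm(n, mean = 0, sd = 5), 1)  # same recipe as above
dat <- data.frame(y, x)

loo <- t(sapply(seq_len(n), function(i) {
  fit_i <- lm(y ~ x, data = dat[-i, ])                   # fit without observation i
  pred <- predict(fit_i, newdata = dat[i, ], se.fit = TRUE)
  m <- unname(pred$fit)
  s <- unname(sqrt(pred$se.fit^2 + pred$residual.scale^2))  # predictive sd
  c(cpo = dnorm(dat$y[i], m, s),                         # density of the held-out value
    pit = pnorm(dat$y[i], m, s))                         # P(y_new <= y_i | y_-i)
}))

cpo <- loo[, "cpo"]
pit <- loo[, "pit"]
sum(cpo)                           # summed CPO (larger is better)
-mean(log(cpo))                    # negative mean log CPO (smaller is better)
which(cpo < median(cpo) / 10)      # hypothetical rule: 'an order of magnitude' smaller
hist(pit, breaks = seq(0, 1, by = 0.1), main = "Leave-one-out PIT", xlab = "PIT")
```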

The influence of priors

When fitting the INLA model above, we did not specify priors. Consequently, the default priors were employed. To review what these priors are, we can use the inla.show.hyperspec() function - which returns a list containing information about all the hyper-priors used in a model.

inla.show.hyperspec(data.inla)

List of 3

$ predictor:List of 1

$ hyper:List of 1

$ theta:List of 8

$ name : atomic [1:1] log precision

$ short.name: atomic [1:1] prec

$ initial : atomic [1:1] 11

$ fixed : atomic [1:1] TRUE

$ prior : atomic [1:1] loggamma

$ param : atomic [1:2] 1e+00 1e-05

$ family :List of 1

$ :List of 3

$ label: chr "gaussian"

$ hyper:List of 1

$ theta:List of 8

$ name : atomic [1:1] log precision

$ short.name: atomic [1:1] prec

$ initial : atomic [1:1] 4

$ fixed : atomic [1:1] FALSE

$ prior : atomic [1:1] loggamma

$ param : atomic [1:2] 1e+00 5e-05

$ link :List of 1

$ hyper: list()

$ fixed :List of 2

$ :List of 3

$ label : chr "(Intercept)"

$ prior.mean: num 0

$ prior.prec: num 0

$ :List of 3

$ label : chr "x"

$ prior.mean: num 0

$ prior.prec: num 0.001

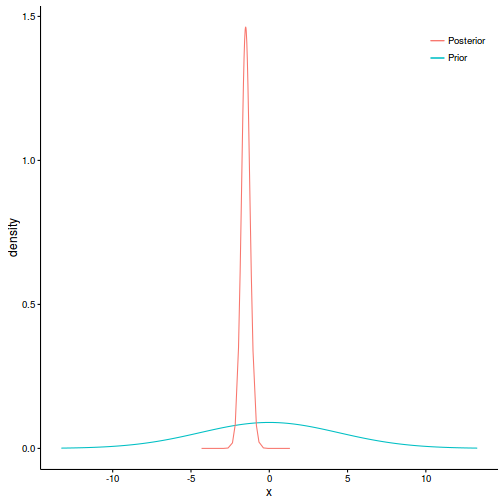

In order to derive weakly informative priors for variance components (priors that allow the data to dictate the parameter estimates whilst constraining the range of the marginal distribution within sensible bounds), we can consider the range over which the marginal distribution of the associated values (residuals or random effects) would be sensible. If the precision ($\tau$) has a gamma prior with shape $a$ and rate $b$, the marginal distribution of those values is a Student $t$ with $d=2a$ degrees of freedom and scale $\sqrt{b/a}$. Requiring a proportion $q$ of this marginal to fall within the range [-R,R] gives the equivalent gamma parameters: $$ \begin{align} a &= \frac{d}{2}\\ b &= \frac{R^2 d}{2\,(t^d_{1-(1-q)/2})^2} \end{align} $$ where $d$ is the degrees of freedom (for a Cauchy marginal, $d=1$) and $t^d_{1-(1-q)/2}$ is the $1-(1-q)/2$ quantile of a Student $t$ with $d$ degrees of freedom (the 0.975 quantile when $q=0.95$). Hence, if we considered that these values are likely to lie in the range [-10,10] ($R=10$, $q=0.95$ and $d=1$, for which $(t^1_{0.975})^2 = 12.706^2 = 161.447$), we could define a loggamma prior of: $$ \begin{align} a &= \frac{1}{2} = 0.5\\ b &= \frac{10^2}{2 \times 161.447} = 0.31\\ \tau &\sim{} \log\Gamma(0.5,0.31) \end{align} $$
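The calculation above can be wrapped in a small helper. The sketch below uses a hypothetical function name, gamma_from_range(), and base R only; it returns the gamma shape and rate and then checks, using the Student $t$ marginal described above, that the implied marginal places probability $q$ within $[-R, R]$.

```r
# Hypothetical helper: gamma(shape a, rate b) prior for a precision such that the
# implied Student t marginal (d = 2a df, scale sqrt(b/a)) puts probability q in [-R, R]
gamma_from_range <- function(R, d = 1, q = 0.95) {
  t_q <- qt(1 - (1 - q) / 2, df = d)   # e.g. the 0.975 quantile when q = 0.95
  c(a = d / 2, b = R^2 * d / (2 * t_q^2))
}

ab <- gamma_from_range(R = 10)
round(ab, 3)

# check: probability that the implied t marginal lies within [-10, 10]
t_scale <- sqrt(ab[["b"]] / ab[["a"]])
2 * pt(10 / t_scale, df = 2 * ab[["a"]]) - 1
```

The resulting values could then be supplied in the same way as the loggamma prior used later in this tutorial, for example control.family=list(hyper=list(prec=list(prior='loggamma', param=c(0.5, 0.31)))).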

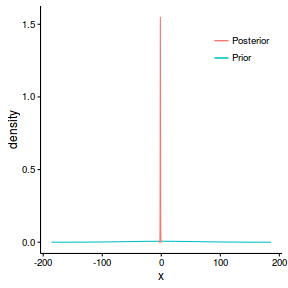

The prior for the intercept has a precision of 0 (an improper, flat prior), whereas the prior for the slope is a Normal distribution with a mean of 0 and a precision of 0.001. This implies that the prior for the slope has a standard deviation of approximately 31.6 (since $\sigma = \frac{1}{\sqrt{\tau}}$). As a general rule, three standard deviations either side of the mean envelop most (about 99.7%) of a normal distribution, and thus this prior defines a distribution whose density is almost entirely within the range [-95,95]. Whilst such a range might be appropriate for the intercept, it is arguably very wide for the slope given the range of the predictor variable.

In order to generate realistic informative Gaussian priors (for the purpose of constraining the posterior to a logical range) for fixed parameters, the following formulae are useful: $$ \begin{align} \mu &= \frac{z_2\theta_1 - z_1\theta_2}{z_2-z_1}\\ \sigma &= \frac{\theta_2 - \theta_1}{z_2-z_1} \end{align} $$ where $\theta_1$ and $\theta_2$ are the quantiles on the response scale and $z_1$ and $z_2$ are the corresponding quantiles on the standard normal scale. Hence, if we considered that the slope is likely to be in the range of [-10,10], we could take $\theta_1=0$ as the median ($z_1$ = qnorm(0.5,0,1) = 0) and $\theta_2=10$ as the 0.975 quantile ($z_2$ = qnorm(0.975,0,1) = 1.96), giving a Normal prior with a mean of $\mu=\frac{1.96 \times 0 - 0 \times 10}{1.96-0} = 0$ and a standard deviation of $\sigma=\frac{10 - 0}{1.96-0} = 5.102$. In INLA (which defines priors in terms of precision, $\tau = 1/\sigma^2$, rather than standard deviation), the associated prior would be $\beta \sim{} \mathcal{N}(0, \tau = 1/5.102^2 = 0.0384)$. Note that the precision of 0.196 used in the first re-fit below equals $1/\sigma$ rather than $1/\sigma^2$; it corresponds to a standard deviation of about 2.26 and is therefore a considerably tighter prior.
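The two formulae can be checked numerically. The base R sketch below uses a hypothetical helper, normal_from_quantiles(), which solves for the Normal mean and standard deviation whose $p_1$ and $p_2$ quantiles equal $\theta_1$ and $\theta_2$, and also reports the corresponding precision (the scale INLA's control.fixed works with).

```r
# Hypothetical helper: Normal prior whose p1 and p2 quantiles equal theta1 and theta2
normal_from_quantiles <- function(theta1, theta2, p1, p2) {
  z1 <- qnorm(p1)
  z2 <- qnorm(p2)
  mu <- (z2 * theta1 - z1 * theta2) / (z2 - z1)
  sigma <- (theta2 - theta1) / (z2 - z1)
  c(mean = mu, sd = sigma, prec = 1 / sigma^2)   # precision = 1 / sd^2
}

slope_prior <- normal_from_quantiles(theta1 = 0, theta2 = 10, p1 = 0.5, p2 = 0.975)
round(slope_prior, 4)

# sanity check: the central 95% of this prior should span [-10, 10]
qnorm(c(0.025, 0.975), mean = slope_prior[["mean"]], sd = slope_prior[["sd"]])
```

For the slope example this gives a mean of 0, a standard deviation of about 5.10 and a precision of about 0.038.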

Let's plot the prior and posterior for the slope.

#95% half-width of the default slope prior (precision = 0.001), i.e. 1.96 prior standard deviations
(SD<-(1/sqrt(0.001) * qnorm(0.975,0,1)))

[1] 61.9795

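prior <- data.frame(x=seq(-3*SD,3*SD,len=1000))
#SD is a 95% half-width, so divide by qnorm(0.975) to recover the prior standard deviation
prior$density <- dnorm(prior$x, 0, SD/qnorm(0.975,0,1))
post <- data.frame(data.inla$marginals.fixed[[2]])
head(post)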

          x            y
1 -4.190463 1.765936e-16
2 -3.659143 9.807886e-11
3 -3.127823 1.422271e-06
4 -2.862163 6.240289e-05
5 -2.596503 1.483329e-03
6 -2.330843 2.134545e-02

ggplot(prior, aes(y=density, x=x)) + geom_line(aes(color='Prior')) + geom_line(data=post, aes(y=y, x=x, color='Posterior')) + scale_color_discrete('')+ theme_classic()+theme(legend.position=c(1,1), legend.justification=c(1,1))

s <- inla.contrib.sd(data.inla, nsamples = 1000)
s$hyper

                                     mean        sd     2.5%    97.5%
sd for the Gaussian observations 4.991154 0.9202552 3.527553 7.121831

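Below, the model is re-fit with a Normal(0, precision = 0.196) prior on the slope, a Normal(0, precision = 0.001) prior on the intercept and a loggamma(0.1, 0.1) prior on the precision of the Gaussian observations.

data.inla <- inla(y~x, data=data,
   control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
   control.fixed=list(mean=0, prec=0.196, mean.intercept=0, prec.intercept=0.001),
   control.family=list(hyper=list(prec=list(prior='loggamma', param=c(0.1,0.1)))))
summary(data.inla)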

Call:

c("inla(formula = y ~ x, data = data, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE), control.family = list(hyper = list(prec = list(prior = \"loggamma\", ", " param = c(0.1, 0.1)))), control.fixed = list(mean = 0, prec = 0.196, ", " mean.intercept = 0, prec.intercept = 0.001))")

Time used:

Pre-processing Running inla Post-processing Total

0.0911 0.0326 0.0285 0.1523

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 40.2459 2.7140 34.8045 40.2675 45.5553 40.3057 0

x -1.4833 0.2804 -2.0322 -1.4855 -0.9215 -1.4894 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.0388 0.0146 0.0167 0.0368 0.073 0.0328

Expected number of effective parameters(std dev): 1.977(0.0069)

Number of equivalent replicates : 8.092

Deviance Information Criterion (DIC) ...: 100.79

Effective number of parameters .........: 2.636

Watanabe-Akaike information criterion (WAIC) ...: 101.14

Effective number of parameters .................: 2.604

Marginal log-Likelihood: -56.99

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

For comparison, the model is then re-fit with a wider slope prior (precision of 0.0196, a standard deviation of about 7.1).

data.inla <- inla(y~x, data=data,
   control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
   control.fixed=list(mean=0, prec=0.0196, mean.intercept=0, prec.intercept=0.001),
   control.family=list(hyper=list(prec=list(prior='loggamma', param=c(0.1,0.1)))))
summary(data.inla)

Call:

c("inla(formula = y ~ x, data = data, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE), control.family = list(hyper = list(prec = list(prior = \"loggamma\", ", " param = c(0.1, 0.1)))), control.fixed = list(mean = 0, prec = 0.0196, ", " mean.intercept = 0, prec.intercept = 0.001))")

Time used:

Pre-processing Running inla Post-processing Total

0.0923 0.0239 0.0286 0.1448

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 40.4222 2.7267 34.9757 40.4364 45.7778 40.4616 0

x -1.5041 0.2822 -2.0593 -1.5054 -0.9414 -1.5077 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.0388 0.0146 0.0167 0.0368 0.073 0.0328

Expected number of effective parameters(std dev): 1.991(0.0028)

Number of equivalent replicates : 8.036

Deviance Information Criterion (DIC) ...: 100.80

Effective number of parameters .........: 2.651

Watanabe-Akaike information criterion (WAIC) ...: 101.16

Effective number of parameters .................: 2.62

Marginal log-Likelihood: -57.94

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

s <- inla.contrib.sd(data.inla, nsamples = 1000)
s$hyper

                                     mean       sd     2.5%    97.5%
sd for the Gaussian observations 5.337874 1.088706 3.663546 7.863846

#95% half-width of the informative slope prior (precision = 0.196), i.e. 1.96 prior standard deviations
(SD<-(1/sqrt(0.196) * qnorm(0.975,0,1)))

[1] 4.427107

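prior <- data.frame(x=seq(-3*SD,3*SD,len=1000))
#SD is a 95% half-width, so divide by qnorm(0.975) to recover the prior standard deviation
prior$density <- dnorm(prior$x, 0, SD/qnorm(0.975,0,1))
post <- data.frame(data.inla$marginals.fixed[[2]])
head(post)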

          x            y
1 -4.325697 2.134404e-16
2 -3.761375 1.037706e-10
3 -3.197053 1.349437e-06
4 -2.914892 5.713188e-05
5 -2.632731 1.331189e-03
6 -2.350570 1.925008e-02

ggplot(prior, aes(y=density, x=x)) + geom_line(aes(color='Prior')) + geom_line(data=post, aes(y=y, x=x, color='Posterior')) + scale_color_discrete('')+ theme_classic()+theme(legend.position=c(1,1), legend.justification=c(1,1))

More detailed information

In addition to the PIT and CPO distributions, there are numerous other summary plots available, each of which summarizes the marginal distribution of the different types of model parameters (fixed, random, hyper-parameters etc) and predictions. These can be produced in one hit using the plot() function. However, I will produce them individually in order to assist discussion. Moreover, as a result of complexities arising from the need for the plot() function to work in Rstudio, INLA's plot() function and knitr are slightly incompatible at the moment...

Additionally, the fitted INLA object (a list) contains a large number of items, each of which capture different aspects of the approximated parameters.

names(data.inla)

 [1] "names.fixed"                 "summary.fixed"               "marginals.fixed"
 [4] "summary.lincomb"             "marginals.lincomb"           "size.lincomb"
 [7] "summary.lincomb.derived"     "marginals.lincomb.derived"   "size.lincomb.derived"
[10] "mlik"                        "cpo"                         "po"
[13] "waic"                        "model.random"                "summary.random"
[16] "marginals.random"            "size.random"                 "summary.linear.predictor"
[19] "marginals.linear.predictor"  "summary.fitted.values"       "marginals.fitted.values"
[22] "size.linear.predictor"       "summary.hyperpar"            "marginals.hyperpar"
[25] "internal.summary.hyperpar"   "internal.marginals.hyperpar" "offset.linear.predictor"
[28] "model.spde2.blc"             "summary.spde2.blc"           "marginals.spde2.blc"
[31] "size.spde2.blc"              "model.spde3.blc"             "summary.spde3.blc"
[34] "marginals.spde3.blc"         "size.spde3.blc"              "logfile"
[37] "misc"                        "dic"                         "mode"
[40] "neffp"                       "joint.hyper"                 "nhyper"
[43] "version"                     "Q"                           "graph"
[46] "ok"                          "cpu.used"                    "all.hyper"
[49] ".args"                       "call"                        "model.matrix"

This is obviously a very large list. In order to make more sense of all that is available, we can group the returned INLA list into functional categories:

- parameter estimates

| INLA object | Description |
|---|---|
| names.fixed | the names of fixed effects parameters |
| summary.fixed | summaries (mean, standard deviation and quantiles) of fixed posteriors (on link scale) |
| marginals.fixed | list of posterior marginal densities of the fixed effects |
| size.random | number of levels of each of the structured random covariates |
| summary.random | summaries (mean, standard deviation and quantiles) of the structured random effects (smoothers etc) |
| marginals.random | list of posterior marginal densities of the random effects |
| summary.hyperpar | summaries (mean, standard deviation and quantiles) of the model hyper-parameters (precision, overdispersion, correlation etc) |
| marginals.hyperpar | list of posterior marginal densities of the model hyper-parameters |

- predictions and contrasts

| INLA object | Description |
|---|---|
| model.matrix | model matrix of the fixed effects - a row for each row of the input data |
| summary.linear.predictor | summaries (mean, standard deviation and quantiles) of the linear predictor - a prediction (on the link scale) associated with each row of the input data |
| marginals.linear.predictor | list of posterior marginal densities of the linear predictor |
| summary.fitted.values | summaries (mean, standard deviation and quantiles) of the fitted values - a prediction (on the response scale) associated with each row of the input data |
| marginals.fitted.values | list of posterior marginal densities of the fitted values |
| summary.lincomb | summaries (mean, standard deviation and quantiles) of the defined linear combinations (on the link scale) |
| marginals.lincomb | list of posterior marginal densities of the defined linear combinations |
| summary.lincomb.derived | summaries (mean, standard deviation and quantiles) of the defined linear combinations (on the response scale) |
| marginals.lincomb.derived | list of posterior marginal densities of the defined linear combinations (response scale) |

- model performance measures

| INLA object | Description |
|---|---|
| mlik | log marginal likelihood of the model (when mlik=TRUE) |
| dic | deviance information criterion of the model (when dic=TRUE) |
| waic | Watanabe-Akaike (Widely Applicable) information criterion of the model (when waic=TRUE) |
| neffp | expected effective number of parameters (and standard deviation) and number of equivalent replicates per parameter in the model |
| cpo | conditional predictive ordinate (cpo), probability integral transform (pit) and vector of assumption failures (failure) of the model (when cpo=TRUE) |

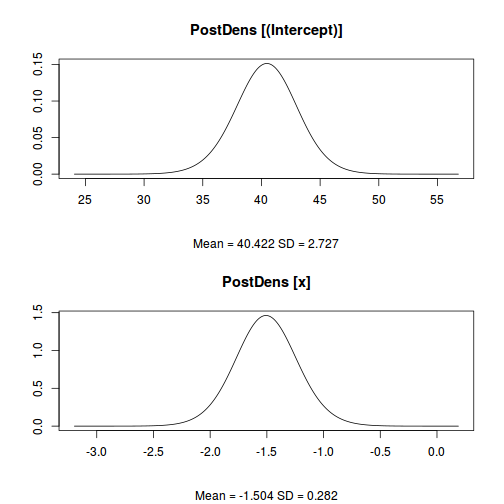

Fixed effects

plot(data.inla, plot.fixed.effects = TRUE, plot.lincomb = FALSE, plot.random.effects = FALSE, plot.hyperparameters = FALSE, plot.predictor = FALSE, plot.q = FALSE, plot.cpo = FALSE, single = FALSE)

data.inla$summary.fixed

                 mean        sd 0.025quant  0.5quant  0.975quant      mode          kld
(Intercept) 40.422174 2.7267362   34.97573 40.436435  45.7778033 40.461613 3.011495e-11
x           -1.504087 0.2821624   -2.05931 -1.505424  -0.9413974 -1.507741 2.972068e-11

data.inla$marginals.fixed

$`(Intercept)`

x y

[1,] 13.15496 4.410151e-17

[2,] 18.60840 1.905779e-11

[3,] 24.06185 2.152067e-07

[4,] 26.78857 8.435060e-06

[5,] 29.51529 1.784278e-04

[6,] 32.24201 2.276125e-03

[7,] 33.83266 8.280310e-03

[8,] 35.92316 3.499961e-02

[9,] 36.96775 6.200022e-02

[10,] 37.65244 8.427154e-02

[11,] 38.19117 1.028661e-01

[12,] 38.64056 1.178672e-01

[13,] 39.04390 1.299800e-01

[14,] 39.41565 1.392874e-01

[15,] 39.76649 1.459042e-01

[16,] 40.10455 1.499193e-01

[17,] 40.27099 1.509687e-01

[18,] 40.43643 1.513822e-01

[19,] 40.60209 1.511608e-01

[20,] 40.76790 1.503044e-01

[21,] 41.10458 1.466640e-01

[22,] 41.45260 1.404086e-01

[23,] 41.81934 1.314582e-01

[24,] 42.21872 1.195765e-01

[25,] 42.66654 1.045571e-01

[26,] 43.18698 8.627934e-02

[27,] 43.85476 6.385703e-02

[28,] 44.86945 3.628873e-02

[29,] 46.88034 8.629793e-03

[30,] 48.60235 1.975121e-03

[31,] 51.32907 1.352744e-04

[32,] 54.05579 5.756324e-06

[33,] 56.78251 1.349249e-07

[34,] 62.23596 1.021137e-11

[35,] 67.68940 2.068547e-17

$x

x y

[1,] -4.32569687 2.134404e-16

[2,] -3.76137496 1.037706e-10

[3,] -3.19705306 1.349437e-06

[4,] -2.91489210 5.713188e-05

[5,] -2.63273115 1.331189e-03

[6,] -2.35057020 1.925008e-02

[7,] -2.17334332 8.304301e-02

[8,] -1.96491059 3.492400e-01

[9,] -1.85981324 6.148759e-01

[10,] -1.79056620 8.315525e-01

[11,] -1.73580056 1.011021e+00

[12,] -1.69001537 1.154394e+00

[13,] -1.64879258 1.269090e+00

[14,] -1.61067721 1.356196e+00

[15,] -1.57459777 1.416978e+00

[16,] -1.53973520 1.452399e+00

[17,] -1.52252620 1.460799e+00

[18,] -1.50542406 1.463031e+00

[19,] -1.48827869 1.459127e+00

[20,] -1.47107359 1.449088e+00

[21,] -1.43609861 1.410468e+00

[22,] -1.39985194 1.346777e+00

[23,] -1.36155020 1.257371e+00

[24,] -1.31985206 1.140529e+00

[25,] -1.27276242 9.936500e-01

[26,] -1.21787798 8.165285e-01

[27,] -1.14702386 6.008029e-01

[28,] -1.03864956 3.384795e-01

[29,] -0.82220815 7.976568e-02

[30,] -0.65760448 2.185504e-02

[31,] -0.37544353 1.705125e-03

[32,] -0.09328257 8.038838e-05

[33,] 0.18887838 2.048111e-06

[34,] 0.75320028 1.812337e-10

[35,] 1.31752219 4.198486e-16

library(dplyr)
#stack the list of marginal density matrices into one data frame (columns L1, Var1, x, y)
data.inla.fixed <- reshape2::melt(data.inla$marginals.fixed) %>%
   reshape2::dcast(L1+Var1~Var2, value.var='value')
ggplot(data.inla.fixed, aes(y=y, x=x)) + geom_line() +
   facet_wrap(~L1, scales='free', nrow=1) + theme_classic()

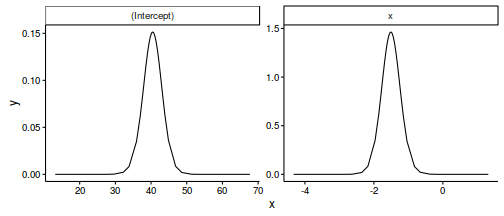

Predictor

plot(data.inla, plot.fixed.effects=FALSE, plot.lincomb=FALSE, plot.random.effects=FALSE, plot.hyperparameters=FALSE, plot.predictor=TRUE, plot.q=FALSE, plot.cpo=FALSE, single=FALSE)

data.inla$summary.linear.predictor

                 mean       sd 0.025quant 0.5quant 0.975quant     mode          kld
predictor.01 38.91874 2.483244   33.96448 38.93146   43.79792 38.95390 1.007436e-07
predictor.02 37.41459 2.247989   32.92995 37.42602   41.83181 37.44617 1.014385e-07
predictor.03 35.91045 2.024777   31.87159 35.92057   39.88955 35.93843 1.027624e-07
predictor.04 34.40632 1.818051   30.78060 34.41514   37.97993 34.43071 1.051053e-07
predictor.05 32.90219 1.634077   29.64458 32.90971   36.11533 32.92298 1.090693e-07
predictor.06 31.39807 1.481359   28.44673 31.40428   34.31258 31.41524 1.154613e-07
predictor.07 29.89397 1.370386   27.16634 29.89887   32.59239 29.90751 1.250763e-07
predictor.08 28.38989 1.311798   25.78241 28.39345   30.97613 28.39978 1.380414e-07
predictor.09 26.88582 1.312630   24.28112 26.88804   29.47847 26.89203 1.530650e-07
predictor.10 25.38177 1.372775   22.66255 25.38262   28.09812 25.38426 1.678379e-07
predictor.11 23.87775 1.485046   20.93976 23.87723   26.82085 23.87651 1.806989e-07
predictor.12 22.37373 1.638769   19.13512 22.37184   25.62556 22.36874 1.923988e-07
predictor.13 20.86972 1.823489   17.26929 20.86646   24.49158 20.86098 2.011791e-07
predictor.14 19.36572 2.030767   15.35897 19.36108   23.40225 19.35322 2.077685e-07
predictor.15 17.86174 2.254388   13.41636 17.85572   22.34529 17.84548 2.126772e-07
predictor.16 16.35775 2.489953   11.45012 16.35035   21.31196 16.33773 2.163935e-07

data.inla$summary.fitted.values

                        mean       sd 0.025quant 0.5quant 0.975quant     mode
fitted.predictor.01 38.91874 2.483244   33.96448 38.93146   43.79792 38.95390
fitted.predictor.02 37.41459 2.247989   32.92995 37.42602   41.83181 37.44617
fitted.predictor.03 35.91045 2.024777   31.87159 35.92057   39.88955 35.93843
fitted.predictor.04 34.40632 1.818051   30.78060 34.41514   37.97993 34.43071
fitted.predictor.05 32.90219 1.634077   29.64458 32.90971   36.11533 32.92298
fitted.predictor.06 31.39807 1.481359   28.44673 31.40428   34.31258 31.41524
fitted.predictor.07 29.89397 1.370386   27.16634 29.89887   32.59239 29.90751
fitted.predictor.08 28.38989 1.311798   25.78241 28.39345   30.97613 28.39978
fitted.predictor.09 26.88582 1.312630   24.28112 26.88804   29.47847 26.89203
fitted.predictor.10 25.38177 1.372775   22.66255 25.38262   28.09812 25.38426
fitted.predictor.11 23.87775 1.485046   20.93976 23.87723   26.82085 23.87651
fitted.predictor.12 22.37373 1.638769   19.13512 22.37184   25.62556 22.36874
fitted.predictor.13 20.86972 1.823489   17.26929 20.86646   24.49158 20.86098
fitted.predictor.14 19.36572 2.030767   15.35897 19.36108   23.40225 19.35322
fitted.predictor.15 17.86174 2.254388   13.41636 17.85572   22.34529 17.84548
fitted.predictor.16 16.35775 2.489953   11.45012 16.35035   21.31196 16.33773

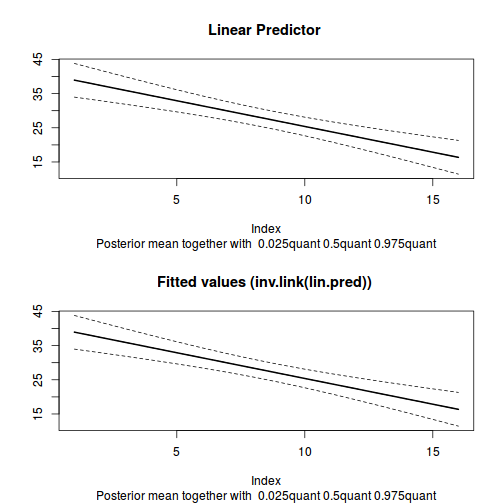

Hyper-parameters

plot(data.inla, plot.fixed.effects=FALSE, plot.lincomb=FALSE, plot.random.effects=FALSE, plot.hyperparameters=TRUE, plot.predictor=FALSE, plot.q=FALSE, plot.cpo=FALSE, single=FALSE)

data.inla$summary.hyperpar

                                              mean         sd 0.025quant   0.5quant 0.975quant       mode
Precision for the Gaussian observations 0.03882442 0.01458731 0.01670641 0.03679713 0.07303003 0.03278234

s <- inla.contrib.sd(data.inla, nsamples = 1000)
s$hyper

                                     mean        sd     2.5%    97.5%
sd for the Gaussian observations 5.393922 0.9865159 3.717143 7.535196

The posterior mean standard deviation of the Gaussian observations (5.394) is similar to the JAGS estimate of sigma (5.383).
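inla.contrib.sd() obtains the standard deviation by sampling from the posterior of the precision. The base R sketch below, which uses values copied from the summary.hyperpar output above, shows why a sampling (or integration) step is needed: because $\sigma = 1/\sqrt{\tau}$ is a monotone transformation, quantiles of the precision map directly onto quantiles of the standard deviation (with the ends swapped), but the mean does not. For transforming a full marginal, INLA also provides functions such as inla.tmarginal() and inla.emarginal().

```r
# Posterior summaries of the Gaussian precision, copied from data.inla$summary.hyperpar above
prec_q <- c(lower = 0.01670641, median = 0.03679713, upper = 0.07303003)
prec_mean <- 0.03882442

# sd = 1/sqrt(precision) is monotone decreasing, so quantiles carry over (ends swapped)
sd_q <- c(lower  = 1 / sqrt(prec_q[["upper"]]),
          median = 1 / sqrt(prec_q[["median"]]),
          upper  = 1 / sqrt(prec_q[["lower"]]))
round(sd_q, 3)

# the mean does not carry over: 1/sqrt(E[precision]) is not E[1/sqrt(precision)]
sd_from_mean <- 1 / sqrt(prec_mean)
round(sd_from_mean, 3)
```

The transformed 95% limits (about 3.70 and 7.74) are comparable to the sampled ones, whereas $1/\sqrt{E[\tau]}$ (about 5.08) understates the sampled posterior mean of $\sigma$.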

Predictions

There are two main ways to generate predictions in INLA:

- append additional data cases with missing (NA) responses to the model input data. Missing responses do not contribute to the likelihood, yet are imputed (estimated/predicted given the model trends). In order to illustrate producing a summary plot, I will predict the response for a sequence of predictor values.

newdata <- data.frame(x=seq(min(data$x, na.rm=TRUE), max(data$x, na.rm=TRUE), len=100), y=NA)
data.pred <- rbind(data, newdata)
#fit the model
data.inla1 <- inla(y~x, data=data.pred,
   control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
   control.predictor=list(compute=TRUE))
#examine the regular summary - note there are no changes to the first fit
summary(data.inla1)

Call:
c("inla(formula = y ~ x, data = data.pred, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE), control.predictor = list(compute = TRUE))" )

Time used:
Pre-processing Running inla Post-processing Total
0.0663 0.0489 0.0239 0.1391

Fixed effects:
mean sd 0.025quant 0.5quant 0.975quant mode kld
(Intercept) 40.7441 2.5688 35.6573 40.7440 45.8219 40.7442 0
x -1.5339 0.2657 -2.0599 -1.5339 -1.0087 -1.5339 0

The model has no random effects

Model hyperparameters:
mean sd 0.025quant 0.5quant 0.975quant mode
Precision for the Gaussian observations 0.0438 0.0155 0.0199 0.0418 0.0798 0.0378

Expected number of effective parameters(std dev): 2.00(0.00)
Number of equivalent replicates : 8.00

Deviance Information Criterion (DIC) ...: 100.64
Effective number of parameters .........: 2.634

Watanabe-Akaike information criterion (WAIC) ...: 101.40
Effective number of parameters .................: 2.942

Marginal log-Likelihood: -64.55
CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

#extract the predictor values associated with the appended data
newdata <- cbind(newdata, data.inla1$summary.linear.predictor[(nrow(data)+1):nrow(data.pred),])
head(newdata)

                     x  y     mean       sd 0.025quant 0.5quant 0.975quant     mode          kld
predictor.017 1.000000 NA 39.21085 2.339342   34.58500 39.21057   43.83805 39.21031 1.031930e-07
predictor.018 1.151515 NA 38.97844 2.305138   34.42022 38.97816   43.53798 38.97790 1.031943e-07
predictor.019 1.303030 NA 38.74603 2.271133   34.25505 38.74575   43.23831 38.74550 1.031957e-07
predictor.020 1.454545 NA 38.51362 2.237335   34.08947 38.51334   42.93905 38.51309 1.031972e-07
predictor.021 1.606061 NA 38.28120 2.203755   33.92346 38.28094   42.64021 38.28069 1.031987e-07
predictor.022 1.757576 NA 38.04879 2.170402   33.75700 38.04853   42.34183 38.04828 1.032003e-07

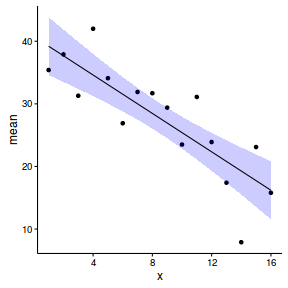

newdata <- reshape::rename(newdata, c("0.025quant"="lower", "0.975quant"="upper"))
ggplot(newdata, aes(y=mean, x=x)) +
   geom_point(data=data, aes(y=y)) +
   geom_ribbon(aes(ymin=lower, ymax=upper), fill='blue', alpha=0.2) +
   geom_line() + theme_classic()

- define linear combinations (contrasts) for the predictions. Note that the inla.make.lincombs() function seems a little fragile.

It is vital that it be passed a data.frame. Furthermore, the model matrix needs to be converted to a data frame using the as.data.frame() function rather than the data.frame() function, as the latter alters the column names (for example, "(Intercept)" becomes "X.Intercept.") and causes problems within INLA.
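The column-name change can be reproduced in base R alone, without INLA. This is a minimal sketch using a tiny hypothetical prediction grid (nd); it only shows what each conversion does to the "(Intercept)" column name, which is presumably what stops INLA from matching the columns to the model's fixed effects.

```r
# A tiny hypothetical prediction grid (any values would do)
nd <- data.frame(x = c(0, 8, 16))
Xmat <- model.matrix(~x, data = nd)

# data.frame() applies check.names = TRUE, so "(Intercept)" is mangled
names(data.frame(Xmat))
# [1] "X.Intercept." "x"

# as.data.frame() keeps the model-matrix column names as they are
names(as.data.frame(Xmat))
# [1] "(Intercept)" "x"
```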

#prediction grid spanning the observed range of x
newdata <- data.frame(x=seq(min(data$x, na.rm=TRUE), max(data$x, na.rm=TRUE), len=100))
Xmat <- model.matrix(~x, data=newdata)
#one linear combination per row of the model matrix
lincomb <- inla.make.lincombs(as.data.frame(Xmat))
#fit the model
data.inla1 <- inla(y~x,
  lincomb=lincomb, #include the linear combinations
  data=data,
  control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
  control.inla=list(lincomb.derived.only=FALSE))
#examine the regular summary - note there are no changes to the first fit
#summary(data.inla1)
head(data.inla1$summary.lincomb)

ID mean sd 0.025quant 0.5quant 0.975quant mode kld

lc001 1 39.20315 2.359954 34.52673 39.20440 43.86357 39.20635 1.371747e-11

lc002 2 38.97090 2.325452 34.36286 38.97213 43.56320 38.97404 1.372809e-11

lc003 3 38.73864 2.291151 34.19858 38.73985 43.26322 38.74173 1.370907e-11

lc004 4 38.50639 2.257060 34.03389 38.50758 42.96365 38.50942 1.371347e-11

lc005 5 38.27414 2.223188 33.86877 38.27530 42.66452 38.27711 1.373200e-11

lc006 6 38.04188 2.189545 33.70319 38.04303 42.36584 38.04480 1.369481e-11

newdata <- cbind(newdata, data.inla1$summary.lincomb)
newdata <- reshape:::rename(newdata, c("0.025quant"="lower", "0.975quant"="upper"))
ggplot(newdata, aes(y=mean, x=x)) +
  geom_point(data=data, aes(y=y)) +
  geom_ribbon(aes(ymin=lower, ymax=upper), fill='blue', alpha=0.2) +
  geom_line() +
  theme_classic()

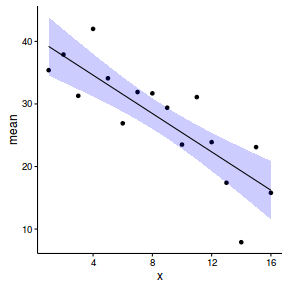

One very good way to evaluate the fit of a model is to assess how well it estimates known parameters. This example generated simulated data from a known set of parameters, so we can compare the model parameter estimates to these truths.

#true values used to simulate the data
orig <- data.frame(parameter=factor(c('(Intercept)','x','Sigma2')), value=c(40,-1.5,5))
#posterior summaries of the fixed effects
mod <- subset(data.inla$summary.fixed, select=c('mean','sd','0.025quant','0.975quant'))
mod <- reshape::rename(mod,c("0.025quant"="lower", "0.975quant"="upper"))
#append the summary of the residual hyperparameter stored in s$hyper
colnames(s$hyper) <- c('mean','sd','lower','upper')
mod <- rbind(mod, s$hyper)
mod$parameter <- factor(c('(Intercept)','x','Sigma2'))
ggplot(mod, aes(x=parameter, y=mean)) +
  geom_hline(yintercept=0, linetype='dashed') +
  geom_blank() +
  geom_linerange(aes(x=as.numeric(parameter)-0.05, ymin=lower, ymax=upper)) +
  geom_point(data=orig, aes(y=value, x=as.numeric(factor(parameter))+0.05, fill='Original'), shape=21) +
  geom_point(aes(x=as.numeric(parameter)-0.05, fill='Model'), shape=21) +
  coord_flip() +
  scale_fill_discrete('') +
  scale_y_continuous('') +
  theme_classic() +
  theme(legend.position=c(1,1), legend.justification=c(1,1))

Hence, it appears that the estimated (modelled) parameters are entirely consistent with the original parameters from which the data were simulated.
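The same comparison can be made numerically by asking whether each true value falls inside its 95% credible interval. This is a sketch that simply copies the limits printed in the INLA summary of the simple regression above; the data frame name est is illustrative. Because INLA reports the residual hyperparameter as a precision, the sketch converts its quantiles to the standard deviation scale (quantiles, unlike means, can be pushed through a monotone transformation).

```r
# Posterior 95% limits copied from the INLA summary of the simple regression
est <- data.frame(parameter = c("(Intercept)", "x"),
                  lower = c(35.6573, -2.0599),
                  upper = c(45.8219, -1.0087),
                  truth = c(40, -1.5))

# The residual hyperparameter is a precision, tau = 1/sigma^2.
# sigma = 1/sqrt(tau) is decreasing, so sort() restores lower < upper.
prec.limits  <- c(0.0199, 0.0798)
sigma.limits <- sort(1 / sqrt(prec.limits))

est <- rbind(est, data.frame(parameter = "sigma",
                             lower = sigma.limits[1], upper = sigma.limits[2],
                             truth = 5))
# Is each true value inside its 95% credible interval?
est$covered <- est$truth >= est$lower & est$truth <= est$upper
est
```

Only the interval limits are transformed here; the posterior mean of the precision cannot be converted to a posterior mean of sigma in the same way.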

Multiple linear regression

In the previous example, I displayed many of the basic components of the fitted INLA model. In the remaining examples, we will build upon some of these and ignore others completely - depending on what is required...

Let's say we had set up a natural experiment in which we measured a response ($y$) from each of 100 sampling units ($n=100$) across a landscape. At the same time, we also measured two other continuous covariates ($x1$ and $x2$) from each of the sampling units. As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. Consequently, I have folded (toggled) this section away.

set.seed(3)
n = 100
intercept = 5 #not used below
temp = runif(n)
nitro = runif(n) + 0.8*temp
int.eff=2
temp.eff <- 0.85
nitro.eff <- 0.5
res = rnorm(n, 0, 1)
#coefficients for the intercept, temp, nitro and the temp:nitro interaction
coef <- c(int.eff, temp.eff, nitro.eff,int.eff)
mm <- model.matrix(~temp*nitro)
y <- t(coef %*% t(mm)) + res
#cx1 and cx2 are the centred versions of the covariates
data <- data.frame(y,x1=temp, x2=nitro, cx1=scale(temp,scale=F), cx2=scale(nitro,scale=F))

$$ y_i = \alpha + \beta_1 x_{1i} + \beta_2 x_{2i} + \varepsilon_i \hspace{4em}\varepsilon_i\sim{}\mathcal{N}(0, \sigma^2) $$
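The section also fits a multiplicative (interaction) model. Written in the same notation, and using $\beta_3$ for the interaction coefficient (matching beta3 in the JAGS model string), it extends the additive model by one product term:

$$ y_i = \alpha + \beta_1 x_{1i} + \beta_2 x_{2i} + \beta_3 x_{1i}x_{2i} + \varepsilon_i \hspace{4em}\varepsilon_i\sim{}\mathcal{N}(0, \sigma^2) $$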

#additive model
summary(lm(y~cx1+cx2, data=data))

Call:

lm(formula = y ~ cx1 + cx2, data = data)

Residuals:

Min 1Q Median 3Q Max

-2.45927 -0.78738 -0.00688 0.71051 2.88492

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.8234 0.1131 33.793 < 2e-16 ***

cx1 3.0250 0.4986 6.067 2.52e-08 ***

cx2 1.3878 0.4281 3.242 0.00163 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.131 on 97 degrees of freedom

Multiple R-squared: 0.5361, Adjusted R-squared: 0.5265

F-statistic: 56.05 on 2 and 97 DF, p-value: < 2.2e-16

confint(lm(y~cx1+cx2, data=data))

2.5 % 97.5 %

(Intercept) 3.5988464 4.047962

cx1 2.0353869 4.014675

cx2 0.5381745 2.237488

#multiplicative model
summary(lm(y~cx1*cx2, data=data))

Call:

lm(formula = y ~ cx1 * cx2, data = data)

Residuals:

Min 1Q Median 3Q Max

-2.34877 -0.85435 0.06905 0.71265 2.57068

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 3.6710 0.1315 27.924 < 2e-16 ***

cx1 2.9292 0.4914 5.961 4.15e-08 ***

cx2 1.3445 0.4207 3.196 0.00189 **

cx1:cx2 2.6651 1.2305 2.166 0.03281 *

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.111 on 96 degrees of freedom

Multiple R-squared: 0.5577, Adjusted R-squared: 0.5439

F-statistic: 40.35 on 3 and 96 DF, p-value: < 2.2e-16

confint(lm(y~cx1*cx2, data=data))

2.5 % 97.5 %

(Intercept) 3.4100621 3.931972

cx1 1.9537887 3.904642

cx2 0.5094571 2.179451

cx1:cx2 0.2224680 5.107650

#additive model
modelString="
model {
  #Likelihood
  for (i in 1:n) {
    y[i]~dnorm(mu[i],tau)
    mu[i] <- beta0+beta1*x1[i]+beta2*x2[i]
  }
  #Priors
  beta0 ~ dnorm(0.01,1.0E-6)
  beta1 ~ dnorm(0,1.0E-6)
  beta2 ~ dnorm(0,1.0E-6)
  tau <- 1 / (sigma * sigma)
  sigma~dunif(0,100)
}
"
data.list <- with(data, list(y=y, x1=cx1, x2=cx2, n=nrow(data)))
data.jags <- jags(data=data.list, inits=NULL,
  parameters.to.save=c('beta0','beta1', 'beta2', 'sigma'), #the intercept node is beta0 (there is no alpha node)
  model.file=textConnection(modelString),
  n.chains=3, n.iter=100000, n.burnin=20000, n.thin=100)

Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph Size: 612

Initializing model

print(data.jags)

Inference for Bugs model at "6", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 100

n.sims = 2400 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta1 3.022 0.504 2.013 2.684 3.006 3.360 4.008 1.001 2400

beta2 1.388 0.435 0.498 1.113 1.403 1.689 2.199 1.001 2400

sigma 1.149 0.082 1.002 1.091 1.144 1.202 1.325 1.000 2400

deviance 309.506 2.931 305.926 307.384 308.842 310.830 317.254 1.001 2400

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 4.3 and DIC = 313.8

DIC is an estimate of expected predictive error (lower deviance is better).

#multiplicative model
modelString="
model {
  #Likelihood
  for (i in 1:n) {
    y[i]~dnorm(mu[i],tau)
    mu[i] <- beta0+beta1*x1[i]+beta2*x2[i]+beta3*x1[i]*x2[i]
  }
  #Priors
  beta0 ~ dnorm(0.01,1.0E-6)
  beta1 ~ dnorm(0,1.0E-6)
  beta2 ~ dnorm(0,1.0E-6)
  beta3 ~ dnorm(0,1.0E-6)
  tau <- 1 / (sigma * sigma)
  sigma~dunif(0,100)
}
"
data.list <- with(data, list(y=y, x1=cx1, x2=cx2, n=nrow(data)))
data.jags <- jags(data=data.list, inits=NULL,
  parameters.to.save=c('beta0','beta1', 'beta2', 'beta3', 'sigma'), #monitor the intercept (beta0) and interaction (beta3) too
  model.file=textConnection(modelString),
  n.chains=3, n.iter=100000, n.burnin=20000, n.thin=100)

Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph Size: 713

Initializing model

print(data.jags)

Inference for Bugs model at "7", fit using jags,

3 chains, each with 1e+05 iterations (first 20000 discarded), n.thin = 100

n.sims = 2400 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

beta1 2.949 0.504 1.971 2.608 2.951 3.284 3.939 1.001 2300

beta2 1.333 0.425 0.484 1.041 1.333 1.618 2.166 1.001 2400

sigma 1.127 0.084 0.977 1.070 1.121 1.181 1.313 1.001 2400

deviance 305.835 3.304 301.533 303.456 305.062 307.486 313.832 1.001 2400

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 5.5 and DIC = 311.3

DIC is an estimate of expected predictive error (lower deviance is better).

#additive model
data.inla.a <- inla(y~cx1+cx2, data=data, control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE))
#multiplicative model
data.inla.m <- inla(y~cx1*cx2, data=data, control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE))

We will first explore the INLA summaries for the two models, paying particular attention to the KLD values, the expected number of effective parameters and the number of equivalent replicates.

summary(data.inla.a)

Call:

c("inla(formula = y ~ cx1 + cx2, data = data, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE))")

Time used:

Pre-processing Running inla Post-processing Total

0.0643 0.0534 0.0237 0.1415

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 3.8234 0.1125 3.6022 3.8234 4.0443 3.8234 0

cx1 3.0245 0.4957 2.0500 3.0245 3.9980 3.0245 0

cx2 1.3880 0.4256 0.5513 1.3880 2.2238 1.3880 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.7968 0.1133 0.5935 0.7905 1.037 0.7797

Expected number of effective parameters(std dev): 3.001(2e-04)

Number of equivalent replicates : 33.32

Deviance Information Criterion (DIC) ...: 312.74

Effective number of parameters .........: 3.647

Watanabe-Akaike information criterion (WAIC) ...: 312.77

Effective number of parameters .................: 3.546

Marginal log-Likelihood: -173.86

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

summary(data.inla.m)

Call:

c("inla(formula = y ~ cx1 * cx2, data = data, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE))")

Time used:

Pre-processing Running inla Post-processing Total

0.0658 0.0525 0.0239 0.1423

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 3.6712 0.1307 3.4143 3.6712 3.9279 3.6712 0

cx1 2.9288 0.4884 1.9685 2.9288 3.8882 2.9288 0

cx2 1.3446 0.4181 0.5226 1.3446 2.1659 1.3446 0

cx1:cx2 2.6613 1.2224 0.2579 2.6613 5.0621 2.6613 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.8273 0.1182 0.6153 0.8208 1.078 0.8094

Expected number of effective parameters(std dev): 3.999(4e-04)

Number of equivalent replicates : 25.00

Deviance Information Criterion (DIC) ...: 309.98

Effective number of parameters .........: 4.648

Watanabe-Akaike information criterion (WAIC) ...: 309.77

Effective number of parameters .................: 4.227

Marginal log-Likelihood: -174.76

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

KLD values for all parameters in both models are 0, suggesting that the Simplified Laplace Approximation is appropriate. The expected number of effective parameters is approximately 3 for the additive model and approximately 4 for the multiplicative model (which estimates an extra coefficient for the interaction). Not surprisingly, the additive model therefore has more equivalent replicates per parameter (33.32) than the multiplicative model (25.00). In either case, there appear to be ample replicates per parameter to ensure reliable parameter estimation.
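The reported numbers of equivalent replicates are consistent with simply dividing the number of observations by the expected number of effective parameters. The sketch below checks this against the values printed in the summaries of this tutorial; treating the statistic as n / p.eff is an inference from those numbers rather than a quote from the INLA documentation.

```r
# Values reported by summary() for the INLA fits in this tutorial
fits <- data.frame(
  model = c("simple regression", "additive", "multiplicative", "single factor ANOVA"),
  n     = c(16, 100, 100, 50),          # observed (non-missing) responses
  p.eff = c(2.00, 3.001, 3.999, 4.995)  # expected number of effective parameters
)
# Observations per effective parameter
fits$replicates <- with(fits, n / p.eff)
fits
```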

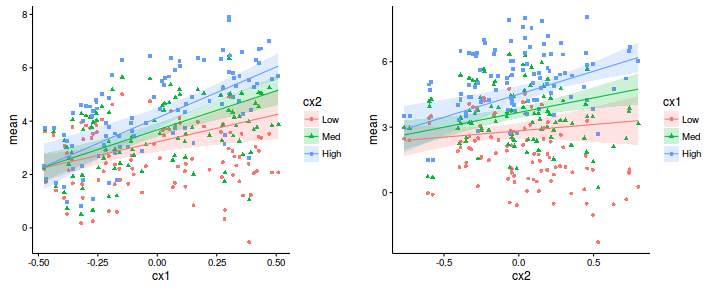

The multiplicative model has evidence of an interaction (the 95% credibility interval for the interaction parameter, 0.2579 to 5.0621, does not overlap zero), and both the DIC (309.98 versus 312.74) and WAIC (309.77 versus 312.77) values are lower for the multiplicative model than for the additive model. Consequently, the multiplicative model would be considered the better of the two models.

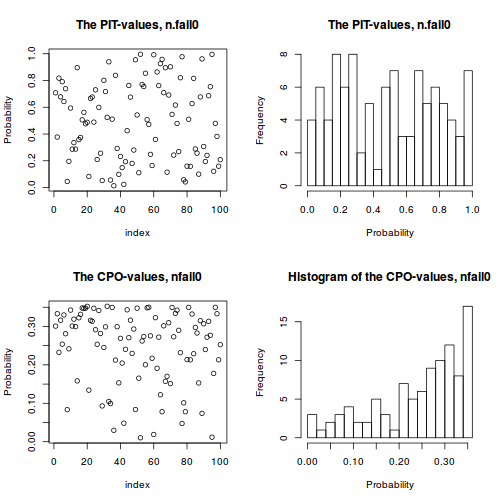

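We will examine CPO and PIT from both models. The -mean(log(CPO)) summary is a log score, so smaller values indicate better leave-one-out predictive performance.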

sum(data.inla.a$cpo$cpo)

[1] 24.45695

-mean(log(data.inla.a$cpo$cpo))

[1] 1.563885

plot(data.inla.a, plot.fixed.effects=FALSE, plot.lincomb=FALSE, plot.random.effects=FALSE, plot.hyperparameters=FALSE, plot.predictor=FALSE, plot.q=FALSE, plot.cpo=TRUE, single=FALSE)

sum(data.inla.m$cpo$cpo)

[1] 24.4262

-mean(log(data.inla.m$cpo$cpo))

[1] 1.54899

plot(data.inla.m, plot.fixed.effects=FALSE, plot.lincomb=FALSE, plot.random.effects=FALSE, plot.hyperparameters=FALSE, plot.predictor=FALSE, plot.q=FALSE, plot.cpo=TRUE, single=FALSE)
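The two CPO-based summaries are easy to compute from any vector of CPO values. This is a sketch with hypothetical toy values chosen so the arithmetic is easy to follow; with a fitted INLA model the vector would be, for example, data.inla.m$cpo$cpo. The helper names log.score and lpml are illustrative, not INLA functions.

```r
# Log score: mean negative log CPO (smaller is better)
log.score <- function(cpo) -mean(log(cpo))
# Log pseudo marginal likelihood: sum of log CPO (larger is better)
lpml <- function(cpo) sum(log(cpo))

# Hypothetical CPO values: log(cpo) is 0, -1 and -2
cpo <- c(1, exp(-1), exp(-2))
log.score(cpo)  # 1
lpml(cpo)       # -3
```

Both summaries rank models identically when the models are fitted to the same observations, because one is a rescaled, sign-flipped version of the other. By the log score reported above, the multiplicative model (1.549) is marginally better than the additive model (1.564).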

Predictions

Let's produce partial (conditional) plots for each of the main effects, at three levels of the other covariate (its mean and one standard deviation either side), since there was evidence of an interaction. I will do this via the imputation method; however, it could equally be performed via the linear combinations method.

#levels of each covariate to condition on: mean - 1 sd, mean and mean + 1 sd
cx1.lev <- mean(data$cx1, na.rm=TRUE) + c(-1,0,1)*sd(data$cx1, na.rm=TRUE)
cx2.lev <- mean(data$cx2, na.rm=TRUE) + c(-1,0,1)*sd(data$cx2, na.rm=TRUE)
#partial effect of cx1
newdata1 <- expand.grid(cx1=seq(min(data$cx1, na.rm=TRUE), max(data$cx1, na.rm=TRUE), len=100), cx2=cx2.lev, y=NA)
#partial residuals of cx1
newdata1r <- expand.grid(cx1=data$cx1, cx2=cx2.lev, y=NA)
#partial effect of cx2
newdata2 <- expand.grid(cx2=seq(min(data$cx2, na.rm=TRUE), max(data$cx2, na.rm=TRUE), len=100), cx1=cx1.lev, y=NA)
#partial residuals of cx2
newdata2r <- expand.grid(cx2=data$cx2, cx1=cx1.lev, y=NA)
newdata <- rbind(data.frame(Pred='cx1', newdata1), data.frame(Pred='cx2', newdata2),
  data.frame(Pred='cx1r', newdata1r), data.frame(Pred='cx2r', newdata2r))
data.pred <- rbind(data.frame(Pred='Orig', subset(data, select=c(cx1,cx2,y))), newdata)
#fit the model
data.inla.m1 <- inla(y~cx1*cx2, data=data.pred,
  control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
  control.predictor=list(compute=TRUE))
#extract the predictor values associated with the appended data
newdata <- cbind(newdata, data.inla.m1$summary.linear.predictor[(nrow(data)+1):nrow(data.pred),])
newdata <- reshape:::rename(newdata, c("0.025quant"="lower", "0.975quant"="upper"))
#ordinary residuals of the observed data (identity link, so fitted value = linear predictor)
res.orig <- data$y - data.inla.m1$summary.linear.predictor$mean[1:nrow(data)]
newdata1 <- droplevels(subset(newdata, Pred=='cx1'))
newdata1$Cov <- factor(newdata1$cx2, labels=c('Low','Med','High'))
newdata2 <- droplevels(subset(newdata, Pred=='cx2'))
newdata2$Cov <- factor(newdata2$cx1, labels=c('Low','Med','High'))
#partial residual = conditional prediction + ordinary residual
newdata1r <- droplevels(subset(newdata, Pred=='cx1r'))
newdata1r$Cov <- factor(newdata1r$cx2, labels=c('Low','Med','High'))
newdata1r$y <- newdata1r$mean + res.orig
newdata2r <- droplevels(subset(newdata, Pred=='cx2r'))
newdata2r$Cov <- factor(newdata2r$cx1, labels=c('Low','Med','High'))
newdata2r$y <- newdata2r$mean + res.orig
g1 <- ggplot(newdata1, aes(y=mean, x=cx1, shape=Cov, fill=Cov, color=Cov)) +
  geom_point(data=newdata1r, aes(y=y)) +
  geom_ribbon(aes(ymin=lower, ymax=upper), alpha=0.2, color=NA) +
  scale_fill_discrete('cx2') + scale_color_discrete('cx2') + scale_shape_discrete('cx2') +
  geom_line() + theme_classic()
g2 <- ggplot(newdata2, aes(y=mean, x=cx2, shape=Cov, fill=Cov, color=Cov)) +
  geom_point(data=newdata2r, aes(y=y)) +
  geom_ribbon(aes(ymin=lower, ymax=upper), alpha=0.2, color=NA) +
  scale_fill_discrete('cx1') + scale_color_discrete('cx1') + scale_shape_discrete('cx1') +
  geom_line() + theme_classic()
library(gridExtra)
grid.arrange(g1, g2, nrow=1)

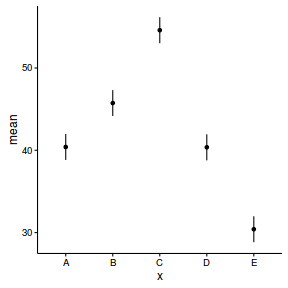
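The partial residual idea behind these plots can be checked with ordinary least squares, whose estimates closely match the INLA posterior means for this model. This sketch swaps in lm() for inla() (an assumption made only so it runs without INLA), re-simulates the data the same way as above, and conditions on cx2 held at its mean; the names dat, cond, pred and pres are illustrative.

```r
# Simulate data in the same way as above
set.seed(3)
n <- 100
temp <- runif(n)
nitro <- runif(n) + 0.8 * temp
res <- rnorm(n, 0, 1)
mm <- model.matrix(~temp * nitro)
y <- as.vector(mm %*% c(2, 0.85, 0.5, 2)) + res
dat <- data.frame(y, cx1 = temp - mean(temp), cx2 = nitro - mean(nitro))

# lm() stands in for inla() here
fit <- lm(y ~ cx1 * cx2, data = dat)

# Condition on cx2 held at its mean (zero, because it is centred)
cond <- data.frame(cx1 = dat$cx1, cx2 = 0)
pred <- predict(fit, newdata = cond)

# Partial residual = conditional prediction + ordinary residual
pres <- pred + residuals(fit)
```

Plotting pres against dat$cx1, with pred drawn as a line, gives the middle ("Med") panel of the cx1 plot.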

Single factor ANOVA

Imagine we had designed an experiment in which we measured the response ($y$) under five different treatments ($x$), each replicated 10 times ($n=10$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. Consequently, I have folded (toggled) this section away.

- the sample size per treatment=10

- the categorical $x$ variable with 5 levels

- the population means associated with the 5 treatment levels are 40, 45, 55, 40, 30 ($\alpha_1=40$, $\alpha_2=45$, $\alpha_3=55$, $\alpha_4=40$, $\alpha_5=30$)

- the data are drawn from normal distributions with a mean of 0 and standard deviation of 3 ($\sigma^2=9$)

set.seed(1)
ngroups <- 5 #number of populations
nsample <- 10 #number of reps in each
pop.means <- c(40, 45, 55, 40, 30) #population mean length
sigma <- 3 #residual standard deviation
n <- ngroups * nsample #total sample size
eps <- rnorm(n, 0, sigma) #residuals
x <- gl(ngroups, nsample, n, lab=LETTERS[1:5]) #factor
means <- rep(pop.means, rep(nsample, ngroups))
X <- model.matrix(~x-1) #create a design matrix
y <- as.numeric(X %*% pop.means+eps)
data <- data.frame(y,x)
head(data) #print out the first six rows of the data set

y x

1 38.12064 A

2 40.55093 A

3 37.49311 A

4 44.78584 A

5 40.98852 A

6 37.53859 A

$$ y_i = \alpha + \beta X_{i} + \varepsilon_i \hspace{4em}\varepsilon_i\sim{}\mathcal{N}(0, \sigma^2) $$

data.lm <- lm(y~x, data=data)
summary(data.lm)

Call:

lm(formula = y ~ x, data = data)

Residuals:

Min 1Q Median 3Q Max

-7.3906 -1.2752 0.3278 1.7931 4.3892

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 40.39661 0.81333 49.668 < 2e-16 ***

xB 5.34993 1.15023 4.651 2.91e-05 ***

xC 14.20237 1.15023 12.347 4.74e-16 ***

xD -0.03442 1.15023 -0.030 0.976

xE -9.99420 1.15023 -8.689 3.50e-11 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.572 on 45 degrees of freedom

Multiple R-squared: 0.9129, Adjusted R-squared: 0.9052

F-statistic: 117.9 on 4 and 45 DF, p-value: < 2.2e-16

modelString="
model {
  #Likelihood
  for (i in 1:n) {
    y[i]~dnorm(mean[i],tau)
    mean[i] <- inprod(beta[],X[i,])
  }
  #Priors
  for (i in 1:ngroups) {
    beta[i] ~ dnorm(0, 1.0E-6)
  }
  sigma ~ dunif(0, 100)
  tau <- 1 / (sigma * sigma)
  #Contrasts
  Group.means[1] <- beta[1]
  for (i in 2:ngroups) {
    Group.means[i] <- beta[i]+beta[1]
  }
  #Pairwise effects
  for (i in 1:ngroups) {
    for (j in 1:ngroups) {
      eff[i,j] <- Group.means[i] - Group.means[j]
    }
  }
  #Specific comparisons
  Comp1 <- Group.means[3] - Group.means[5]
  Comp2 <- (Group.means[1] + Group.means[2])/2 - (Group.means[3] + Group.means[4] + Group.means[5])/3
}
"
X <- model.matrix(~x, data)
data.list <- with(data, list(y=y, X=X, n=nrow(data), ngroups=ncol(X)))
data.jags <- jags(data=data.list, inits=NULL,
  parameters.to.save=c('beta','sigma', 'eff', 'Comp1', 'Comp2'),
  model.file=textConnection(modelString),
  n.chains=3, n.iter=10000, n.burnin=2000, n.thin=100)

Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph Size: 406

Initializing model

print(data.jags)

Inference for Bugs model at "6", fit using jags,

3 chains, each with 10000 iterations (first 2000 discarded), n.thin = 100

n.sims = 240 iterations saved

mu.vect sd.vect 2.5% 25% 50% 75% 97.5% Rhat n.eff

Comp1 24.082 1.190 21.973 23.314 24.100 24.837 26.545 1.006 210

Comp2 1.310 0.798 -0.236 0.771 1.382 1.809 2.765 1.001 240

beta[1] 40.440 0.844 38.720 39.847 40.472 40.974 42.135 1.011 140

beta[2] 5.326 1.222 2.887 4.578 5.278 6.102 7.821 1.021 120

beta[3] 14.133 1.224 11.678 13.358 14.106 14.953 16.338 0.999 240

beta[4] -0.124 1.155 -2.139 -0.883 -0.121 0.614 2.279 1.031 120

beta[5] -9.949 1.142 -11.916 -10.737 -9.991 -9.144 -7.772 1.005 240

eff[1,1] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

eff[2,1] 5.326 1.222 2.887 4.578 5.278 6.102 7.821 1.021 120

eff[3,1] 14.133 1.224 11.678 13.358 14.106 14.953 16.338 0.999 240

eff[4,1] -0.124 1.155 -2.139 -0.883 -0.121 0.614 2.279 1.031 120

eff[5,1] -9.949 1.142 -11.916 -10.737 -9.991 -9.144 -7.772 1.005 240

eff[1,2] -5.326 1.222 -7.821 -6.102 -5.278 -4.578 -2.887 1.010 140

eff[2,2] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

eff[3,2] 8.806 1.343 6.244 7.881 8.764 9.745 11.556 1.024 140

eff[4,2] -5.450 1.215 -7.740 -6.381 -5.380 -4.673 -3.025 0.996 240

eff[5,2] -15.276 1.222 -17.554 -15.996 -15.369 -14.446 -12.564 0.996 240

eff[1,3] -14.133 1.224 -16.338 -14.953 -14.106 -13.358 -11.678 0.999 240

eff[2,3] -8.806 1.343 -11.556 -9.745 -8.764 -7.881 -6.244 1.016 140

eff[3,3] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

eff[4,3] -14.257 1.168 -16.241 -15.121 -14.245 -13.474 -11.660 1.018 98

eff[5,3] -24.082 1.190 -26.545 -24.837 -24.100 -23.314 -21.973 1.006 210

eff[1,4] 0.124 1.155 -2.279 -0.614 0.121 0.883 2.139 1.031 120

eff[2,4] 5.450 1.215 3.025 4.673 5.380 6.381 7.740 0.996 240

eff[3,4] 14.257 1.168 11.660 13.474 14.245 15.121 16.241 1.020 94

eff[4,4] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

eff[5,4] -9.825 1.220 -12.114 -10.643 -9.854 -8.901 -7.713 1.001 240

eff[1,5] 9.949 1.142 7.772 9.144 9.991 10.737 11.916 1.005 240

eff[2,5] 15.276 1.222 12.564 14.446 15.369 15.996 17.554 0.996 240

eff[3,5] 24.082 1.190 21.973 23.314 24.100 24.837 26.545 1.006 210

eff[4,5] 9.825 1.220 7.713 8.901 9.854 10.643 12.114 0.999 240

eff[5,5] 0.000 0.000 0.000 0.000 0.000 0.000 0.000 1.000 1

sigma 2.655 0.298 2.153 2.462 2.622 2.823 3.228 0.998 240

deviance 237.918 3.727 232.555 235.288 237.245 239.878 246.813 1.010 170

For each parameter, n.eff is a crude measure of effective sample size,

and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)

pD = 6.9 and DIC = 244.8

DIC is an estimate of expected predictive error (lower deviance is better).

In preparation for fitting this model, we will also consider a number of contrasts (as we did for the Bayesian (JAGS) version of the analysis). Firstly, we will append rows with missing response values in order to generate cell means predictions (for the summary plot), and secondly, we will define linear combinations (contrasts) to provide the pairwise and planned comparisons.

#append one row per group with a missing response (to predict the cell means)
newdata <- data.frame(x=levels(data$x), y=NA)
data.pred <- rbind(data, newdata)
#Define linear combinations (pairwise comparisons)
library(multcomp)
tuk.mat <- contrMat(n=table(newdata$x), type="Tukey")
Xmat <- model.matrix(~x, data=newdata)
pairwise.Xmat <- tuk.mat %*% Xmat
pairwise.Xmat

(Intercept) xB xC xD xE

B - A 0 1 0 0 0

C - A 0 0 1 0 0

D - A 0 0 0 1 0

E - A 0 0 0 0 1

C - B 0 -1 1 0 0

D - B 0 -1 0 1 0

E - B 0 -1 0 0 1

D - C 0 0 -1 1 0

E - C 0 0 -1 0 1

E - D 0 0 0 -1 1
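multcomp's contrMat() builds the group-level contrast matrix for us. As a cross-check, the same pairwise matrix on the model coefficients can be built with base R only. This sketch assumes five levels named A to E and R's default treatment contrasts; the names lev, Xgrp, pairs and K are illustrative.

```r
lev <- LETTERS[1:5]
# One model-matrix row per group: maps (Intercept, xB..xE) to the group means
Xgrp <- model.matrix(~x, data = data.frame(x = factor(lev)))

# All pairwise comparisons "j - i" for i < j, in the same order as contrMat()
pairs <- combn(length(lev), 2)
K <- matrix(0, nrow = ncol(pairs), ncol = length(lev),
            dimnames = list(paste(lev[pairs[2, ]], "-", lev[pairs[1, ]]), lev))
K[cbind(seq_len(ncol(pairs)), pairs[2, ])] <- 1
K[cbind(seq_len(ncol(pairs)), pairs[1, ])] <- -1

# Contrasts on group means -> contrasts on model coefficients
pairwise.check <- K %*% Xgrp
pairwise.check
```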

#Define linear combinations (planned contrasts)
comp.Xmat <- rbind(Comp1=c(0,0,1,0,-1), Comp2=c(1/2,1/2,-1/3,-1/3,-1/3))
#make these appropriate for the treatment contrasts applied in the model fit
comp.Xmat <- cbind(0, comp.Xmat %*% contr.treatment(5))
rownames(comp.Xmat) <- c("Grp 3 vs 5","Grp 1,2 vs 3,4,5")
Xmat <- rbind(pairwise.Xmat, comp.Xmat)
Xmat

(Intercept) xB xC xD xE

B - A 0 1.0 0.0000000 0.0000000 0.0000000

C - A 0 0.0 1.0000000 0.0000000 0.0000000

D - A 0 0.0 0.0000000 1.0000000 0.0000000

E - A 0 0.0 0.0000000 0.0000000 1.0000000

C - B 0 -1.0 1.0000000 0.0000000 0.0000000

D - B 0 -1.0 0.0000000 1.0000000 0.0000000

E - B 0 -1.0 0.0000000 0.0000000 1.0000000

D - C 0 0.0 -1.0000000 1.0000000 0.0000000

E - C 0 0.0 -1.0000000 0.0000000 1.0000000

E - D 0 0.0 0.0000000 -1.0000000 1.0000000

Grp 3 vs 5 0 0.0 1.0000000 0.0000000 -1.0000000

Grp 1,2 vs 3,4,5 0 0.5 -0.3333333 -0.3333333 -0.3333333
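Why can the intercept column of the planned contrasts simply be set to 0? Under treatment contrasts each group mean is the intercept plus that group's coefficient (plus nothing for group 1), so the intercept weight of a contrast equals the sum of its group weights, which is zero for any proper contrast. This is a small base R sketch of that reasoning; C is an illustrative name for the coefficient-to-group-mean map.

```r
# Planned contrasts as weights on the five group means
comp <- rbind(Comp1 = c(0, 0, 1, 0, -1),
              Comp2 = c(1/2, 1/2, -1/3, -1/3, -1/3))
# Row g of C gives group mean g in terms of (Intercept, xB, xC, xD, xE)
C <- cbind(1, contr.treatment(5))
# Contrast weights on the model coefficients
comp %*% C
```

The first column is (numerically) zero for both contrasts, and the remaining columns reproduce the last two rows of Xmat above.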

lincomb <- inla.make.lincombs(as.data.frame(Xmat))

Now let's fit the model.

#fit the model
data.inla1 <- inla(y~x,
  lincomb=lincomb, #include the linear combinations
  data=data.pred,
  control.compute=list(dic=TRUE, cpo=TRUE, waic=TRUE),
  control.inla=list(lincomb.derived.only=TRUE))
#examine the regular summary - note there are no changes to the first fit
summary(data.inla1)

Call:

c("inla(formula = y ~ x, data = data.pred, lincomb = lincomb, control.compute = list(dic = TRUE, ", " cpo = TRUE, waic = TRUE), control.inla = list(lincomb.derived.only = TRUE))" )

Time used:

Pre-processing Running inla Post-processing Total

0.1363 0.0730 0.0552 0.2646

Fixed effects:

mean sd 0.025quant 0.5quant 0.975quant mode kld

(Intercept) 40.4027 0.8021 38.8235 40.4026 41.9806 40.4025 0

xB 5.3404 1.1346 3.1059 5.3405 7.5715 5.3407 0

xC 14.1871 1.1346 11.9524 14.1873 16.4180 14.1877 0

xD -0.0405 1.1346 -2.2748 -0.0405 2.1908 -0.0403 0

xE -9.9939 1.1346 -12.2279 -9.9939 -7.7624 -9.9939 0

Linear combinations (derived):

ID mean sd 0.025quant 0.5quant 0.975quant mode kld

lc01 1 5.3404 1.1346 3.1059 5.3405 7.5715 5.3407 0

lc02 2 14.1871 1.1346 11.9524 14.1873 16.4180 14.1877 0

lc03 3 -0.0405 1.1346 -2.2748 -0.0405 2.1908 -0.0403 0

lc04 4 -9.9939 1.1346 -12.2279 -9.9939 -7.7624 -9.9939 0

lc05 5 8.8467 1.1355 6.6106 8.8468 11.0799 8.8470 0

lc06 6 -5.3809 1.1355 -7.6166 -5.3810 -3.1474 -5.3810 0

lc07 7 -15.3342 1.1355 -17.5697 -15.3344 -13.1005 -15.3346 0

lc08 8 -14.2276 1.1355 -16.4632 -14.2278 -11.9939 -14.2280 0

lc09 9 -24.1810 1.1355 -26.4163 -24.1812 -21.9471 -24.1816 0

lc10 10 -9.9534 1.1355 -12.1890 -9.9535 -7.7198 -9.9536 0

lc11 11 24.1810 1.1355 21.9444 24.1812 26.4138 24.1816 0

lc12 12 1.2859 0.7326 -0.1565 1.2859 2.7269 1.2859 0

The model has no random effects

Model hyperparameters:

mean sd 0.025quant 0.5quant 0.975quant mode

Precision for the Gaussian observations 0.1577 0.0325 0.1018 0.1551 0.229 0.1506

Expected number of effective parameters(std dev): 4.995(9e-04)

Number of equivalent replicates : 10.01

Deviance Information Criterion (DIC) ...: 242.49

Effective number of parameters .........: 5.651

Watanabe-Akaike information criterion (WAIC) ...: 243.15

Effective number of parameters .................: 5.727

Marginal log-Likelihood: -142.21

CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed

Parameter estimates are entirely consistent with the Frequentist and JAGS outcomes. Let's explore the various contrasts. These are captured in the summary.lincomb.derived element of the INLA list. To make this a little more informative (so we can see what each contrast represents), I will apply the row names from Xmat.

rownames(data.inla1$summary.lincomb.derived) <- rownames(Xmat)
data.inla1$summary.lincomb.derived

ID mean sd 0.025quant 0.5quant 0.975quant mode kld

B - A 1 5.34035821 1.1345555 3.1059307 5.34045527 7.571501 5.34073924 0

C - A 2 14.18709661 1.1345572 11.9524362 14.18727158 16.418038 14.18770537 0

D - A 3 -0.04051494 1.1345548 -2.2748016 -0.04046525 2.190752 -0.04027242 0

E - A 4 -9.99387467 1.1345544 -12.2279022 -9.99391263 -7.762378 -9.99388837 0

C - B 5 8.84673840 1.1354933 6.6105973 8.84678312 11.079883 8.84696648 0

D - B 6 -5.38087314 1.1354931 -7.6166440 -5.38095391 -3.147399 -5.38101187 0

E - B 7 -15.33423287 1.1354941 -17.5697471 -15.33440142 -13.100526 -15.33462821 0

D - C 8 -14.22761155 1.1354940 -16.4631542 -14.22777033 -11.993931 -14.22797835 0

E - C 9 -24.18097128 1.1354959 -26.4162589 -24.18121785 -21.947056 -24.18159469 0

E - D 10 -9.95335973 1.1354934 -12.1890125 -9.95348082 -7.719779 -9.95361634 0

Grp 3 vs 5 11 24.18097128 1.1354959 21.9444267 24.18115124 26.413765 24.18159469 0

Grp 1,2 vs 3,4,5 12 1.28594339 0.7325942 -0.1565176 1.28589156 2.726922 1.28585466 0
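Because each contrast is a linear combination of the coefficients, its posterior mean is the same linear combination of the coefficient posterior means (this shortcut holds for means only; the SDs and quantiles also depend on the posterior covariance of the coefficients). A quick arithmetic check of three rows, using the rounded fixed-effect means printed in the summary above; b and L are illustrative names.

```r
# Posterior means of the fixed effects (rounded, from summary(data.inla1))
b <- c("(Intercept)" = 40.4027, xB = 5.3404, xC = 14.1871, xD = -0.0405, xE = -9.9939)
# Weights on the coefficients, copied from three rows of Xmat
L <- rbind("C - B"            = c(0, -1,   1,    0,    0),
           "Grp 3 vs 5"       = c(0,  0,   1,    0,   -1),
           "Grp 1,2 vs 3,4,5" = c(0, 0.5, -1/3, -1/3, -1/3))
drop(L %*% b)
```

The results agree with the lincomb means above (8.8467, 24.1810 and 1.2859) to the rounding of the inputs.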

Finally, it is time to extract the predicted values from the linear predictor and plot the cell means.

#summary figure
newdata <- cbind(newdata, data.inla1$summary.linear.predictor[(nrow(data)+1):nrow(data.pred),])
newdata <- reshape:::rename(newdata, c("0.025quant"="lower", "0.975quant"="upper"))
ggplot(newdata, aes(y=mean, x=x)) +
  geom_linerange(aes(ymin=lower, ymax=upper)) +
  geom_point() +
  theme_classic()

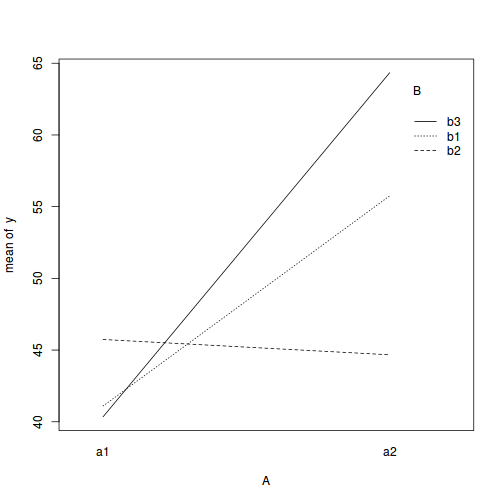

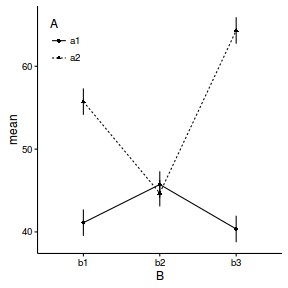

Factorial ANOVA

Imagine we had designed an experiment in which we measured the response ($y$) under a combination of two different potential influences (Factor A: levels $a1$ and $a2$; and Factor B: levels $b1$, $b2$ and $b3$), each combination replicated 10 times ($n=10$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. Consequently, I have folded (toggled) this section away.

- the sample size per treatment=10

- factor A with 2 levels

- factor B with 3 levels

- the 6 effect parameters are 40, 15, 5, 0, -15, 10 ($\mu_{a1b1}=40$, $\mu_{a2b1}=40+15=55$, $\mu_{a1b2}=40+5=45$, $\mu_{a1b3}=40+0=40$, $\mu_{a2b2}=40+15+5-15=45$ and $\mu_{a2b3}=40+15+0+10=65$)

- the data are drawn from normal distributions with a mean of 0 and standard deviation of 3 ($\sigma^2=9$)
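The arithmetic in the list above is just the model matrix multiplied by the vector of effect parameters. A minimal base R sketch, using an illustrative one-row-per-cell grid (cells) in the same order as the model matrix:

```r
# One row per cell, in model-matrix order: a1b1, a2b1, a1b2, a2b2, a1b3, a2b3
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))
XX <- model.matrix(~A * B, data = cells)
# (Intercept), Aa2, Bb2, Bb3, Aa2:Bb2, Aa2:Bb3
eff <- c(40, 15, 5, 0, -15, 10)
cells$mu <- as.vector(XX %*% eff)
cells
```

The resulting cell means are 40, 55, 45, 45, 40 and 65, matching the values listed above.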

set.seed(1)
nA <- 2 #number of levels of A
nB <- 3 #number of levels of B
nsample <- 10 #number of reps in each
A <- gl(nA,1,nA, lab=paste("a",1:nA,sep=""))
B <- gl(nB,1,nB, lab=paste("b",1:nB,sep=""))
data <- expand.grid(A=A,B=B, n=1:nsample)
X <- model.matrix(~A*B, data=data)
eff <- c(40,15,5,0,-15,10)
sigma <- 3 #residual standard deviation
n <- nrow(data)
eps <- rnorm(n, 0, sigma) #residuals
data$y <- as.numeric(X %*% eff+eps)
head(data) #print out the first six rows of the data set

A B n y

1 a1 b1 1 38.12064

2 a2 b1 1 55.55093

3 a1 b2 1 42.49311

4 a2 b2 1 49.78584

5 a1 b3 1 40.98852

6 a2 b3 1 62.53859

with(data,interaction.plot(A,B,y))

## ALTERNATIVELY, we could supply the population means
## and get the effect parameters from these.
## To correspond to the model matrix, enter the population
## means in the order of:
## a1b1, a2b1, a1b2, a2b2, a1b3, a2b3
pop.means <- as.matrix(c(40,55,45,45,40,65))
## Generate a minimum model matrix for the effects
XX <- model.matrix(~A*B, expand.grid(A=factor(1:2),B=factor(1:3)))
## Use the solve() function to solve what are effectively simultaneous equations
(eff <- as.vector(solve(XX,pop.means)))

[1] 40 15 5 0 -15 10

data$y <- as.numeric(X %*% eff+eps)

$$ y_i = \alpha + \beta X_{i} + \varepsilon_i \hspace{4em}\varepsilon_i\sim{}\mathcal{N}(0, \sigma^2) $$

data.lm <- lm(y~A*B, data=data)
summary(data.lm)

Call:

lm(formula = y ~ A * B, data = data)

Residuals:

Min 1Q Median 3Q Max

-7.3944 -1.5753 0.2281 1.5575 5.1909

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 41.0988 0.8218 50.010 < 2e-16 ***

Aa2 14.6515 1.1622 12.606 < 2e-16 ***

Bb2 4.6386 1.1622 3.991 0.0002 ***

Bb3 -0.7522 1.1622 -0.647 0.5202

Aa2:Bb2 -15.7183 1.6436 -9.563 3.24e-13 ***

Aa2:Bb3 9.3352 1.6436 5.680 5.54e-07 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 2.599 on 54 degrees of freedom

Multiple R-squared: 0.9245, Adjusted R-squared: 0.9175

F-statistic: 132.3 on 5 and 54 DF, p-value: < 2.2e-16

modelString="
model {
  #Likelihood
  for (i in 1:n) {
    y[i]~dnorm(mean[i],tau)
    mean[i] <- inprod(beta[],X[i,])
  }
  #Priors and derivatives
  for (i in 1:p) {
    beta[i] ~ dnorm(0, 1.0E-6) #prior
  }
  sigma ~ dunif(0, 100)
  tau <- 1 / (sigma * sigma)
}
"
X <- model.matrix(~A*B, data)
data.list <- with(data, list(y=y, X=X, n=nrow(data), p=ncol(X)))
data.jags <- jags(data=data.list, inits=NULL,
  parameters.to.save=c('beta','sigma'),
  model.file=textConnection(modelString),
  n.chains=3, n.iter=10000, n.burnin=2000, n.thin=100)

Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph Size: 502

Initializing model

```r
print(data.jags)
```

```text
Inference for Bugs model at "8", fit using jags,
 3 chains, each with 10000 iterations (first 2000 discarded), n.thin = 100
 n.sims = 240 iterations saved
         mu.vect sd.vect    2.5%     25%     50%     75%   97.5%  Rhat n.eff
beta[1]   40.999   0.894  39.444  40.348  40.972  41.622  42.780 1.005   240
beta[2]   14.849   1.136  12.625  14.026  14.859  15.554  16.912 0.997   240
beta[3]    4.793   1.204   2.600   4.029   4.806   5.659   6.884 1.012   240
beta[4]   -0.646   1.214  -3.104  -1.375  -0.585   0.182   1.544 0.996   240
beta[5]  -16.021   1.739 -19.540 -16.976 -15.918 -14.945 -12.609 0.996   240
beta[6]    9.216   1.565   6.271   8.162   9.269  10.179  12.699 1.002   240
sigma      2.665   0.281   2.238   2.446   2.650   2.837   3.309 1.000   240
deviance 286.159   3.935 280.494 283.270 285.659 288.017 293.962 1.017   210

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 7.7 and DIC = 293.9
DIC is an estimate of expected predictive error (lower deviance is better).
```
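The `n.sims = 240` reported above follows directly from the run settings: each chain keeps every `n.thin`-th draw after the burn-in, so the total is the number of chains times (iterations minus burn-in) divided by the thinning interval. A minimal base R sketch, where `n_saved()` is a hypothetical helper (not part of R2jags) and the post-burn-in length is assumed to divide evenly by `n.thin`:

```r
# Hypothetical helper: number of posterior draws kept across all chains,
# assuming (n.iter - n.burnin) is an exact multiple of n.thin
n_saved <- function(n.chains, n.iter, n.burnin, n.thin) {
  n.chains * ((n.iter - n.burnin) %/% n.thin)
}

# Settings used for the JAGS linear model above: 3 * (8000 / 100)
n_saved(n.chains = 3, n.iter = 10000, n.burnin = 2000, n.thin = 100)
```

This gives 240, matching the JAGS output. Keeping this number in mind helps when judging whether `n.eff` is close to the number of draws actually stored.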

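```r
# Prediction grid: one row per A x B cell, with the response left missing
newdata <- expand.grid(A = levels(data$A), B = levels(data$B), y = NA)
# Append the prediction rows to the observed data
data.pred <- rbind(subset(data, select = c(A, B, y)), newdata)
```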
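INLA has no separate `predict()` step: rows whose response is `NA` contribute nothing to the likelihood, yet the posterior of their linear predictor is still computed. Appending the prediction grid to the data therefore yields the cell-mean predictions, which is why the rows `(nrow(data)+1):nrow(data.pred)` are extracted later on. The base R sketch below shows only this bookkeeping; the 60-row `data` frame is a hypothetical stand-in for the fabricated data set (10 replicates per A x B cell, placeholder responses), and `pred.rows` is an illustrative name.

```r
set.seed(1)
# Hypothetical stand-in for the fabricated data: 2 levels of A x 3 levels of B,
# 10 replicates per cell, with placeholder responses
data <- data.frame(A = gl(2, 30, labels = c("a1", "a2")),
                   B = gl(3, 10, 60, labels = c("b1", "b2", "b3")),
                   y = rnorm(60, mean = 40, sd = 3))

# One row per A x B cell, response missing (as in the tutorial)
newdata <- expand.grid(A = levels(data$A), B = levels(data$B), y = NA)
data.pred <- rbind(subset(data, select = c(A, B, y)), newdata)

# Indices of the appended prediction rows (61 to 66 here)
pred.rows <- (nrow(data) + 1):nrow(data.pred)
data.pred[pred.rows, ]
```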

In addition to fitting the model in INLA, it is useful to also derive the marginal effect of one factor (A: a2 vs a1) at each level of the other factor (B). In INLA these effects are requested as linear combinations (planned contrasts) of the fixed effects.

```r
# Define linear combinations (planned contrasts)
Xmat <- model.matrix(~A*B, data = newdata)
cbind(newdata, Xmat)
```

```text
   A  B  y (Intercept) Aa2 Bb2 Bb3 Aa2:Bb2 Aa2:Bb3
1 a1 b1 NA           1   0   0   0       0       0
2 a2 b1 NA           1   1   0   0       0       0
3 a1 b2 NA           1   0   1   0       0       0
4 a2 b2 NA           1   1   1   0       1       0
5 a1 b3 NA           1   0   0   1       0       0
6 a2 b3 NA           1   1   0   1       0       1
```

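```r
library(plyr)
# For each level of B, subtract the a1 row from the a2 row (a2 - a1 contrast).
# The factor columns A and B (and the missing y) cannot be subtracted, so they become NA
Xmat <- daply(cbind(newdata, Xmat), ~B, function(x) {
  x[2,] - x[1,]
})
Xmat
```

```text
B     A  B  y (Intercept) Aa2 Bb2 Bb3 Aa2:Bb2 Aa2:Bb3
  b1 NA NA NA           0   1   0   0       0       0
  b2 NA NA NA           0   1   0   0       1       0
  b3 NA NA NA           0   1   0   0       0       1
```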

```r
# Drop the NA columns (A, B and y) so only the model-matrix columns remain
lincomb <- inla.make.lincombs(as.data.frame(Xmat[,-1:-3]))
```
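Each contrast row picks out the a2 minus a1 difference within one level of B, so by linearity the posterior mean of each combination equals the contrast row multiplied by the posterior means of the fixed effects (the posterior standard deviations also depend on the covariances between coefficients, which is one reason to let INLA compute the combinations). A base R sketch that rebuilds the contrast rows with `model.matrix()` and applies them to the fixed-effect posterior means reported in the INLA summary further on; the values are copied by hand and `beta`, `cells` and `C` are illustrative names:

```r
# Posterior means of the fixed effects, copied from the INLA summary:
# (Intercept), Aa2, Bb2, Bb3, Aa2:Bb2, Aa2:Bb3
beta <- c(41.1152, 14.6213, 4.6088, -0.7619, -15.6643, 9.3525)

# The six A x B cells in the same order as newdata (a1/a2 within each level of B)
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))
X <- model.matrix(~ A * B, data = cells)

# Contrast rows: the a2 row minus the a1 row within each level of B
C <- X[cells$A == "a2", ] - X[cells$A == "a1", ]
rownames(C) <- levels(cells$B)

# Effect of A (a2 - a1) at b1, b2 and b3
effects <- drop(C %*% beta)
effects
```

The three values (about 14.62, -1.04 and 23.97) agree with `lc1` to `lc3` in the INLA output.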

Now let's fit the model.

```r
# Fit the model
data.inla <- inla(y ~ A*B, data = data.pred, lincomb = lincomb,
                  control.compute = list(dic = TRUE, cpo = TRUE, waic = TRUE),
                  control.inla = list(lincomb.derived.only = TRUE))
# Examine the regular summary - note there are no changes to the first fit
summary(data.inla)
```

```text
Call:
c("inla(formula = y ~ A * B, data = data.pred, lincomb = lincomb, ", " control.compute = list(dic = TRUE, cpo = TRUE, waic = TRUE), ", " control.inla = list(lincomb.derived.only = TRUE))")

Time used:
 Pre-processing    Running inla Post-processing           Total 
         0.0820          0.0597          0.0309          0.1727 

Fixed effects:
                mean     sd 0.025quant 0.5quant 0.975quant     mode kld
(Intercept)  41.1152 0.8119    39.5179  41.1150    42.7118  41.1147   0
Aa2          14.6213 1.1474    12.3621  14.6216    16.8761  14.6223   0
Bb2           4.6088 1.1479     2.3485   4.6091     6.8648   4.6098   0
Bb3          -0.7619 1.1479    -3.0216  -0.7619     1.4946  -0.7616   0
Aa2:Bb2     -15.6643 1.6225   -18.8558 -15.6650   -12.4729 -15.6661   0
Aa2:Bb3       9.3525 1.6225     6.1598   9.3523    12.5428   9.3519   0

Linear combinations (derived):
    ID     mean     sd 0.025quant 0.5quant 0.975quant     mode kld
lc1  1  14.6213 1.1474    12.3621  14.6216    16.8761  14.6223   0
lc2  2  -1.0430 1.1491    -3.3039  -1.0434     1.2168  -1.0438   0
lc3  3  23.9738 1.1491    21.7117  23.9739    26.2326  23.9742   0

The model has no random effects

Model hyperparameters:
                                          mean    sd 0.025quant 0.5quant 0.975quant   mode
Precision for the Gaussian observations 0.1534 0.029      0.103   0.1513     0.2164 0.1476

Expected number of effective parameters(std dev): 5.991(0.0014)
Number of equivalent replicates : 10.02

Deviance Information Criterion (DIC) ...: 292.00
Effective number of parameters .........: 6.649

Watanabe-Akaike information criterion (WAIC) ...: 292.64
Effective number of parameters .................: 6.634

Marginal log-Likelihood:  -169.80
CPO and PIT are computed

Posterior marginals for linear predictor and fitted values computed
```
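INLA reports the Gaussian observation noise as a precision, $\tau = 1/\sigma^2$, whereas `lm()` and the JAGS model report a standard deviation. Because $\sigma = 1/\sqrt{\tau}$ is a monotone (decreasing) transformation, quantiles carry over directly, with the lower and upper tails swapping places; the posterior mean does not transform this way (INLA's `inla.tmarginal()` can be used to transform the whole marginal instead). A base R sketch using the precision quantiles copied from the summary above (`tau.q` and `sigma.q` are illustrative names):

```r
# Precision quantiles for the Gaussian observations, copied from the INLA summary
tau.q <- c(`0.025quant` = 0.103, `0.5quant` = 0.1513, `0.975quant` = 0.2164)

# sigma = 1/sqrt(tau) is decreasing in tau, so the 2.5% precision quantile
# becomes the 97.5% sigma quantile and vice versa
sigma.q <- rev(1 / sqrt(tau.q))
names(sigma.q) <- c("0.025quant", "0.5quant", "0.975quant")
round(sigma.q, 3)
```

The resulting median of roughly 2.57 (interval about 2.15 to 3.12) is comparable with the residual standard error from `lm()` (2.599) and the JAGS estimate of `sigma`.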

Finally, it is time to extract the predicted values from the linear predictor and plot the cell means.

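```r
# Summary figure: append the posterior summaries of the linear predictor
# for the prediction rows (those with y = NA) to the prediction grid
newdata <- cbind(newdata, data.inla$summary.linear.predictor[(nrow(data)+1):nrow(data.pred),])
# rename() from the reshape package: give the credible interval columns simple names
newdata <- reshape:::rename(newdata, c("0.025quant"="lower", "0.975quant"="upper"))
head(newdata)
```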

```text
              A  B  y     mean        sd    lower 0.5quant    upper     mode          kld
predictor.61 a1 b1 NA 41.11522 0.8118338 39.52008 41.11503 42.71099 41.11468 1.674684e-09
predictor.62 a2 b1 NA 55.73648 0.8123780 54.13916 55.73664 57.33253 55.73696 1.885519e-09
predictor.63 a1 b2 NA 45.72401 0.8126511 44.12616 45.72417 47.32061 45.72447 1.878400e-09
predictor.64 a2 b2 NA 44.68097 0.8129257 43.08342 44.68085 46.27874 44.68063 1.702529e-09
predictor.65 a1 b3 NA 40.35329 0.8126503 38.75616 40.35321 41.95042 40.35307 1.732000e-09
predictor.66 a2 b3 NA 64.32707 0.8129237 62.72894 64.32714 65.92440 64.32729 1.822437e-09
```
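Because the model is Gaussian with an identity link, each predicted cell mean is simply the corresponding model-matrix row multiplied by the fixed effects. As a sanity check, a base R sketch that recomputes the cell means from the fixed-effect posterior means in the INLA summary (copied by hand) and compares them with the `mean` column printed above; `beta`, `cells`, `cell.means` and `inla.means` are illustrative names:

```r
# Fixed-effect posterior means from the INLA summary:
# (Intercept), Aa2, Bb2, Bb3, Aa2:Bb2, Aa2:Bb3
beta <- c(41.1152, 14.6213, 4.6088, -0.7619, -15.6643, 9.3525)

# Cells in the same order as newdata
cells <- expand.grid(A = factor(c("a1", "a2")), B = factor(c("b1", "b2", "b3")))
X <- model.matrix(~ A * B, data = cells)

# Cell means implied by the fixed effects
cell.means <- drop(X %*% beta)
# 'mean' column of the linear predictor summary for rows 61 to 66
inla.means <- c(41.11522, 55.73648, 45.72401, 44.68097, 40.35329, 64.32707)

cbind(cells, cell.means, inla.means)
```

The two columns agree to within rounding of the printed summaries.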

```r
ggplot(newdata, aes(y = mean, x = B, shape = A)) +
  geom_linerange(aes(ymin = lower, ymax = upper)) +
  geom_point() +
  geom_line(aes(x = as.numeric(B), linetype = A)) +
  theme_classic() +
  theme(legend.position = c(0, 1), legend.justification = c(0, 1))
```

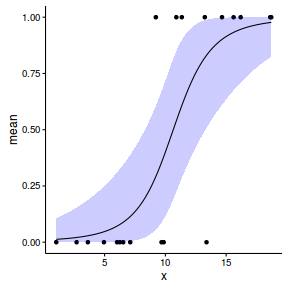

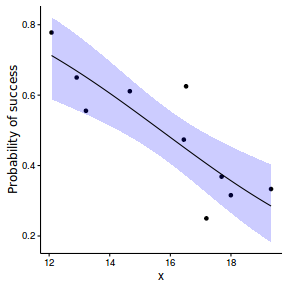

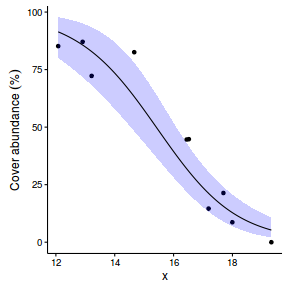

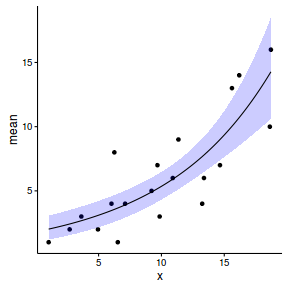

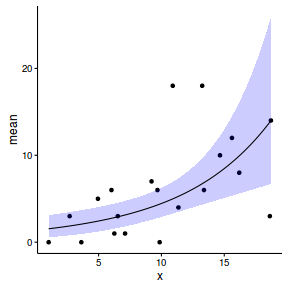

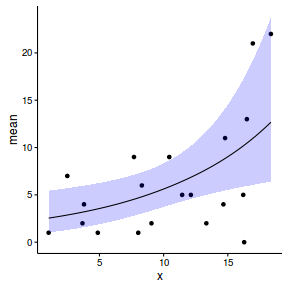

Logistic regression (binary response)

Let's say we wanted to model the presence/absence of an item ($y$) against a continuous predictor ($x$). As this section is mainly about the generation of artificial data (and not specifically about what to do with the data), understanding the actual details is optional and can be safely skipped. Consequently, I have folded (toggled) this section away.

- the sample size = 20

- the continuous $x$ variable is a random uniform spread of measurements between 1 and 20

- the slope, i.e. the rate of change in the log odds per unit change in $x$ = 0.5. The magnitude of the slope indicates how abruptly the log odds (and hence the probability) change. A very abrupt change would occur if there were very little variability in the trend; that is, a large slope is indicative of a threshold effect. A lower slope indicates a gradual change in the odds and thus a less obvious and less precise switch between the likelihood of 1 vs 0.

- the inflection point (the point where the slope of the probability curve is greatest) = 10. The inflection point indicates the value of the predictor ($x$) where the probability of a 1 is 50% (that is, the log odds are 0), switching between 1 being more likely and 0 being more likely. This is also known as the LD50.

- the intercept (which has no real interpretation) is equal to the negative of the slope multiplied by the inflection point

- to generate the values of $y$ expected at each $x$ value, we evaluate the linear predictor. These expected values (on the log odds scale) are then transformed onto the (0,1) probability scale using the inverse logit function $\frac{e^{linear~predictor}}{1+e^{linear~predictor}}$

- finally, we generate $y$ values by using the expected $y$ values as probabilities when drawing random numbers from a binomial distribution with a single trial (i.e. a Bernoulli distribution). This step adds random noise to the expected $y$ values and returns only 1's and 0's.
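The relationships in the list above can be checked numerically. A base R sketch using the same slope and inflection point as the simulation, showing that the intercept is $-0.5 \times 10 = -5$, that the inverse logit (written out, and via R's built-in `plogis()`) gives a probability of exactly 0.5 at the inflection point, and that the steepest slope of the probability curve, reached there, is $\beta_1/4$. The helper `inv.logit()` and the other object names are illustrative:

```r
slope <- 0.5
inflect <- 10
intercept <- -1 * (slope * inflect)

# Inverse logit written out; plogis() is R's built-in equivalent
inv.logit <- function(eta) exp(eta) / (1 + exp(eta))
p.at.inflect <- plogis(intercept + slope * inflect)   # log odds are 0 here

# The inflection point (LD50) can be recovered from the coefficients
ld50 <- -intercept / slope

# Slope of the probability curve: dp/dx = slope * p * (1 - p),
# which is largest at p = 0.5, giving slope / 4
max.dp.dx <- slope * p.at.inflect * (1 - p.at.inflect)

c(intercept = intercept, p = p.at.inflect, LD50 = ld50, max.slope = max.dp.dx)
```

This returns an intercept of -5, a probability of 0.5, an LD50 of 10 and a maximum slope of 0.125.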

```r
set.seed(8)
# The number of samples
n.x <- 20
# Create x values that are uniformly distributed throughout the range of 1 to 20
x <- sort(runif(n = n.x, min = 1, max = 20))
# The slope is the rate of change in log odds for each unit change in x
# the smaller the slope, the slower the change (more variability in data too)
slope = 0.5
# Inflection point is where the slope of the line is greatest
# this is also the LD50 point
inflect <- 10
# Intercept (no interpretation)
intercept <- -1*(slope*inflect)
# The linear predictor
linpred <- intercept + slope*x
# Predicted y values (inverse logit of the linear predictor)
y.pred <- exp(linpred)/(1+exp(linpred))
# Add some noise and make binomial
# (n.y is not needed for the binary response, but drawing it advances the
#  random number stream, so removing it would change the y values below)
n.y <- rbinom(n = n.x, 20, p = 0.9)
y <- rbinom(n = n.x, size = 1, prob = y.pred)
dat <- data.frame(y, x)
```

$$ y_i \sim Binom(1, \pi_i), \hspace{1cm} \log\left(\frac{\pi_i}{1-\pi_i}\right)=\beta_0+\beta_1x_i $$

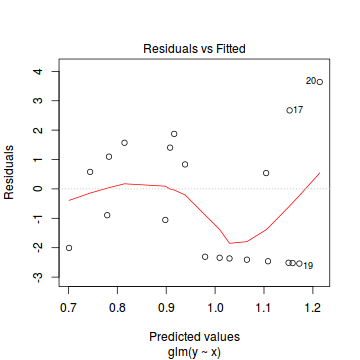

```r
dat.glm <- glm(y ~ x, data = dat, family = "binomial")
summary(dat.glm)
```

```text
Call:
glm(formula = y ~ x, family = "binomial", data = dat)

Deviance Residuals: 
     Min        1Q    Median        3Q       Max  
-1.97157  -0.33665  -0.08191   0.30035   1.59628  

Coefficients:
            Estimate Std. Error z value Pr(>|z|)  
(Intercept)  -6.9899     3.1599  -2.212   0.0270 *
x             0.6559     0.2936   2.234   0.0255 *
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 27.526  on 19  degrees of freedom
Residual deviance: 11.651  on 18  degrees of freedom
AIC: 15.651

Number of Fisher Scoring iterations: 6
```
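On the scale of the data, the slope is most easily read as an odds ratio, $e^{\beta_1}$ (the multiplicative change in the odds per unit increase in $x$), and the estimated LD50 is $-\beta_0/\beta_1$. A base R sketch using the maximum likelihood estimates from the summary above, copied by hand (with the fitted object available, `coef(dat.glm)` would supply them); `b0`, `b1`, `odds.ratio` and `ld50` are illustrative names:

```r
b0 <- -6.9899   # (Intercept)
b1 <- 0.6559    # x

# Multiplicative change in the odds of y = 1 per unit increase in x
odds.ratio <- exp(b1)
# Value of x at which the fitted probability is 0.5
ld50 <- -b0 / b1

round(c(odds.ratio = odds.ratio, LD50 = ld50), 3)
```

This gives an odds ratio of about 1.93 and an estimated LD50 of about 10.66, compared with the inflection point of 10 used to simulate the data.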

```r
modelString = "
model {
  for (i in 1:N) {
    y[i] ~ dbern(p[i])
    # clamp the linear predictor to [-20, 20] so p[i] stays away from exactly 0 or 1
    logit(p[i]) <- max(-20, min(20, beta0 + beta1*x[i]))
  }
  beta0 ~ dnorm(0, 1.0E-06)
  beta1 ~ dnorm(0, 1.0E-06)
}
"
dat.list <- with(dat, list(y = y, x = x, N = nrow(dat)))
dat.jags <- jags(data = dat.list, model.file = textConnection(modelString),
                 parameters.to.save = c('beta0', 'beta1'),
                 n.chains = 3, n.iter = 20000, n.burnin = 10000, n.thin = 100)
```

```text
Compiling model graph
Resolving undeclared variables
Allocating nodes
Graph Size: 147
Initializing model
```

```r
print(dat.jags)
```

```text
Inference for Bugs model at "9", fit using jags,
 3 chains, each with 20000 iterations (first 10000 discarded), n.thin = 100
 n.sims = 300 iterations saved
         mu.vect sd.vect    2.5%     25%    50%    75%  97.5%  Rhat n.eff
beta0     -9.497   3.970 -18.493 -12.068 -8.922 -6.449 -3.740 1.004   300
beta1      0.898   0.370   0.353   0.612  0.844  1.123  1.772 1.003   300
deviance  13.872   2.108  11.683  12.301 13.291 14.811 19.290 1.018   200

For each parameter, n.eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

DIC info (using the rule, pD = var(deviance)/2)
pD = 2.2 and DIC = 16.1
DIC is an estimate of expected predictive error (lower deviance is better).
```
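The JAGS model above clamps the linear predictor to the interval [-20, 20] before applying the logit link. In double precision the inverse logit of a large linear predictor rounds to exactly 1, and an observed 0 at that point then has a likelihood of exactly zero, which can make the sampler fail. A base R sketch of the effect (the cut-off of 20 is simply the value used in the model; `eta` and the other names are illustrative):

```r
eta <- c(-40, -20, 20, 40)   # linear predictor values
p <- plogis(eta)             # inverse logit

# Likelihood of observing y = 0 at each value of eta
lik.y0 <- dbinom(0, size = 1, prob = p)

# Clamping as in the JAGS model keeps p strictly inside (0, 1)
p.clamped <- plogis(pmax(-20, pmin(20, eta)))
lik.y0.clamped <- dbinom(0, size = 1, prob = p.clamped)

rbind(p, lik.y0, p.clamped, lik.y0.clamped)
```

Without the clamp, `plogis(40)` is exactly 1 and the likelihood of a 0 there is exactly 0; with it, the likelihood is tiny but still positive.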

```r
library(rstan)
modelString = "
// array syntax and '=' assignment follow current Stan (2.26 and later);
// older releases used 'int y[n];' and '<-' instead
data {
  int<lower=1> n;
  int<lower=1> nX;
  array[n] int y;
  matrix[n, nX] X;
}
parameters {
  vector[nX] beta;
}
transformed parameters {
  vector[n] mu;
  mu = X * beta;
}
model {
  beta ~ normal(0, 1000);
  y ~ binomial_logit(1, mu);
}
"
Xmat <- model.matrix(~x, data = dat)
dat.list <- with(dat, list(y = y, X = Xmat, nX = ncol(Xmat), n = nrow(dat)))
dat.rstan <- stan(data = dat.list, model_code = modelString,
                  chains = 3, iter = 1000, warmup = 500, thin = 2,
                  save_dso = TRUE)
```

```text
SAMPLING FOR MODEL '8378cae68bcb88c343bcb3d714aeb387' NOW (CHAIN 1).

Chain 1, Iteration:    1 / 1000 [  0%]  (Warmup)
Chain 1, Iteration:  100 / 1000 [ 10%]  (Warmup)
Chain 1, Iteration:  200 / 1000 [ 20%]  (Warmup)
Chain 1, Iteration:  300 / 1000 [ 30%]  (Warmup)
Chain 1, Iteration:  400 / 1000 [ 40%]  (Warmup)
Chain 1, Iteration:  500 / 1000 [ 50%]  (Warmup)
Chain 1, Iteration:  501 / 1000 [ 50%]  (Sampling)
Chain 1, Iteration:  600 / 1000 [ 60%]  (Sampling)
Chain 1, Iteration:  700 / 1000 [ 70%]  (Sampling)
Chain 1, Iteration:  800 / 1000 [ 80%]  (Sampling)
Chain 1, Iteration:  900 / 1000 [ 90%]  (Sampling)
Chain 1, Iteration: 1000 / 1000 [100%]  (Sampling)
#  Elapsed Time: 0.060304 seconds (Warm-up)
#                0.053233 seconds (Sampling)
#                0.113537 seconds (Total)

SAMPLING FOR MODEL '8378cae68bcb88c343bcb3d714aeb387' NOW (CHAIN 2).

Chain 2, Iteration:    1 / 1000 [  0%]  (Warmup)
Chain 2, Iteration:  100 / 1000 [ 10%]  (Warmup)
Chain 2, Iteration:  200 / 1000 [ 20%]  (Warmup)
Chain 2, Iteration:  300 / 1000 [ 30%]  (Warmup)
Chain 2, Iteration:  400 / 1000 [ 40%]  (Warmup)
Chain 2, Iteration:  500 / 1000 [ 50%]  (Warmup)
Chain 2, Iteration:  501 / 1000 [ 50%]  (Sampling)
Chain 2, Iteration:  600 / 1000 [ 60%]  (Sampling)
Chain 2, Iteration:  700 / 1000 [ 70%]  (Sampling)
Chain 2, Iteration:  800 / 1000 [ 80%]  (Sampling)
Chain 2, Iteration:  900 / 1000 [ 90%]  (Sampling)
Chain 2, Iteration: 1000 / 1000 [100%]  (Sampling)
#  Elapsed Time: 0.078958 seconds (Warm-up)
#                0.046298 seconds (Sampling)
#                0.125256 seconds (Total)

SAMPLING FOR MODEL '8378cae68bcb88c343bcb3d714aeb387' NOW (CHAIN 3).

Chain 3, Iteration:    1 / 1000 [  0%]  (Warmup)
Chain 3, Iteration:  100 / 1000 [ 10%]  (Warmup)
Chain 3, Iteration:  200 / 1000 [ 20%]  (Warmup)
Chain 3, Iteration:  300 / 1000 [ 30%]  (Warmup)
Chain 3, Iteration:  400 / 1000 [ 40%]  (Warmup)
Chain 3, Iteration:  500 / 1000 [ 50%]  (Warmup)
Chain 3, Iteration:  501 / 1000 [ 50%]  (Sampling)
Chain 3, Iteration:  600 / 1000 [ 60%]  (Sampling)
Chain 3, Iteration:  700 / 1000 [ 70%]  (Sampling)
Chain 3, Iteration:  800 / 1000 [ 80%]  (Sampling)
Chain 3, Iteration:  900 / 1000 [ 90%]  (Sampling)
Chain 3, Iteration: 1000 / 1000 [100%]  (Sampling)
#  Elapsed Time: 0.063185 seconds (Warm-up)
#                0.053685 seconds (Sampling)
#                0.11687 seconds (Total)
```

```r
print(dat.rstan, pars = c('beta'))
```

```text
Inference for Stan model: 8378cae68bcb88c343bcb3d714aeb387.
3 chains, each with iter=1000; warmup=500; thin=2; 
post-warmup draws per chain=250, total post-warmup draws=750.

         mean se_mean   sd   2.5%    25%   50%   75% 97.5% n_eff Rhat
beta[1] -9.56    0.28 3.86 -18.39 -11.73 -9.04 -6.74 -3.78   189    1
beta[2]  0.90    0.03 0.36   0.36   0.64  0.85  1.09  1.71   193    1

Samples were drawn using NUTS(diag_e) at Mon Feb 22 12:02:04 2016.
For each parameter, n_eff is a crude measure of effective sample size,
and Rhat is the potential scale reduction factor on split chains (at 
convergence, Rhat=1).
```
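The Stan model states the likelihood as `binomial_logit(1, mu)`: a binomial distribution with a single trial, parameterised directly by the log odds `mu` rather than by a probability (Stan's `bernoulli_logit` is equivalent for 0/1 data). On the logit scale the log-likelihood of one observation is $y\,\mu - \log(1 + e^{\mu})$. A base R sketch of that expression, checked against `dbinom()` on the probability scale; `bern.logit.lpmf()` is an illustrative name and the `mu` values are arbitrary:

```r
# Log-likelihood of a 0/1 response given the linear predictor mu (logit scale):
# y * mu - log(1 + exp(mu)), using log1p() for the log(1 + ...) term
bern.logit.lpmf <- function(y, mu) y * mu - log1p(exp(mu))

y  <- c(0, 1, 1, 0)
mu <- c(-2.5, -0.3, 1.2, 3.0)   # arbitrary linear predictor values

ll.logit <- bern.logit.lpmf(y, mu)
ll.prob  <- dbinom(y, size = 1, prob = plogis(mu), log = TRUE)
cbind(y, mu, ll.logit, ll.prob)
```

Both columns agree, which is why the Stan model never needs to form `p` explicitly.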

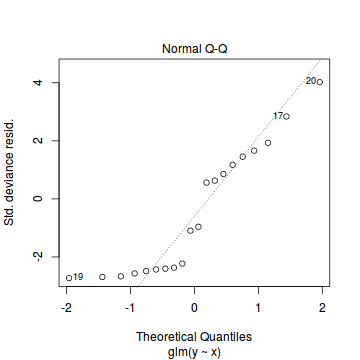

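```r
library(brms)
# bernoulli() is the brms family for 0/1 responses; chains, iter, warmup and thin
# are the argument names in current brms (the n.-prefixed names come from older releases)
dat.brm <- brm(y ~ x, data = dat, family = bernoulli(),
               prior = c(set_prior('normal(0,1000)', class = 'b')),
               chains = 3, iter = 1000, warmup = 500, thin = 2)
```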