Tutorial 8.1 - Independence, Variance, Normality and Linearity

05 Feb 2018

Overview

Up until now (in the proceeding tutorials and workshops), the focus has been on models that adhere to specific assumptions about the underlying populations (and data). Indeed, both before and immediately after fitting these models, I have stressed the importance of evaluating and validating the proposed and fitted models to ensure reliability of the models.

It is now worth us revisiting those fundamental assumptions as well as exploring the options that are available when the populations (data) do not conform. Let's explore a simple linear regression model to see how each of the assumptions relate to the model.

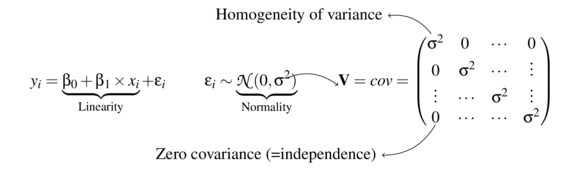

The above simple statistical model models the linear relationship of $y_i$ against $x_i$. The residuals ($\varepsilon$) are assumed to be normally distributed with a mean of zero and a constant (yet unknown) variance ($\sigma$, homogeneity of variance). The residuals (and thus observations) are also assumed to all be independent.

Homogeneity of variance and independence are encapsulated within the single symbol for variance ($\sigma^2$). In assuming equal variances and independence, we are actually making an assumption about the variance-covariance structure of the populations (and thus residuals). Specifically, we assume that all populations are equally varied and thus can be represented well by a single variance term (all diagonal values are the same, $\sigma^2$) and the covariances between each population are zero (off diagonals).

In simple regression, each observation (data point) represents a single observation drawn (sampled) from an entire population of possible observations. The above covariance structure thus assumes that the covariance between each population (observation) is zero - that is, each observation is completely independent of each other observation.

Whilst it is mathematically convenient when data conform to these conditions (normality, homogeneity of variance, independence and linearity), data often violate one or more of these assumptions. In the following tutorials and workshops, I want to discuss and explore the causes and options for dealing with non-compliance to each of these conditions. By gaining a better understanding of how the various model fitting engines perform their task, we are better equipped to accommodate aspects of the data that don't otherwise conform to the simple regression assumptions.

The following table should act as a menu for the treatment of various modelling issues:

| Issue | Frequentist | Bayesian |

|---|---|---|

| Heterogeneity | Tutorial 8.2a | Tutorial 8.2b |

| Temporal autocorrelation (non-independence) | Tutorial 8.3a | Tutorial 8.3b |

| Spatial autocorrelation (non-independence) | Tutorial 8.4a | Tutorial 8.4b |

| Hierarchical structures (non-independence) | Tutorial 9.1 | Tutorial 9.2b |

| Non-normality | Tutorial 10.4 | Tutorial 10.5b |

| Non-linearity | Tutorial 12.1 | Tutorial 12.2b |